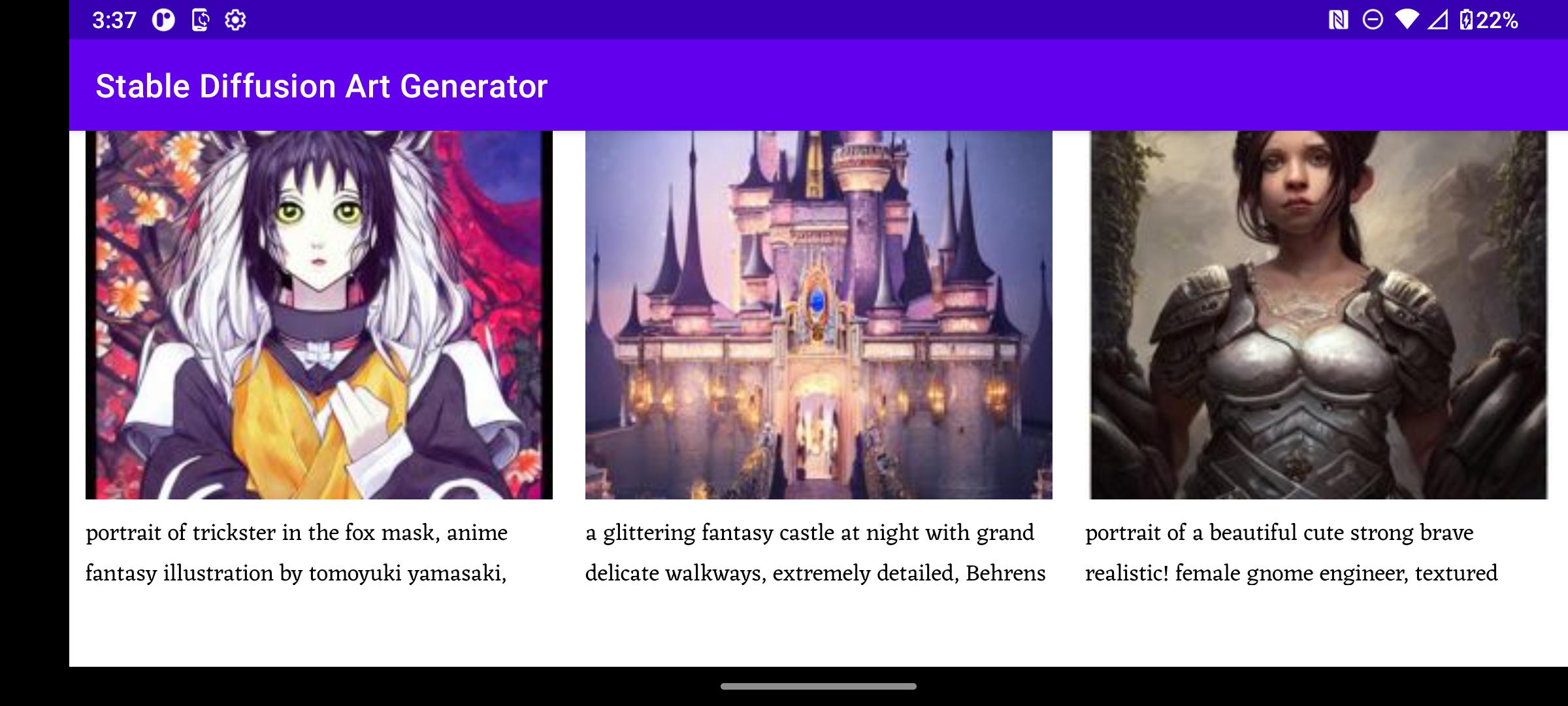

You’ve seen the images. Maybe it’s a hyper-realistic astronaut riding a horse or a cyberpunk version of a 1920s jazz club. It's everywhere. But while everyone is busy fighting over whether Midjourney’s latest update is worth fifteen bucks a month, a quieter, more technical revolution has been happening right on people's hard drives.

It’s called Stable Diffusion.

Unlike the walled gardens of DALL-E or Midjourney, Stable Diffusion is open-source: anyone can download the model and run it on their own machine. That one distinction changes everything. It means you don't need a subscription. You don't even need an internet connection once it's set up. If you have a decent GPU, you own the factory. Honestly, the level of control this gives you is kind of terrifying if you’re used to just typing a prompt and hoping for the best.

What Stable Diffusion Is (and Why It’s Not Just a Prompt Box)

Stable Diffusion was released by Stability AI in August 2022, built on latent diffusion research from the CompVis group at LMU Munich and developed together with Runway. It uses a process called diffusion. Basically, the AI starts with a field of pure digital static (think of the "snow" on an old analog TV) and slowly "denoises" it into an image based on your text.
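
To make the "static" idea concrete, here is a minimal sketch of the forward half of diffusion, the part used during training: a clean signal is mixed with Gaussian noise according to a schedule until almost nothing of the original is left. Generation runs this in reverse, with a trained network predicting the noise to remove at each step; that network is not shown here. The schedule constants are an assumption taken from the "scaled linear" schedule in the SD 1.x configs (beta from 0.00085 to 0.012 over 1,000 steps), and the four-number "image" is a toy stand-in for a real latent.

```python
import math
import random

# Assumption: the "scaled linear" beta schedule from SD 1.x configs
# (betas spaced linearly in sqrt-space from 0.00085 to 0.012, 1,000 steps).
T = 1000
_lo, _hi = math.sqrt(0.00085), math.sqrt(0.012)
BETAS = [(_lo + (_hi - _lo) * t / (T - 1)) ** 2 for t in range(T)]


def alpha_bar(t: int) -> float:
    """Cumulative product of (1 - beta) up to step t: how much signal survives."""
    prod = 1.0
    for beta in BETAS[: t + 1]:
        prod *= 1.0 - beta
    return prod


def add_noise(x0: list[float], t: int, rng: random.Random) -> list[float]:
    """Forward process: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    a = alpha_bar(t)
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    rng = random.Random(0)
    toy_image = [0.8, -0.3, 0.5, 0.1]  # stand-in for real latent values
    for t in (0, 250, 500, 999):
        signal = math.sqrt(alpha_bar(t))
        noisy = [round(v, 2) for v in add_noise(toy_image, t, rng)]
        print(f"step {t:4d}: signal weight {signal:.3f} -> {noisy}")
```

By the last step the signal weight is small and the values are dominated by noise: that is the "TV snow" a sampler starts from before denoising.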

It’s math. Just really complex math.

The math happens in a "latent space," which is a compressed version of image data. This is why you can run it on a consumer-grade laptop with a decent GPU instead of a massive server farm. Most people start with the base models, like SD 1.5 or the larger, more demanding SDXL, but the real magic is in the community. Developers like the people behind the Automatic1111 web UI or the creators of ComfyUI have built interfaces that turn this code into a professional-grade studio.
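
A quick back-of-the-envelope calculation shows why latent space matters. The sketch assumes the autoencoder settings used by SD 1.x and SDXL: each side of the image shrinks by a factor of 8, and each latent position holds 4 channels instead of 3 RGB values. The helper names are hypothetical, for illustration only.

```python
# Assumption: the SD 1.x / SDXL autoencoder downsamples each side by 8
# and stores 4 latent channels per position.
VAE_DOWNSAMPLE = 8
LATENT_CHANNELS = 4


def latent_shape(width: int, height: int) -> tuple[int, int, int]:
    """Return (channels, height, width) of the latent for a given image size."""
    if width % VAE_DOWNSAMPLE or height % VAE_DOWNSAMPLE:
        raise ValueError("image sides must be multiples of 8")
    return (LATENT_CHANNELS, height // VAE_DOWNSAMPLE, width // VAE_DOWNSAMPLE)


def compression_ratio(width: int, height: int) -> float:
    """How many RGB pixel values map onto each latent value."""
    channels, h, w = latent_shape(width, height)
    return (width * height * 3) / (channels * h * w)


if __name__ == "__main__":
    for size in (512, 1024):
        print(size, latent_shape(size, size), compression_ratio(size, size))
```

For a 512x512 image that works out to a 4x64x64 latent: 48 times fewer values for the denoiser to process at every step.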

The Myth of the "Magic Prompt"

People think the secret to great Stable Diffusion AI art is a 500-word prompt filled with words like "masterpiece" and "4k."

It isn't.

In reality, the community has moved way past simple prompting. We’re using ControlNet now. ControlNet is an add-on neural network that steers the model with an extra guide image, so you can control the exact pose of a character or the depth of a scene. Want a character to point at a specific object? You don't pray to the prompt gods; you feed the AI a "depth map" or a "Canny edge" sketch. It follows your lines closely.
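
An edge-conditioned ControlNet expects a line drawing: white edges on a black background. Real workflows usually produce this with OpenCV's `cv2.Canny`. The sketch below swaps in a plain Sobel gradient threshold instead (no blur, no non-maximum suppression, no hysteresis, so it is not Canny) just to show what such a conditioning image contains. The function name and the toy image are hypothetical.

```python
import math


def sobel_edge_map(gray: list[list[float]], threshold: float) -> list[list[int]]:
    """Mark pixels whose Sobel gradient magnitude exceeds threshold.

    Hypothetical, simplified stand-in for Canny. Output uses 255 (white)
    for edges and 0 (black) elsewhere; border pixels are left at 0.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal gradient: right column minus left column.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]) - (
                gray[y - 1][x - 1] + 2 * gray[y][x - 1] + gray[y + 1][x - 1]
            )
            # Vertical gradient: bottom row minus top row.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]) - (
                gray[y - 1][x - 1] + 2 * gray[y - 1][x] + gray[y - 1][x + 1]
            )
            if math.hypot(gx, gy) > threshold:
                edges[y][x] = 255
    return edges


if __name__ == "__main__":
    # Toy 5x6 "photo": dark left half, bright right half.
    img = [[0.0] * 3 + [1.0] * 3 for _ in range(5)]
    for row in sobel_edge_map(img, threshold=1.0):
        print(" ".join("#" if v else "." for v in row))
```

The marked columns trace the boundary between the dark and bright halves; in a real workflow, that line drawing (not the photo itself) is what steers the composition.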

Then there’s LoRA (Low-Rank Adaptation). Think of a LoRA as a "mini-plugin" for your AI. If the base model doesn't know what a specific 90s anime style looks like, you download a 100MB LoRA file, and suddenly, it's an expert. It's granular. It's specific. It’s why people are using this for actual architectural visualization and storyboard work rather than just making weird memes for Reddit.
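
The "low-rank" part of LoRA is also why the files are small. Instead of storing a whole new weight matrix W, a LoRA stores two thin matrices B and A and adds their product, scaled by alpha/r, on top of the frozen original. The sketch follows the formulation from the LoRA paper (W' = W + (alpha / r) * B A); the 768x768 layer and rank 8 are hypothetical example sizes, not values read from any particular model file.

```python
# Sketch of the LoRA update rule; helper names are hypothetical.
Matrix = list[list[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain matrix product of x (n x k) and y (k x m)."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def merge_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * (B @ A); the input W is not modified."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Parameters in a full weight update vs. a rank-r LoRA update."""
    return d_out * d_in, rank * (d_out + d_in)


if __name__ == "__main__":
    full, lora = param_counts(768, 768, rank=8)  # hypothetical layer size
    print(f"full update: {full:,} params, LoRA: {lora:,} params ({full / lora:.0f}x smaller)")
    w = [[1.0, 0.0], [0.0, 1.0]]
    b = [[1.0], [2.0]]  # d_out x r, with r = 1
    a = [[3.0, 4.0]]    # r x d_in
    print(merge_lora(w, b, a, alpha=1.0, rank=1))
```

Because only B and A are stored per adapted layer, a LoRA file holds a small fraction of the parameters a full fine-tuned checkpoint would need.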


The Problem with "Good Enough"

A lot of the art you see online looks... well, like AI. It has that weird, waxy skin texture. It has six fingers. Sometimes the eyes are looking in two different directions.

This is where the divide happens between casual users and the pros. To get high-quality Stable Diffusion AI art, you have to use "Inpainting." This is the process of selecting a small part of an image (say, a mangled hand) and telling the AI to redraw just that one section. You might do this fifty times for a single portrait. It’s tedious. It’s actual digital labor. But it’s how you get results that genuinely fool the eye.
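
Conceptually, the mask decides which pixels the AI is allowed to touch. The sketch below shows only the final compositing idea on plain grayscale grids: where the mask is 1 the new content is used, where it is 0 the original survives, and values in between (a feathered mask edge) blend the two. Dedicated inpainting models also feed the mask and the masked image into the network itself; that part is not modeled here, and the function name is hypothetical.

```python
# Hypothetical helper illustrating mask compositing only.
Grid = list[list[float]]


def composite_inpaint(original: Grid, generated: Grid, mask: Grid) -> Grid:
    """Blend per pixel: mask 1.0 = take generated, 0.0 = keep original."""
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


if __name__ == "__main__":
    original = [[0.2, 0.2, 0.2], [0.2, 0.9, 0.2]]   # 0.9 = the "mangled" pixel
    generated = [[0.6, 0.6, 0.6], [0.6, 0.4, 0.6]]  # the AI's new attempt
    mask = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.5]]       # redraw one pixel, feather one
    print(composite_inpaint(original, generated, mask))
```

Everything outside the mask comes back untouched, which is why you can retry one hand fifty times without disturbing the rest of the portrait.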

Ethics, Copyright, and the 2024 Legal Landscape

We have to talk about the elephant in the room. Stable Diffusion was trained on subsets of the LAION-5B dataset. That's billions of image-text pairs scraped from the web, including copyrighted work from living artists.

It’s a mess.

There are ongoing lawsuits, like the one led by artists Sarah Andersen, Kelly McKernan, and Karla Ortiz. They argue that these models are "derivative works." On the flip side, developers argue that the AI is "learning" concepts, much like a human art student looks at a museum painting to understand lighting.

Regardless of where you stand, the industry is shifting. Adobe Firefly and Getty Images have released models trained only on licensed content. Stable Diffusion 3 also made attempts to address these concerns by changing how the model handles certain prompts. But let's be real: the open-source genie is out of the bottle. You can't un-release a model that's already on a million hard drives.

Hardware: What You Actually Need

Don't listen to the people who say you can run this on a toaster. Technically, you can run it on a CPU, but you'll be waiting twenty minutes for one image of a cat.

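- GPU: You want an NVIDIA card. Most Stable Diffusion tools are built on PyTorch and tuned first for CUDA, NVIDIA's proprietary GPU platform. AMD cards and Apple Silicon Macs can work too, but expect more setup and patchier support.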

- VRAM: This is the most important stat. 8GB of VRAM is the bare minimum for a decent experience. If you want to use the high-end SDXL models or train your own LoRAs, you really want 12GB or 16GB.

- RAM: 16GB of system memory is fine, but 32GB makes everything smoother when you're multitasking.

- Storage: The models are huge. A single "checkpoint" (the brain of the AI) can be 2GB to 7GB. You’ll end up with a folder full of them before you know it.
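
A rough way to see why VRAM is the stat that matters: model weights alone take about (parameter count) x (bytes per parameter). The sketch assumes the approximate published UNet sizes (about 860 million parameters for SD 1.5, about 2.6 billion for SDXL) and ignores the text encoders, the VAE, and the working memory used during generation, so real usage is noticeably higher.

```python
# Assumption: approximate, rounded published UNet parameter counts.
UNET_PARAMS = {"SD 1.5": 860e6, "SDXL": 2.6e9}
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_gb(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for model, params in UNET_PARAMS.items():
        for precision in ("fp16", "fp32"):
            gb = weight_gb(params, precision)
            print(f"{model:6s} {precision}: ~{gb:.1f} GB (UNet weights only)")
```

Half precision (fp16) halves the footprint, which is why interfaces typically load models that way on consumer cards; SDXL's larger UNet is a big part of why it wants 12GB or more.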

The Hidden Power of Local Training

The coolest thing about Stable Diffusion isn't generating art—it's training the AI on you.

With a technique called Dreambooth, you can take 20 photos of yourself, or your dog, or your house, and "inject" them into the model. After about thirty minutes of training on a decent GPU, the AI can reliably reproduce your subject. You can then generate images of yourself on Mars, or as a character in a Pixar movie, or wearing clothes you haven't bought yet.
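
Under the hood, the original Dreambooth paper pairs your photos with a prompt containing a rare identifier token plus the class name (something like "a photo of sks dog") and adds a prior-preservation term so the model doesn't forget what ordinary members of that class look like. The sketch shows that loss structure with toy numbers: each term is the usual diffusion objective, the mean squared error between the noise the model predicts and the noise that was actually added. The "sks" token (a common community choice), the prompt template, and the weight value are illustrative assumptions.

```python
# Assumption: "sks" identifier, prompt template, and weights are illustrative.


def mse(pred: list[float], target: list[float]) -> float:
    """Mean squared error between predicted and actual noise."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def training_prompts(identifier: str, class_noun: str) -> tuple[str, str]:
    """Instance prompt (your subject) and class prompt (generic prior)."""
    return f"a photo of {identifier} {class_noun}", f"a photo of a {class_noun}"


def dreambooth_loss(
    instance_pred: list[float],
    instance_noise: list[float],
    prior_pred: list[float],
    prior_noise: list[float],
    prior_weight: float = 1.0,
) -> float:
    """Subject loss plus weighted prior-preservation loss."""
    return mse(instance_pred, instance_noise) + prior_weight * mse(prior_pred, prior_noise)


if __name__ == "__main__":
    print(training_prompts("sks", "dog"))
    # Toy noise predictions; in real training these come from the UNet.
    print(dreambooth_loss([1.0, 1.0], [0.0, 0.0], [0.0, 2.0], [0.0, 0.0], prior_weight=0.5))
```

The prior term is computed on images of generic dogs generated by the original model, which is what keeps "a photo of a dog" from turning into your dog every time.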

This has massive implications for small businesses. Imagine being a small jewelry designer. You train a LoRA on your specific ring designs. Now, you can generate professional-looking lifestyle photos of models wearing your rings in any location on Earth without ever hiring a photographer or flying to a beach.

Why This Isn't Just a Trend

Stable Diffusion AI art is becoming an "under the hood" technology. Through plugins, it’s turning up inside Photoshop, Blender, and even Krita. It’s not just about the website interface anymore. It’s becoming a tool in the belt, like the "Clone Stamp" tool was twenty years ago.


People are scared it will replace artists. And honestly? For some entry-level commercial work, it might. Stock photography is already feeling the hit. But for high-end creative direction, it’s just a force multiplier. It allows a single artist to do the work of a small studio. It’s about speed.

How to Get Started the Right Way

If you’re ready to actually try this, don't go to some shady website that asks for a credit card.

- Download Stability Matrix: It’s a "one-click" installer that handles the Python environment and dependency setup for you. It’s the easiest way to manage different interfaces like Automatic1111 or ComfyUI.

- Visit Civitai: This is the "App Store" of AI models. It’s where people share their custom-trained styles. Just be warned: turn on the "Safe" filters if you’re at work. It gets weird fast.

- Learn the "Negative Prompt": Most people focus on what they want. In Stable Diffusion, telling the AI what you don't want—like "extra fingers, blurry, low quality"—is just as important.

- Try Upscaling: Don't settle for the model's native resolution (512x512 for SD 1.5, 1024x1024 for SDXL). Use "Hires. fix" or "Topaz Gigapixel" to bring those images up to a resolution where they actually look good on a screen.
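
In common implementations (Hugging Face diffusers and the Automatic1111 web UI among them), the negative prompt works through classifier-free guidance. At every step the model predicts the noise twice, once conditioned on your prompt and once on the negative prompt, which takes the slot normally filled by an empty prompt. The sampler then pushes the result away from the negative prediction. The toy vectors below stand in for real noise predictions, and 7.5 is used only as a typical guidance ("CFG") scale.

```python
# Toy vectors stand in for real UNet noise predictions.


def guided_noise(positive: list[float], negative: list[float], cfg_scale: float) -> list[float]:
    """Classifier-free guidance: start at the negative prediction and
    step toward (and past) the positive one by cfg_scale."""
    return [n + cfg_scale * (p - n) for p, n in zip(positive, negative)]


if __name__ == "__main__":
    pos = [0.4, -0.2, 0.1]  # noise predicted for "portrait of a woman"
    neg = [0.1, -0.2, 0.3]  # noise predicted for "extra fingers, blurry"
    print(guided_noise(pos, neg, cfg_scale=1.0))  # the positive prediction, up to rounding
    print(guided_noise(pos, neg, cfg_scale=7.5))  # pushed well away from neg
```

Where the two predictions agree, guidance changes nothing; where they disagree, the difference is amplified, which is why a good negative prompt steers the image rather than merely filtering it.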

The learning curve is steep. You will get errors. You will see a "CUDA out of memory" message that makes you want to throw your PC out the window. But once you get that first perfect generation, you'll realize why people are obsessed. You aren't just clicking a button; you're directing a digital brain.

Start by installing a lightweight interface like Forge. It’s optimized for lower-end cards and includes most of the features you’ll actually use daily. Once you understand how "Denoising Strength" affects an image, you've already moved past 90% of the people using AI. From there, explore the world of "Tiled Diffusion" for creating massive, 8K murals that would have been impossible for a home computer just three years ago.
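
Denoising Strength is easiest to understand as "how much of the schedule to redo." In img2img (which Hires. fix also relies on for its second pass), your starting image is noised partway and only the remaining steps are run. The sketch is modeled on how Hugging Face diffusers' img2img pipeline converts strength into a step count; other interfaces may round differently, and the function name is hypothetical.

```python
# Modeled on diffusers' img2img strength handling; other UIs may differ.


def img2img_steps(num_steps: int, strength: float) -> int:
    """How many denoising steps actually run for a given strength.

    strength 0.0 -> no denoising steps at all
    strength 1.0 -> the full schedule, so little of the original survives
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_steps * strength), num_steps)


if __name__ == "__main__":
    for strength in (0.2, 0.5, 0.75, 1.0):
        run = img2img_steps(30, strength)
        print(f"strength {strength:.2f}: {run} of 30 steps run, first {30 - run} skipped")
```

Roughly speaking, low values keep the composition and mostly refine texture, while high values hand the model more of the schedule and let it repaint freely.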