Markets are messy. Honestly, if you’ve spent more than five minutes looking at a candlestick chart, you know that prices don’t move in clean lines or predictable cycles. They jump. They flatline. They react to a random tweet from a billionaire at 3:00 AM. For decades, the smartest people on Wall Street tried to bottle this chaos using simple linear regressions. It didn’t work very well. Now, everyone is obsessed with machine learning for trading, thinking a neural network will magically turn their $5,000 account into a retirement fund.

It won't. At least, not the way most people use it.

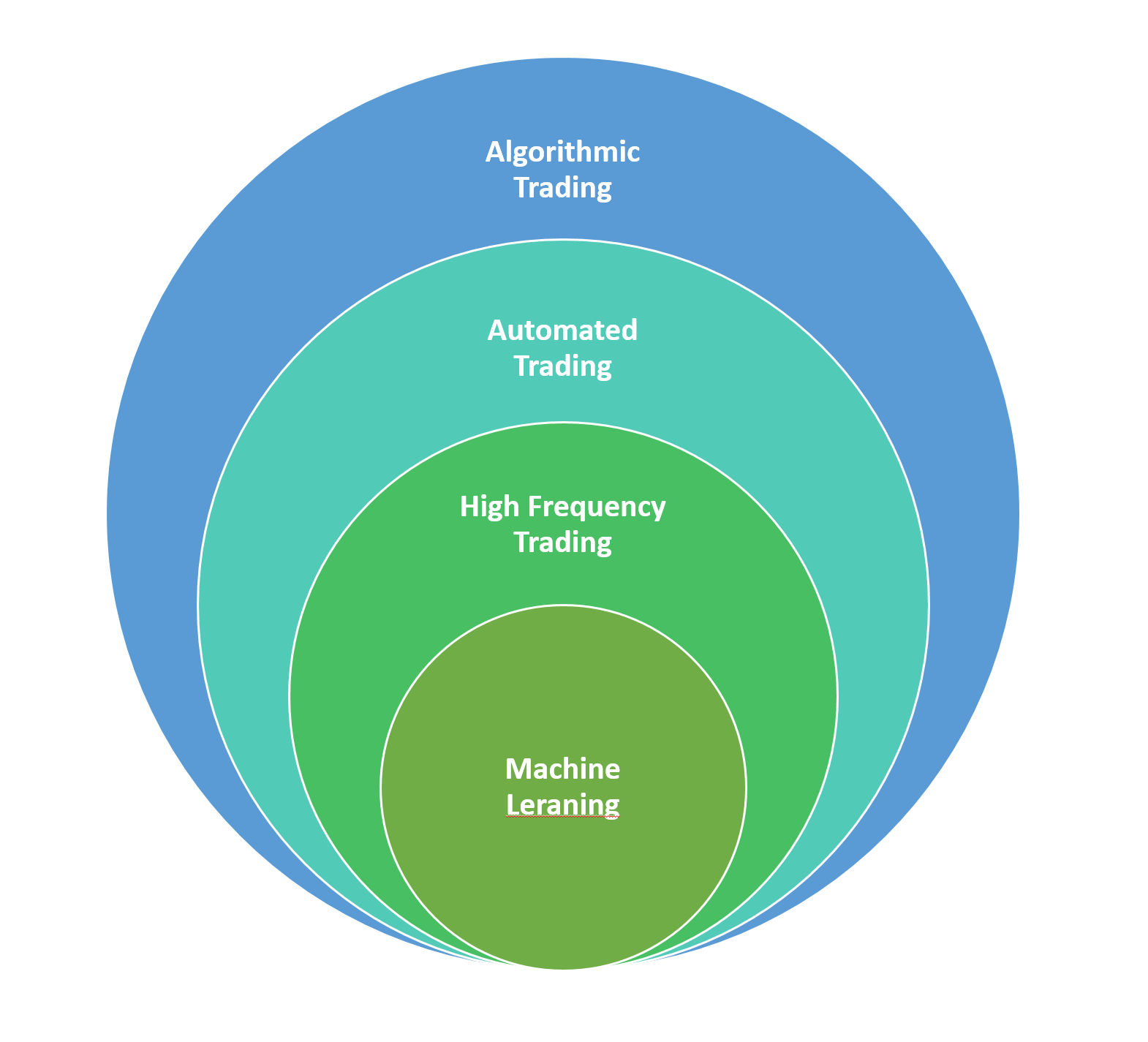

The reality of using AI in the markets is far grittier than the "set it and forget it" marketing fluff you see on YouTube. Real algorithmic trading, the kind done by firms like Renaissance Technologies or Two Sigma, is an endless war against noise. Most retail traders fail because they build models that look like geniuses on past data but crumble the second they trade live. This is called overfitting: the model has memorized the noise in its training data instead of learning a pattern that repeats. It’s the cardinal sin of the industry.

The Data Trap and Why More Isn't Always Better

You can’t just feed a CSV of historical prices into a Random Forest and expect a money printer. Markets are "non-stationary." That’s a fancy way of saying the statistical properties of the data (average return, volatility, correlations) shift over time, so the rules of the game change while you’re playing it. A strategy that worked in the high-interest-rate environment of the early 2000s might be total garbage in the post-2020 era of "infinite" liquidity and meme stocks.

When people start with machine learning for trading, they usually grab OHLC (Open, High, Low, Close) data. That’s a mistake. Price is a derivative of human (and bot) behavior. If you want an edge, you have to look deeper. We’re talking about Limit Order Book (LOB) data, sentiment analysis from earnings calls, or even satellite imagery of retail parking lots. But here’s the kicker: the more features you add, the more noise you introduce.

Marcos López de Prado, a heavy hitter in this field and author of Advances in Financial Machine Learning, often points out that standard k-fold cross-validation breaks down in finance because it assumes observations are independent of each other. In science, if you test a drug on Group A and Group B, they don’t influence each other. In trading, Monday’s data is inherently linked to Tuesday’s, and a label computed over several days overlaps with its neighbors. "Purging" drops training samples whose labels overlap the test period, and "embargoing" drops a further buffer of samples right after it. If you skip these steps, your model is essentially "peeking" at the future. It’s cheating, and the market will punish you for it.
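
To make purging and embargoing concrete, here is a minimal sketch in plain Python. It is not López de Prado's implementation: the function name `purged_kfold_splits`, the `label_horizon` parameter, and the assumption that the label at bar i uses prices from bar i through bar i + `label_horizon` are all illustrative choices.

```python
def purged_kfold_splits(n_samples, n_splits=5, label_horizon=0, embargo=0):
    """Yield (train_idx, test_idx) lists for contiguous, time-ordered folds.

    label_horizon: bars each label looks ahead (assumption: the label at
        bar i uses prices from bar i through bar i + label_horizon).
    embargo: extra bars dropped from training right after the purged zone.
    """
    fold_size = n_samples // n_splits
    for k in range(n_splits):
        start = k * fold_size
        # The last fold absorbs any remainder.
        stop = n_samples if k == n_splits - 1 else start + fold_size
        test_idx = list(range(start, stop))
        # Purge: drop training bars whose label window [i, i + h] overlaps
        # the test label window [start, stop - 1 + h].
        # Embargo: drop a further `embargo` bars after that zone.
        train_idx = [
            i for i in range(n_samples)
            if i < start - label_horizon
            or i >= stop + label_horizon + embargo
        ]
        yield train_idx, test_idx


splits = list(purged_kfold_splits(20, n_splits=4, label_horizon=2, embargo=1))
train, test = splits[1]
print(test)   # [5, 6, 7, 8, 9]
print(train)  # [0, 1, 2, 13, 14, 15, 16, 17, 18, 19]
```

Notice the gap on both sides of the test fold: bars 3 and 4 are purged because their labels reach into the test window, and bars 10 through 12 are dropped by the purge plus the one-bar embargo.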

Feature Engineering is Where the War is Won

Forget the model for a second. Whether you use XGBoost, a Long Short-Term Memory (LSTM) network, or a simple SVM matters way less than what you’re feeding it. Most people use "technical indicators" like the RSI or MACD.

Bad move.

These indicators are mostly just transformations of price that lag behind reality. A machine learning model doesn't need you to tell it the RSI is over 70. It can see that. Instead, experts focus on:

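- Fractional Differentiation: Traditional methods difference the price series (for example, converting prices to returns) to make it stationary and remove trends, but this wipes out most of the "memory" in the data. Fractional differentiation uses a non-integer order of differencing to keep as much memory as possible while still making the data stationary enough for the model.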

- Microstructure Features: Looking at the "tick" level. How many aggressive buyers are lifting the ask versus aggressive sellers hitting the bid?

- Alternative Data: This is the stuff that isn't on a chart. Credit card transactions, shipping manifests, or even weather patterns in the "Corn Belt."
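
The fractional differentiation idea from the list above can be sketched in a few lines. This is a simplified fixed-window version, assuming the standard binomial-expansion weights for the operator (1 - B)^d; the function names and the `n_weights` cutoff are illustrative, and choosing d in practice takes more care than shown here.

```python
def frac_diff_weights(d, n_weights):
    """Weights of (1 - B)^d, where B is the lag operator.

    w_0 = 1 and w_k = -w_{k-1} * (d - k + 1) / k.
    """
    weights = [1.0]
    for k in range(1, n_weights):
        weights.append(-weights[-1] * (d - k + 1) / k)
    return weights


def frac_diff(series, d, n_weights):
    """Fixed-window fractional difference of a list of prices.

    Each output value is a weighted sum of the current price and the
    previous n_weights - 1 prices, so the first n_weights - 1 bars are lost.
    """
    w = frac_diff_weights(d, n_weights)
    return [
        sum(w[k] * series[t - k] for k in range(n_weights))
        for t in range(n_weights - 1, len(series))
    ]


# d = 1 collapses to ordinary first differences (weights 1, -1, 0, ...).
print(frac_diff_weights(1.0, 4))
# d = 0.5 keeps a slowly decaying tail of past prices: the "memory".
print(frac_diff_weights(0.5, 4))
print(frac_diff([1.0, 3.0, 6.0, 10.0], d=1.0, n_weights=2))
```

With d = 1 every weight past the first lag is zero, which is why ordinary differencing forgets everything older than one bar. A fractional d leaves small non-zero weights on older prices.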

Let’s Talk About Quantitative Failure

Most ML models in finance fail. Not because the math is wrong, but because of "backtest overfitting." If you run 10,000 versions of a strategy, one of them will look amazing just by sheer luck. If you then trade that one version, you’re basically betting on a coin flip that happened to land on heads five times in a row.
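
You can watch this happen with a quick simulation. The sketch below generates strategies whose daily returns are pure noise with zero edge, then reports the best annualized Sharpe ratio among them. The 1% daily volatility, the 252-day year, and the default of 1,000 strategies (chosen to keep it fast) are all assumptions for illustration.

```python
import math
import random


def annualized_sharpe(returns, periods_per_year=252):
    """Mean over sample standard deviation, scaled to a yearly figure."""
    n = len(returns)
    mean = sum(returns) / n
    var = sum((r - mean) ** 2 for r in returns) / (n - 1)
    return mean / math.sqrt(var) * math.sqrt(periods_per_year)


def best_of_random_strategies(n_strategies=1000, n_days=252, seed=0):
    """Return (best Sharpe, average Sharpe) across zero-edge strategies."""
    rng = random.Random(seed)
    sharpes = []
    for _ in range(n_strategies):
        # Each "strategy" is one year of random daily returns, mean zero.
        daily = [rng.gauss(0.0, 0.01) for _ in range(n_days)]
        sharpes.append(annualized_sharpe(daily))
    return max(sharpes), sum(sharpes) / len(sharpes)


best, average = best_of_random_strategies()
print(f"best Sharpe: {best:.2f}, average Sharpe: {average:.2f}")
```

The average sits near zero, as it should for strategies with no edge, while the best one posts a Sharpe ratio that would look impressive in a pitch deck. The more variants you try, the better the "winner" looks, which is why the number of trials matters as much as the backtest result itself.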

Jim Simons, the founder of Renaissance Technologies, famously hired physicists and code-breakers rather than MBA students. Why? Because physicists understand that just because a pattern exists in the data doesn't mean it's a "law." It might just be a ghost in the machine. In the world of machine learning for trading, these ghosts are everywhere.

Reinforcement Learning: The New Frontier?

Lately, there’s been a massive shift toward Reinforcement Learning (RL). Unlike supervised learning, where you tell the bot "this is a buy signal," in RL, you give the bot a playground and a goal (maximize Sharpe ratio, minimize drawdown). The bot then makes thousands of mistakes in a simulation until it finds a path to profit.

It sounds cool. It is cool. But it’s incredibly hard to stabilize. An RL agent might find a "glitch" in your simulation, like a way to trade with zero slippage or infinite leverage, and exploit it. When you take that bot to the real NYSE, it dies instantly because the real world has friction. Real-world trading has market "impact." If you buy 10,000 shares of a low-volume stock, you move the price against yourself. If your model doesn’t account for that, it’s just a fantasy.
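
To get a feel for how big impact can be, here is a rough sketch using the square-root impact rule of thumb, a common approximation that is not from the text above. The coefficient of 1.0, the 3% daily volatility, the $20 price, and the 50,000-share average daily volume are made-up inputs, not calibrated values.

```python
import math


def sqrt_impact_fraction(shares, avg_daily_volume, daily_vol, coeff=1.0):
    """Estimated price impact as a fraction of the share price.

    Square-root rule of thumb: impact = coeff * daily_vol * sqrt(shares / ADV).
    `coeff` is an assumed constant; real desks fit it to their own fills.
    """
    participation = shares / avg_daily_volume
    return coeff * daily_vol * math.sqrt(participation)


shares = 10_000
price = 20.0
impact = sqrt_impact_fraction(shares, avg_daily_volume=50_000, daily_vol=0.03)
cost_dollars = impact * price * shares
print(f"impact: {impact:.2%} of price, about ${cost_dollars:,.0f} on the order")
```

Under these assumptions the order gives up a bit more than 1% of the price before commissions. A simulator that fills the same order at the last traded price is handing the agent an edge that does not exist.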

The Problem of Execution

Even if you have a 60% win rate, you can still go broke: if your average loss is bigger than your average win, or costs eat the difference, every trade has a negative expected value. Execution is the silent killer. Machine learning for trading often ignores the "plumbing." How much are you paying in commissions? What is the "latency" between your signal and the execution? In HFT (High-Frequency Trading), a microsecond is the difference between a million-dollar day and a total wash.
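
The arithmetic is worth doing once by hand. This small sketch computes the expected profit per trade; the dollar amounts are invented for illustration, and `cost_per_trade` is a hypothetical lump sum standing in for commissions plus slippage.

```python
def expectancy_per_trade(win_rate, avg_win, avg_loss, cost_per_trade=0.0):
    """Expected profit per trade in the same units as avg_win and avg_loss.

    avg_loss is given as a positive number.
    """
    return win_rate * avg_win - (1.0 - win_rate) * avg_loss - cost_per_trade


# 60% winners at $100, 40% losers at $140, no costs: a small edge.
print(round(expectancy_per_trade(0.60, 100.0, 140.0), 2))       # 4.0
# Add $5 per trade in commissions and slippage: the edge is gone.
print(round(expectancy_per_trade(0.60, 100.0, 140.0, 5.0), 2))  # -1.0
```

Same model, same win rate, and the sign flips once costs are included. That is why a backtest without realistic fees and fills tells you very little.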

For most of us, HFT is out of reach. We can't compete with the microwave towers and FPGA chips of firms like Virtu or Citadel. But ML can still help in the "mid-frequency" space—holding trades for hours or days. Here, the AI isn't trying to outrun the light-speed bots; it's trying to out-think the human psychology that still drives large-scale trends.

Actionable Steps for Building a Model

If you're actually going to try this, stop looking for a "magic" bot. Start by building a robust pipeline.

- Get Clean Data. Garbage in, garbage out. If your data has holes or "bad prints," your model will learn those errors. Use reputable sources like Nasdaq Data Link (formerly Quandl), Polygon.io, or Alpaca.

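- Stationarity is King. You have to make sure your data doesn’t "drift" in a way that breaks the math. Use the Augmented Dickey-Fuller (ADF) test to check if your series is stationary. Its null hypothesis is that the series has a unit root (is non-stationary), so a small p-value is evidence of stationarity.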

- Use Walk-Forward Optimization. Don't just test on the last two years. Train on year one, test on month one of year two. Then slide the window forward. This simulates how the model would actually perform as time moves.

- Focus on Risk, Not Returns. A model that makes 50% but has a 40% drawdown is one bad streak away from ruin. A model that makes 10% with a 2% drawdown is a masterpiece. Consider the Sortino Ratio instead of the Sharpe Ratio: it divides excess return by downside deviation only, so it penalizes "bad" volatility without punishing upside swings.

- Triple-Check for Data Leakage. This is the most common reason people think they've "solved" the market. Ensure that your features at time $T$ do not contain any information from time $T+1$. It sounds obvious, but it happens in subtle ways, like using a moving average that includes the current price's closing value before the candle has actually closed.
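
Two of the steps above, walk-forward windows and the Sortino Ratio, are easy to sketch in plain Python. The function names and the toy numbers are illustrative, and the Sortino version here is one common definition (mean excess return over downside deviation computed across all observations), not the only one in use.

```python
import math


def walk_forward_windows(n_samples, train_size, test_size):
    """Yield (train_range, test_range) pairs that slide forward in time.

    The test block always sits right after its training block, and the
    window advances by test_size bars each step.
    """
    start = 0
    while start + train_size + test_size <= n_samples:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += test_size


def sortino_ratio(returns, target=0.0):
    """Mean excess return divided by downside deviation."""
    excess = [r - target for r in returns]
    # Only returns below the target contribute to the risk term.
    downside_sq = [min(0.0, e) ** 2 for e in excess]
    downside_dev = math.sqrt(sum(downside_sq) / len(returns))
    if downside_dev == 0.0:
        return math.inf  # no downside observed; the ratio is undefined
    return (sum(excess) / len(excess)) / downside_dev


for train, test in walk_forward_windows(n_samples=10, train_size=4, test_size=2):
    print(list(train), list(test))

print(round(sortino_ratio([0.02, -0.01, 0.03, -0.01]), 4))
```

With 10 bars, a 4-bar training window, and a 2-bar test window, the loop produces three train/test pairs, and no test bar ever appears before the training data that precedes it. For the "train on year one, test on month one of year two" setup described above, you would set `train_size` to roughly 252 bars of daily data and `test_size` to roughly 21.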

Machine learning for trading is a tool, not a crystal ball. It’s a way to process more information than a human brain ever could, but it still requires a human to set the guardrails. The market is an adversarial environment. In short-term trading, almost every dollar you make is a dollar someone else lost. They have AI too. To win, you don’t need the most complex model; you need the most disciplined process and a deep respect for how quickly the "patterns" can vanish.