Everyone is looking for the DeepSeek R2 release date, but honestly, the AI world is obsessed with the wrong thing. While social media is buzzing with "R2" rumors, the actual movement coming out of Hangzhou right now is much more nuanced and, frankly, more complicated than a simple version jump.

If you’re waiting for a specific calendar alert to pop up, you might be waiting a while. As of January 15, 2026, DeepSeek has stayed remarkably quiet on a formal "R2" branding. Instead, they’ve been dropping breadcrumbs in the form of technical papers and "V-series" updates that suggest the next massive reasoning leap is hiding in plain sight.

When is DeepSeek R2 actually being released?

The short answer? There is no official date yet. The long answer is that the industry is currently split. Reporting from The Information has recently pointed toward a mid-February 2026 launch window, coinciding with the Lunar New Year.

DeepSeek has a history here. They dropped R1 right before the holiday last year, which basically ruined the vacation of every AI researcher in Silicon Valley. It was a power move. Now, reports suggest a new flagship model is coming in "the coming weeks." But here is the kicker: many are calling it DeepSeek V4, not R2.

Why the name matters (and why it doesn't)

DeepSeek is weird about naming. They usually only jump a version number when the architecture fundamentally shifts. DeepSeek V3 arrived in late December 2024, and R1 followed in January 2025. R1 was the "reasoning" specialist, the one that sits there and "thinks" before it talks.

So, when people ask about the DeepSeek R2 release date, they are really asking: "When is the next model that thinks better than R1 coming out?"

- The V4 Overlap: Many analysts believe the upcoming V4 model is the successor to both the general-purpose V3 and the reasoning-focused R1.

- Infrastructure Hurdles: There’s been a lot of talk about hardware. China's access to top-tier NVIDIA chips is... let's say "restricted." DeepSeek has had to get incredibly creative with how they train these models on domestic hardware like Huawei’s Ascend 910B.

- Internal Leaks: A GitHub repo for something called "DeepSeek-Engram" leaked recently. It hints at a new memory system that could be the backbone of whatever R2 or V4 ends up being.

What is DeepSeek R2 supposed to do anyway?

If the leaks from sites like Medium and Dataconomy are even half-true, we are looking at something pretty wild. The "leaked" specs for R2 suggest a 1.2 trillion parameter model. Now, before you roll your eyes at another massive number, listen to this: it’s a Mixture-of-Experts (MoE) setup.

Basically, it might have 1.2 trillion parameters in total, but it only "wakes up" about 78 billion of them for any given token. That sparsity is how DeepSeek keeps costs so low: each token pays the compute bill only for the experts it actually uses. They aren't just throwing brute-force compute at the problem; they’re being surgical.
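To make the "wakes up" idea concrete, here is a toy sketch of top-k expert routing plus the arithmetic on the rumored numbers. The expert count, the value of k, and the router scores are all made up for illustration; nothing here reflects DeepSeek's actual router, which has not been published for R2 or V4.

```python
import math


def active_fraction(total_params: float, active_params: float) -> float:
    """Share of the model's parameters that run for a single token."""
    return active_params / total_params


def top_k_route(gate_logits: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    if not 1 <= k <= len(gate_logits):
        raise ValueError("k must be between 1 and the number of experts")
    # Rank expert indices by router score, highest first, and keep the top k.
    chosen = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Subtract the peak score before exponentiating for numerical stability.
    peak = max(gate_logits[i] for i in chosen)
    exps = {i: math.exp(gate_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: w / total for i, w in exps.items()}


# Rumored figures from the leaks: 1.2T total parameters, about 78B active.
frac = active_fraction(1.2e12, 78e9)
print(f"{frac:.1%} of parameters active per token")

# Hypothetical router scores for 8 experts; only 2 are used for this token.
weights = top_k_route([0.1, 2.3, -1.0, 0.7, 1.9, -0.4, 0.0, 0.2], k=2)
print(weights)
```

If the leaked figures hold, that works out to roughly 6.5% of the model doing the work on each token, while the other experts sit idle.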

The Reasoning Leap

DeepSeek R1-Zero proved that you could teach a model to "reason" through pure reinforcement learning without needing a million humans to hold its hand. R2 is expected to take that to the next level. We're talking:

- Generative Reward Modeling (GRM): The model essentially grades its own homework during training.

- Multimodal Reasoning: Imagine an AI that doesn't just "see" an image, but "thinks" through the physics of what's happening in it.

- Extreme Coding: Word on the street is that the new model (whether called V4 or R2) is outperforming Claude 3.5 and GPT-4o in repository-level debugging.

The Hardware Elephant in the Room

You can't talk about a DeepSeek release without talking about chips. It's the drama of the year. Back in August 2025, rumors surfaced that the R2 launch was delayed because training on Huawei’s Ascend chips was hitting a brick wall.

They apparently had to fall back on their existing stash of NVIDIA cards for the heavy lifting. This matters to you because it dictates the release speed. If they’ve finally cracked the code on using domestic Chinese hardware efficiently, using something they call "Manifold-Constrained Hyper-Connections" (mHC), then the floodgates are about to open.

Is DeepSeek R2 even real?

Some people in the LocalLLaMA community think R2 was actually "swallowed" by the V3.1 and V3.2 updates we saw late last year. They argue that DeepSeek is moving away from the "R" naming convention and folding all those reasoning capabilities into their main flagship line.

However, a January 2026 paper on algorithmic efficiency explicitly mentions "next-generation R2 models" in the context of infrastructure innovation. So, the name is definitely still alive in their labs.

What you should do while you wait

Instead of refreshing DeepSeek's X (formerly Twitter) feed every five minutes, you can do a few practical things to prepare for the release:

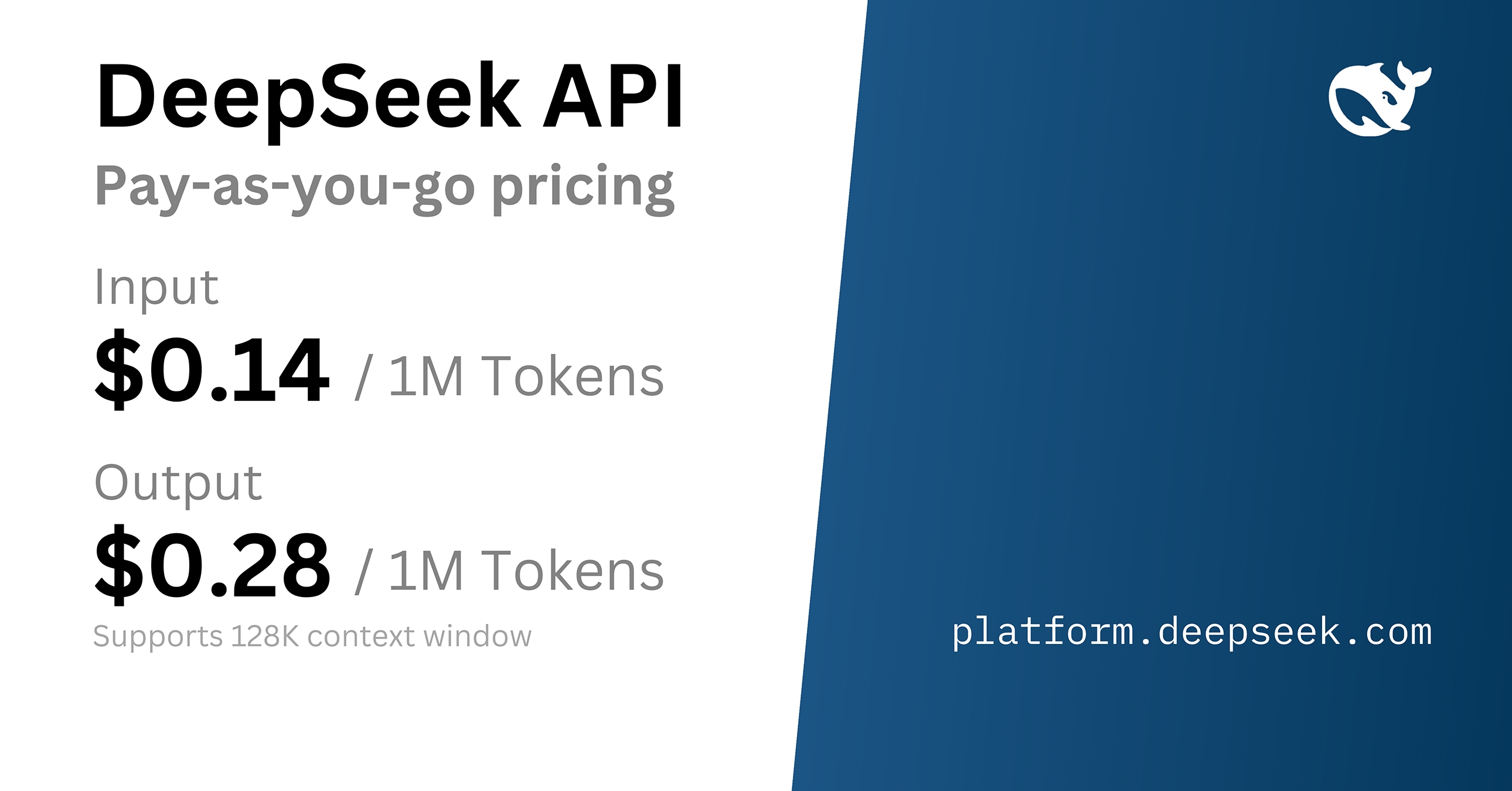

- Audit your API costs: If you're using OpenAI or Anthropic, look at your monthly bill. DeepSeek R1 launched at roughly 90% below comparable reasoning-model pricing, and R2 will likely follow suit. Be ready to pivot your backend if the performance matches up.

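- Watch the Lunar New Year: Keep your eyes on February 2026. If a drop happens, it'll likely be a "weights-available" release, meaning you can run it yourself if you have the hardware. A full trillion-parameter MoE needs hundreds of gigabytes of memory even when quantized, so a beefy setup with dual RTX 5090s or similar is realistic only for distilled or smaller variants.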

- Check the "Think" tags: If you use the current DeepSeek models, start getting used to the <think> and </think> output format. It's a different way of interacting with AI, and R2 will likely double down on this "Chain of Thought" transparency.

- Don't believe every "leak": There's a lot of fake benchmark data floating around. Wait for the official DeepSeek GitHub or their Hugging Face page to verify the numbers.
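
If you want to get comfortable with the think-tag format, a small parser is a good place to start. The sketch below assumes the raw model output contains literal <think> and </think> tags, as the open-weight R1 models emit them; hosted APIs may return the reasoning in a separate field instead, in which case you wouldn't need this. The function name and sample text are made up for illustration.

```python
import re

# Non-greedy match so multiple <think> blocks are captured separately.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer) from output that may contain <think> blocks."""
    reasoning = "\n".join(part.strip() for part in THINK_BLOCK.findall(raw))
    answer = THINK_BLOCK.sub("", raw).strip()
    return reasoning, answer


# Made-up response text, not real model output.
sample = "<think>2 + 2 is 4, then double it.</think>The answer is 8."
reasoning, answer = split_reasoning(sample)
print("reasoning:", reasoning)
print("answer:", answer)
```

Keeping the reasoning separate lets you log it for debugging while showing users only the final answer.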

The reality of DeepSeek is that they don't do hype cycles like Silicon Valley. They just drop a paper, release the weights, and let the hardware markets panic. Whether it's called R2 or V4, something big is coming this quarter, and it’s probably going to make our current models look a bit dim.

Next Steps to Stay Ready:

Follow the official DeepSeek AI account on X (formerly Twitter) and keep an eye on the Hugging Face "Trending" models. Historically, DeepSeek uploads their model weights there hours before making a formal announcement. If you see a massive new MoE model appear with "R2" or "V4" in the title, that’s your signal to start testing.
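
If you'd rather not eyeball the listings yourself, the name check is easy to automate. This is a minimal sketch that only handles the matching step: it assumes you already have a list of model IDs from wherever you pull them, and that a new flagship would carry "R2" or "V4" as its own token in the repo name. Both are guesses, and the last two IDs in the sample list are hypothetical.

```python
import re

# Assumption: the version tag appears as a standalone token, e.g. "DeepSeek-R2".
FLAGSHIP = re.compile(r"(?<![A-Za-z0-9])(R2|V4)(?![A-Za-z0-9])", re.IGNORECASE)


def flag_candidates(model_ids: list[str]) -> list[str]:
    """Return the model IDs whose names contain an R2 or V4 token."""
    return [model_id for model_id in model_ids if FLAGSHIP.search(model_id)]


sample_ids = [
    "deepseek-ai/DeepSeek-R1",
    "deepseek-ai/DeepSeek-V3",
    "deepseek-ai/DeepSeek-R2",       # hypothetical, not a real repo at time of writing
    "deepseek-ai/DeepSeek-V4-Base",  # hypothetical, not a real repo at time of writing
]
print(flag_candidates(sample_ids))
```

A name match is only a signal to go look. Per the advice above, check that the repo sits under the official DeepSeek organization before trusting anything in it.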