Let's be real. If you’ve spent five minutes on LinkedIn lately, you’ve probably seen some guru claiming that AI in UX design is going to replace designers by next Tuesday. It's a loud, exhausting narrative. But if you actually sit down and try to build a complex design system using nothing but a prompt, you’ll realize pretty quickly that the "magic" has some very jagged edges. We aren't looking at the death of the designer; we’re looking at a massive, messy, and honestly kind of exciting shift in how we solve problems for actual human beings.

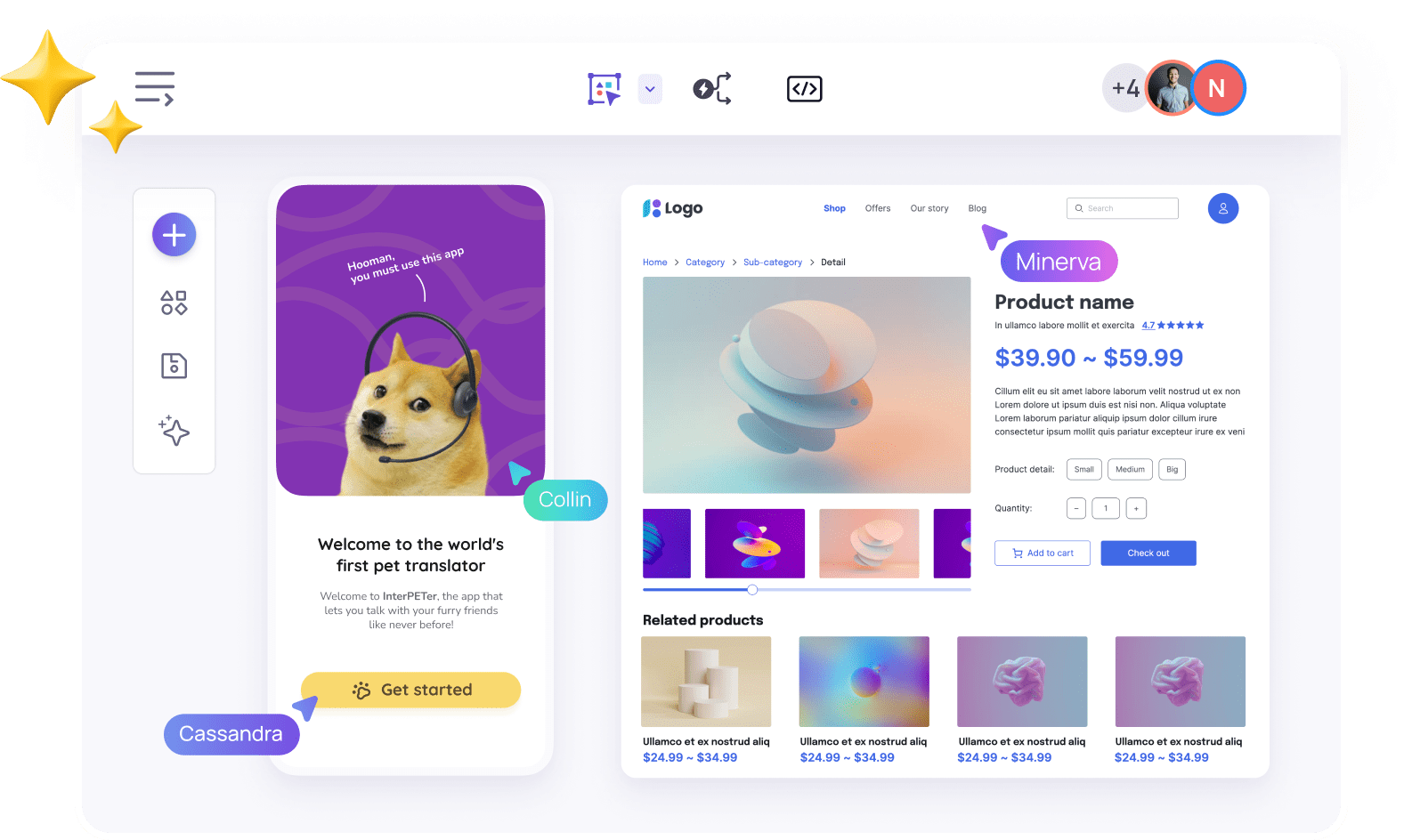

The reality is that tools like Figma’s AI features or Galileo aren't just "faster" versions of what we already do. They change the nature of the work. It’s like moving from being a carpenter to being an architect who also happens to own a fleet of robotic builders.

Why AI in UX Design Isn't Just About Pretty Buttons

Most people think AI in UX design starts and ends with generating UI mockups. That’s a mistake. The real power—the stuff that actually helps users—is happening in the background where nobody sees it. We’re talking about computational design and generative research.

Think about the sheer amount of user feedback a company like Spotify or Airbnb gets every single day. A human researcher can read maybe a hundred tickets a day without losing their mind. An LLM-powered research tool like Dovetail or EnjoyHQ can tag and cluster thousands of hours of interview transcripts in a fraction of the time, pulling out themes that a tired human might miss. This isn't just about efficiency. It's about finding the "why" behind user behavior at a scale that was physically impossible five years ago.

Designers often get stuck in the "pixel pushing" phase. We spend hours nudging buttons three pixels to the left. AI is taking that over. Tools like Uizard or Figma’s Auto Layout (when it works right) are beginning to handle the grunt work. This leaves us more time to think about the actual user journey. Honestly, if you're worried about a machine taking your job because it can draw a card component faster than you, you probably weren't doing much "design" to begin with. You were doing production work.

The Problem With Synthetic Users

There’s this weird trend happening right now where companies are using "synthetic users" to test their designs. Basically, they feed a persona into an AI and ask it, "Hey, would a 45-year-old accountant from Ohio find this menu confusing?"

It sounds efficient. It’s also incredibly dangerous.

Real humans are unpredictable. We have bad days. We get distracted by a crying baby or a slow Wi-Fi connection. A synthetic user is a mathematical average of a persona. It doesn't have "friction" in the same way a person does. If you rely too heavily on AI-generated personas, you end up designing for a ghost. You lose the edge cases. And as any senior UX designer will tell you, the edge cases are where the real usability problems live.


Jakob Nielsen, the pioneer of usability, has noted that while AI can significantly boost the productivity of low-skilled writers and designers, it doesn't necessarily raise the ceiling for experts yet. It raises the floor. It makes "okay" design accessible to everyone, which means the "great" design has to be even more human-centric to stand out.

Predictive UX and the End of the Static Interface

This is where things get spooky. Traditionally, we design static screens. We build a flow, and everyone sees the same thing. But with AI in UX design, we’re moving toward "anticipatory design."

Imagine an app that changes its entire layout based on your current stress level or the time of day. This is already happening in small ways. Netflix doesn't just suggest movies; it changes the artwork of those movies to match what it thinks you’ll click on. If you like romances, you see the lead couple. If you like action, you see the explosion.

- This is dynamic personalization.

- It happens in real-time.

- The designer's role shifts from "drawing the screen" to "setting the rules for the screen."

We are becoming system designers. Instead of saying "The button is blue," we are saying "The button should be the highest-contrast color based on the user's visual accessibility profile and the ambient light of their room." It’s a higher level of thinking. It requires a deep understanding of logic and ethics.
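To make "setting the rules" a bit more concrete, here's a minimal Python sketch of one such rule: pick whichever brand color has the highest contrast against the current background, using the WCAG 2.x relative-luminance and contrast-ratio formulas. The palette, the function names, and the 3:1 default floor (WCAG's minimum for non-text UI components) are my assumptions. The sketch ignores ambient light entirely; a real system might feed the user's accessibility settings in by raising `min_ratio`.

```python
# Sketch of one "rule for the screen": choose the brand color with the highest
# WCAG 2.x contrast ratio against a given background. Palette is hypothetical.

def _linearize(channel_8bit: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a #RRGGBB color, from 0.0 (black) to 1.0 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(a: str, b: str) -> float:
    """WCAG contrast ratio (lighter + 0.05) / (darker + 0.05), from 1:1 to 21:1."""
    lighter, darker = sorted((relative_luminance(a), relative_luminance(b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def pick_button_color(background: str, candidates: list[str], min_ratio: float = 3.0) -> str:
    """Return the highest-contrast candidate, or raise if none clears min_ratio.

    3.0 is WCAG's floor for non-text UI components; an accessibility profile
    could pass a higher value. Ambient light is not modeled here.
    """
    best = max(candidates, key=lambda c: contrast_ratio(c, background))
    if contrast_ratio(best, background) < min_ratio:
        raise ValueError(f"no candidate reaches {min_ratio}:1 against {background}")
    return best


if __name__ == "__main__":
    palette = ["#1E88E5", "#FDD835", "#212121"]  # hypothetical brand colors
    print(pick_button_color("#FFFFFF", palette))  # → #212121
```

The point isn't the math. It's that the designer's decision now lives in the rule (which colors are allowed, what the floor is) rather than in a single hex value.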

The Ethical Mess We’re Stepping Into

We have to talk about bias. AI models are trained on the internet, and the internet is—to put it mildly—a bit of a disaster. If you use AI to generate images of "a professional CEO" for your mockups, you’re probably going to get a lot of white men in suits. If you don't actively fight against that, your design inherits that bias.

There’s also the "Black Box" problem. If an AI decides to hide a certain feature from a user because it thinks they don't need it, and that user actually does need it, who is responsible? The designer? The developer? The person who trained the model?

We’re seeing a rise in AI Ethics specialists within design teams for this exact reason. Companies have published extensive guidance, such as Google’s People + AI Guidebook and Microsoft’s Guidelines for Human-AI Interaction, but the reality on the ground is still the Wild West. You've got to be the one to say, "Hey, maybe we shouldn't let the algorithm decide who gets the 'Apply for Loan' button."
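One way to keep a human in charge of that call is a hard guardrail between the model and the screen. This Python sketch assumes a hypothetical personalization model that proposes features to hide. Anything on a human-owned protected list stays visible no matter what the model says, and every decision goes into an audit trail, so there's at least a record of what the model tried to do. The feature IDs, scores, and data shapes are invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical feature IDs; "apply_for_loan" mirrors the example in the text.
# This list is owned by humans (design + ethics review), not by the model.
PROTECTED_FEATURES = frozenset({"apply_for_loan", "freeze_card", "contact_support"})


@dataclass(frozen=True)
class HideDecision:
    feature_id: str
    model_score: float  # hypothetical model confidence that the user won't need it


@dataclass(frozen=True)
class AuditEntry:
    user_id: str
    feature_id: str
    action: str  # "hidden" or "kept_protected"
    model_score: float


def apply_hide_decisions(
    user_id: str, features: list[str], decisions: list[HideDecision]
) -> tuple[list[str], list[AuditEntry]]:
    """Apply the model's hide suggestions, except for protected features.

    Returns the features to render plus an audit trail of every decision.
    """
    hidden: set[str] = set()
    audit: list[AuditEntry] = []
    for d in decisions:
        if d.feature_id in PROTECTED_FEATURES:
            audit.append(AuditEntry(user_id, d.feature_id, "kept_protected", d.model_score))
        else:
            hidden.add(d.feature_id)
            audit.append(AuditEntry(user_id, d.feature_id, "hidden", d.model_score))
    return [f for f in features if f not in hidden], audit


if __name__ == "__main__":
    visible, audit = apply_hide_decisions(
        "user-123",
        ["dashboard", "apply_for_loan", "promo_banner"],
        [HideDecision("apply_for_loan", 0.91), HideDecision("promo_banner", 0.72)],
    )
    print(visible)  # → ['dashboard', 'apply_for_loan']
    for entry in audit:
        print(entry)
```

The model still gets to tidy up the promo banner. It just never gets a vote on the loan button.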

Practical Implementation: How to Actually Use This Stuff

If you want to stay relevant, you need to stop treating AI like a threat and start treating it like a very fast, very literal intern.


- Automate the Boring Stuff: Use AI for generating copy (lorem ipsum is dead), creating variations of icons, or tidying up layers.

- Synthesize Research: Drop your interview transcripts into a private, secure LLM and ask for "conflicting viewpoints." Don't just ask for a summary. Ask it to find where two users disagreed. That’s where the insight is.

- Prototyping with Data: Instead of using fake names like "John Doe," use AI to generate a CSV of 500 diverse, realistic user profiles to see how your layout holds up under different name lengths and data types.
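Once you have that CSV, the stress test itself can be a few lines of code. This Python sketch flags every cell that's longer than a per-column character budget for a profile card. The sample rows, column names, and limits are hypothetical stand-ins for your AI-generated file, and character count is only a rough proxy for rendered width; a proper check would measure the text in your actual font.

```python
import csv
import io
from collections.abc import Iterator

# Hypothetical rows standing in for the AI-generated CSV of user profiles.
SAMPLE_CSV = """name,city
Li Na,Oslo
Maria Guadalupe Fernández de la Cruz,Ciudad de México
Wolfeschlegelsteinhausenbergerdorff,Frankfurt am Main
"""

# Hypothetical per-column budgets for a profile card, in characters.
CARD_LIMITS = {"name": 24, "city": 16}


def overflowing_cells(csv_text: str, limits: dict[str, int]) -> Iterator[tuple[int, str, str]]:
    """Yield (row_number, column, value) for each cell longer than its limit.

    Row numbers count data rows from 1 (the header is not counted).
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    for row_number, row in enumerate(reader, start=1):
        for column, max_chars in limits.items():
            value = row.get(column) or ""  # missing column counts as empty
            if len(value) > max_chars:
                yield row_number, column, value


if __name__ == "__main__":
    for row_number, column, value in overflowing_cells(SAMPLE_CSV, CARD_LIMITS):
        print(f"row {row_number}: {column} has {len(value)} chars: {value!r}")
```

Every flagged row is a design decision waiting to happen: truncate, wrap, or rethink the card.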

Designer Molly Hellmuth has shown how AI can accelerate component creation within Figma, but she also emphasizes that the logic behind those components, the "why," remains human. You still need to know how a design system scales. You still need to know how a developer is going to inspect and build each component.

The New Skillset: Prompt Engineering for Visuals

It’s not just about typing "make it look like Apple." It’s about understanding the vocabulary of design. To get the most out of AI design tools, you need to speak the language of lighting, composition, and hierarchy.

A prompt like "clean modern app" is useless.

A prompt like "High-fidelity mobile UI, bento box layout, 8px grid system, soft shadows, SF Pro typography, accessibility-compliant contrast ratios" actually gives the machine something to work with.

You aren't losing your design skills; you're translating them into a new medium.

The Future is Collaborative, Not Automated

We are heading toward a world of Generative UI. This is the idea that the interface doesn't even exist until the user requests it. The AI assembles the components on the fly to solve a specific task. If I want to "book a flight to Tokyo," I don't need a homepage, a search page, a results page, and a checkout page. I need a single, fluid experience that lets me do that one thing.

Does this mean UX designers are out of a job? No. It means we have to design the components and the logic that allows the AI to assemble them safely. We become the guardians of the brand and the advocates for the user's mental model.
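So what does "logic that allows the AI to assemble them safely" look like in practice? Here's a minimal Python sketch under some loud assumptions: the model returns its proposed screen as a list of `{"component": ..., "props": ...}` steps, and a designer-owned registry decides what's allowed. Unknown components and missing props get rejected, plus one example brand rule (any flow that takes payment must end with an explicit confirmation). The registry, component names, and rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentSpec:
    name: str
    required_props: frozenset[str]


# Hypothetical registry: the designer-approved building blocks the model may use.
REGISTRY = {
    "FlightSearchForm": ComponentSpec("FlightSearchForm", frozenset({"origin", "destination"})),
    "FareList": ComponentSpec("FareList", frozenset({"results"})),
    "PaymentSheet": ComponentSpec("PaymentSheet", frozenset({"amount", "currency"})),
    "ConfirmDialog": ComponentSpec("ConfirmDialog", frozenset({"message"})),
}


def validate_plan(plan: list[dict]) -> list[str]:
    """Check an AI-proposed component plan; return a list of problems (empty = OK)."""
    errors: list[str] = []
    names: list[str] = []
    for i, step in enumerate(plan):
        name = step.get("component")
        spec = REGISTRY.get(name)
        if spec is None:
            errors.append(f"step {i}: unknown component {name!r}")
            continue
        missing = spec.required_props - set(step.get("props", {}))
        if missing:
            errors.append(f"step {i}: {name} missing props {sorted(missing)}")
        names.append(name)
    # Hypothetical brand rule: never take money without a final confirmation step.
    if "PaymentSheet" in names and names[-1] != "ConfirmDialog":
        errors.append("plan takes payment but does not end with ConfirmDialog")
    return errors


if __name__ == "__main__":
    tokyo_plan = [
        {"component": "FlightSearchForm", "props": {"origin": "SFO", "destination": "HND"}},
        {"component": "FareList", "props": {"results": []}},
        {"component": "PaymentSheet", "props": {"amount": 842, "currency": "USD"}},
        {"component": "ConfirmDialog", "props": {"message": "Book this flight?"}},
    ]
    print(validate_plan(tokyo_plan))  # → []
```

The model is free to compose, but only out of pieces (and under rules) that a designer signed off on.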

The human brain is still the only thing capable of true empathy. A machine can't feel the frustration of a user who just lost their credit card and is trying to freeze it in a panic. It can only follow a pattern. Our job is to make sure that pattern doesn't make a bad situation worse.

Moving Forward with AI in UX Design

Don't wait for your company to hand you an "AI strategy." Start small.

- Download a plugin like Magician or Relume and see where it fails. Understanding the limitations is just as important as knowing the features.

- Take an old project and try to "remix" it using Midjourney or DALL-E 3. See if it catches visual metaphors you missed.

- Read the Microsoft HAX Toolkit. It’s one of the best resources for understanding how to design human-AI interactions that don't feel creepy or broken.

The goal isn't to be an "AI Designer." The goal is to be a better designer who happens to be powered by AI. Keep your eyes on the user, keep your hands on the logic, and let the machine handle the pixels. Honestly, you'll probably find that you enjoy the work a lot more when you aren't spending four hours a day renaming layers.


The transition is happening now. It's messy, it's weird, and it's definitely not as simple as the "one-click" demos make it look. But for those willing to learn the new grammar of design, the potential to create truly personalized, helpful experiences is higher than it’s ever been.

Focus on the strategy. Master the tools. Stay skeptical of the hype, but don't ignore the shift. That is how you win in the age of AI.