You're looking at a screen right now. Underneath that glass, behind the pixels, there is a frantic, invisible conversation happening in a language you probably weren't born speaking. It’s all ones and zeros. But humans? We hate long strings of ones and zeros. We can't read them. If I showed you 1010111100101101, your brain would likely glaze over instantly. That is exactly why we use hexadecimal. It's the shorthand. It’s the "CliffsNotes" for machine code. To bridge that gap, you need a hex to binary table, which is basically the Rosetta Stone for anyone poking around in a computer's brain.

Computers are fundamentally simple creatures. They understand "on" and "off." That’s it. High voltage or low voltage. We represent this as 1 and 0. But as software got more complex, writing out those binary strings became a nightmare for engineers. Imagine trying to debug a memory address that is 64 bits long. You’d lose your mind by the tenth digit. Hexadecimal (base-16) was the solution because each hex digit maps perfectly, and I mean perfectly, to four bits of binary. That 64-bit address shrinks to just 16 hex digits.

The Math Behind the Magic

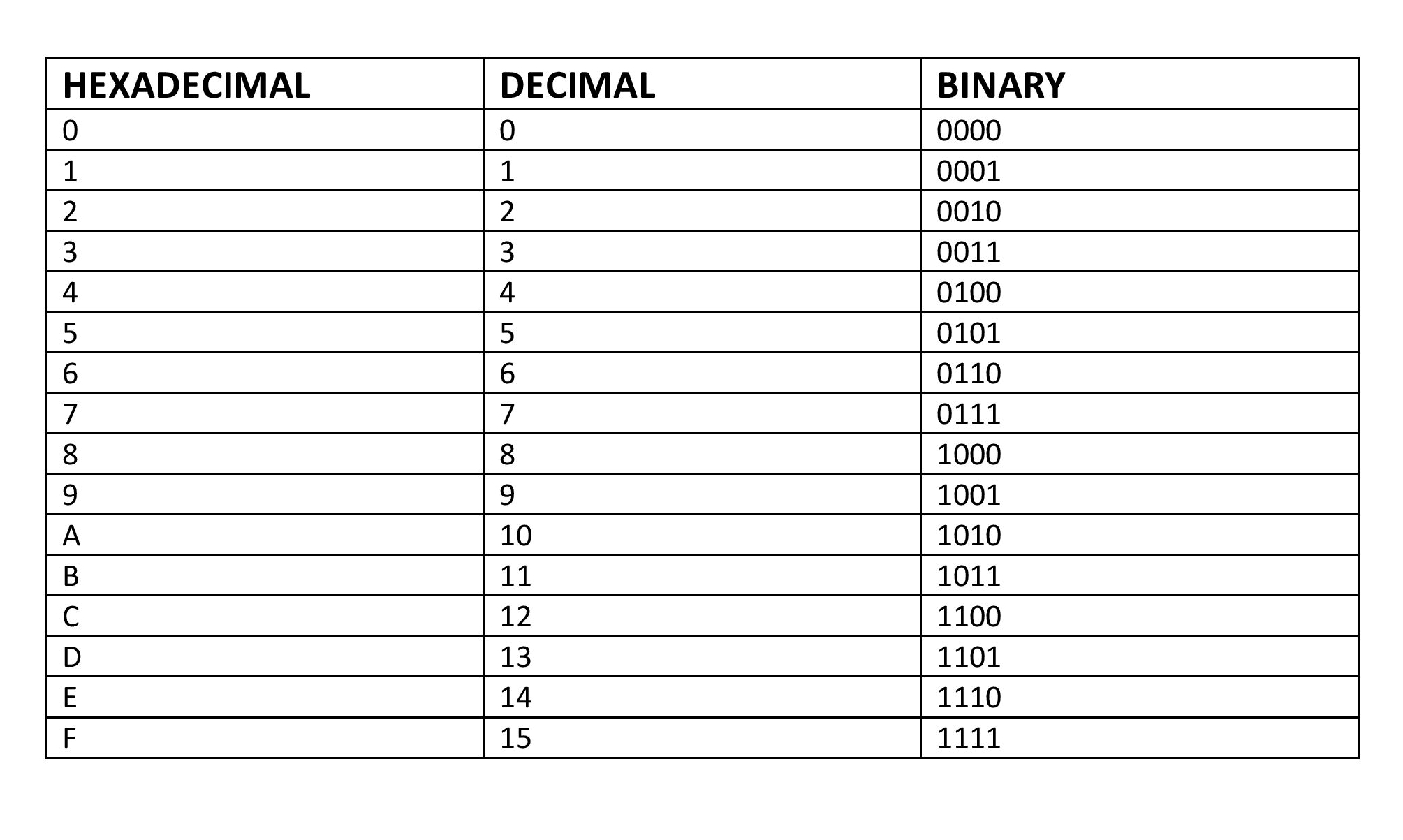

Let’s get real about why this works. Most of us grew up with base-10. We have ten fingers, so we count 0 through 9. When we hit ten, we carry the one. Hexadecimal uses sixteen symbols. It goes 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, and then it gets weird. We use letters: A, B, C, D, E, and F.

Why sixteen? Because $2^4 = 16$. This isn't just a happy coincidence; it’s the bedrock of computing architecture. One hex digit represents exactly four binary digits, also known as a nibble. Half a byte. This clean division is why hex is the king of the dev world.


If you want to understand the hex to binary table, you have to see the alignment.

Zero in hex is 0000 in binary.

One is 0001.

Two is 0010.

Three is 0011.

By the time you get to Nine, you’re at 1001.

Then comes A. In our normal world, A is 10. In binary, that’s 1010.

B is 11, which is 1011.

C is 12, or 1100.

D is 13, appearing as 1101.

E is 14, or 1110.

Finally, F is 15, which is 1111.
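If you'd rather generate the whole table than memorize it from prose, here is a minimal Python sketch. The names `HEX_DIGITS` and `HEX_TO_BIN` are just illustrative choices, not anything standard.

```python
# Build the full hex-to-binary table: one hex digit per 4-bit nibble.
HEX_DIGITS = "0123456789ABCDEF"

# format(value, "04b") renders an integer in binary, zero-padded to 4 digits.
HEX_TO_BIN = {digit: format(value, "04b") for value, digit in enumerate(HEX_DIGITS)}

# Print three columns: hex digit, decimal value, binary nibble.
for digit, bits in HEX_TO_BIN.items():
    print(f"{digit}  {int(digit, 16):>2}  {bits}")
```

Running it prints all sixteen rows, including the ones skipped above (4 through 8).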

It’s a perfect fit: sixteen hex digits, sixteen possible 4-bit patterns, nothing left over. When you see FF in a CSS color code, you’re actually looking at 11111111. That’s 255 in decimal ($15 \times 16 + 15 = 255$), the highest value a single unsigned byte can hold. It’s why white is #FFFFFF: it’s just a way of telling the computer to turn the red, green, and blue sub-pixels up to their maximum value.

Why the Hex to Binary Table is Still Relevant in 2026

You might think we’ve moved past this. We have AI that writes code now, right? True. But the "metal"—the actual hardware—hasn't changed its fundamental logic. Whether you are working on a neural network or a smart toaster, the underlying data is still moved in chunks that are best described by hex.

Low-level debugging still requires this knowledge. If you're using a tool like Wireshark to sniff network packets, you aren't going to see pretty English sentences. You're going to see a wall of hex. If you can't mentally translate 0x41 to 01000001 (which is the letter 'A' in ASCII), you're going to have a hard time understanding why a packet is failing.
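To make that 0x41 example concrete, here is a small sketch using only Python built-ins. The helper name `describe_byte` is made up for illustration, and the printable-character range is the standard ASCII one (32 through 126).

```python
def describe_byte(hex_byte: str) -> str:
    """Show one byte as hex, 8-bit binary, and its ASCII character."""
    value = int(hex_byte, 16)            # int() accepts "41" or "0x41" in base 16
    bits = format(value, "08b")          # zero-padded to a full byte
    # Non-printable bytes are shown as "." the way most hexdump tools do.
    char = chr(value) if 32 <= value < 127 else "."
    return f"{hex_byte} -> {bits} -> {char!r}"

print(describe_byte("0x41"))  # 0x41 -> 01000001 -> 'A'

# A short run of bytes, as you might copy out of a packet capture.
print(bytes.fromhex("48 65 6c 6c 6f").decode("ascii"))  # Hello
```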

Cybersecurity is another big one. Malware analysis often involves looking at "hexdumps" of executable files. Hackers look for specific patterns in the hex to identify where a program stores its secrets. If you’re trying to prevent a buffer overflow attack, you are living and breathing in the world of memory addresses. Those addresses are almost always displayed in hex.


Converting on the Fly Without a Calculator

Honestly, you don't need a printed table if you know the "8-4-2-1" rule. This is the secret handshake of computer science. Each bit in a 4-bit binary nibble has a value. The leftmost bit is worth 8, the next is 4, then 2, then 1.

Want to convert D to binary? You know D is 13. How do you make 13 using 8, 4, 2, and 1? You need an 8, a 4, and a 1 ($8+4+1=13$). So, you put a '1' in those slots and a '0' in the 2s slot. You get 1101. It takes two seconds.

It works the other way, too. See 1010? That’s an 8 and a 2. That equals 10. In hex, 10 is A.
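The 8-4-2-1 rule is easy to spell out in code. This is a sketch of the mental method, not the fastest way to convert in Python (the built-in `format` does that); the function names are hypothetical.

```python
WEIGHTS = (8, 4, 2, 1)            # place values of a nibble, left to right
HEX_DIGITS = "0123456789ABCDEF"

def hex_digit_to_nibble(digit: str) -> str:
    """Convert one hex digit to 4 bits by subtracting 8, 4, 2, 1 in turn."""
    remaining = HEX_DIGITS.index(digit.upper())
    bits = []
    for weight in WEIGHTS:
        if remaining >= weight:   # this slot is "on"
            bits.append("1")
            remaining -= weight
        else:                     # this slot is "off"
            bits.append("0")
    return "".join(bits)

def nibble_to_hex_digit(nibble: str) -> str:
    """Convert 4 bits to one hex digit by adding the weights of the 1s."""
    total = sum(weight for weight, bit in zip(WEIGHTS, nibble) if bit == "1")
    return HEX_DIGITS[total]

print(hex_digit_to_nibble("D"))     # 1101
print(nibble_to_hex_digit("1010"))  # A
```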

The Color of Your Internet

Ever wonder why "Twitter Blue" or "Facebook Blue" has a specific code? Those hex codes are just binary instructions for your monitor's hardware. A color like #3b5998 is actually three separate groups of binary.

- 3b controls the Red.

- 59 controls the Green.

- 98 controls the Blue.

When your browser reads that hex, it parses each pair of digits into an 8-bit value between 0 and 255, and those values ultimately determine how brightly each tiny sub-pixel is driven. If we used decimal, the numbers would be clunky. If we used binary, every color would take 24 digits instead of 6. Hex is the "Goldilocks" zone of data representation: just enough information density without being unreadable.
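Here is a rough sketch of how a six-digit color code breaks apart into its three channels. It only handles the `#rrggbb` form, and `split_hex_color` is an illustrative name, not a browser API.

```python
def split_hex_color(color: str) -> dict:
    """Split '#rrggbb' into (hex pair, decimal value, 8-bit binary) per channel."""
    digits = color.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected a six-digit color like #3b5998")
    channels = {}
    for name, start in (("red", 0), ("green", 2), ("blue", 4)):
        pair = digits[start:start + 2]   # two hex digits = one byte
        value = int(pair, 16)
        channels[name] = (pair, value, format(value, "08b"))
    return channels

for name, (pair, value, bits) in split_hex_color("#3b5998").items():
    print(f"{name:<5} {pair}  {value:>3}  {bits}")
# red   3b   59  00111011
# green 59   89  01011001
# blue  98  152  10011000
```

Blue has the largest value of the three, which is why the color reads as blue.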

Common Mistakes People Make

Most beginners trip up on the letters. They see an E and their brain freezes. Just remember that A is 10 and count up on your fingers if you have to. No shame in it.

Another mistake? Misplacing the zeros. In binary, 0001 is very different from 1000. One is 1, the other is 8. Always keep your nibbles in groups of four. If you have a binary string like 10110, don't just start converting from the left. Pad it with zeros on the left first: 0001 0110. Now you can see it’s actually 16 in hex (0x16), which is 22 in decimal.
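The pad-then-group habit looks like this as a sketch. The function name `binary_to_hex` is hypothetical, and the code assumes the input contains only 0s, 1s, and optional spaces.

```python
def binary_to_hex(bits: str) -> str:
    """Left-pad a binary string to whole nibbles, then convert nibble by nibble."""
    bits = bits.replace(" ", "")
    padded_len = -(-len(bits) // 4) * 4      # round length up to a multiple of 4
    padded = bits.zfill(padded_len)          # zeros go on the LEFT
    nibbles = [padded[i:i + 4] for i in range(0, len(padded), 4)]
    return "".join(format(int(nibble, 2), "X") for nibble in nibbles)

print(binary_to_hex("10110"))              # 16
print(binary_to_hex("1010111100101101"))   # AF2D
```

Padding on the right instead would turn 10110 into 1011 0000, which is B0, a completely different number.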

Real World Application: IPv6

We ran out of IP addresses years ago. IPv4 used decimal numbers (like 192.168.1.1), but it only allowed for about 4 billion addresses. That sounds like a lot until you realize every lightbulb and fridge now needs an IP.

Enter IPv6. These addresses look like this: 2001:0db8:85a3:0000:0000:8a2e:0370:7334.

That is pure hexadecimal. Why? Because an IPv6 address is 128 bits long. If we wrote that in binary, the address would be a paragraph long. If we used decimal, it would be a nightmare to calculate subnet masks. By using hex, we keep the address manageable while still providing enough unique IDs for every grain of sand on earth to have its own website.
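You can check the 128-bit claim yourself with Python's standard `ipaddress` module. This sketch uses the example address from above and prints each 16-bit group next to its binary form.

```python
import ipaddress

addr = ipaddress.IPv6Address("2001:0db8:85a3:0000:0000:8a2e:0370:7334")

# Each of the eight colon-separated groups is four hex digits = 16 bits.
for group in addr.exploded.split(":"):
    print(group, format(int(group, 16), "016b"))

# The whole address as one binary string.
bits = format(int(addr), "0128b")
print(len(bits))        # 128

# The same address with leading zeros and the run of zero groups collapsed.
print(addr.compressed)  # 2001:db8:85a3::8a2e:370:7334
```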

Actionable Insights for Mastery

If you really want to get good at this, stop using online converters for a day. Try to read the "hidden" hex around you.

- Practice the 8-4-2-1 method. It’s faster than looking at a table once you get the hang of it.

- Inspect Element on a website. Look at the CSS colors. Try to guess if a color has more "Red" or "Blue" just by looking at the first two digits of the hex code.

- Check your router settings. Look at your MAC address. It’s a 12-digit hex string. The first six digits (24 bits) identify the manufacturer of your device. Try converting the first two digits to binary.

- Memorize the "Anchor Points". Know that 7 is 0111 and F is 1111. If you know those, you can usually figure out the ones around them without much effort.
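For the MAC address exercise, here is a sketch of what the first byte's bits mean. The address below is made up for illustration, and `inspect_mac` is a hypothetical helper. The bit meanings come from the IEEE 802 addressing convention: the lowest bit of the first byte marks multicast, and the next bit marks a locally administered address.

```python
def inspect_mac(mac: str) -> dict:
    """Pull the manufacturer prefix and flag bits out of a MAC address."""
    octets = mac.replace("-", ":").split(":")
    if len(octets) != 6:
        raise ValueError("expected six hex pairs, e.g. 02:1A:2B:3C:4D:5E")
    first = int(octets[0], 16)
    return {
        "oui": ":".join(octets[:3]).upper(),          # first 24 bits: manufacturer prefix
        "first_octet_bits": format(first, "08b"),
        "multicast": bool(first & 0b01),              # lowest bit of the first byte
        "locally_administered": bool(first & 0b10),   # second-lowest bit
    }

# Hypothetical address, not a real device.
print(inspect_mac("02:1A:2B:3C:4D:5E"))
```

If the locally administered bit is set, the address was assigned in software (many phones randomize theirs), so the first six digits won't point to a real manufacturer.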

Understanding the transition from hex to binary isn't just for passing a computer science exam. It's about seeing the world the way your devices do. It turns the "black box" of technology into something transparent. Once you stop seeing letters and start seeing the bit-patterns they represent, you’re not just a user anymore—you’re someone who actually understands the machinery.