Precision is a weird thing. You can hold a ruler up to a piece of wood, eyeball a sixteenth of an inch, and call it a day. But when you’re dealing with the guts of a smartphone or the fuel injector of a high-performance engine, that ruler is basically a blunt club. This is where we run into the micrometre, or the "micron." Its proper symbol is µm, but because most keyboards lack a µ, it usually gets typed as "um." Honestly, trying to wrap your head around an um to inches conversion is like trying to explain the distance to the moon using only the length of your thumb. It’s small. Really small.

One micrometre is a millionth of a metre. If you take a single human hair, you’re looking at something roughly 70 microns wide. Now, imagine trying to translate that tiny sliver of reality into the imperial system we use for everything from Subway sandwiches to construction lumber. It’s a mess. Yet, if you’re a machinist or a lab tech, this conversion isn't just a math homework problem. It's the difference between a part that fits and a pile of expensive scrap metal.

The math behind um to inches conversion (and why it’s annoying)

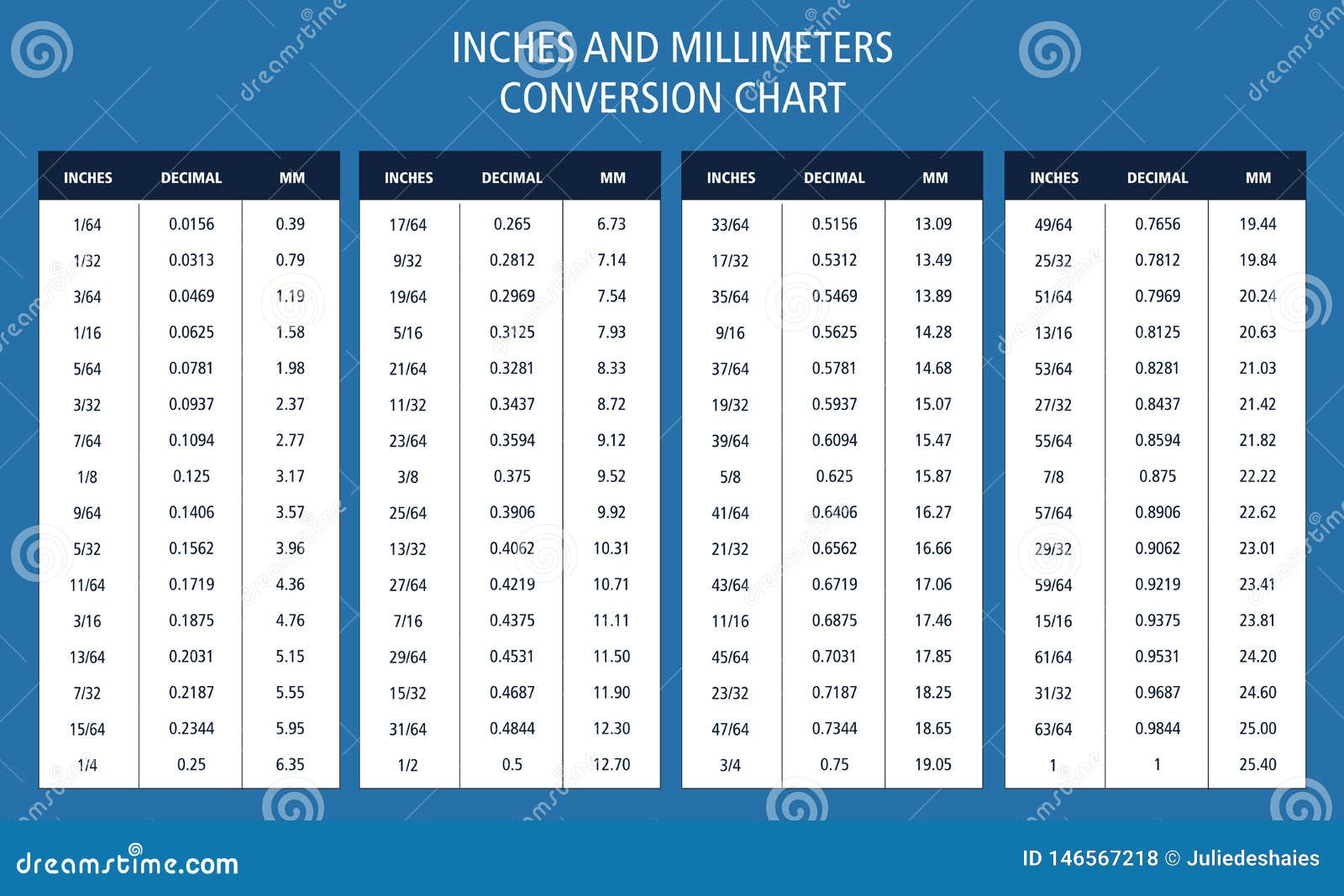

Let's get the raw numbers out of the way. One inch is exactly 25,400 micrometres. That’s the hard standard set back in 1959. So, if you want to go from um to inches, you’re dividing by 25,400.

$1 \text{ inch} = 25.4 \text{ mm} = 25{,}400 \ \mu\text{m}$

If you have a coating that is 10 microns thick, you do the math: $10 / 25,400$. You end up with 0.0003937 inches. Most people in a machine shop will just call that "four tenths," meaning four ten-thousandths of an inch. It's a linguistic shorthand that drives outsiders crazy. You're mixing decimals, fractions, and two different measurement systems in a single breath.
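For anyone who would rather let a script do the dividing, here is a minimal sketch of that arithmetic in Python. The function and constant names are made up for illustration; the only fact it leans on is the exact 25,400 figure above.

```python
UM_PER_INCH = 25_400  # exact by definition: 1 in = 25.4 mm = 25,400 um


def um_to_inches(um: float) -> float:
    """Convert micrometres to inches by dividing by 25,400."""
    return um / UM_PER_INCH


def inches_to_um(inches: float) -> float:
    """Convert inches to micrometres by multiplying by 25,400."""
    return inches * UM_PER_INCH


coating_in = um_to_inches(10)
print(f"{coating_in:.7f} in")               # 0.0003937 in
print(f"{coating_in * 10_000:.1f} tenths")  # 3.9 tenths, i.e. "four tenths" in shop talk
```

The second print shows where "four tenths" comes from: it is 3.9 ten-thousandths of an inch, rounded up in conversation.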

Why do we still do this? Because the United States is stubborn. While the rest of the scientific world moved to the clean, base-10 logic of the metric system, American manufacturing still breathes in inches. This creates a constant friction. You might get a blueprint from a designer in Germany using microns, but your CNC machine in Ohio is calibrated in inches. If you round off too early in your um to inches conversion, you’re toast.

Real-world stakes of getting it wrong

Think about the semiconductor industry. Companies like Intel or TSMC are working with transistors measured in nanometers, which are even smaller than microns (1,000 nanometers per micron). When they describe the thickness of a silicon wafer, they might use microns. If a technician miscalculates that conversion when setting up a tool, they could ruin a batch of chips worth millions.

It’s not just tech, though. Think about your car. The "clear coat" on your paint is usually between 35 and 50 microns thick. If a detailer uses a heavy-duty abrasive and takes off 10 microns, they’ve just removed a significant chunk of your car’s UV protection. They need to know exactly what that 10 um to inches conversion looks like—about 0.0004 inches—so they can calibrate their paint thickness gauge correctly.

Common pitfalls in the conversion process

The biggest mistake is rounding. People love rounding. It makes the brain feel good. But in the world of microns, rounding is the enemy.

If you round 0.0003937 to 0.0004, you’ve introduced a 1.6% error. That might sound like nothing. It’s tiny, right? But if you’re stacking twenty different parts in a high-pressure valve, those tiny errors compound. Suddenly, your valve leaks because the "tolerance stack-up" became too great.
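To put numbers on that, here is a rough sketch of the rounding error for a single 10-micron dimension and for a stack of twenty. It assumes the worst case, where every part carries the same error in the same direction; real stacks are rarely that unlucky, and the helper name is hypothetical.

```python
UM_PER_INCH = 25_400  # exact: 1 in = 25,400 um


def rounding_error(um: float, places: int = 4) -> tuple[float, float]:
    """Return (absolute error in um, relative error) caused by rounding
    the inch value to `places` decimal places."""
    exact_in = um / UM_PER_INCH
    rounded_in = round(exact_in, places)
    abs_error_um = (rounded_in - exact_in) * UM_PER_INCH
    rel_error = (rounded_in - exact_in) / exact_in
    return abs_error_um, rel_error


abs_um, rel = rounding_error(10)
print(f"per part: {abs_um:.2f} um ({rel:.1%})")       # per part: 0.16 um (1.6%)
print(f"20 parts, worst case: {20 * abs_um:.1f} um")  # 20 parts, worst case: 3.2 um
```

A sixth of a micron per part is invisible. A little over three microns across the assembly is more than a tenth of a "thou," which is the kind of number a tight tolerance actually cares about.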

Another issue is the "thou" vs. "micron" confusion. A "thou" is one-thousandth of an inch (0.001"). One micron is roughly 1/25th of a "thou." I've seen hobbyists get these confused and end up ordering parts that are literally 25 times smaller or larger than what they actually needed. It's an expensive way to learn a lesson about decimals.
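A quick way to see how far apart the two units are is to run the same bare number through both readings. This is only an illustrative sketch; the "5" is a hypothetical callout with the unit left off.

```python
UM_PER_THOU = 25.4  # 1 thou = 0.001 in = 25.4 um


def thou_to_um(thou: float) -> float:
    """Convert thousandths of an inch to micrometres."""
    return thou * UM_PER_THOU


def um_to_thou(um: float) -> float:
    """Convert micrometres to thousandths of an inch."""
    return um / UM_PER_THOU


spec = 5  # hypothetical dimension with no unit written next to it
print(f"read as thou:    {thou_to_um(spec):.1f} um")   # read as thou:    127.0 um
print(f"read as microns: {um_to_thou(spec):.3f} thou") # read as microns: 0.197 thou
```

Same digit on the drawing, a factor of 25.4 between the two parts that show up in the mail.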

Tools that actually work

Don't use a cheap plastic caliper for this. Just don't. A standard digital caliper usually has an accuracy of plus or minus 0.001 inches. Since a micron is 0.000039 inches, your caliper is basically guessing.

For real um to inches conversion verification, you need a micrometer. Not the unit of measurement, but the tool. A high-quality digital micrometer can measure down to a tenth of a "thou" (0.0001 inches). Even then, you’re still only seeing about 2.5 microns at a time. To see a single micron, you’re moving into the realm of laser interferometry or high-end air gauging.

- Standard Micrometer: Good for ± 2 microns.

- Digital Indicator: Can hit the 1-micron mark if it’s a high-end Mitutoyo or Mahr.

- Optical Comparator: Useful for checking shapes, but harder for raw conversion checks.
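If it helps to see the caliper and micrometer figures quoted earlier in this section in the unit you are actually trying to measure, a short sketch like this converts them to microns. The two values are the ones stated in the text; the dictionary and function names are just for illustration.

```python
UM_PER_INCH = 25_400  # exact: 1 in = 25,400 um


def resolution_in_um(resolution_in: float) -> float:
    """Express an instrument figure given in inches as micrometres."""
    return resolution_in * UM_PER_INCH


# Figures taken from the text above, in inches.
instruments = {
    "digital caliper (accuracy)": 0.001,
    "digital micrometer (resolution)": 0.0001,
}

for name, inches in instruments.items():
    print(f"{name}: {resolution_in_um(inches):.2f} um")
# digital caliper (accuracy): 25.40 um
# digital micrometer (resolution): 2.54 um
```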

Why the "Inch" won't die in precision manufacturing

You'd think we would have given up on inches by now. It’s 2026. The metric system is clearly superior for math. But the "Inch" is baked into the infrastructure. There are trillions of dollars worth of lathes, mills, and inspection tools in the US and UK that are natively "inch" machines.

Converting a whole factory from inches to microns isn't just about changing the settings on a screen. It’s about retraining the "feel" of the machinists. An experienced pro knows what a 0.002" clearance feels like with a feeler gauge. Ask them what 50 microns feels like, and they have to pause and do the mental math. That pause costs time, and in manufacturing, time is a heartless thief.

Actionable steps for accurate conversion

If you're stuck between these two worlds, don't wing it. Precision requires a process.

First, standardize your decimal places. If you are working in microns, keep at least six decimal places in your inch results until the very final step of your calculation. This prevents the "rounding creep" that ruins assemblies.
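Here is one way that habit might look in code, sketched with Python's `decimal` module so the intermediate values are not rounded behind your back. The three layer thicknesses are invented for the example.

```python
from decimal import Decimal

UM_PER_INCH = Decimal(25400)  # exact: 1 in = 25,400 um


def um_to_inches(um: str) -> Decimal:
    """Convert a micron value (given as a string) to inches at full precision."""
    return Decimal(um) / UM_PER_INCH


layers_um = ["10", "12.5", "7.5"]  # hypothetical stack of three layers

# Round only once, at the very end, to six decimal places.
late = sum(um_to_inches(u) for u in layers_um).quantize(Decimal("0.000001"))

# Round every layer to four places first, then add.
early = sum(um_to_inches(u).quantize(Decimal("0.0001")) for u in layers_um)

print(late)   # 0.001181
print(early)  # 0.0012
```

The gap between the two answers is about 0.000019 inches, or roughly half a micron, from just three layers.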

Second, use a dedicated conversion constant. Memorize 25.4, the exact number of millimetres in an inch. It is the bridge.

- Inches to mm: Multiply by 25.4

- mm to um: Multiply by 1,000

- um to inches: Divide by 25,400
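The three rules above chain together, and the 25,400 divisor is simply 25.4 times 1,000. A small sketch using exact fractions makes that visible; the function names are illustrative, not from any particular library.

```python
from fractions import Fraction

MM_PER_INCH = Fraction(254, 10)        # 25.4, held exactly
UM_PER_MM = 1_000
UM_PER_INCH = MM_PER_INCH * UM_PER_MM  # 25,400


def inches_to_mm(inches) -> Fraction:
    """Inches to millimetres: multiply by 25.4."""
    return Fraction(inches) * MM_PER_INCH


def mm_to_um(mm) -> Fraction:
    """Millimetres to micrometres: multiply by 1,000."""
    return Fraction(mm) * UM_PER_MM


def um_to_inches(um) -> Fraction:
    """Micrometres to inches: divide by 25,400."""
    return Fraction(um) / UM_PER_INCH


print(UM_PER_INCH)                             # 25400
print(float(mm_to_um(inches_to_mm("0.002"))))  # 50.8
print(f"{float(um_to_inches(50)):.6f}")        # 0.001969
```

Passing values as strings or integers keeps the fractions exact; converting to `float` is left to the last step, for display only.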

Third, verify with a physical reference. If you're calibrating a sensor to measure in microns but your reference blocks are in inches, use a Grade 0 gauge block. These are stabilized steel or ceramic blocks that are accurate to within a few millionths of an inch. They are the "gold standard" for making sure your digital conversion matches physical reality.

Finally, always double-check the "direction" of your math. It sounds stupid, but the most common error is multiplying when you should have divided. A micron is smaller than an inch. Therefore, your number in inches should always be much, much smaller than your number in microns. If you convert 10 microns and get 254,000 inches, you’ve clearly had a bad day at the calculator.
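That direction check is easy to automate. The sketch below takes a micron value and the inch figure someone claims it converts to, multiplies back, and reports whether the two agree. The 2% tolerance is an arbitrary assumption chosen so that shop-floor rounding still passes, and the function name is hypothetical.

```python
import math

UM_PER_INCH = 25_400  # exact: 1 in = 25,400 um


def plausible_inches(um: float, claimed_in: float, rel_tol: float = 0.02) -> bool:
    """Return True if `claimed_in` converts back to roughly `um` microns.

    rel_tol is an assumed tolerance (2%) that lets rounded values through
    while still catching a multiply-instead-of-divide mistake.
    """
    round_trip_um = claimed_in * UM_PER_INCH
    return math.isclose(round_trip_um, um, rel_tol=rel_tol)


print(plausible_inches(10, 0.0003937))  # True
print(plausible_inches(10, 0.0004))     # True  (rounded, but right direction)
print(plausible_inches(10, 254_000))    # False (multiplied instead of divided)
```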

Stop relying on mental math for anything under 100 microns. At that scale, the human brain isn't wired to visualize the difference between 0.0002" and 0.0003", but the machine you're building certainly is. Stick to the 25,400 divisor, use high-quality measurement tools, and always keep your decimal points in a straight row.