Tony Stark didn't just build suits. He built a vibe. Honestly, when we think about the most iconic parts of the Marvel Cinematic Universe, it isn’t the repulsor blasts or the snappy dialogue that sticks in the back of our brains—it's the way he moved his hands through thin air to manipulate data. Those glowing blue interfaces. The volumetric displays. Iron Man holograms changed how an entire generation views computing. They made our flat, glass rectangles look like Stone Age tools.

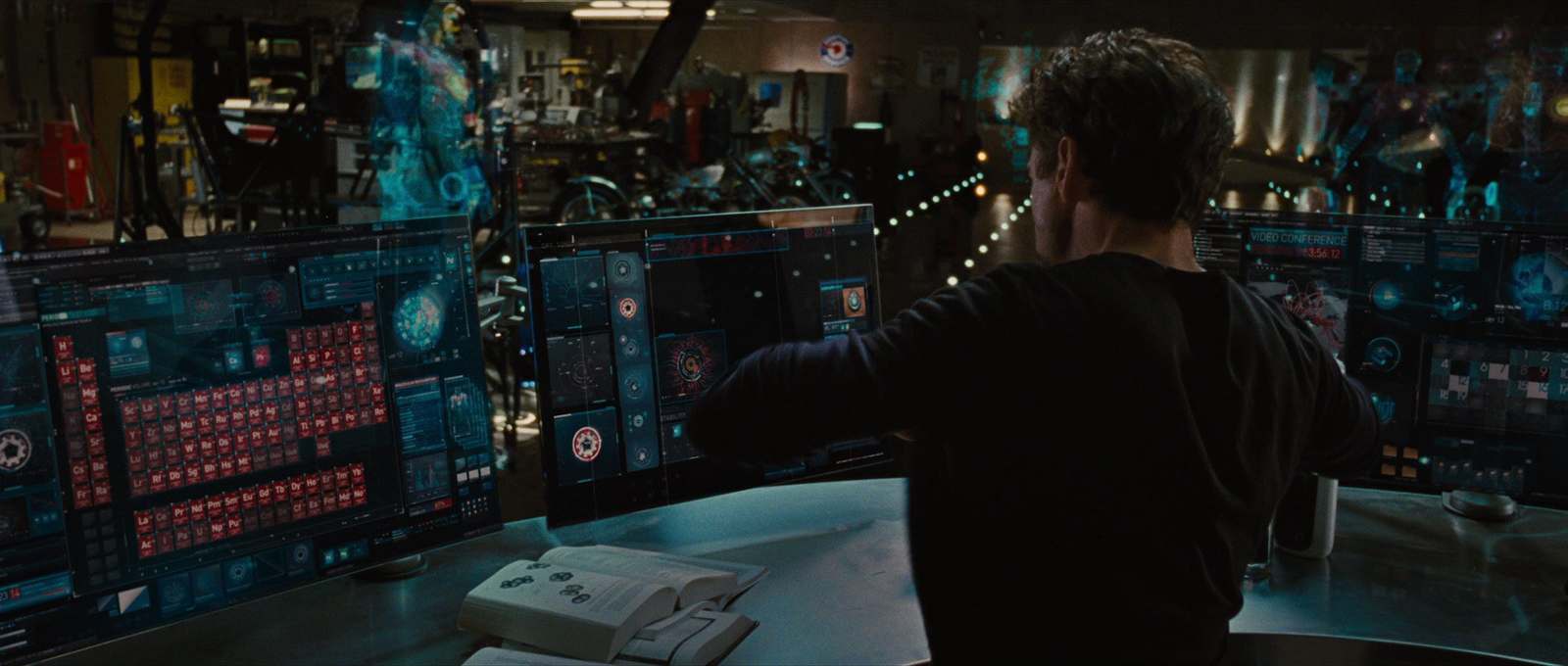

Think back to the basement in Malibu.

Stark is tossing virtual engine components across the room like they’re physical junk. That’s the dream, right? We want to touch digital things. But there's a huge gap between what Marvel showed us and what actually sits on our desks in 2026.

The Science of Volumetric Displays vs. Movie Magic

Most people call them holograms. Technically? They aren't. Not in the way physics defines a hologram, which usually involves laser interference patterns recorded on a medium. What Stark uses are more accurately described as volumetric displays or interactive light fields. In the films, especially starting with Iron Man 2 and The Avengers, these displays aren't tethered to a screen. They exist in free space.

In the real world, light doesn't just stop in mid-air. It keeps going until it hits something.

To make a real-life version of the Iron Man holograms, you need a medium to scatter that light, or a display clever enough to fake depth. This is where companies like Looking Glass Factory and researchers at Brigham Young University come in. BYU’s "Optical Trap Display" is probably the closest we’ve ever gotten to Stark tech. They use a laser to trap a tiny particle (basically a speck of dust) and move it so fast that it draws an image in the air. It’s like a 3D version of a long-exposure sparkler photo. It's tiny. It's flickering. But it is actually there.
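The "long-exposure sparkler" trick is persistence of vision, and some quick arithmetic shows why the images stay tiny. Here is a minimal Python sketch. It assumes the particle must retrace the entire outline about 10 times per second, which is an illustrative figure and not BYU's published spec. The 1 cm circle is a hypothetical drawing:

```python
import math

# Assumption (illustrative): the particle must retrace the full outline about
# 10 times per second for persistence of vision to blend it into one shape.
REDRAWS_PER_SECOND = 10.0


def circle_path_length(radius_m: float) -> float:
    """Length of one trip around a circular outline, in meters."""
    return 2 * math.pi * radius_m


def required_particle_speed(path_length_m: float,
                            redraws_per_second: float = REDRAWS_PER_SECOND) -> float:
    """Average speed (m/s) needed to redraw the whole path that many times per second."""
    return path_length_m * redraws_per_second


# Hypothetical drawing: a circle 1 cm across (radius 5 mm).
length = circle_path_length(0.005)
speed = required_particle_speed(length)
print(f"path: {length * 100:.2f} cm, particle speed: {speed:.3f} m/s")
```

Double the size of the drawing, or add more outlines, and the required speed climbs with the total path length. That's a big part of why these images are still small.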

Hollywood, however, doesn't care about the "optical trap." They care about the interface.

The genius of Perception, the design studio behind many of these interfaces for Marvel, wasn't just making them look pretty. They focused on a "Tactile User Interface" (TUI) approach. They wanted Stark to feel the data. When he pulls a wireframe apart, it shouldn't just scale up; it should react like a physical object. That's why we see those micro-interactions, the little haptic "clicks" represented by light pulses.
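In UI code, "react like a physical object" is usually done with a damped spring. The sketch below shows that general technique. It is not Perception's actual code, which isn't public. The stiffness and damping values are picked purely for illustration:

```python
from dataclasses import dataclass


@dataclass
class SpringAxis:
    """One axis of a UI element pulled toward a target by a damped spring (unit mass)."""
    position: float
    velocity: float = 0.0
    stiffness: float = 120.0  # k: pull strength (illustrative value)
    damping: float = 14.0     # c: how fast the wobble dies out (illustrative value)

    def step(self, target: float, dt: float) -> None:
        # Spring force toward the target plus friction against the motion: a = -k(x - target) - c*v
        accel = -self.stiffness * (self.position - target) - self.damping * self.velocity
        # Semi-implicit Euler: update velocity first, then position with the new velocity.
        self.velocity += accel * dt
        self.position += self.velocity * dt


def simulate(start: float, target: float, seconds: float = 1.0, dt: float = 1 / 120) -> list:
    """Return the element's position at each frame while it springs toward the target."""
    axis = SpringAxis(position=start)
    trace = []
    for _ in range(round(seconds / dt)):
        axis.step(target, dt)
        trace.append(axis.position)
    return trace


trace = simulate(start=0.0, target=1.0)
print(f"peak: {max(trace):.3f}, final: {trace[-1]:.3f}")
```

With these values the spring is underdamped. The element overshoots the target slightly and then settles, and that small "snap" is what makes a dragged wireframe feel like it has mass.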

Why We Are Still Obsessed With JARVIS and HUDs

It’s about the flow.


The Iron Man holograms inside the helmet, the Heads-Up Display or HUD, serve a functional purpose. It’s "cognitive load management." That sounds like boring corporate speak, but it's really just a way of saying Stark sees exactly what he needs without looking away from the fight.
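One simple way to picture cognitive load management is a priority filter. You rank everything the suit could show and draw only the few most urgent items. The sketch below is hypothetical: the `HudItem` class, the priority scale, and the three-item cap are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class HudItem:
    label: str
    priority: int  # hypothetical scale: higher means more urgent


def visible_items(items, max_items=3):
    """Return the labels of the most urgent items, most urgent first.

    sorted() is stable even with reverse=True, so items with equal priority
    keep their original order."""
    ranked = sorted(items, key=lambda item: item.priority, reverse=True)
    return [item.label for item in ranked[:max_items]]


feed = [
    HudItem("altitude", 5),
    HudItem("incoming missile", 10),
    HudItem("suit power", 7),
    HudItem("weather", 1),
    HudItem("target lock", 9),
]
print(visible_items(feed))  # ['incoming missile', 'target lock', 'suit power']
```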

Real-world pilots use this. The F-35 Lightning II helmet costs about $400,000 and does much of what the Mark III helmet did on screen in 2008. It projects flight data and targeting onto the visor, and it even "sees through" the floor of the plane using external cameras.

But for the rest of us? We’re stuck with mixed-reality headsets.

The Meta Quest 3 and the Apple Vision Pro are the current "peak." They get us most of the way to the Iron Man experience, but there's a catch. You have to wear a brick on your face. Stark’s tech was social. He could stand around a holographic table with Bruce Banner and they both saw the same thing. In our world, if you aren't wearing the goggles, you're just watching a guy wave his hands at nothing like a lunatic.

That "shared space" is the holy grail.

The Problems Nobody Talks About

We need to be real for a second. If you actually tried to work like Tony Stark for eight hours, your arms would fall off.

Designers call this "Gorilla Arm Syndrome." It’s a well-documented issue dating back to the early 1980s, when touchscreens started showing up on vertical monitors. Lifting your arms to manipulate Iron Man holograms in the air is exhausting. Our biology isn't built for mid-air sculpting. We need desks. We need places to rest our elbows.
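A quick statics estimate shows why. The sketch assumes the whole arm weighs about 5% of body mass and that its center of mass sits about 30 cm from the shoulder when the arm is extended. Both are rough illustrative figures, not measurements:

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def shoulder_torque(body_mass_kg, arm_fraction=0.05, com_distance_m=0.30, raised_angle_deg=90.0):
    """Static torque (N*m) the shoulder must hold against gravity for one arm.

    Assumptions (rough, illustrative): the arm is ~5% of body mass and its center
    of mass sits ~30 cm from the shoulder. raised_angle_deg is measured from
    hanging straight down: 0 = arm at your side, 90 = arm held out horizontally.
    """
    arm_mass_kg = body_mass_kg * arm_fraction
    # Only the horizontal distance from the shoulder to the arm's center of mass matters.
    lever_m = com_distance_m * math.sin(math.radians(raised_angle_deg))
    return arm_mass_kg * G * lever_m


for angle in (0, 45, 90):
    print(f"arm at {angle:>2} degrees: {shoulder_torque(75, raised_angle_deg=angle):5.2f} N*m")
```

Under these assumptions, a 75 kg person holding an arm out horizontally carries roughly 11 N·m of constant load on the shoulder, hour after hour. Resting the elbow on a desk takes that load off the shoulder, and that is why desks win.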

Then there’s the legibility.

Have you ever noticed how the holograms in the movies are always blue? There's a reason for that. Blue light has a shorter wavelength and reads as "high-tech," but it’s also terrible for contrast in a bright room. Our eyes are far less sensitive to blue than to green, so a blue glow adds very little perceived brightness on top of ambient light. Stark’s lab is usually dim or has specific lighting to make those displays pop. In a sunlit office, those holograms would be invisible ghosts.
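You can put a number on the blue problem with the standard sRGB relative-luminance weights: 0.2126 for red, 0.7152 for green, and 0.0722 for blue. This sketch treats a hologram as light added on top of the room. The two ambient levels are hypothetical values, normalized to the display's own peak white:

```python
def srgb_to_linear(channel_8bit: int) -> float:
    """Convert one 0-255 sRGB channel to linear light (standard sRGB transfer curve)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(r: int, g: int, b: int) -> float:
    """Relative luminance (0 = black, 1 = white) using the sRGB / Rec. 709 weights."""
    return (0.2126 * srgb_to_linear(r)
            + 0.7152 * srgb_to_linear(g)
            + 0.0722 * srgb_to_linear(b))


def additive_contrast(glow: float, ambient: float) -> float:
    """Contrast of light added on top of a background: (background + glow) / background."""
    return (ambient + glow) / ambient


blue = relative_luminance(0, 0, 255)
green = relative_luminance(0, 255, 0)
# Hypothetical ambient levels, normalized to the display's own peak white.
for room, ambient in (("dim lab", 0.01), ("sunlit office", 1.0)):
    print(f"{room:13}: blue {additive_contrast(blue, ambient):5.2f}x, "
          f"green {additive_contrast(green, ambient):5.2f}x")
```

Pure blue carries about a tenth of the luminance of pure green at the same drive level. In the sunlit case, it lifts the background by only about 7%.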

- Transparency is a curse: You can’t have "black" in a hologram because black is just the absence of light. You can't project "darkness."

- Haptic feedback: You can't "feel" a beam of light, though some startups are using focused ultrasound waves to create "air haptics" that tickle your skin when you touch a virtual button.

- Processing power: Rendering a 360-degree volumetric heart that you can "explode" into its component parts requires massive GPU overhead that, until recently, would have required a server rack the size of a fridge.
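The "air haptics" trick relies on phased-array focusing. Each ultrasound transducer fires with a slightly different delay, so every wavefront arrives at one spot at the same instant. At that spot the pressure adds up enough to feel. Here is a minimal geometric sketch, assuming a hypothetical five-element row of transducers and sound traveling at 343 m/s:

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C


def focus_delays(transducers, focal_point, c=SPEED_OF_SOUND):
    """Firing delay (seconds) for each transducer so all wavefronts reach
    focal_point at the same moment. The farthest transducer fires first (delay 0)."""
    distances = [math.dist(t, focal_point) for t in transducers]
    farthest = max(distances)
    # A closer transducer waits for the extra travel time the farthest one needs.
    return [(farthest - d) / c for d in distances]


# Hypothetical row of five transducers 1 cm apart, focusing 20 cm above the middle one.
row = [(i * 0.01, 0.0, 0.0) for i in range(-2, 3)]
delays = focus_delays(row, (0.0, 0.0, 0.20))
for (x, _, _), delay in zip(row, delays):
    print(f"x = {x * 100:+.0f} cm -> fire after {delay * 1e6:.2f} us")
```

Moving the focal point only means recomputing the delays. That is roughly how a focal spot can be steered across a fingertip.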

How to Get the "Stark Look" Today

You don't need a billion dollars, but you do need a bit of a nerd streak. If you want to move closer to the Iron Man hologram workflow, the path isn't through physical projectors yet; it's through spatial computing.

First, look at Spatial.io or Gravity Sketch. These platforms allow you to manipulate 3D models in a way that feels almost identical to the movie scenes. Gravity Sketch, in particular, is used by actual car designers at Ford to "walk around" their designs.

Second, check out the Looking Glass Go. It’s a small holographic display that doesn't require glasses. It uses a lenticular lens to send many slightly different views out at different angles, so each of your eyes sees a different image. The result is a real 3D effect. It’s the closest thing to having a piece of Stark’s desk on your own.
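Here is the geometry behind "a different image for each eye," as a simplified model. It assumes the panel spreads its views evenly across a horizontal viewing cone. The 48 views and 40° cone are illustrative numbers, not the Looking Glass Go's published specs:

```python
import math

EYE_SEPARATION_M = 0.063  # typical adult interpupillary distance, roughly 63 mm


def view_index(eye_x_m, distance_m, num_views=48, cone_deg=40.0):
    """Which of num_views images an eye sees, assuming the views are spread evenly
    across a horizontal cone of cone_deg degrees centered on the panel.
    Returns None if the eye is outside that cone."""
    angle = math.degrees(math.atan2(eye_x_m, distance_m))
    half = cone_deg / 2
    if not -half <= angle < half:
        return None
    return int((angle + half) / cone_deg * num_views)


# Viewer centered 50 cm from the panel: each eye sits half the eye separation off-center.
distance = 0.5
left = view_index(-EYE_SEPARATION_M / 2, distance)
right = view_index(+EYE_SEPARATION_M / 2, distance)
print(f"left eye sees view {left}, right eye sees view {right}")
```

Your eyes sit a few centimeters apart, so they land in different view slots, and your brain fuses the pair into depth. Move your head and you slide into neighboring views, which produces the look-around effect.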


Lastly, the AI side is finally catching up. The true power of the Iron Man holograms wasn't the light; it was JARVIS. The display was just the "face" of an intelligent system. With the rise of Large Language Models (LLMs) and Multimodal AI, we’re finally getting to a point where we can talk to our computers and have them "show" us results.
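The idea that the display is just the "face" of an intelligent system splits into two steps. First work out what the user wants, then render it. Below is a toy sketch of the first step. It uses a hypothetical keyword table; a real assistant would hand the transcript to a language model instead.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class DisplayCommand:
    action: str   # what the display should do: "show", "rotate", or "explode"
    subject: str  # the thing to act on


# Hypothetical phrase table; a real assistant would hand the transcript to a language model.
PHRASES = {
    "show": "show", "display": "show", "pull up": "show",
    "rotate": "rotate", "spin": "rotate",
    "explode": "explode", "break apart": "explode",
}


def parse_command(utterance: str) -> Optional[DisplayCommand]:
    """Turn a transcribed voice command into a display action, or None if unrecognized."""
    text = utterance.lower().strip().rstrip(".!?")
    # Check longer phrases first so multi-word verbs like "break apart" match as a unit.
    for phrase in sorted(PHRASES, key=len, reverse=True):
        if text.startswith(phrase + " "):
            rest = text[len(phrase):].strip()
            # Drop filler such as "me" and a leading article: "show me the heart" -> "heart".
            subject = re.sub(r"^(?:me\s+)?(?:(?:the|a|an)\s+)?", "", rest)
            return DisplayCommand(PHRASES[phrase], subject)
    return None


for said in ("Show me the arc reactor.", "Break apart the engine", "Make me a sandwich"):
    print(said, "->", parse_command(said))
```

Because the parser is separate from the renderer, you could swap the keyword table for an LLM later without touching the display code.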

Actionable Steps for Future-Proofing Your Workflow

If you’re a creator, designer, or just a tech enthusiast who wants that Stark-level efficiency, stop waiting for a glass table that glows. It’s not coming this year. Instead, do this:

- Adopt a "Spatial First" Mindset: Start using AR tools for layout tasks. Use apps like Polycam to scan real-world objects into 3D models. It's the same move Stark makes in Iron Man 2 when he has JARVIS digitize his father's Stark Expo model into a wireframe he can manipulate.

- Master Gesture Control: Invest in a Leap Motion Controller 2. It’s a small bar that sits on your desk and tracks your hands with incredible precision. You can map it to your PC to control music, scroll pages, or rotate 3D objects with a wave of your hand.
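Most "wave your hand" features boil down to watching how far the palm moves within a short time window. Here is a minimal swipe detector. It assumes your hand-tracking SDK gives you timestamped palm positions. The `(timestamp, x)` frame format and both thresholds are hypothetical and not part of the Ultraleap API:

```python
from typing import List, Optional, Tuple

Frame = Tuple[float, float]  # hypothetical format: (timestamp in seconds, palm x in meters)


def detect_swipe(frames: List[Frame], min_distance_m: float = 0.15,
                 max_duration_s: float = 0.5) -> Optional[str]:
    """Return "left" or "right" if the palm traveled at least min_distance_m
    within the last max_duration_s seconds, else None. frames are oldest first."""
    if len(frames) < 2:
        return None
    end_t, end_x = frames[-1]
    start_x = end_x
    # Walk back from the newest frame to the oldest one still inside the time window.
    for t, x in reversed(frames):
        if end_t - t > max_duration_s:
            break
        start_x = x
    dx = end_x - start_x
    if abs(dx) < min_distance_m:
        return None
    return "right" if dx > 0 else "left"


flick = [(0.0, 0.00), (0.1, 0.05), (0.2, 0.12), (0.3, 0.20)]
drift = [(0.0, 0.00), (1.0, 0.10), (2.0, 0.20)]
print(detect_swipe(flick), detect_swipe(drift))  # right None
```

The time window separates a deliberate flick from a slow drift of the hand, so lazily reaching for your coffee won't skip a track.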

- Prioritize Voice-to-Visual Pipelines: Start using AI tools that generate 3D assets from text. We are seeing the birth of "Text-to-3D." Soon, you'll say "show me a bracket for a 2024 Ducati" and the model will appear. That’s the real "Iron Man" magic.

The technology is fragmented right now. We have the screens, we have the AI, and we have the hand tracking. We’re just waiting for one device to stitch them together without the "brick on the face" requirement. Until then, keep your lab dim and your GPUs fast.