Ever get that feeling where your eyes just... stop working? You’ve been staring at a Google Doc for forty-five minutes. The words start swimming. You realize you’ve read the same sentence about "synergistic workflows" six times and still have zero clue what it means. It’s annoying. Honestly, it’s a bit of a cognitive wall. This is exactly where a text to speech reader stops being a "cool accessibility tool" and starts being a survival mechanism for the modern brain.

We’re basically living in an era of information gluttony.

But our eyes weren't really designed to stare at backlit glass for twelve hours a day. Evolution didn't prep us for the sheer volume of Slack messages, PDFs, and long-form Substacks we consume. So, we pivot. We use our ears. And the tech behind this has moved so fast that if you’re still thinking of those robotic, "Speak & Spell"-style voices from 2005, you’re missing out on some seriously impressive neural processing.

The Death of the Robot Voice

Nobody wants to listen to a toaster talk. For years, that was the biggest hurdle. You'd fire up a text to speech reader and it would sound like a Dalek with a head cold. It was choppy. The prosody—that’s the rhythm and intonation of language—was completely broken. It didn't know when to pause for a comma or how to raise its pitch for a question.

That changed with Neural TTS.

Big players like Google, Amazon (with Polly), and Microsoft started using deep learning to model how humans actually speak, right down to the pauses and breaths between sentences. They looked at things like "coarticulation," which is just a fancy way of saying how one sound bleeds into the next when we talk fast. Now, when you listen to something through a high-end text to speech reader like Speechify or NaturalReader, the model is predicting the intonation each phrase should carry from its context. It knows that a "!" at the end of a sentence shouldn't just be louder; it should have a different tonal lift.

It’s kinda wild. You can actually listen to a 30-page research paper while doing the dishes and not feel like your brain is being sanded down by a mechanical voice.
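The prosody cues from this section (a pause at a comma, a pitch lift on a question) can also be spelled out by hand using SSML, the W3C markup that many speech engines accept. Here is a minimal sketch of that hand-written approach. The function name, the 250 ms pause, and the "+10%" pitch are illustrative assumptions, and how an engine renders these tags varies; a neural voice infers most of this on its own.

```python
import re
from xml.sax.saxutils import escape


def to_ssml(text: str, pause_ms: int = 250, question_pitch: str = "+10%") -> str:
    """Wrap plain text in SSML with crude, hand-written prosody hints.

    The default pause length and pitch change are assumptions chosen
    for illustration, not values recommended by any engine.
    """
    # Split after sentence-ending punctuation that is followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    parts = []
    for sentence in sentences:
        if not sentence:
            continue
        # Escape &, < and > so the text is safe inside XML,
        # then add an explicit pause after every comma.
        marked = escape(sentence).replace(",", f',<break time="{pause_ms}ms"/>')
        # Raise the pitch of questions (a blunt stand-in for real intonation).
        if sentence.endswith("?"):
            marked = f'<prosody pitch="{question_pitch}">{marked}</prosody>'
        parts.append(marked)
    return "<speak>" + " ".join(parts) + "</speak>"


if __name__ == "__main__":
    print(to_ssml("Well, are you ready? Good."))
```

Rules like these are exactly what made older voices sound stiff: every comma gets the same pause and every question gets the same lift, no matter what the sentence means.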

Why Your Brain Prefers Listening (Sometimes)

There is this misconception that "listening isn't reading." It’s a bit elitist, frankly. Research, like the 2019 study from the University of California, Berkeley, published in The Journal of Neuroscience, showed that largely the same semantic areas of the brain are activated whether you’re taking in information via your eyes or your ears. The "word meaning" stays the same.

But here is the nuance.

For people with dyslexia, a text to speech reader isn't a shortcut; it's a bridge. It offloads the "decoding" part of reading—the struggle to turn letters into sounds—so the brain can focus entirely on "comprehension." If you spend all your energy just trying to figure out what the word says, you have no energy left to figure out what the story means.

I’ve seen students who were labeled "slow learners" suddenly start acing history exams because they could finally "read" the textbook with their ears. It’s about cognitive load. When you use a text to speech reader, you’re freeing up mental RAM. You can crank the speed up to 2x—which sounds crazy at first—and your brain actually adapts. You’re consuming a book a week while commuting. That’s a superpower.

The Real Tech Under the Hood

You might hear people talk about WaveNet. That’s Google’s baby. Unlike older systems that just stitched together tiny snippets of recorded human speech (concatenative TTS), WaveNet builds the waveform from scratch, one audio sample at a time. It’s like an artist painting a portrait pixel by pixel instead of making a collage out of old photos.
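To make the collage-versus-painting contrast concrete, here is a toy sketch in Python. It is not WaveNet: the fixed arithmetic rule below only stands in for the neural network, which in the real system predicts each next sample from many earlier samples plus information about the text. The function names, sample rate, and tone frequency are all assumptions for illustration.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed for this toy)


def concatenative(units: list[list[float]]) -> list[float]:
    """Stitch prerecorded snippets end to end, like the older systems."""
    out: list[float] = []
    for unit in units:
        out.extend(unit)
    return out


def autoregressive(n_samples: int, freq_hz: float = 220.0) -> list[float]:
    """Generate a waveform one sample at a time from the previous two.

    The fixed rule x[n] = 2*cos(w)*x[n-1] - x[n-2] produces a pure tone.
    It is a placeholder for the learned predictor in a neural vocoder.
    """
    w = 2 * math.pi * freq_hz / SAMPLE_RATE
    samples = [0.0, math.sin(w)]
    while len(samples) < n_samples:
        # Each new sample depends only on samples already generated.
        samples.append(2 * math.cos(w) * samples[-1] - samples[-2])
    return samples[:n_samples]


if __name__ == "__main__":
    stitched = concatenative([[0.1, 0.2], [0.3]])
    painted = autoregressive(8)
    print(stitched)
    print([round(s, 3) for s in painted])
```

The first function can only rearrange audio that already exists. The second never touches a recording, which is why sample-by-sample systems can produce sounds nobody ever recorded.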

Then you have ElevenLabs, which has recently set the bar for voice "cloning." They use a variant of the Transformer architecture (the same family of models that powers GPT) to understand the context of a sentence. If a character in a story is whispering, the text to speech reader actually whispers.

But it’s not all perfect. Let’s be real.

- Contextual errors: A reader might see "St." and say "Street" when the text meant "Saint."

- Acronyms: Sometimes it spells out "NASA" letter by letter as "N-A-S-A," or tries to pronounce an initialism like "FBI" as a single word.

- Emotional Flatness: Even the best AI can't quite capture sarcasm yet. It’s getting there, but it’s still a bit "straight-faced."
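The first two failure modes above come from the text clean-up step that runs before any audio is generated. Here is a rough sketch of what a rule-based version of that step might look like. Everything in it is hypothetical: the function names, the tiny `SPELL_OUT` list, and the "capital letter means Saint" heuristic are invented for illustration, and real readers use much larger lexicons and statistical models.

```python
import re

# Hypothetical list of initialisms to read letter by letter.
SPELL_OUT = {"FBI", "PDF", "TTS"}


def expand_st(text: str) -> str:
    """Expand "St." with a simple positional guess.

    "St." followed by a capitalized word becomes "Saint";
    any other "St." becomes "Street". The abbreviation's period is dropped.
    """
    text = re.sub(r"\bSt\.(?=\s+[A-Z])", "Saint", text)
    return re.sub(r"\bSt\.", "Street", text)


def expand_acronyms(text: str) -> str:
    """Space out listed initialisms; leave other all-caps words alone."""
    def repl(match: re.Match) -> str:
        word = match.group(0)
        return " ".join(word) if word in SPELL_OUT else word

    return re.sub(r"\b[A-Z]{2,}\b", repl, text)


def normalize(text: str) -> str:
    """Run both clean-up passes in order."""
    return expand_acronyms(expand_st(text))


if __name__ == "__main__":
    print(normalize("The FBI visited NASA near St. Louis on Elm St. today."))
```

It is easy to see how this breaks. "We parked on Elm St. Then we left." would come out as "Saint Then," because the guess only looks at the next word's capital letter. That is the kind of contextual error you still hear from time to time.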

Choosing the Right Tool for the Job

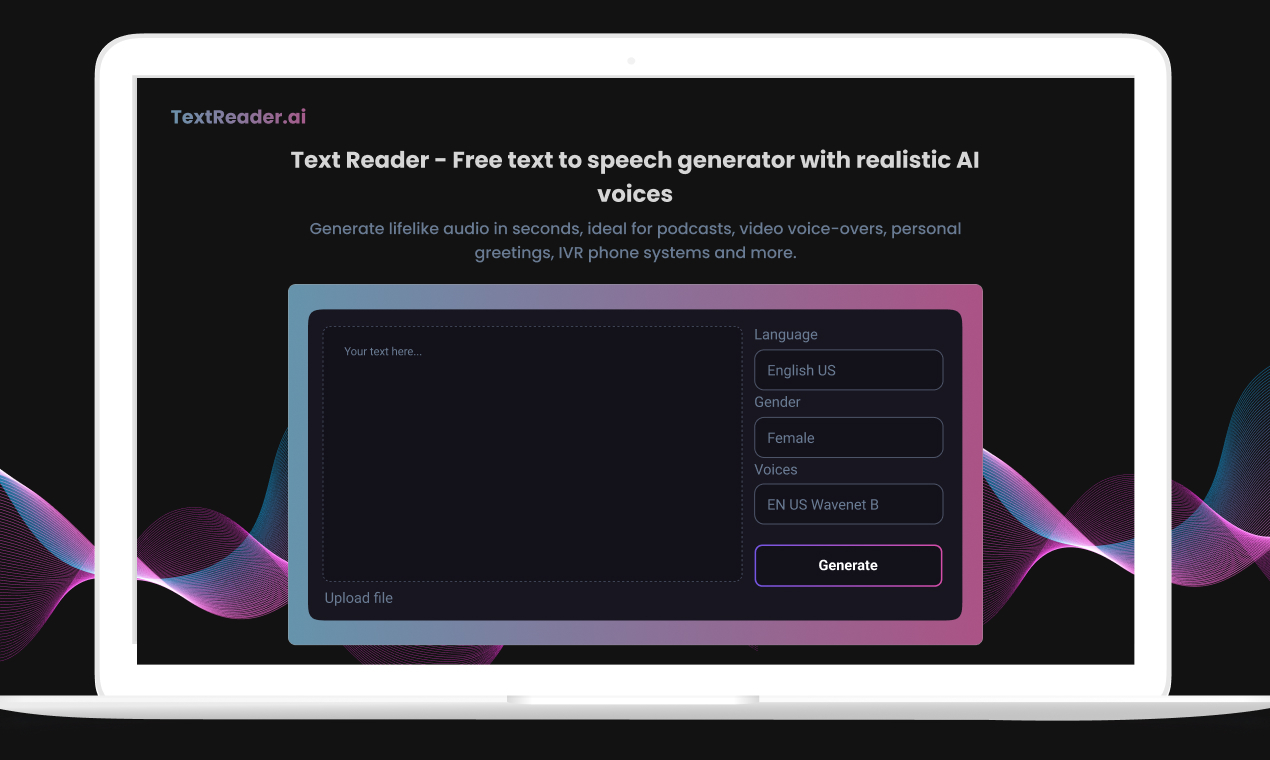

There are so many options now that it’s overwhelming. You’ve got browser extensions, standalone apps, and built-in OS features.

If you’re just trying to get through a long article, the "Read Aloud" feature in Microsoft Edge is surprisingly good. It’s free and uses their "Natural" voices, which are some of the best in the business. But if you’re a power user (say, a lawyer who needs to get through 500 pages of discovery), you’re probably looking at something like Speechify. Their "AI Voice" tech is specifically tuned for speed-listening.

For the Kindle crowd, there’s Immersion Reading. This is the gold standard. It syncs the professional narration from Audible with the text on your screen. As the text to speech reader (or human narrator) speaks, the words are highlighted. This dual-sensory input is a beast for retention. You remember more. Period.

Privacy and the "Uncanny Valley"

We have to talk about the creepy factor. Voice cloning is getting so good that people are getting scammed by "grandkid" voices on the phone. When you use a text to speech reader that allows you to clone your own voice, you're handing over a lot of biometric data. Most reputable companies encrypt this, but it’s something to keep in the back of your mind.

Also, there’s the "Uncanny Valley." Sometimes a voice is too human. It has little breaths and mouth clicks that can feel a bit jarring if you’re just trying to listen to a technical manual about plumbing. Sometimes, a slightly "cleaner," less-human voice is actually easier to focus on for long periods.

Moving Beyond Just "Reading"

The future of this tech isn't just about reading books. It’s about an "Audio-First" internet.

Imagine a world where your emails aren't something you look at, but something you "attend" to while walking the dog. We’re seeing more and more publications like The New York Times or The Atlantic provide a "listen" button at the top of every article. They aren't always using human voice actors; they’re using a high-fidelity text to speech reader.

And it makes sense. People are busy.

If you’re a creator, you should be thinking about this too. If your content isn't "listenable," you're locking out a huge chunk of your audience. That could be someone with a visual impairment, someone with ADHD who needs the "bimodal" stimulation to stay focused, or just a guy at the gym who wants to learn about quantum physics while hitting the treadmill.

How to Actually Use This Every Day

Don't just turn it on and hope for the best. You have to train your ears.

Start at 1.2x speed. It’ll feel a little fast for about five minutes. Then, your brain will normalize it. A week later, bump it to 1.5x. Eventually, you’ll find that 2x is your "sweet spot" for non-fiction. For fiction, keep it slow. You want to hear the drama.
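To put numbers on that speed ramp, here is a quick back-of-the-envelope sketch. It assumes a narration pace of roughly 150 words per minute at 1x, which is a ballpark figure and not something any particular app guarantees. The function name and the 9,000-word example are made up for illustration.

```python
def listening_minutes(word_count: int, speed: float, base_wpm: float = 150.0) -> float:
    """Estimate listening time in minutes at a given playback speed.

    base_wpm is an assumed narration pace at 1x, not a measured value.
    """
    if speed <= 0 or base_wpm <= 0:
        raise ValueError("speed and base_wpm must be positive")
    return word_count / (base_wpm * speed)


if __name__ == "__main__":
    # The speed steps suggested above, applied to a 9,000-word report.
    for speed in (1.0, 1.2, 1.5, 2.0):
        minutes = listening_minutes(9_000, speed)
        print(f"{speed}x: about {minutes:.0f} minutes")
```

Under that assumption, a 9,000-word report drops from about an hour at 1x to about half an hour at 2x, and even the gentle 1.2x starting point saves around ten minutes.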

- Get a Browser Extension: Use something like "Selection Reader" or "Read Aloud." It makes any website instantly audible.

- Use Pocket or Instapaper: These apps let you save articles for later and have built-in text to speech reader functions that work great on mobile.

- Check Your OS Settings: Both iOS (Spoken Content) and Android (Select to Speak) have these features baked into the software for free. You don't always need to pay for a subscription.

- Listen to Your Own Writing: This is the best editing tip ever. When you hear a text to speech reader read your own work back to you, you will instantly hear every clunky sentence, every repeated word, and every weird transition. It’s brutal, but it works.

The goal here isn't to replace your eyes. It’s to give them a break. It's about making the most of those "dead" moments in your day—the commute, the chores, the mindless gym sessions. By turning text into a portable, auditory experience, you're basically gaining hours of productive time that used to just disappear.

Try it with this article. Copy the text, throw it into a reader, and see if you catch something you missed while scanning. You probably will.

Immediate Action Steps

Stop treating "reading" as a purely visual task. Start by enabling the native text-to-speech tools on your phone. Go to Settings > Accessibility > Spoken Content on an iPhone and turn on "Speak Screen." Next time you’re in a long article, swipe down with two fingers from the top of the screen. Let the AI take over. See how much more you actually finish when you aren't tied to a desk.

If you're a student or a professional, download a dedicated app like Speechify or NaturalReader for a week. Use it for your most "boring" reading. The difference in your fatigue levels at 4 PM will be noticeable. We’re moving toward a screenless interface for a lot of our data consumption; you might as well get ahead of the curve now.