You’re sitting in the interview. The whiteboard is staring back at you, blank and clinical. The interviewer just asked you to design TikTok or a global payment gateway like Stripe, and suddenly, your brain feels like it’s running on a 56k dial-up modem. We've all been there. It’s that specific brand of panic where you forget whether a Load Balancer comes before or after the API Gateway. This is exactly why having a system design cheat sheet tucked away in your mental back pocket isn't just "nice to have"—it is survival.

Most people treat system design like a trivia game. They memorize definitions of Sharding or Paxos without actually understanding the "why" behind the trade-offs. Honestly? That's the fastest way to get a "no" from a Senior Staff Engineer. Real engineering is about the messy middle. It's about knowing that when the network partitions, choosing consistency means giving up some availability.

The Core Foundations of a System Design Cheat Sheet

Before we get into the heavy stuff like Consistent Hashing or Gossip Protocols, we need to talk about the "Golden Rule" of high-level architecture: The CAP Theorem. Eric Brewer pointed this out years ago, and it still dictates almost every decision we make in distributed systems today.

Basically, you get to pick two: Consistency, Availability, or Partition Tolerance. But here’s the kicker—in a distributed world, network partitions are inevitable. You must have Partition Tolerance. So, the real choice is usually between CP (Consistency and Partition Tolerance) and AP (Availability and Partition Tolerance). If you’re building a banking app, you want CP. If you're building a social media feed where it doesn't matter if a post shows up five seconds late, you go for AP.

How to Estimate Scale Without Looking Like a Rookie

If you don't do the math, you aren't doing system design. You're just drawing boxes. Every system design cheat sheet should include "Back-of-the-envelope" estimations.

Think about it this way. If a service has 100 million daily active users (DAU) and each user makes 10 requests a day, that’s a billion requests. Spread that over 86,400 seconds in a day, and you’re looking at roughly 12,000 queries per second (QPS). Knowing these rough numbers helps you decide if you need a single SQL instance or a massive NoSQL cluster.
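Here is that arithmetic as a minimal Python sketch. The function names and the 2x peak factor are assumptions for illustration, not figures from any real service.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_qps(daily_active_users: int, requests_per_user: int) -> float:
    """Average queries per second, assuming traffic is spread evenly over the day."""
    return daily_active_users * requests_per_user / SECONDS_PER_DAY


def peak_qps(avg_qps: float, peak_factor: float = 2.0) -> float:
    """Rough peak estimate. The 2x default is an assumed rule of thumb."""
    return avg_qps * peak_factor


avg = average_qps(100_000_000, 10)
print(f"average: {avg:,.0f} QPS")            # average: 11,574 QPS
print(f"peak:    {peak_qps(avg):,.0f} QPS")  # peak:    23,148 QPS
```

Real traffic is never flat, so it is worth stating your peak assumption out loud and sizing for the peak, not the average.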

- Latency Matters: L1 cache access is about 0.5 nanoseconds.

- Disk vs Memory: Reading 1 MB sequentially from memory takes about 250 microseconds, while reading the same 1 MB from a spinning disk takes about 20,000 microseconds (20 milliseconds).

- The Global Factor: A round trip from California to the Netherlands and back is roughly 150 milliseconds. New York to London is shorter, typically around 70 to 80 milliseconds.

When you start throwing these numbers around in an interview, you stop looking like a student and start looking like someone who has actually stayed up at 3 AM fixing a production outage.

Load Balancing and the Art of Not Crashing

Where do you put the Load Balancer (LB)? Everywhere. Seriously. Between the user and the web server, between the web server and the internal services, and between the services and the database.

You've got options here. Round Robin is the simplest, but it's kinda dumb. It just sends requests in order regardless of how hard the server is working. Least Connections is smarter: it sends each new request to the server with the fewest active connections. Then there's IP Hash, which is great if you need a user to keep talking to the same server for session persistence.
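To make the three strategies concrete, here is a minimal sketch in Python. The class names and server labels are hypothetical, and this is not how Nginx or HAProxy implement them internally. It only shows the selection logic.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotation, ignoring load."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Send each request to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when the request finishes.
        self.active[server] -= 1


class IPHash:
    """Map a client IP to the same server every time (sticky sessions)."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]


servers = ["app-1", "app-2", "app-3"]
rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']
```

Note that this IP Hash uses a plain modulo, so changing the number of servers reshuffles most clients.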

Why Nginx and HAProxy Still Rule

Even in 2026, with all the fancy cloud-native tools, Nginx and HAProxy are the heavy lifters. They handle SSL termination, compression, and even basic caching. If your system design cheat sheet doesn't mention the "Sidecar" pattern for modern microservices—where you use something like Envoy—you’re missing the shift toward Service Meshes.

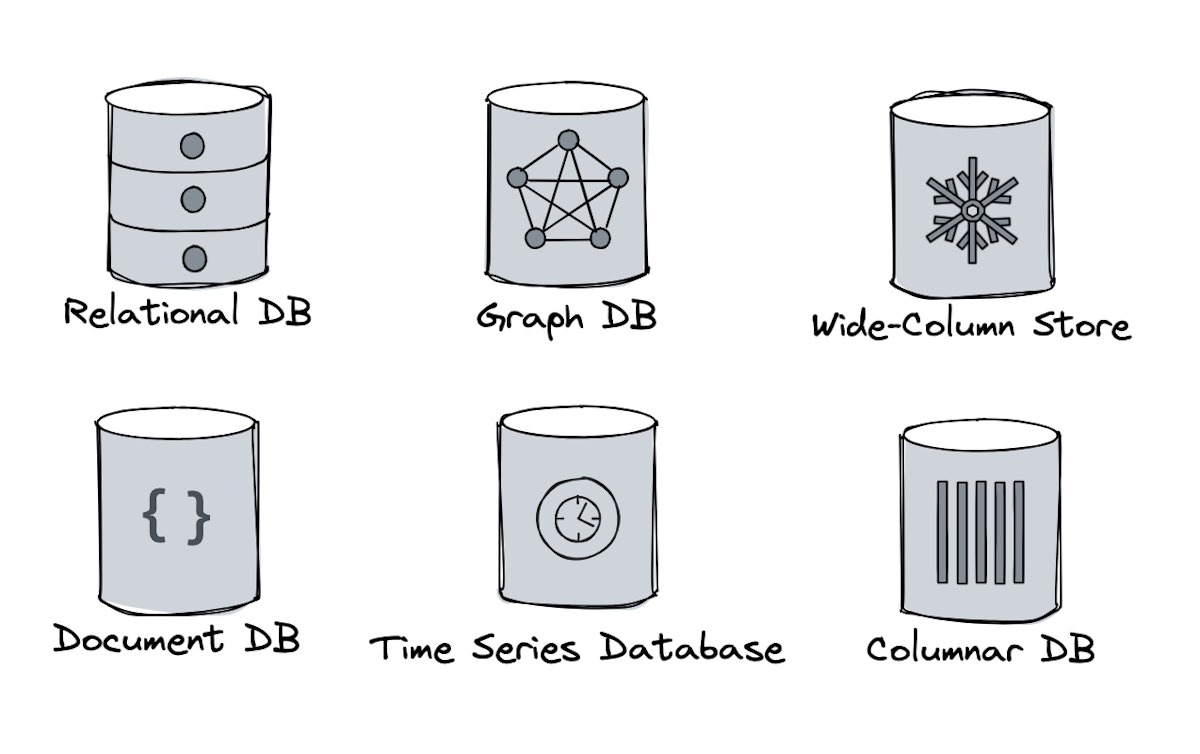

Databases: Picking Your Poison

This is where most designs go to die. Should you use SQL or NoSQL?

If your data is highly structured and you need ACID compliance (Atomicity, Consistency, Isolation, Durability), stick with Postgres or MySQL. Don't let anyone tell you SQL doesn't scale. Look at Vitess (used by YouTube) or Citus. They shard SQL like a pro.

On the flip side, if you're dealing with massive amounts of unstructured data or need high write throughput, NoSQL is your friend.

- Key-Value: Redis, Memcached (Lightning fast, great for sessions).

- Document: MongoDB, CouchDB (Flexible schema, good for content management).

- Wide-Column: Cassandra, ScyllaDB (Built for massive scale; Cassandra is used by Instagram and Netflix).

- Graph: Neo4j (Perfect for social networks or fraud detection).

The Sharding Headache

Sharding is basically horizontal partitioning. You split your big database into smaller pieces (shards) based on a key, like UserID. But be careful. If you shard by "Last Name," and everyone on your app is named "Smith," you've just created a massive hot spot, so pick a key with high cardinality and an even spread. The other headache is resharding. With naive modulo hashing, adding or removing a node remaps almost every key. This is why Consistent Hashing is such a vital part of any system design cheat sheet. It minimizes the amount of data that needs to be moved when you add or remove a node from your cluster.
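A minimal sketch of a consistent hash ring in Python, assuming SHA-256 for placement and 100 virtual nodes per server. The `HashRing` class, the node names, and the key format are all hypothetical. Production systems add replication and weighting on top of this.

```python
import bisect
import hashlib


def _position(key: str) -> int:
    """Place a key on the ring using the first 8 bytes of its SHA-256 digest."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes=(), vnodes=100):
        self.vnodes = vnodes  # virtual nodes per server, to smooth the spread
        self._ring = []       # sorted list of (position, node)
        for node in nodes:
            self.add(node)

    def add(self, node):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_position(f"{node}#{i}"), node))

    def remove(self, node):
        self._ring = [(pos, n) for pos, n in self._ring if n != node]

    def get(self, key):
        """Return the first node clockwise from the key's position."""
        if not self._ring:
            raise LookupError("ring is empty")
        # (pos,) sorts before any (pos, node), so this finds the first entry >= pos.
        idx = bisect.bisect_left(self._ring, (_position(key),))
        return self._ring[idx % len(self._ring)][1]  # wrap around at the end


ring = HashRing(["node-a", "node-b", "node-c"])
keys = [f"user-{i}" for i in range(1000)]
before = {k: ring.get(k) for k in keys}

ring.add("node-d")
after = {k: ring.get(k) for k in keys}

moved = [k for k in keys if before[k] != after[k]]
print(f"{len(moved)} of {len(keys)} keys moved, all of them to node-d")
```

The point to notice is that every key that moves goes to the new node, and the rest stay put. With plain `hash(key) % N`, most keys would change shards.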

Caching: The Speed Demon

Caching is the closest thing we have to magic in computer science. You can put a cache at the client level (browser), the CDN (Content Delivery Network), or the server side.

Redis is the industry standard for a reason. It’s an in-memory data structure store that is stupidly fast. But you have to manage it. What happens when the cache gets full? You need an eviction policy.

- LRU (Least Recently Used): Kick out the stuff that hasn't been touched in a while.

- LFU (Least Frequently Used): Get rid of the unpopular stuff.

- FIFO: First in, first out.
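LRU is the policy interviewers most often ask you to code up, so here is a minimal sketch using Python's `collections.OrderedDict`. The `LRUCache` name and its methods are illustrative. Redis implements its own approximated LRU, which this does not try to mirror.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" is now the most recently used
cache.put("c", 3)   # cache is full, so "b" gets evicted
print(cache.get("b"))  # None
print(cache.get("a"))  # 1
```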

And then there’s the "Cache Aside" pattern. The application looks in the cache. If it’s not there (a cache miss), it grabs it from the DB and stuffs it into the cache for next time. Simple. Effective.
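The read path above can be sketched in a few lines of Python. Here a plain dict stands in for Redis and another dict stands in for the database. The `CacheAside` class and the `load_from_db` callback are hypothetical names. The write path shown (update the DB, then invalidate the cache entry) is one common choice, not the only one.

```python
class CacheAside:
    def __init__(self, cache, load_from_db, save_to_db):
        self.cache = cache                # stand-in for Redis
        self.load_from_db = load_from_db  # called only on a cache miss
        self.save_to_db = save_to_db

    def get(self, key):
        value = self.cache.get(key)
        if value is not None:
            return value                  # cache hit
        value = self.load_from_db(key)    # cache miss: go to the DB
        if value is not None:
            self.cache[key] = value       # populate for next time
        return value

    def update(self, key, value):
        self.save_to_db(key, value)
        self.cache.pop(key, None)         # invalidate so the next read reloads


database = {"user:1": "Ada"}
db_reads = []


def load(key):
    db_reads.append(key)  # record every trip to the DB
    return database.get(key)


store = CacheAside(cache={}, load_from_db=load, save_to_db=database.__setitem__)
store.get("user:1")
store.get("user:1")
print(len(db_reads))  # 1
```

A real deployment would also set a TTL on each entry so stale data eventually expires on its own.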

Communication: How Services Talk Without Screaming

In a monolithic app, everything happens in one process. In distributed systems, everything happens over the network, and the network is unreliable.

Synchronous (REST/gRPC)

This is when Service A calls Service B and waits for an answer. REST is the classic: it's easy, human-readable, and works over HTTP. gRPC is the newer, cooler sibling. It uses Protocol Buffers (Protobuf) instead of JSON and runs over HTTP/2, which typically makes payloads smaller and serialization faster. If you're building internal microservices, gRPC is usually the right call.

Asynchronous (Message Queues)

This is for when you don't need an answer right away. Think of an "Order Confirmed" email. You don't want the user to wait for the email to send before they see the success page. You drop a message into RabbitMQ or Apache Kafka, and a worker process picks it up whenever it's ready.
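Here is the "Order Confirmed" flow as a minimal in-process sketch, using Python's standard `queue` and `threading` modules as a stand-in for a real broker. The function names and the `None` shutdown signal are assumptions for illustration. RabbitMQ and Kafka clients have their own APIs, which this does not imitate.

```python
import queue
import threading


def email_worker(jobs, sent):
    """Pull order IDs off the queue and 'send' a confirmation for each."""
    while True:
        order_id = jobs.get()
        if order_id is None:  # shutdown signal (an assumed convention)
            jobs.task_done()
            break
        sent.append(f"confirmation for order {order_id}")
        jobs.task_done()


jobs = queue.Queue()
sent = []
worker = threading.Thread(target=email_worker, args=(jobs, sent), daemon=True)
worker.start()


def place_order(order_id):
    jobs.put(order_id)     # enqueue and return immediately
    return "success page"  # the user is not kept waiting for the email


print(place_order(101))
print(place_order(102))

jobs.join()     # only for the demo: wait until the worker has caught up
jobs.put(None)  # tell the worker to stop
worker.join()
print(sent)
```

The request path only pays for `jobs.put`. Everything slow happens on the worker's time.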

Kafka is particularly interesting because it’s a distributed commit log. It handles millions of messages per second and allows you to "replay" events if something goes wrong. It’s the backbone of event-driven architecture.
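The "replay" idea is easier to see in code. Below is a toy append-only log in Python where each consumer tracks its own offset. `EventLog` and `Consumer` are hypothetical names, and this is a conceptual sketch only, not Kafka's client API. It leaves out partitions, persistence, and consumer groups.

```python
class EventLog:
    """Append-only list of events. Reading never removes anything."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)
        return len(self._events) - 1  # the offset of the new event

    def read_from(self, offset):
        return self._events[offset:]


class Consumer:
    def __init__(self, log):
        self.log = log
        self.offset = 0  # position of the next unread event

    def poll(self):
        events = self.log.read_from(self.offset)
        self.offset += len(events)
        return events

    def seek(self, offset):
        self.offset = offset  # rewind to replay earlier events


log = EventLog()
for event in ["order_created", "payment_captured", "order_shipped"]:
    log.append(event)

consumer = Consumer(log)
print(consumer.poll())  # ['order_created', 'payment_captured', 'order_shipped']
print(consumer.poll())  # []

consumer.seek(1)        # something went wrong downstream, so replay from offset 1
print(consumer.poll())  # ['payment_captured', 'order_shipped']
```

A traditional queue deletes a message once it is consumed. A log keeps it, which is what makes rewinding possible.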

Microservices vs. The Majestic Monolith

Don't jump straight to microservices.

It’s tempting. It’s trendy. But it adds a massive amount of operational complexity. You now have to deal with service discovery, distributed tracing (using tools like Jaeger), and complex deployment pipelines. Sometimes, a well-structured monolith is all you need until you’re at a certain scale.

If you do go microservices, you need an API Gateway. It acts as the single entry point for all clients. It handles authentication, rate limiting, and request routing. It keeps the "scary" internal stuff hidden from the public internet.
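Rate limiting is the gateway feature you are most likely to be asked to explain, and a token bucket is one common way to do it. This is a minimal Python sketch. The `TokenBucket` class and its parameters are hypothetical, and the injectable `clock` is there only to make the example deterministic.

```python
import time


class TokenBucket:
    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity    # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self, cost=1.0):
        """Return True if the request may proceed, False if it should be rejected."""
        now = self.clock()
        # Refill based on elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


fake_now = [0.0]  # a controllable clock for the demo
bucket = TokenBucket(rate=1, capacity=2, clock=lambda: fake_now[0])

print([bucket.allow() for _ in range(3)])  # [True, True, False]
fake_now[0] = 1.0                          # one second later: one token refilled
print(bucket.allow())                      # True
```

In a real gateway you would keep one bucket per API key or client IP, usually in a shared store like Redis so every gateway instance sees the same counts.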

Real-World Nuance: The Stuff They Don't Tell You

Most system design cheat sheet resources ignore the "soft" side of architecture. For example, Observability.

If a system goes down and you don't have logs, metrics, and traces, you're flying blind. You need the ELK stack (Elasticsearch, Logstash, Kibana) or Prometheus and Grafana. You need to know your "P99" latency, meaning the latency that 99% of requests come in under; the slowest 1% take longer. If your average latency is 100ms but your P99 is 5 seconds, one request in a hundred is painfully slow, and your most active users, who make the most requests, are the ones most likely to hit it.
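To see how an average can hide a bad tail, here is a small Python sketch that computes percentiles with the nearest-rank method. The sample latencies are made up for illustration. Monitoring systems like Prometheus estimate percentiles differently, from histograms.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Made-up data: 989 fast requests and 11 very slow ones.
latencies_ms = [45] * 989 + [5000] * 11

mean = sum(latencies_ms) / len(latencies_ms)
print(f"mean: {mean:.1f} ms")                      # mean: 99.5 ms
print(f"p50:  {percentile(latencies_ms, 50)} ms")  # p50:  45 ms
print(f"p99:  {percentile(latencies_ms, 99)} ms")  # p99:  5000 ms
```

The mean looks healthy at about 100 ms, yet just over 1% of requests take 5 seconds.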

Also, consider "Security." It’s not an afterthought. You need to think about OAuth2, JWTs (JSON Web Tokens), and encryption at rest and in transit. A system that is fast but leaks user data is a failure.

Practical Next Steps for Your Architecture Journey

Knowing the components is only half the battle. You have to practice putting them together.

Start by picking a popular app and trying to clone it on paper.

- Design a URL Shortener (Bitly): Focus on read/write ratios and hashing.

- Design a Chat System (WhatsApp): Focus on WebSockets and long polling for real-time updates.

- Design a Web Crawler: Focus on multithreading and avoiding duplicate content.
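For the URL shortener, one common alternative to hashing the long URL is to give each link a numeric ID and encode that ID in base 62. That is the technique sketched here in Python, not a hash. The alphabet order and function names are assumptions, and real services pick their own.

```python
import string

# 0-9, then a-z, then A-Z: 62 URL-safe characters (the order is an arbitrary choice)
ALPHABET = string.digits + string.ascii_lowercase + string.ascii_uppercase
BASE = len(ALPHABET)


def encode(number: int) -> str:
    """Turn a non-negative integer ID into a short base-62 string."""
    if number < 0:
        raise ValueError("ID must be non-negative")
    if number == 0:
        return ALPHABET[0]
    chars = []
    while number:
        number, remainder = divmod(number, BASE)
        chars.append(ALPHABET[remainder])
    return "".join(reversed(chars))


def decode(code: str) -> int:
    """Turn a base-62 string back into the integer ID."""
    number = 0
    for ch in code:
        number = number * BASE + ALPHABET.index(ch)
    return number


print(encode(61))  # Z
print(encode(62))  # 10
print(decode(encode(123_456_789)) == 123_456_789)  # True
```

Seven base-62 characters cover 62^7 IDs, roughly 3.5 trillion, which is a handy number for the capacity estimate.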

Actionable Insights to Take Away:

- Always ask for requirements first. Never start drawing until you know the DAU, the read/write ratio, and the data retention policy.

- Focus on the bottlenecks. Is it the CPU? The Memory? The Network I/O? Identify it early.

- Embrace Failure. Assume every server will crash. Use "Redundancy" and "Replication" to make sure the system stays up anyway.

- Keep it Simple. Don't use Kafka if a simple Python script and a Cron job will work. Over-engineering is the enemy of progress.

Go through your system design cheat sheet one more time. Make sure you understand the "Trade-offs." In the world of high-level architecture, there are no "best" solutions, only choices that involve losing something to gain something else. If you can explain why you chose a specific database over another, you’ve already won half the battle. Now, go build something that doesn't break.