You open Google Search Console, click on the indexing report, and there it is. A giant grey bar. You see the phrase discovered - currently not indexed staring back at you like a digital "Keep Out" sign. It's frustrating. You spent hours writing that post, and Google knows it exists, yet it won’t show it to anyone.

Why?

Most people think it’s a bug. It isn’t. Honestly, it’s just Google being picky about its resources. Your site isn't broken, but Google has decided that, for right now, your content isn't a priority for their crawlers.

The harsh truth about crawl budget and quality

Googlebot is busy. It has billions of pages to look at every single day. When you see discovered - currently not indexed, it means Google found the URL, maybe through a sitemap or a link, but it hasn't actually crawled the page yet. It has put the URL in a "to-do" pile that might be a mile high.

Gary Illyes from Google has mentioned multiple times that this usually comes down to "crawl budget." But that's a bit of a buzzword. Basically, if Google thinks your site is a bit slow, or if it expects (based on what it has already seen of your site) that the content on those specific pages is thin or repetitive, it won't rush to crawl them. It’s like a restaurant that knows you’re standing outside but decides not to open the door because the kitchen is already struggling with the tables it has.

Sometimes the issue is purely technical. If your server is having a bad day and throws a 5xx error when Googlebot tries to visit, Google might back off. They don't want to crash your site. So, they wait. And they wait. Meanwhile, your traffic stays at zero.

Is your content actually unique?

We have to be real here. A lot of times, discovered - currently not indexed happens because the content is too similar to something else on the web—or even on your own site. If you have ten pages all talking about "the best way to peel an orange," Google might index the first three and flag the rest as discovered but not indexed.

They aren't saying your page is "bad." They're saying it doesn't add anything new to their index right now. It's redundant.

I’ve seen this happen a lot with e-commerce sites. Imagine a store with 500 different t-shirts, but each page is exactly the same except for the color of the shirt. Google sees 500 URLs but only one "idea." It’s going to ignore most of those pages. It’s not a penalty; it’s an efficiency tactic.
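If you want a rough number for how similar two pages are, one common approach is to compare overlapping word sequences ("shingles") with Jaccard similarity. The sketch below is an illustration only: the function names, the three-word shingle size, and the 0.8 threshold are all assumptions, and nothing here reflects how Google actually measures duplication.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams ("shingles") in text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict, threshold: float = 0.8) -> list:
    """pages maps URL -> body text. Returns (url_a, url_b, score) for
    every pair whose similarity is at or above the threshold.

    The threshold is an arbitrary starting point, not a Google rule.
    """
    sets = {url: shingles(body) for url, body in pages.items()}
    pairs = []
    for (u1, s1), (u2, s2) in combinations(sets.items(), 2):
        score = jaccard(s1, s2)
        if score >= threshold:
            pairs.append((u1, u2, round(score, 2)))
    return pairs
```

Feed it the visible body text of your product pages. On short descriptions a single changed word shifts the score a lot, so tune the threshold against pages you already know are duplicates.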

The "Overloaded Server" theory

If your hosting is cheap, you’re more likely to see this. When Googlebot hits a site, it measures how fast the server responds. If the server starts lagging, Googlebot slows down. If it keeps lagging, Googlebot stops. It marks those pages as "discovered" because it saw the link, but it never actually crawled them because it didn't want to overload your server.

How to actually get these pages into Google

First, don't panic. You can't just mash the "Request Indexing" button a thousand times. That rarely works for this specific status. Instead, you need to change how Google perceives the value of those pages.

Internal linking is your best friend. Seriously.

If you have a page stuck in this status, go to your most popular, highest-ranking post. Add a natural, helpful link from that "powerhouse" page to the stuck page. This tells Google, "Hey, this page is important enough that my best content is talking about it." It gives the bot a strong reason to re-evaluate the priority of that URL.

Check your sitemaps too. Are they messy? If your sitemap is full of 404s or redirects, Google starts to distrust it. A clean, updated XML sitemap is like giving Googlebot a high-quality map instead of a napkin sketch.
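Here is a minimal sketch of a sitemap audit, assuming a standard XML sitemap in the sitemaps.org format. The `get_status` argument is a hypothetical function you supply yourself (for example, a thin wrapper around your HTTP client that does not follow redirects); it is kept separate so the parsing and grouping can run without touching the network.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps.org sitemap files.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_text: str) -> list:
    """Extract every <loc> value from a standard XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(f"{SITEMAP_NS}loc") if loc.text]


def audit_sitemap(xml_text: str, get_status) -> dict:
    """Group sitemap URLs by the kind of response they return.

    get_status is caller-supplied (an assumption of this sketch): it takes
    a URL and returns its HTTP status code without following redirects.
    """
    report = {"ok": [], "redirect": [], "missing": [], "other": []}
    for url in sitemap_urls(xml_text):
        status = get_status(url)
        if status == 200:
            report["ok"].append(url)
        elif 300 <= status < 400:
            report["redirect"].append(url)
        elif status in (404, 410):
            report["missing"].append(url)
        else:
            report["other"].append(url)
    return report
```

Anything that lands in `redirect` or `missing` is a candidate for removal from the sitemap, or for replacement with the final destination URL.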

Fixing the "Thin Content" problem

If you suspect the content might be the issue, you have to beef it up.

- Add original images (not stock photos).

- Include data or quotes that aren't anywhere else.

- Make the word count meaningful—don't just add fluff.

- Ensure the metadata (Title tags and H1s) isn't identical to other pages.
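The last item on that list is easy to check in bulk. The sketch below assumes you have already exported each page's title tag and H1 (from a crawler or your CMS) into a plain dictionary; the function names and the input shape are made up for illustration.

```python
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't hide duplicates."""
    return " ".join(text.lower().split())


def duplicate_metadata(pages: dict) -> dict:
    """pages maps URL -> (title, h1).

    Returns {(field, normalized_value): [urls]} for every title or H1
    that is shared by more than one URL.
    """
    seen = defaultdict(list)
    for url, (title, h1) in pages.items():
        seen[("title", normalize(title))].append(url)
        seen[("h1", normalize(h1))].append(url)
    return {key: urls for key, urls in seen.items() if len(urls) > 1}
```

Every group in the result is a set of pages that look identical to a crawler at the metadata level, which makes them good candidates for a rewrite or a merge.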

I once worked with a travel blog that had 40 pages in the discovered - currently not indexed bucket. They were all short "photo gallery" posts with about 100 words of text. We combined those 40 short posts into 5 massive, high-quality guides. Within two weeks, all 5 were indexed and ranking on the first page. More isn't always better. Sometimes, less is more.

The role of Site Authority

New sites deal with this more than established ones. If your domain is only a month old, Google doesn't trust you yet. You're a stranger. Why should they spend their precious computing power indexing 1,000 pages from a stranger? They won't. They’ll index five or ten, see how people react, and keep the rest in the "discovered" pile.

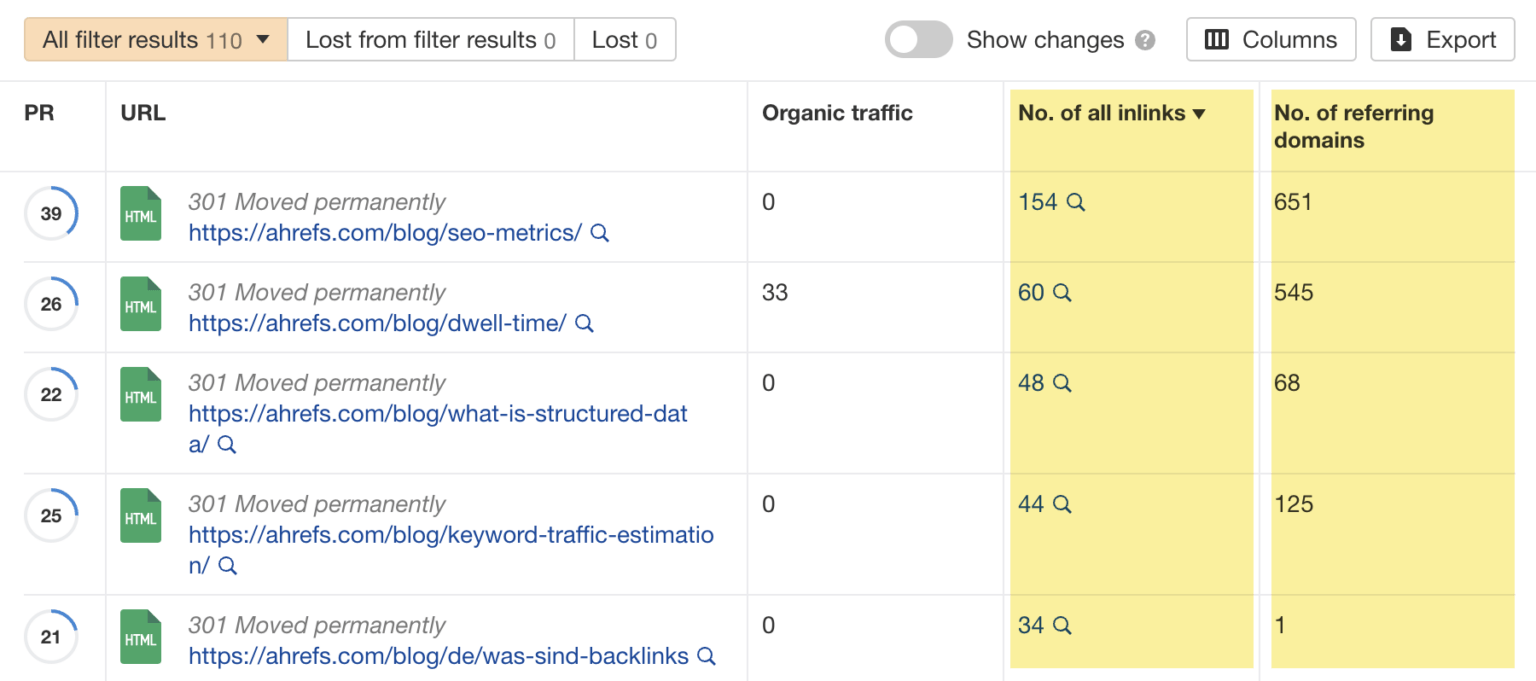

As you get more backlinks from reputable sites, your "crawl priority" goes up. This is a slow game. You can't skip the line. You just have to keep proving that your site provides value to users.

Technical checks you can do right now

Use the URL Inspection tool in GSC for one of the affected pages. Click "Test Live URL." If it says "URL is available to Google," then your robots.txt and noindex tags are fine. The problem is purely about Google's choice, not a technical block. If the live test fails, you’ve found your smoking gun—usually a rogue noindex tag in your header or a robots.txt rule you forgot about.
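If you'd rather check for those two blockers yourself across many pages, here is a rough local sketch using only Python's standard library. It assumes you have already downloaded the robots.txt text and the page HTML. Two caveats: Python's `urllib.robotparser` does not resolve conflicting Allow/Disallow rules exactly the way Googlebot does, and the optional `x_robots_tag` argument (for a noindex sent as an HTTP header rather than a meta tag) is an addition of this sketch. Treat the URL Inspection tool as the final word.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def blocked_by_robots(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text disallows url for agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, url)


class _RobotsMetaFinder(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k.lower(): (v or "") for k, v in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", "").lower())


def has_noindex(html: str, x_robots_tag: str = "") -> bool:
    """Return True if a robots meta tag or the X-Robots-Tag value contains noindex."""
    finder = _RobotsMetaFinder()
    finder.feed(html)
    sources = finder.directives + [x_robots_tag.lower()]
    return any("noindex" in source for source in sources)
```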

Also, check your "Crawl Stats" report in the settings of Search Console. Look for a spike in "Total crawl requests" followed by a drop. If you see a lot of "Server errors (5xx)," your host is the bottleneck. You might need to upgrade your plan or move to a faster provider like WP Engine or Cloudways.
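You can cross-check the Crawl Stats report against your own server logs. The sketch below assumes the widely used "combined" access log format; if your server logs differently, the regular expression will need adjusting. It also identifies Googlebot by user-agent string alone, which can be spoofed, so read the numbers as a rough indicator.

```python
import re
from collections import Counter

# Assumes the "combined" access log format:
# ip - - [time] "METHOD /path HTTP/x" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines) -> Counter:
    """Count responses by status class (2xx, 3xx, ...) for Googlebot requests."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")[0] + "xx"] += 1
    return counts


def server_error_share(counts: Counter) -> float:
    """Fraction of Googlebot requests that ended in a 5xx response."""
    total = sum(counts.values())
    return counts["5xx"] / total if total else 0.0
```

Pass it an open log file (any iterable of lines works). A server error share that is consistently above a few percent is worth raising with your host.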

Stop waiting and start acting

You can't just wait for Google to "get around to it." Sometimes they do, but often they don't.

If a page has been stuck in discovered - currently not indexed for more than a month, it's a signal. Google is telling you something is wrong with the value proposition of that page or the site’s technical health.

Take a look at your site architecture. Is the page more than three clicks away from the homepage? If it’s buried that deep, Googlebot might never bother. Bring it closer to the surface. Put it in your footer, or better yet, a "Featured Posts" section on the sidebar.
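Click depth is easy to compute once you have a map of your internal links. This sketch assumes you've exported that map from a crawler as a dictionary of page to linked pages (a hypothetical input shape), and uses a breadth-first search from the homepage. The three-click cutoff is the rule of thumb from this article, not a documented Google limit.

```python
from collections import deque


def click_depths(links: dict, home: str = "/") -> dict:
    """Breadth-first search from the homepage.

    links maps each page to the list of pages it links to.
    Returns the minimum number of clicks needed to reach each reachable page.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def buried_pages(links: dict, home: str = "/", max_clicks: int = 3) -> list:
    """Return pages deeper than max_clicks, including unreachable (orphan) pages."""
    depths = click_depths(links, home)
    known = set(links) | {t for targets in links.values() for t in targets}
    deep = [p for p in known if depths.get(p, float("inf")) > max_clicks]
    return sorted(deep)
```

Pages that no internal link reaches at all show up in the result too, since they are effectively infinitely deep.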

Actionable Next Steps

Start by auditing the URLs in that report. Sort them by importance. If the pages aren't actually important, maybe they shouldn't even be in your sitemap. Delete the junk.

For the pages that do matter, follow this checklist:

- Update the content to be significantly better than what's currently ranking for that keyword.

- Add 2-3 internal links from high-authority pages on your own site.

- Check your server response time alongside your Core Web Vitals; a consistently slow or overloaded server gives Googlebot a reason to crawl fewer of your pages.

- Ensure the URL is included in your XML sitemap and that the sitemap is submitted correctly.

- If the page is very similar to another one you own, use a canonical tag to point to the "main" version, or merge them.
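For the canonical item in that checklist, here is a small sketch that reads the `<link rel="canonical">` tag out of each variant page and reports any that don't point at the version you consider the main one. It assumes you already have the HTML of each variant in hand; the function names are made up for illustration.

```python
from html.parser import HTMLParser


class _CanonicalFinder(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.href = None

    def handle_starttag(self, tag, attrs):
        attr = {k: (v or "") for k, v in attrs}
        rels = attr.get("rel", "").lower().split()
        if tag == "link" and "canonical" in rels and self.href is None:
            self.href = attr.get("href") or None


def canonical_of(html: str):
    """Return the canonical URL declared in html, or None if there isn't one."""
    finder = _CanonicalFinder()
    finder.feed(html)
    return finder.href


def miscanonicalized(variants: dict, main_url: str) -> list:
    """variants maps URL -> HTML. Returns URLs whose canonical is not main_url."""
    return [url for url, html in variants.items() if canonical_of(html) != main_url]
```

The comparison is an exact string match, so a trailing slash or an `http` versus `https` difference counts as a mismatch. That is usually what you want, since those really are different URLs.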

If you do these things, the "discovered" status will eventually flip to "indexed." It won't happen overnight, but it will happen. Google wants to index good content—you just have to prove that yours is worth the effort.