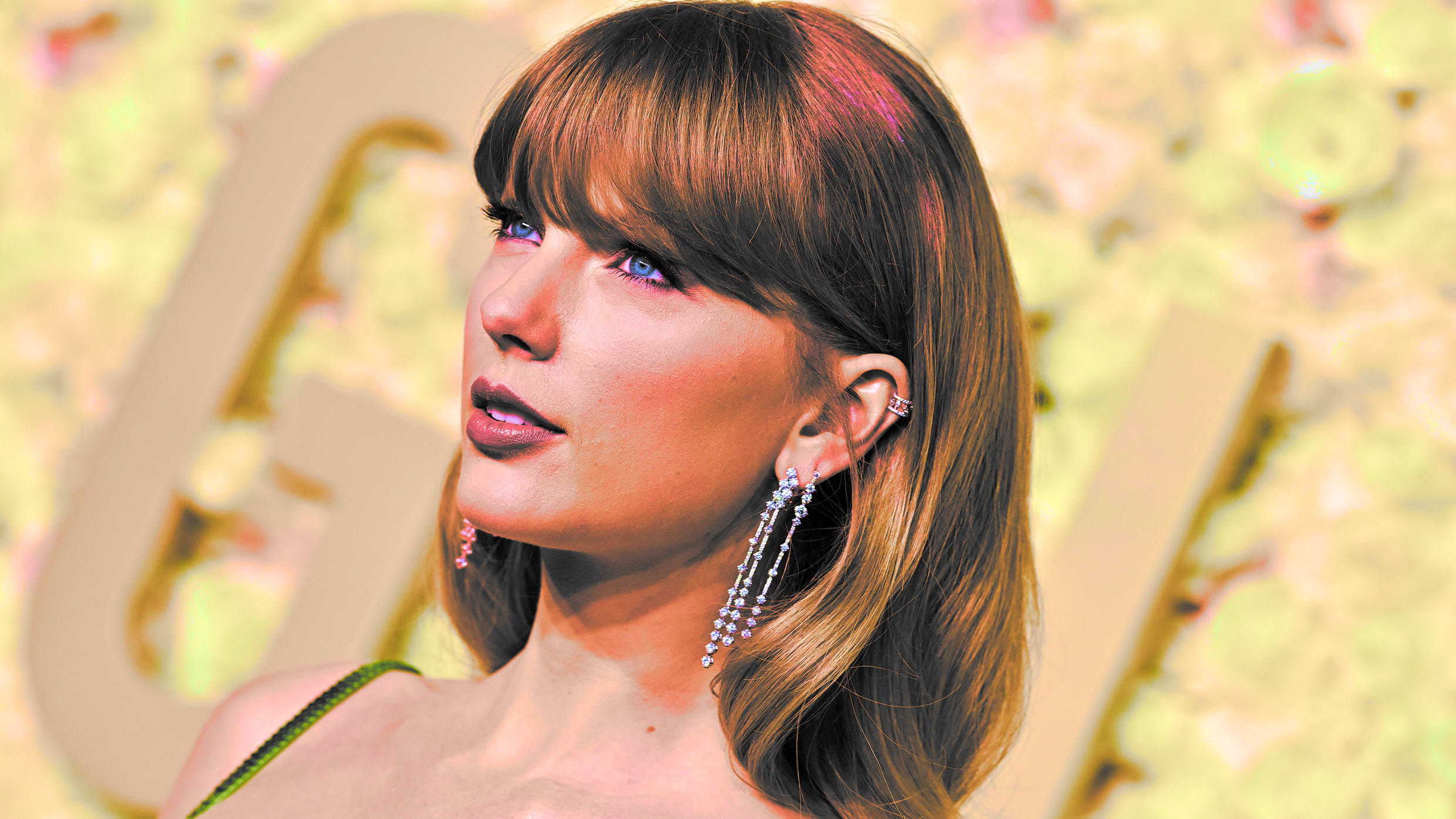

It happened fast. One minute, the internet was its usual chaotic self; the next, X (formerly Twitter) was a total disaster zone. In late January 2024, a wave of non-consensual, sexually explicit AI-generated deepfakes of Taylor Swift flooded social media feeds. This wasn't just another weird celebrity rumor or a bad Photoshop job. It was a massive, coordinated digital assault that broke the systems we rely on to keep the web sane.

People were pissed.

Actually, "pissed" is an understatement. The images racked up millions of views before moderation tools caught up with them; one post was reportedly viewed tens of millions of times before it came down. The episode forced the whole world to realize that the technology used to make "fun" AI art can be weaponized against anyone, even the most powerful pop star on the planet. If it could happen to her, with her army of lawyers and millions of fans, what chance does a high school student or an office worker have? Honestly, not much.

The Viral Nightmare and Why It Stuck

The images reportedly originated in a Telegram group where users trade "challenges" to generate non-consensual imagery. It’s a dark corner of the web that most of us never see, but what gets made there leaks out. From there, it hit X. Because X's "For You" feed surfaces posts that are getting heavy engagement, these images weren't just available; they were being actively pushed to people who weren't even looking for them.

The speed was terrifying.

X eventually had to take the nuclear option: it blocked all searches for "Taylor Swift" for a few days. You’d type her name in and get nothing. It was a crude, low-tech fix for a high-tech problem, and it showed just how unprepared these massive tech companies really are. We’re living in an era where the generative tools are moving at Mach 5 while the safety guardrails are still putting their shoes on.

Microsoft’s Designer tool was also dragged into the mess. Reporting by 404 Media suggested that the people behind these images were using Designer and getting past its built-in filters with clever wordplay. It’s a game of cat and mouse. The AI companies patch a hole, and the "prompters" find a way to crawl through a different one. It’s exhausting to track.

The Legal Void

Here’s the kicker: at the time, the United States had no federal law that specifically banned the creation or distribution of non-consensual AI-generated deepfakes.

That sounds fake, right? You’d think there’d be a law. But nope.

Most states have some form of "revenge porn" law, but those laws were usually written with real photos or videos of a real person in mind. When the image is synthesized entirely by a machine, even if it looks exactly like a specific human, the legal definitions get messy. Legal experts call this a "legislative gap": a giant hole in our justice system that bad actors are driving a truck through.

How the Tech Actually Works (And Why It’s Hard to Stop)

Deepfakes like the Taylor Swift images are usually created with diffusion models. Stable Diffusion is a popular choice because its model weights are openly released: you can run it on your own computer, without any "corporate" filters, if you know what you’re doing.

- Training Data: Base models are trained on enormous scraped collections of images. Since Taylor Swift is one of the most photographed humans in history, those collections very likely include her face from nearly every angle. For a less famous person, a relatively small set of photos can be enough to fine-tune a model on their likeness.

- LoRA (Low-Rank Adaptation): A lightweight way to fine-tune a model. Instead of retraining the whole thing, LoRA trains a small set of extra weights that nudge the original model's behavior. Think of it like a specialized plug-in that tells the AI, "Hey, make everything look like this one person."

- In-painting: This lets a user mask part of an existing image and have the AI regenerate just that region, for example swapping out the face or the body.
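For the curious, the "plug-in" idea can be written down in a few lines. In the standard LoRA formulation, a layer's original weight matrix $W_0 \in \mathbb{R}^{d \times k}$ stays frozen and only a low-rank correction is learned. This is a simplified sketch that leaves out details such as the scaling factor often applied to the correction, and the sizes in the last line are illustrative, not taken from any particular model:

```latex
\begin{aligned}
W' &= W_0 + \Delta W = W_0 + BA,
  \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k) \\
\text{full fine-tune: } & dk \text{ trainable parameters} \\
\text{LoRA: } & r(d + k) \text{ trainable parameters} \\
d = k = 1024,\ r = 8: \quad & dk = 1{,}048{,}576, \quad r(d + k) = 16{,}384 \;(\approx 1.6\%)
\end{aligned}
```

The takeaway: a LoRA is tiny compared with the model it modifies, which is a big part of why these "plug-ins" are so easy to train, share, and swap around.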

Because the software is decentralized, you can’t just "turn it off." If OpenAI blocks a prompt, users just go to an unfiltered model. It’s like trying to ban a specific type of paintbrush. You can stop selling it in stores, but people will just make their own at home.

The reality is that some "safety filters" amount to little more than a thin layer of code that looks for keywords like "naked" or "sexy." If you use a prompt like "Taylor Swift wearing a transparent outfit made of water," no keyword trips, and the AI may generate something explicit anyway, because it doesn't truly "understand" context. It just predicts pixels.
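To see why keyword matching is such a weak guardrail, here’s a deliberately naive filter sketched in Python. The blocklist and the `naive_prompt_filter` function are hypothetical, made up for illustration; no real product is claimed to work exactly like this. It only checks which words appear, so a prompt that avoids the listed words sails through no matter what it describes.

```python
import re

# Hypothetical blocklist, for illustration only. Real term lists aren't public,
# and real moderation systems layer more checks on top of anything like this.
BLOCKED_TERMS = {"naked", "nude", "sexy", "explicit"}


def naive_prompt_filter(prompt: str) -> bool:
    """Return True if the prompt is allowed, False if a blocked word appears.

    It matches whole lowercase words only, so it knows which words a prompt
    contains, not what the prompt actually describes.
    """
    words = re.findall(r"[a-z]+", prompt.lower())
    return not any(word in BLOCKED_TERMS for word in words)


if __name__ == "__main__":
    print(naive_prompt_filter("a sexy portrait"))  # False: keyword hit
    # True: no listed word appears, whatever the prompt is meant to produce
    print(naive_prompt_filter("a portrait of someone in a transparent outfit made of water"))
```

Better systems often add classifiers that inspect the generated image itself, but the core weakness of judging a request by its vocabulary is exactly what the wordplay tricks exploit.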

The Impact on Real People

We talk about celebrities because they have the platform to make noise, but the real victims are often people you know. A 2019 report by Deeptrace (now Sensity AI) found that about 96% of deepfake videos online were non-consensual pornography, and almost all of them targeted women.

This isn't a "tech" problem; it's a harassment problem that got a massive power boost from silicon.

When the Taylor Swift situation peaked, SAG-AFTRA (the actors' union) came out swinging, calling the images "upsetting, harmful, and deeply concerning." The union has been pushing for the NO FAKES Act in Congress, which aims to give every person a "property right" in their own likeness. If someone used your face to make money or create content without your permission, you could sue them into the ground. It seems like common sense, but big tech lobbyists worry about how it might "stifle innovation."

Honestly, if your "innovation" relies on violating people's privacy, maybe it needs a little stifling.

What's Being Done Right Now?

It’s not all doom and gloom. There are people fighting back, and the Taylor Swift incident was a massive catalyst.

- The DEFIANCE Act: This is a bipartisan bill introduced in the Senate that would allow victims of non-consensual AI porn to sue the people who produced or distributed it.

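- Watermarking: Companies like Google and Meta are starting to label AI-generated images. Google's SynthID embeds an invisible watermark directly in the pixels, while C2PA is an industry standard for attaching signed "Content Credentials" metadata that records how a file was made. The idea is that even if an image looks real, the file itself will scream "I AM A ROBOT." The catch: metadata can be stripped by something as simple as a screenshot.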
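For the metadata side, here’s a rough Python sketch of how you might check whether a JPEG even claims to carry Content Credentials. It assumes the C2PA convention of storing the manifest in JPEG APP11 segments as JUMBF boxes labelled `c2pa`; `app11_payloads` and `has_c2pa_manifest` are hypothetical helpers written for this example. It only looks for the label, it does not verify any signatures, and it can't see pixel-level watermarks like SynthID at all.

```python
import struct

SOI = b"\xff\xd8"  # every JPEG starts with these two bytes
APP11 = 0xEB       # marker code of the APP11 segments (assumed C2PA carrier)
SOS = 0xDA         # start of scan: compressed pixels follow, metadata is done
EOI = 0xD9         # end of image


def app11_payloads(data: bytes) -> list[bytes]:
    """Return the payload of every APP11 segment that precedes the image data."""
    if not data.startswith(SOI):
        raise ValueError("not a JPEG (missing SOI marker)")
    payloads: list[bytes] = []
    i = 2
    while i < len(data):
        if data[i] != 0xFF:
            raise ValueError(f"expected a marker at offset {i}")
        # Skip 0xFF fill bytes; the first non-0xFF byte is the marker code.
        while i < len(data) and data[i] == 0xFF:
            i += 1
        if i >= len(data):
            break
        marker = data[i]
        i += 1
        if marker in (SOS, EOI):
            break
        if 0xD0 <= marker <= 0xD7 or marker == 0x01:
            continue  # standalone markers have no length field
        if i + 2 > len(data):
            raise ValueError("truncated segment header")
        # The big-endian length counts its own two bytes plus the payload.
        (length,) = struct.unpack(">H", data[i:i + 2])
        if length < 2:
            raise ValueError(f"invalid segment length at offset {i}")
        if marker == APP11:
            payloads.append(data[i + 2:i + length])
        i += length
    return payloads


def has_c2pa_manifest(data: bytes) -> bool:
    """Heuristic only: does any APP11 payload contain the JUMBF label 'c2pa'?

    This does NOT validate the manifest or its signatures.
    """
    return any(b"c2pa" in payload for payload in app11_payloads(data))
```

Usage would look like `has_c2pa_manifest(open("photo.jpg", "rb").read())`. A `False` result proves nothing, since re-saving or screenshotting an image usually drops this metadata. For real verification, use the C2PA project's own tooling, such as `c2patool`.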

- Better Detection: Companies like Reality Defender are building AI to catch AI. They look for tiny glitches in the pixels—like weird ear shapes or inconsistent reflections in the eyes—that humans might miss.

But let’s be real: detection is always one step behind generation. It’s an arms race where the "bad guys" have a head start.

Why We Can't Just "Ignore It"

Some people say, "It’s just a fake picture, who cares?"

That's a pretty shortsighted take. The problem is that once these images are out there, they are permanent. They show up in search results. They get sent to family members. They are used to blackmail people. For a celebrity, it’s a PR nightmare. For a private citizen, it can end a career or cause a total mental health breakdown.

The Taylor Swift deepfake scandal wasn't just about one singer. It was the moment the public realized that our digital identities are incredibly fragile. We've spent twenty years uploading our lives to the cloud, and now the cloud has learned how to remix us into things we never consented to.

Actionable Steps: How to Protect Yourself and Others

You can't completely "AI-proof" your life if you're on the grid, but you can make yourself a harder target. Here is the move:

Lock Down Your Socials. If you have a public Instagram or Facebook with thousands of selfies, you've handed anyone a ready-made training set, and fine-tuning a model on a face takes surprisingly little data or effort. If you aren't a public figure, keep your high-res photos behind a private account.

Use the "Report" Tools Properly. If you see deepfake content on X or Reddit, don't just scroll past. Reporting it as "Non-consensual Intimate Imagery" (NCII) can trigger different, often faster, moderation paths than reporting it for "harassment."

Support the Right Organizations. StopNCII.org, run by the UK's Revenge Porn Helpline, helps victims get images removed by creating digital "hashes" (digital fingerprints) of the offending photos. The hash is generated on the victim's own device, so the image itself never has to be uploaded or seen by anyone else; participating platforms can then automatically detect and block matching uploads.
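The "digital fingerprint" idea is easier to grasp with a toy example. StopNCII.org uses its own perceptual hashing (reportedly Meta's open-source PDQ algorithm); the Python sketch below swaps in a much simpler technique called a difference hash (dHash) just to show the principle. Unlike a cryptographic hash, a perceptual hash changes only a little when the image changes a little, so hashes are compared by counting differing bits (the Hamming distance). The image format (rows of 0-255 grayscale values) and the `MATCH_THRESHOLD` of 10 bits are hypothetical choices for illustration.

```python
def resize_gray(pixels: list[list[int]], width: int, height: int) -> list[list[float]]:
    """Shrink a grayscale image by averaging the source pixels in each output cell."""
    src_h, src_w = len(pixels), len(pixels[0])
    out: list[list[float]] = []
    for ty in range(height):
        y0 = ty * src_h // height
        y1 = max(y0 + 1, (ty + 1) * src_h // height)
        row: list[float] = []
        for tx in range(width):
            x0 = tx * src_w // width
            x1 = max(x0 + 1, (tx + 1) * src_w // width)
            cell = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(cell) / len(cell))
        out.append(row)
    return out


def dhash(pixels: list[list[int]], hash_size: int = 8) -> int:
    """Difference hash: one bit per horizontally adjacent pair of cells.

    The image is shrunk to (hash_size + 1) x hash_size cells, and each bit
    records whether a cell is brighter than its right-hand neighbour, so a
    uniform brightness shift leaves the comparisons (and the hash) unchanged.
    """
    small = resize_gray(pixels, hash_size + 1, hash_size)
    bits = 0
    for row in small:
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | int(left > right)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


MATCH_THRESHOLD = 10  # hypothetical cut-off for "probably the same image"


def is_probable_match(a: int, b: int) -> bool:
    return hamming(a, b) <= MATCH_THRESHOLD


if __name__ == "__main__":
    # Synthetic 72x64 grayscale image: a simple left-to-right gradient.
    original = [[3 * x for x in range(72)] for _ in range(64)]
    brighter = [[p + 10 for p in row] for row in original]
    inverted = [[255 - p for p in row] for row in original]

    h = dhash(original)
    print(hamming(h, dhash(brighter)))  # 0: brightening keeps every bit
    print(hamming(h, dhash(inverted)))  # 64: every comparison flips
```

The brightened copy matches bit for bit while the inverted one differs in all 64 bits. Only the short hash ever needs to leave the device, which is the whole point of the design.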

Lobby for Change. If you're in the US, check out the progress of the NO FAKES Act. It’s one of the few pieces of legislation that actually has broad support from both sides of the aisle.

The tech isn't going away. We aren't going to un-invent generative AI. But we can decide as a society that there are some lines you just don't cross. The Taylor Swift deepfake situation was the wake-up call. Now we actually have to wake up and do something about it.

The internet is a tool, not a lawless wasteland. Or at least, it shouldn't be. Keeping it that way requires more than just better code; it requires better laws and a massive shift in how we view digital consent.


Stay vigilant. Turn on your privacy settings. And maybe think twice before you post that 4K headshot to a public forum. It’s a weird new world out there.

To stay updated on digital safety, you should regularly audit your online presence. Search for your own name and check the "Images" tab to see what’s publicly available. If you find something suspicious, use tools like Google’s "Results about you" dashboard to request the removal of personal contact info or sensitive imagery. Moving forward, the most effective defense is a combination of legislative pressure on platforms and personal data hygiene.