You're staring at a math problem or a spreadsheet and suddenly the labels don't make sense. Is a zero a whole number? Does a negative five count as an integer? Most people treat them like they're the same thing. They aren't. Honestly, it's one of those tiny distinctions that doesn't matter until it absolutely ruins your code or your tax return.

Numbers are the language of everything we build. But we've gotten sloppy with the vocabulary. When we talk about whole numbers versus integers, we're really talking about where the "floor" of your number system lives. For whole numbers, the floor is zero. For integers, there is no floor. It just keeps going down into the basement forever.

Let's get the definitions out of the way before we look at why this breaks things in the real world.

The Zero Problem: Where Whole Numbers Begin

A whole number is basically what a toddler understands before they learn about debt or getting cold. It’s 0, 1, 2, 3, and so on. You’ve got no fractions here. No decimals. If you try to bring a 2.5 to the whole number party, you're getting kicked out.

There's actually a bit of a nerd-war over "natural numbers" versus "whole numbers." Whether the natural numbers start at 0 or at 1 is a convention, and it varies by field and by country. Many mathematicians start them at 1 and call them "counting numbers," because nobody starts counting their sheep with "Zero, one, two..." Set theorists, logicians, and a lot of computer scientists start them at 0. The convention in most American school curricula, and the one this article uses, is:

- Natural Numbers: 1, 2, 3...

- Whole Numbers: 0, 1, 2, 3...

The only difference is that tiny, circular void we call zero. If you include the zero, you’re in whole number territory.

Entering the Basement: The Integer Reality

Integers are the older, more depressed sibling of the whole number. They include everything the whole numbers have, but they also drag in the negatives. We’re talking ...-3, -2, -1, 0, 1, 2, 3...

Think of it like an elevator in a fancy hotel. Whole numbers only let you see the lobby and the floors above. Integers give you the keycard to the underground parking garage and the creepy boiler room in the sub-basement.

In mathematics, we use the symbol $\mathbb{Z}$ for integers. Why Z? It comes from the German word Zahlen, which just means "numbers." This isn't just trivia. When you see a $\mathbb{Z}$ in a technical manual or a data science paper, the authors are telling you that negative values are allowed. If they use $\mathbb{N}$ (or $\mathbb{W}$, which some American textbooks use for whole numbers), they are telling you that anything below zero is out of bounds. Check which convention they follow, though: depending on the author, $\mathbb{N}$ may or may not include zero.

Why Does This Distinction Even Matter?

You might think I'm splitting hairs. I'm not.

In software engineering, specifically when you're designing database tables, you have to define your column "types." In MySQL, if you declare a column as INT UNSIGNED, you are essentially telling the database to treat it as a whole number. It cannot be negative. (PostgreSQL has no unsigned integer types; there you get the same guarantee with a CHECK constraint such as CHECK (age >= 0).) This is great for things like "Age" or "Number of items in a cart." You can't be -5 years old.


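But if you use that same logic for a "Bank Balance" column, you're in trouble. The moment someone overdraws their account, there's no valid way to store the negative number. MySQL in strict SQL mode (the default in modern versions) rejects the value with an out-of-range error. Worse, in application code that does unsigned arithmetic, as C and C++ do, the subtraction silently wraps around to a massive positive number. This wraparound bug is usually filed under integer overflow, and suddenly your broke customer has about $4 billion.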
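To see that wraparound in action, here is a minimal sketch. Python's own integers never overflow, so it borrows the standard library's `ctypes.c_uint32` and `ctypes.c_int32`, which do no overflow checking, to mimic a 32-bit unsigned and signed value. The function names and balances are hypothetical.

```python
import ctypes

def withdraw_unsigned(balance: int, amount: int) -> int:
    """Subtract the way a 32-bit unsigned integer would (wraps modulo 2**32)."""
    # c_uint32 silently wraps out-of-range values instead of raising an error
    return ctypes.c_uint32(balance - amount).value

def withdraw_signed(balance: int, amount: int) -> int:
    """Subtract the way a 32-bit signed integer would, for comparison."""
    return ctypes.c_int32(balance - amount).value

print(withdraw_unsigned(20, 25))  # 4294967291
print(withdraw_signed(20, 25))    # -5
```

Overdrawing by $5 in the unsigned version lands on 2**32 - 5, a little under $4.3 billion.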

The Real-World Impact of Misunderstanding Whole Numbers Versus Integers

Let's talk about temperature. If you're a scientist at McMurdo Station in Antarctica, you live and breathe integers. Whole numbers can't describe the weather there, because the temperature sits below zero Celsius for much of the year, and whole numbers have no way to go below zero.

On the flip side, consider "Total Population." You can't have a negative person. Using a signed integer type for population wastes half of the type's range. In the two's complement encoding used by virtually all modern hardware, the top bit effectively acts as the sign, so half of all possible bit patterns are spent on negative numbers you'll never need. By ditching the sign and sticking to whole numbers (an unsigned type), you can store roughly twice as large a maximum value in the same amount of space.
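The trade-off is easy to compute. This sketch assumes the standard two's complement layout for signed types; `int_range` is a hypothetical helper, not a library function.

```python
def int_range(bits: int, signed: bool) -> tuple[int, int]:
    """Smallest and largest value an integer type of this width can hold."""
    if signed:
        # Two's complement: half the bit patterns are negative numbers
        return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    # Unsigned: every bit pattern is spent on 0 and up
    return 0, 2 ** bits - 1

print(int_range(32, signed=True))   # (-2147483648, 2147483647)
print(int_range(32, signed=False))  # (0, 4294967295)
```

Notice that even the unsigned 32-bit maximum, about 4.3 billion, is smaller than today's world population of roughly 8 billion, so a real population counter would need a 64-bit type.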

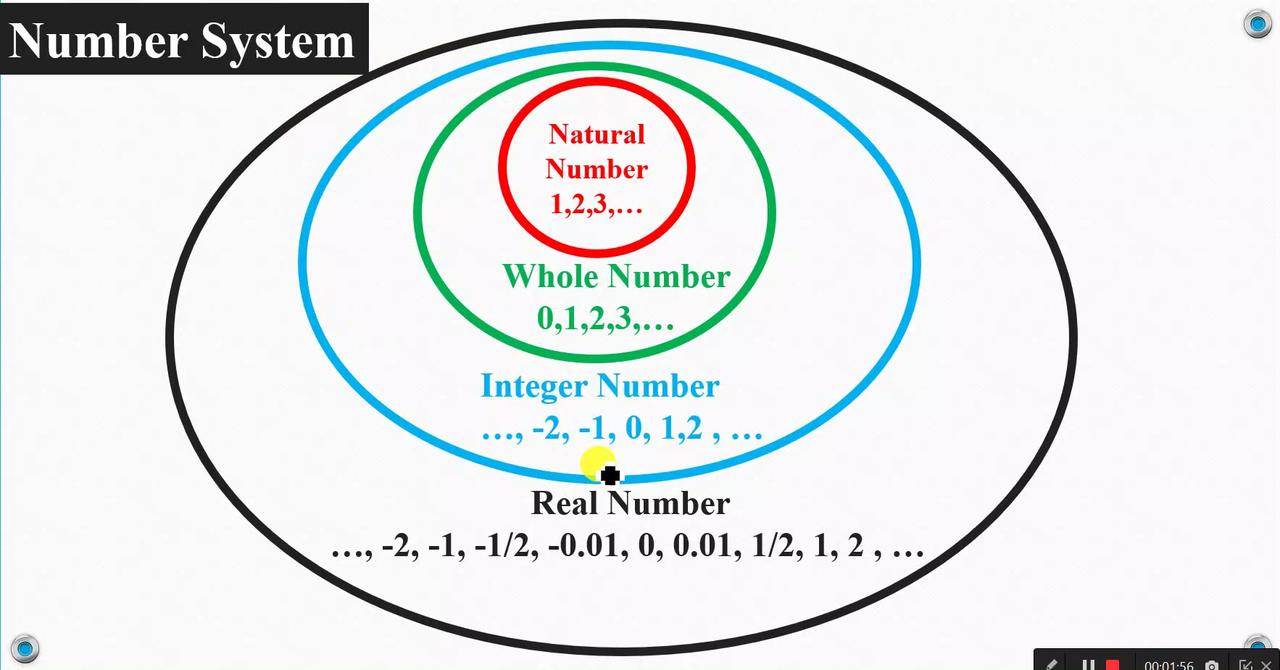

The Venn Diagram You Need to Visualize

It’s easiest to think of this as a set of Russian nesting dolls.

- At the very center, you have Natural Numbers (1, 2, 3).

- Wrapped around that are Whole Numbers (Natural Numbers + 0).

- Wrapped around that are Integers (Whole Numbers + Negatives).

- Wrapped around that are Rational Numbers (Integers + fractions, including terminating and repeating decimals like 0.5 or 0.333...).

Every whole number is an integer. Every natural number is a whole number. But it doesn't work the other way around. A -5 is an integer, but it sure isn't a whole number.
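The nesting-doll hierarchy can be expressed as a small classifier. This is a sketch, assuming values arrive as exact `fractions.Fraction` objects (so every input is rational) and using the "natural numbers start at 1" convention; `classify` is a hypothetical name.

```python
from fractions import Fraction

def classify(x: Fraction) -> list[str]:
    """Return every set in the hierarchy that x belongs to, outermost first."""
    sets = ["rational"]          # any Fraction is rational by construction
    if x.denominator == 1:       # no fractional part after reduction
        sets.append("integer")
        if x >= 0:
            sets.append("whole")
            if x >= 1:           # naturals start at 1 in this convention
                sets.append("natural")
    return sets

for value in [Fraction(5), Fraction(0), Fraction(-5), Fraction(5, 2)]:
    print(value, classify(value))
```

Because each check sits inside the previous one, a value can never be "whole" without also being "integer," which is exactly the one-way relationship described above.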

This hierarchy is why we get confused. Since 5 is both a whole number and an integer, we start using the terms interchangeably. We get lazy. Then we try to calculate the trajectory of a rocket or the interest on a loan, and the "lazy" vocabulary leads to "lazy" logic.

Misconception: "Integers are just 'Whole' Negatives"

I hear this a lot. People think integers are the "negative ones" and whole numbers are the "positive ones."

Nope.

Integers are the entire collection. If you have a bag of integers, and you reach in, you might pull out a -10, or you might pull out a 10. Both are integers. A whole number is just a subset that refuses to deal with the negativity.

Technical Depth: The Set Theory Perspective

If you look at the work of Georg Cantor or Richard Dedekind—the giants of set theory—they didn't care much about the "labels" we use in 5th grade. They cared about the properties.

Whole numbers are closed under addition and multiplication. This means if you add or multiply two whole numbers, you always get another whole number. 2 + 5 = 7. 2 × 5 = 10. Easy.

But whole numbers are not closed under subtraction. 2 - 5 = -3.

Wait. -3 isn't a whole number.

This is exactly why mathematics needed the integers. We needed a set of numbers that was "closed" under subtraction: a system where you could subtract any number from any other number and still stay within the same family. Integers fixed that.
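You can hunt for closure failures mechanically. This sketch checks every pair from a small, hypothetical sample of whole numbers (0 through 5). A finite sample can only illustrate closure, not prove it, but a single counterexample is enough to show that closure fails. Since adding, multiplying, or subtracting Python integers always gives an integer, the only way to leave the whole numbers is to go negative.

```python
import itertools
import operator

WHOLE_SAMPLE = range(6)  # a finite sample of whole numbers: 0..5

def counterexamples(op, sample):
    """Pairs (a, b) whose result falls outside the whole numbers."""
    return [(a, b) for a, b in itertools.product(sample, repeat=2)
            if op(a, b) < 0]  # integer results only escape by going negative

print(counterexamples(operator.add, WHOLE_SAMPLE))      # []
print(counterexamples(operator.mul, WHOLE_SAMPLE))      # []
print(counterexamples(operator.sub, WHOLE_SAMPLE)[:3])  # [(0, 1), (0, 2), (0, 3)]
```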

Coding and Data Science: The "Int" Confusion

In Python, Java, or C++, you'll see the type name int.


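In most languages, int means a signed integer: a 32-bit one in Java and C#, usually 32 bits in C++ on mainstream platforms, and one with no fixed size limit in Python. But here's where it gets weird: some languages give "whole numbers" their own types. C and C++ have unsigned int, and C# has uint (unsigned integer). These are literally the programming version of a whole number. Java, by contrast, has no unsigned int type at all.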

If you're a data analyst using Excel, you might use the INT() function. Fun fact: INT() doesn't check whether a number is an integer; it rounds a number down to the nearest integer. So INT(8.9) becomes 8. But INT(-8.9) becomes -9. If you just want to chop off the decimal part, Excel's TRUNC() does that: TRUNC(-8.9) returns -8.
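If you move that logic out of Excel, the closest Python equivalents are `math.floor` (round toward negative infinity, like INT()) and `math.trunc` (drop the fractional part, like TRUNC() with no digits argument). The wrapper names below are hypothetical; this is a sketch of matching behavior, not a port of Excel.

```python
import math

def excel_int(x: float) -> int:
    """Mimic Excel's INT(): round down toward negative infinity."""
    return math.floor(x)

def excel_trunc(x: float) -> int:
    """Mimic Excel's TRUNC(x) with no digits argument: drop the fraction."""
    return math.trunc(x)

for x in (8.9, -8.9):
    print(x, excel_int(x), excel_trunc(x))
# 8.9 8 8
# -8.9 -9 -8
```

The two only disagree for negative inputs, which is exactly where spreadsheet data tends to get quietly skewed.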

This is where the whole number versus integer debate moves from the classroom to the cubicle. If you don't understand how your specific software handles the "floor" of a number, your data is going to be slightly skewed. And "slightly skewed" is how bridges fall down or why your paycheck is $0.12 short.

A Note on Decimals

Neither whole numbers nor integers allow fractional parts.

4.0 is technically an integer value, but in the world of computing, 4.0 is often stored as a "float" or a "double." Even if there's nothing after the decimal point, the presence of the point changes how the computer's CPU processes the math.

To a mathematician, 4 and 4.0 are the same. To a computer, 4 is usually an integer and 4.0 a floating-point number. They are stored in different binary formats and typically processed by different execution units (integer ALUs versus floating-point units).
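Python makes the split easy to see. The sketch below only inspects types and values; it says nothing about which hardware unit runs the math, which Python does not expose.

```python
a = 4
b = 4.0

print(type(a).__name__, type(b).__name__)  # int float
print(a == b)              # True: equal as numbers
print(isinstance(b, int))  # False: 4.0 is stored as a float, not an int
print(b.is_integer())      # True: the float has no fractional part
print(int(b))              # 4: explicit conversion back to an int
```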

Practical Ways to Remember the Difference

If you're struggling to keep these straight for a test or a project, try these mental shortcuts:

- Whole has an "o" in it, which looks like a zero. So, whole numbers include zero.

- Integer starts with an I, which looks like a straight line. Think of the number line stretching forever in both directions (negative and positive).

- Whole implies something complete and not "broken" into pieces (no fractions), and it also implies starting from nothing and going up (no negatives).

Honestly, the easiest way is to just remember that "integer" is the bigger, more inclusive category. If it's a "clean" number (no fractional part), it's an integer. If it's a "clean" number that isn't negative, it's also a whole number.

Summary of the Key Differences

Look at it this way:

- Range: Whole numbers go from 0 to infinity. Integers go from negative infinity to positive infinity.

- Sign: Whole numbers are never negative. Integers can be negative, positive, or zero.

- Visual: A whole number line has a hard stop on the left side. An integer number line has arrows on both ends.

- Usage: Use whole numbers for counting objects (3 cars, 0 apples). Use integers for measuring change (a 10-degree drop, a $50 debt).

Actionable Next Steps

If you're working with data or math, here is how you can apply this knowledge today:

1. Audit your data types.

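If you are building a spreadsheet or a database, look at your columns. If you have a column for "Quantity," restrict it to whole numbers: in a spreadsheet, use a data validation rule that allows only whole numbers with a minimum of 0; in MySQL, declare the column UNSIGNED; in databases without unsigned types, such as PostgreSQL or SQLite, add a CHECK constraint. This prevents accidental negative entries that could mess up your inventory logic.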
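SQLite, which ships with Python's standard library, has no UNSIGNED type, so this sketch uses a CHECK constraint instead. The table name, column names, and rows are hypothetical. The `typeof(quantity) = 'integer'` part also rejects fractional quantities, which an INTEGER column in SQLite would otherwise store as a real number.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical inventory table: quantity must be an integer that is not negative
conn.execute("""
    CREATE TABLE inventory (
        sku      TEXT PRIMARY KEY,
        quantity INTEGER NOT NULL
                 CHECK (typeof(quantity) = 'integer' AND quantity >= 0)
    )
""")

conn.execute("INSERT INTO inventory VALUES (?, ?)", ("widget", 3))

rejected = []
for sku, qty in [("gadget", -2), ("gizmo", 2.5)]:
    try:
        conn.execute("INSERT INTO inventory VALUES (?, ?)", (sku, qty))
    except sqlite3.IntegrityError:
        rejected.append(sku)  # the CHECK constraint refused the value

print(rejected)  # ['gadget', 'gizmo']
print(conn.execute("SELECT sku, quantity FROM inventory").fetchall())
# [('widget', 3)]
```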

2. Check your rounding functions.

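If you're using software to "integer-ize" your data, check whether it rounds, truncates, or floors. Truncating 4.9 gives you 4. Rounding 4.9 gives you 5. For negative numbers, truncating and flooring part ways: truncating -4.9 gives -4, while flooring it gives -5. Many programming languages (C and Java casts, Python's int()) truncate toward zero, while Excel's INT() floors toward negative infinity.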
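A quick way to audit a pipeline is to run the same values through each strategy side by side. This is a sketch using only Python built-ins; `compare` is a hypothetical helper. Note that Python's `round()` sends exact ties to the nearest even integer, so `round(2.5)` is 2, which may differ from the rounding rule your other tools use.

```python
import math

def compare(x: float) -> dict[str, int]:
    """Show how common 'make it an integer' strategies treat the same value."""
    return {
        "int()": int(x),              # truncates toward zero
        "math.trunc": math.trunc(x),  # same as int() for floats
        "math.floor": math.floor(x),  # toward negative infinity, like Excel INT()
        "round": round(x),            # nearest integer; ties go to the even one
    }

for x in (4.9, -4.9, 2.5):
    print(x, compare(x))
```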

3. Use the right terminology in documentation.

If you're writing a report, don't say "The average number of users was an integer." It probably wasn't. It was likely a rational number (a decimal). If you mean that the count must be a non-negative non-decimal, call it a "whole number." It makes you look like you actually know what you're talking about.

4. Map it out.

If you're teaching this to someone else, draw the number line. Seeing it on paper is one of the quickest ways to separate the "starting at zero" concept from the "infinite in both directions" concept.

The distinction between a whole number and an integer might seem like pedantic nonsense, but it's the foundation of logic. Once you get the floor right, the rest of the math usually falls into place.