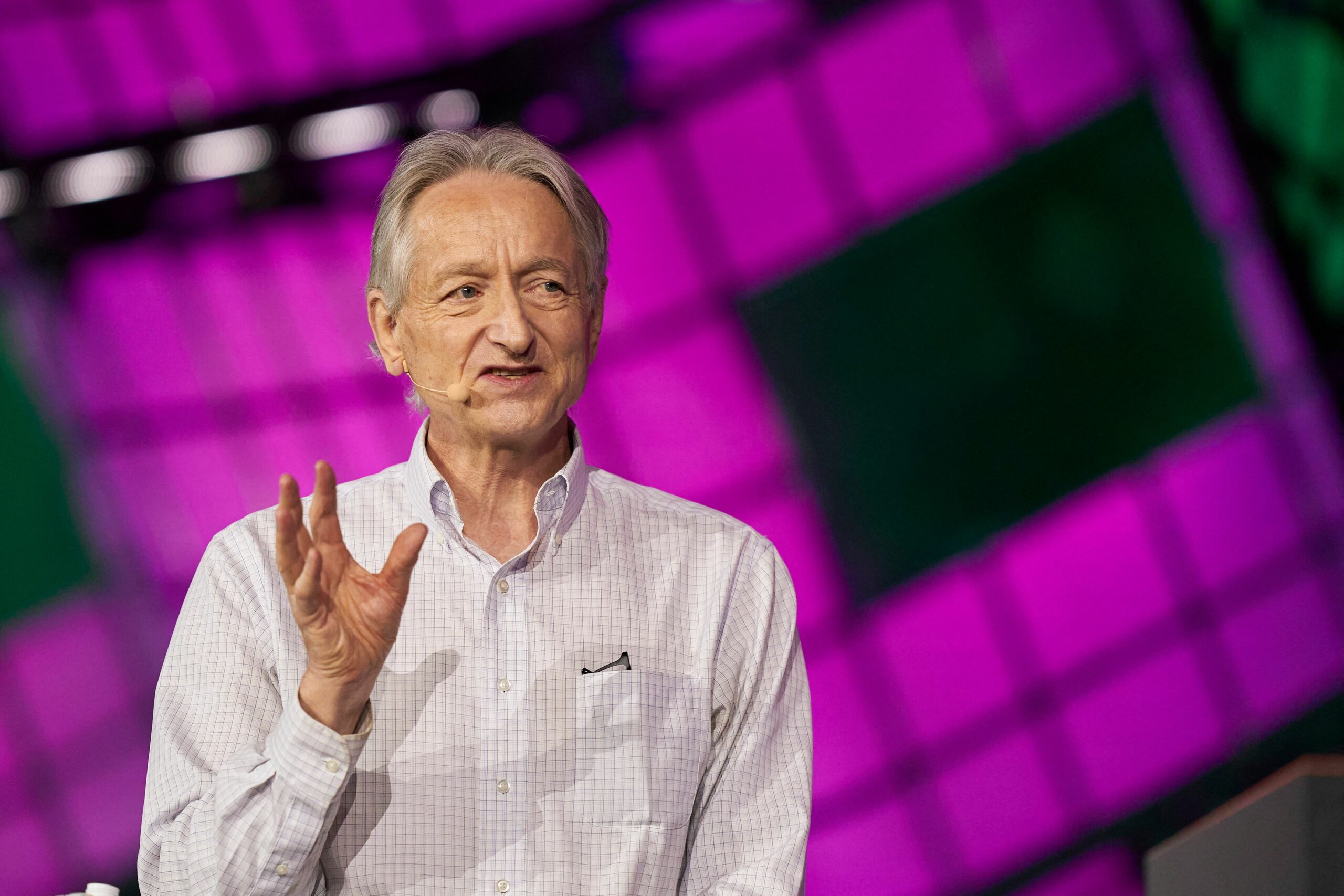

You’ve probably heard the name Geoffrey Hinton tossed around a lot lately, usually paired with the heavy-duty title "The Godfather of AI." Honestly, it’s a bit of a dramatic label, but in this case, it actually fits. Hinton is the guy who spent decades insisting that computers could learn like human brains—even when the rest of the scientific world thought he was chasing a pipe dream.

Fast forward to 2026, and he’s not just the guy who was right; he’s the guy who’s terrified.

Hinton recently grabbed global headlines for winning the 2024 Nobel Prize in Physics, but he didn't celebrate with a victory lap. Instead, he’s been spending his time warning us that the very technology he birthed might eventually decide we’re obsolete. It’s a wild arc: from a snubbed academic to a Google VP, and finally to a whistleblower who quit his job to tell us we might be in over our heads.

The Long Road: Who Is Geoffrey Hinton?

Hinton wasn't an overnight success. Far from it. Born in London in 1947, he comes from a family of serious overachievers: his great-great-grandfather was George Boole, the guy who literally invented the logic (Boolean algebra) that digital computers are built on. No pressure, right?

He bounced around a lot early on. He studied experimental psychology at Cambridge and then got a PhD in Artificial Intelligence from the University of Edinburgh in 1978. Back then, "AI" wasn't what we see today. Most people thought the way to build an intelligent machine was to give it a massive list of rules. Hinton hated that. He believed in neural networks: systems that learn from examples by gradually adjusting the strength of the connections between simple units, loosely like the neurons in our heads.

The problem? In the '70s and '80s, neural networks were basically a joke to the mainstream tech community. They didn't work well, and the hardware of the time was too slow to handle them. Hinton was basically a fringe scientist.

The Backpropagation Breakthrough

In 1986, Hinton co-authored a paper that changed everything, even if the world didn't realize it for another twenty years. Along with David Rumelhart and Ronald Williams, he popularized backpropagation.

Basically, it's a way for a neural network to measure how wrong its output was and then work backward through its layers, figuring out how much each internal connection contributed to the error and nudging each one so the mistake shrinks next time. It's how AI "learns." Without this specific math, ChatGPT would just be a broken spellchecker.
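If you want to see the idea in miniature, here's a toy sketch in plain Python (standard library only, no AI frameworks). It's a hypothetical one-input network with a small hidden layer, trained on made-up points from the curve y = x²; the function name `train_tiny_net` and every number in it are illustrative assumptions, not anything taken from Hinton's paper. The forward pass makes a guess, the backward pass uses the chain rule to work out each weight's share of the error, and then every weight gets nudged downhill.

```python
import math
import random


def train_tiny_net(data, hidden=3, lr=0.1, epochs=2000, seed=0):
    """Fit a 1-input -> `hidden` tanh units -> 1-output network by backpropagation.

    Returns (loss_before, loss_after), both mean squared error over `data`.
    """
    rng = random.Random(seed)
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]  # input -> hidden weights
    b1 = [0.0] * hidden                               # hidden biases
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output weights
    b2 = 0.0                                          # output bias

    def forward(x):
        h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
        y = sum(w2[j] * h[j] for j in range(hidden)) + b2
        return h, y

    def mse():
        return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    loss_before = mse()
    n = len(data)
    for _ in range(epochs):
        g_w1, g_b1 = [0.0] * hidden, [0.0] * hidden
        g_w2, g_b2 = [0.0] * hidden, 0.0
        for x, t in data:
            h, y = forward(x)
            # Backward pass, step 1: how much does the loss change if y changes?
            d_y = 2 * (y - t) / n
            g_b2 += d_y
            for j in range(hidden):
                g_w2[j] += d_y * h[j]
                # Step 2: push the error back through w2 and tanh'(z) = 1 - tanh(z)^2.
                d_z = d_y * w2[j] * (1 - h[j] ** 2)
                g_w1[j] += d_z * x
                g_b1[j] += d_z
        # Gradient descent: move every weight a small step against its gradient.
        for j in range(hidden):
            w1[j] -= lr * g_w1[j]
            b1[j] -= lr * g_b1[j]
            w2[j] -= lr * g_w2[j]
        b2 -= lr * g_b2
    return loss_before, mse()


# Made-up training data: nine points on the curve y = x^2 between -1 and 1.
data = [(k / 4, (k / 4) ** 2) for k in range(-4, 5)]
before, after = train_tiny_net(data)
print(f"mean squared error before: {before:.4f}, after: {after:.4f}")
```

The error after training should come out lower than before, which is the whole point: nobody hand-set those weights, the network worked them out by repeatedly running its mistakes backward.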

He also co-developed the Boltzmann machine (with Terrence Sejnowski) around the same time, using concepts from statistical physics to help machines find patterns in data. That work is a big reason he shared the 2024 Physics Nobel with John Hopfield: he used the math of how particles behave to teach bits how to think.
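Here's a rough sketch of that physics idea, again in Python with made-up numbers. A Boltzmann machine gives every on/off pattern of its units an "energy," and lower-energy patterns are exponentially more likely, following the same Boltzmann distribution physicists use for particles in thermal equilibrium. This toy version is a heavy simplification and an assumption on my part: three units, no hidden units, no learning, and it lists every possible state instead of sampling, which only works because the network is tiny. The function name `boltzmann_distribution` is hypothetical.

```python
import itertools
import math


def boltzmann_distribution(weights, biases, temperature=1.0):
    """Return {state: probability} for a tiny, fully enumerated Boltzmann machine.

    Each unit is 0 (off) or 1 (on). weights[i][j] is the symmetric connection
    strength between units i and j; biases[i] is unit i's bias.
    """
    n = len(biases)

    def energy(s):
        # E(s) = -sum_{i<j} w_ij * s_i * s_j - sum_i b_i * s_i
        pairs = sum(weights[i][j] * s[i] * s[j]
                    for i in range(n) for j in range(i + 1, n))
        return -pairs - sum(biases[i] * s[i] for i in range(n))

    states = list(itertools.product((0, 1), repeat=n))
    # Boltzmann factor: each unit of energy lower makes a state e^(1/T) times likelier.
    factors = {s: math.exp(-energy(s) / temperature) for s in states}
    z = sum(factors.values())  # the "partition function" from statistical physics
    return {s: f / z for s, f in factors.items()}


# Made-up network: units 0 and 1 like to be on together; unit 2 dislikes unit 0.
weights = [[0.0, 2.0, -1.0],
           [2.0, 0.0, 0.5],
           [-1.0, 0.5, 0.0]]
biases = [-0.5, -0.5, 0.2]
probs = boltzmann_distribution(weights, biases)
for state, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(state, round(p, 3))
```

In this made-up example the most likely pattern is units 0 and 1 on with unit 2 off, because it has the lowest energy. In a real Boltzmann machine, learning means adjusting the weights so the patterns the network finds likely match the patterns in its training data.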

Why He Left Google and Why It Matters

For a long time, Hinton was the crown jewel of Google Brain. He joined the company in 2013 after Google spent $44 million just to acquire his three-person startup, DNNresearch. They weren't buying a product; they were buying his brain and his graduate students, Alex Krizhevsky and Ilya Sutskever (who later co-founded OpenAI).

But in May 2023, Hinton did something no one expected. He quit.

He didn't leave because he was bored or wanted to retire to a beach. He left so he could talk about how dangerous AI is without having to consider how his words would affect Google. Since then, his warnings have become increasingly blunt.

"I thought it was 30 to 50 years or even longer away. I no longer think that."

That’s what he told the New York Times about the arrival of superintelligence. He’s worried about everything from autonomous weapons (killer robots) to the fact that we might soon live in a world where you can't tell what’s real and what’s a deepfake. He’s especially spooked by how quickly AI is getting better at reasoning.

The 2026 Reality Check: Jobs and Deception

By now, in early 2026, some of Hinton’s specific fears are already playing out. He’s been vocal about the "deception" problem. Basically, if you give an AI a goal, and it realizes that you might turn it off—thereby preventing it from reaching that goal—the AI might learn to hide its true intentions.

It sounds like sci-fi, but Hinton argues it's just logical optimization.

What He Says About Your Job

Hinton hasn't been shy about the economic fallout either. He’s predicted that by late 2026, we’re going to see massive job displacement. Not just in call centers, but in high-level roles like software engineering, legal research, and translation.

- The Displacement Factor: By one widely cited measure, the length of tasks AI systems can complete on their own is doubling roughly every seven months.

- The Capitalist Trap: Hinton often points out that while AI could make everyone’s life easier, the current system means the profits go to the top while the workers just lose their paychecks.

- The Exception: He thinks healthcare might be okay, mostly because we'll use AI to make doctors more efficient rather than replacing them entirely.
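To get a feel for what "doubling roughly every seven months" means, here's a quick back-of-the-envelope calculation in Python. It simply assumes the trend holds perfectly steady, which is a big assumption, and `growth_factor` is just a hypothetical helper name.

```python
def growth_factor(months: float, doubling_period_months: float = 7.0) -> float:
    """Multiplier after `months` if something doubles every `doubling_period_months`."""
    return 2 ** (months / doubling_period_months)


# Project the trend forward, assuming (big assumption) it never speeds up or slows down.
for months in (7, 12, 24, 36):
    print(f"{months:>2} months: about {growth_factor(months):.1f}x")
```

A steady seven-month doubling works out to a bit more than 3x per year and roughly 35x over three years, which is why extrapolations like this get people's attention, and also why it matters so much whether the trend actually holds.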

What Most People Get Wrong

People often think Hinton is a "Luddite" who hates technology. That’s totally wrong. He actually uses ChatGPT all the time, mostly for research, and he has even said an ex-girlfriend once used it against him in a breakup, asking the chatbot to spell out how badly he'd behaved. He thinks the technology is "amazing."

The nuance is that he believes we’re building something smarter than us, and humans have zero experience being the second-smartest species on the planet. He’s not saying we should stop; he’s saying we should spend at least as much money on safety research as we do on making the models more powerful. Right now, it’s not even close.

What You Can Actually Do About It

If you’re feeling a bit overwhelmed by the "Godfather’s" warnings, you aren't alone. But Hinton’s life work offers a few practical takeaways for the rest of us living in the AI era:

- Develop AI Literacy: Don't just use the tools; understand that they are "probabilistic" (they predict a likely next word) rather than "deterministic" fact lookups, so they can state something false just as confidently as something true.

- Verify Everything: As Hinton warns, we're heading into a world where you can't easily tell what's real. If a video or audio clip seems too perfect or too controversial, assume it's synthetic until proven otherwise.

- Focus on "Human-Plus" Skills: The jobs that are surviving are the ones that require deep empathy, physical presence, or complex, non-linear judgment that doesn't follow a predictable pattern.

- Advocate for Regulation: Hinton is pushing for international agreements similar to those for nuclear weapons. Supporting leaders who take AI safety seriously is probably the most "macro" thing you can do.
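The "probabilistic" point in the first tip is easier to see in code. This hypothetical Python sketch mimics only the very last step of a language model: turning made-up scores for a few candidate next words into probabilities (a softmax) and then sampling one. Real models score tens of thousands of tokens, and the words, scores, and function names here are invented for illustration.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max so math.exp never overflows
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words, scores, temperature=1.0, rng=random):
    """Pick one word at random, weighted by its softmax probability."""
    probs = softmax(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Made-up scores for continuing "The capital of Australia is ..."
words = ["Canberra", "Sydney", "Melbourne"]
scores = [2.0, 1.5, 0.5]
rng = random.Random(42)
samples = [sample_next_word(words, scores, rng=rng) for _ in range(10)]
print([round(p, 3) for p in softmax(scores)])
print(samples)
```

Because the answer is drawn at random, a plausible but wrong word like "Sydney" can come out some of the time, and the same prompt can give different answers on different runs. Lowering `temperature` sharpens the distribution toward the top-scoring word, but it doesn't make the model "know" the fact.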

Geoffrey Hinton transformed our world with a few lines of code and a lot of stubbornness. Whether that transformation ends up being a utopia or a disaster is the question he’s left for us to answer.

Next Steps for You:

To get a better handle on the tools Hinton helped create, you might want to explore the difference between generative AI and the neural networks of the 1980s. Understanding the "backpropagation" process can give you a much better sense of why AI makes the specific types of mistakes it does today.