You’ve probably stared at that massive 55-inch 4K OLED in your living room and thought, "Man, my spreadsheets would look incredible on that." It makes sense. Why spend $500 on a cramped 27-inch productivity screen when you have a literal wall of pixels sitting ten feet away? Honestly, the line between a high-end television and a high-end computer monitor has blurred so much in the last few years that the distinction feels almost arbitrary. But if you just plug an HDMI cable into your laptop and hope for the best, you're probably going to hate the experience within twenty minutes.

It's grainy. The mouse feels like it's moving through molasses. The text has this weird, blurry glow that makes your eyes throb. Using a TV as a monitor isn't as simple as "plug and play." It's a delicate balance of refresh rates, chroma subsampling, and input lag that most people completely ignore until they're squinting at a pixelated mess.

Why Your TV Looks Like Hot Garbage Initially

The biggest hurdle is something called chroma subsampling. TVs are designed for video. When you watch The Bear on Hulu, your eyes don't really notice if the color data is slightly compressed to save bandwidth. Many TVs default to 4:2:2 or 4:2:0 subsampling, which keeps brightness at full resolution but shares color information across neighboring pixels (pairs for 4:2:2, 2x2 blocks for 4:2:0). This is fine for movies, but for a PC? It's a disaster. Desktop use involves high-contrast, sharp edges, specifically small text. Colored text, and text smoothed with subpixel rendering like ClearType, suffers the most: in a 4:2:0 environment it will look "fringed" with red or blue artifacts. To make this work, your TV must support 4:4:4 chroma, which keeps every single pixel's color data individually. Without it, your "monitor" is basically a glorified blurry projector.
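
To see why shared color data smears fine detail, here's a rough Python sketch. It assumes the simplest possible form of 4:2:0 (chroma averaged over each 2x2 block, then copied back to all four pixels), and the function name `subsample_420` is made up for illustration. Real TVs and video pipelines use fancier filters, so treat this as a toy model, not what your set actually does.

```python
def subsample_420(chroma):
    """Average a chroma plane over 2x2 blocks, then expand back to full size.

    `chroma` is a list of rows with even width and height. This mimics the
    simplest form of 4:2:0: one color sample shared by four pixels.
    """
    height, width = len(chroma), len(chroma[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(0, height, 2):
        for x in range(0, width, 2):
            # Collect the four color values in this 2x2 block and average them.
            block = [chroma[y + dy][x + dx] for dy in (0, 1) for dx in (0, 1)]
            avg = sum(block) / 4
            # Every pixel in the block now shares the same averaged color.
            for dy in (0, 1):
                for dx in (0, 1):
                    out[y + dy][x + dx] = avg
    return out


# A one-pixel-wide colored vertical line (1.0) on a neutral background (0.0).
line = [[0.0, 1.0, 0.0, 0.0] for _ in range(4)]
blurred = subsample_420(line)
print(blurred[0])  # [0.5, 0.5, 0.0, 0.0]
```

The one-pixel line comes back two pixels wide at half strength. Brightness stays sharp in real 4:2:0, so what you see on screen is a crisp edge with a washed-out color halo next to it, which is the fringing described above.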

Then there’s the Input Lag issue. Modern TVs do a massive amount of "post-processing." They try to smooth out motion, enhance colors, and upscale lower-resolution content using AI chips like LG’s Alpha 9 or Sony’s Cognitive Processor XR. This takes time. Only milliseconds, sure, but it creates a delay between you moving the mouse and the cursor actually moving on the screen. It feels heavy. To fix this, you almost always have to toggle "Game Mode." This kills the processing and cuts the latency down. If your TV doesn't have a dedicated low-latency mode, don't even bother using it for work; the disconnect between your hand and your eyes will give you a headache.

The Physical Reality of Desk Space

Size is the enemy of ergonomics. If you put a 48-inch TV on a standard 30-inch deep desk, you're going to be turning your entire head just to check the clock in the bottom right corner of Windows. That is a one-way ticket to neck strain. Experts in ergonomics, like those at Herman Miller, generally suggest your eyes should be level with the top third of the screen. With a giant TV, the top of the screen is basically in the clouds.

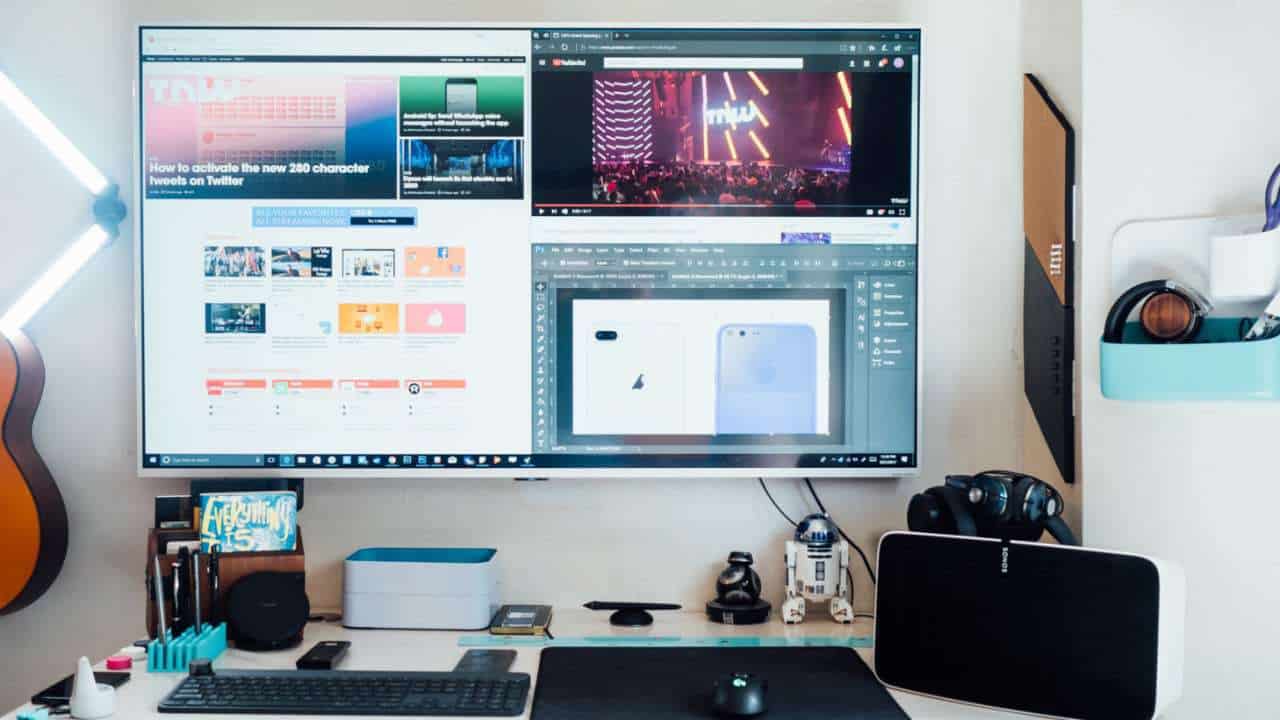

You need distance. A lot of it. For a 4K 42-inch screen—widely considered the "sweet spot" for this setup—you really want about three to four feet of clearance. Most people don't have desks that deep. You might end up needing a floor stand or a heavy-duty wall mount to push the TV back further than the desk actually reaches.
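
If you want to put a number on the head-turning problem, a bit of trigonometry does it. The sketch below estimates how many degrees of your horizontal field of view a flat 16:9 screen fills. The helper name and the example distances are assumptions for illustration (eye distance is treated as roughly equal to desk depth), not measurements from any ergonomics guideline.

```python
import math


def horizontal_view_angle(diagonal_in, distance_in, aspect=(16, 9)):
    """Horizontal angle (degrees) a flat screen spans from a centered viewer."""
    w, h = aspect
    # Screen width from the diagonal and aspect ratio.
    width = diagonal_in * w / math.hypot(w, h)
    # Angle subtended by the full width at the given eye distance.
    return math.degrees(2 * math.atan((width / 2) / distance_in))


# (diagonal in inches, eye distance in inches)
for diagonal, distance in [(48, 30), (42, 42)]:
    angle = horizontal_view_angle(diagonal, distance)
    print(f'{diagonal}" at {distance} in: {angle:.0f} degrees wide')
```

A 48-inch TV at 30 inches fills roughly 70 degrees, while a 42-inch at three and a half feet drops to under 50. That gap is the difference between scanning with your eyes and swiveling your neck.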

Gaming is the Exception

If you're a gamer, using a TV as a monitor is actually a massive upgrade, provided you have the right hardware. We've reached a point where TVs like the LG C3 or C4 OLED actually outperform many mid-range gaming monitors. They offer nearly instantaneous pixel response times (around 0.1ms), and because each OLED pixel emits its own light and can switch off individually, black is truly black. You can't get that "perfect black" on an IPS or VA monitor panel; even spending thousands on a Mini-LED display with hundreds of local dimming zones only gets you close.

- HDMI 2.1 is mandatory. If your TV only has HDMI 2.0, you're capped at 4K at 60Hz. To get that buttery smooth 120Hz or 144Hz that modern GPUs like the RTX 4090 can output, you need the extra bandwidth of HDMI 2.1 (up to 48Gbps).

- Variable Refresh Rate (VRR). Check your settings for G-Sync or FreeSync compatibility. This prevents screen tearing when your frame rate dips.

- Auto Low Latency Mode (ALLM). This tells the TV to automatically switch to its fastest settings the second it detects a signal from a PC or console.
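
The HDMI 2.0 ceiling is easy to sanity-check with arithmetic. This sketch counts only the visible pixels, so it's a lower bound: real signals also carry blanking intervals, and the usable data rates below (14.4 Gbps for HDMI 2.0, about 42.67 Gbps for HDMI 2.1 at its full 48 Gbps link rate) are the commonly quoted figures after encoding overhead. The function name is just for illustration.

```python
HDMI_2_0_DATA_GBPS = 14.4   # 18 Gbps link minus 8b/10b encoding overhead
HDMI_2_1_DATA_GBPS = 42.67  # 48 Gbps link minus 16b/18b overhead (approximate)


def active_pixel_gbps(width, height, refresh_hz, bits_per_channel=8, channels=3):
    """Uncompressed data rate for visible pixels only, in gigabits per second."""
    return width * height * refresh_hz * bits_per_channel * channels / 1e9


for refresh_hz, bits in [(60, 8), (120, 8), (120, 10), (144, 10)]:
    rate = active_pixel_gbps(3840, 2160, refresh_hz, bits)
    fits_2_0 = rate <= HDMI_2_0_DATA_GBPS
    fits_2_1 = rate <= HDMI_2_1_DATA_GBPS
    print(f"4K {refresh_hz}Hz {bits}-bit: {rate:.2f} Gbps "
          f"(HDMI 2.0: {fits_2_0}, HDMI 2.1: {fits_2_1})")
```

4K at 60Hz needs about 11.9 Gbps before blanking, which squeezes into HDMI 2.0. Double the refresh rate and you're near 24 Gbps, far past what the older port can carry without dropping to subsampled color.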

The OLED Burn-In Boogeyman

Is burn-in real? Yeah. Is it as bad as the internet says? Probably not, but for desktop use, it’s a genuine concern. Static elements are the killer. The Windows taskbar, the borders of your browser, and those desktop icons are always in the same spot. On an OLED, those static bright pixels wear out faster than the ones around them, eventually leaving a "ghost" image.

If you’re going the OLED route for your monitor, you have to change your habits. Set your taskbar to auto-hide. Use a black wallpaper. Set your screen timeout to 5 minutes so it doesn't stay on while you're grabbing coffee. Some TVs, like the Samsung S90C, have aggressive "Pixel Shift" features that subtly move the image around to prevent this, but you still have to be careful. If you’re a coder who stares at a static IDE for 10 hours a day, an OLED TV is a bad investment. You’d be better off with a Sony X90L or something with a high-quality LCD/LED panel that doesn't suffer from organic degradation.

Text Clarity and Pixel Layout

This is a deep-nerd topic that actually matters: subpixel layout. Standard monitors use an RGB (Red, Green, Blue) stripe layout. Windows ClearType is specifically designed to smooth out fonts based on this RGB pattern. Many TVs don't follow it: LG's OLED panels add a white subpixel (WRGB), and some LCD TV panels order their subpixels as BGR. When Windows tries to "smooth" the text, it can actually make it look worse because it's lighting up the wrong subpixels. You can run the ClearType Tuner in Windows to mitigate this, but it's rarely perfect. If you're doing heavy text work (writing, legal review, coding), the slight fuzziness of a TV might drive you crazy compared to a dedicated 27-inch 4K monitor with high pixel density (PPI).
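
Pixel density is simple to compute yourself: divide the diagonal resolution in pixels by the diagonal size in inches. A minimal sketch, with a helper name chosen for illustration:

```python
import math


def pixels_per_inch(h_px, v_px, diagonal_in):
    """Pixel density of a screen from its resolution and diagonal size."""
    return math.hypot(h_px, v_px) / diagonal_in


for diagonal in (27, 32, 42, 55):
    ppi = pixels_per_inch(3840, 2160, diagonal)
    print(f'{diagonal}-inch 4K: {ppi:.0f} PPI')
```

A 27-inch 4K monitor lands around 163 PPI, while a 42-inch 4K TV sits near 105 and a 55-inch near 80. Same pixel count, spread over a lot more glass, which is why the TV needs more viewing distance before text looks equally crisp.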

Real-World Comparisons: TV vs. Monitor

Let’s look at the LG 42-inch C3 OLED versus a standard 32-inch 4K Monitor.

The TV gives you an incredible contrast ratio, effectively infinite since black pixels emit no light at all. It has great speakers (compared to monitor tin-cans) and a remote control, which is surprisingly handy for changing brightness. But the monitor has a DisplayPort input, which tends to be much more reliable for PC sleep/wake cycles. TVs often fail to "wake up" when you move your mouse, forcing you to reach for the remote every time you come back from lunch. That sounds like a small gripe, but doing it six times a day for a year is infuriating.

Also, monitors usually have a USB Hub. You plug your keyboard and mouse into the screen, then one cable to your laptop. Very few TVs offer this. You’ll likely need a separate docking station, adding to the clutter of your "clean" TV-desk setup.

The "Smart" Interface Problem

TVs are "smart" now, which is actually a downside for a monitor. When you turn on a monitor, it just shows your desktop. When you turn on a TV, it might try to show you an ad for a new Disney+ show or ask you to update the firmware. Some TVs, like those from Vizio or Hisense, can be a bit aggressive with their built-in software. You'll want to go into the settings and disable as many of the "Smart" features as possible, or just never connect the TV to Wi-Fi at all. Use your PC for the "smarts."

Making It Work: Practical Next Steps

If you're ready to commit to using a TV as a monitor, don't just wing it.

First, check your GPU. You need an HDMI 2.1 port on your graphics card, which generally means an Nvidia 30-series or 40-series card or an AMD 6000/7000-series card. Without one, you won't get the full 4K/120Hz experience. You'll be stuck at 60Hz, which feels sluggish for everything except movies.

Second, buy a Certified Ultra High Speed HDMI cable. Don't use the random one you found in the "cable drawer." If it’s not rated for 48Gbps, you’ll get intermittent black screens or "sparkles" in the image.

Third, adjust your Windows scaling. At 4K on a 42-inch screen, 100% scaling makes text tiny. Most people find 125% or 150% to be the sweet spot.
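
Scaling trades sharpness for workspace: the panel still draws all 3840x2160 pixels, but apps lay themselves out as if the desktop were smaller. A quick sketch of that trade-off (the helper name is illustrative, and it assumes layout size is simply the native resolution divided by the scale factor):

```python
def effective_desktop(h_px, v_px, scale_percent):
    """Layout-equivalent desktop size at a given display scaling percentage."""
    factor = scale_percent / 100
    return round(h_px / factor), round(v_px / factor)


for scale in (100, 125, 150):
    width, height = effective_desktop(3840, 2160, scale)
    print(f"{scale}% scaling: behaves like a {width}x{height} desktop")
```

At 150% a 4K screen gives you the working area of a 2560x1440 desktop, just drawn with more pixels per letter. At 125% you keep 3072x1728 of room, which is why it's a popular compromise on a 42-inch panel.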

Finally, dive into the TV's input settings. Find the option usually called "Input Label" or "Icon" and change it to "PC." On many LG and Samsung sets, this is the magic toggle that tells the TV to display full 4:4:4 chroma instead of subsampling it. If you don't do this, the text will never look sharp, no matter how much you play with the sharpness slider. Speaking of which, turn the "Sharpness" setting down to its neutral point, which is 0 on some sets and the midpoint (50) on others. TVs apply artificial edge enhancement that makes computer text look like it's vibrating.

Check your room’s lighting too. Most TVs have a glossy finish to make colors pop. That’s great in a dark living room, but if you have a window behind you, the reflection of yourself staring back at the screen will be distracting. A matte monitor is much better at diffusing light, whereas a TV is basically a giant black mirror when it's off.

Switching to a TV is a power move for your productivity and gaming setup, but it requires a bit of "tech-tinkering" to get right. If you value screen real estate above all else and you're willing to manage the quirks of a device that wasn't strictly built for a Windows taskbar, the immersion is unbeatable. Just keep that remote close—you're going to need it more than you think.

Actionable Checklist for Setup:

- Verify HDMI 2.1: Ensure both your PC and TV support the 2.1 standard for 4K at 120Hz.

- Rename Input to PC: This often automatically triggers the best settings for text clarity.

- Disable Overscan: In the TV settings, ensure the "Aspect Ratio" is set to "Just Scan" or "Original" so the edges of your desktop aren't cut off.

- Run ClearType Tuner: Search for "Adjust ClearType text" in your Windows search bar to fix font blurring.

- Manage Heat: A large TV can draw more power and run warmer than a typical desktop monitor. Ensure your desk area has decent airflow, especially if the TV is pushed up against a wall.