It’s easy to get obsessed with numbers. We look at a list, see a "1" or a "50," and assume we know everything about a school’s quality. But honestly, US education rankings since 1960 tell a story that is way more chaotic than most people realize. For decades, we’ve been trying to quantify "intelligence" and "school quality" through a lens that shifts every few years.

Back in the 1960s, a ranking mostly meant how much money a university had or how many old books were in its library. Today, it's about social mobility, research grants, and student debt. If you look at the trajectory, the US hasn't necessarily "fallen behind" as much as the rest of the world caught up, and our internal metrics became a weirdly competitive game of prestige.

The Sputnik Panic and the Birth of Modern Metrics

Everything changed in 1957. When the Soviet Union launched Sputnik, the US collective psyche fractured. Suddenly, education wasn't just about "learning"; it was national security. The National Defense Education Act of 1958 poured money into STEM, and by the early 1960s a school's output of scientists and engineers had become one of the main yardsticks people used to judge it.

Early rankings were basically "reputation" contests. There was no internet. You couldn't check a graduation rate on a dashboard. Instead, researchers at organizations like the American Council on Education would survey academics: deans, department chairs, senior professors. "Who do you think is the best?" they'd ask. The ACE's 1966 Cartter report, which rated graduate departments this way, is the best-known example. It was a giant echo chamber. If Harvard was famous in 1920, it was ranked #1 in 1960.

This era was dominated by the "Big Three": Harvard, Yale, and Princeton. But the real story was the rise of the big public research universities. Places like UC Berkeley and the University of Michigan began climbing the rungs because they were churning out the technical talent the Cold War demanded. Yet the rankings we think of today, the ones parents stress over, didn't really exist yet. It was a looser, more localized vibe. People went to the best school in their state and felt fine about it.

When US News and World Report Changed the Game

If you want to know why we are so stressed about education rankings today, you have to look at 1983. That's the year U.S. News & World Report published its first college rankings. Before this, rankings were for academics. After this, they were for consumers.

It was a bloodbath.

Suddenly, a magazine was telling people that the university they loved was actually #42, while their rival was #12. The criteria were, and honestly still are, kind of sketchy. The first edition was built entirely on a reputational survey of college presidents, and that "peer assessment" remained a huge chunk of the score for decades. Think about that. They asked college presidents to grade other colleges they had never visited. It's like asking a chef to rank a restaurant they've only seen on Instagram.

This created a feedback loop. Schools realized that if they wanted to move up, they didn't necessarily have to teach better. They could spend more on marketing instead. They mailed glossy brochures to the presidents and deans filling out the survey. They built massive "lazy rivers" in their recreation centers to attract higher-testing applicants. The "rankings game" became a multibillion-dollar arms race that changed the physical landscape of American campuses.

The PISA Shock and the International Slide

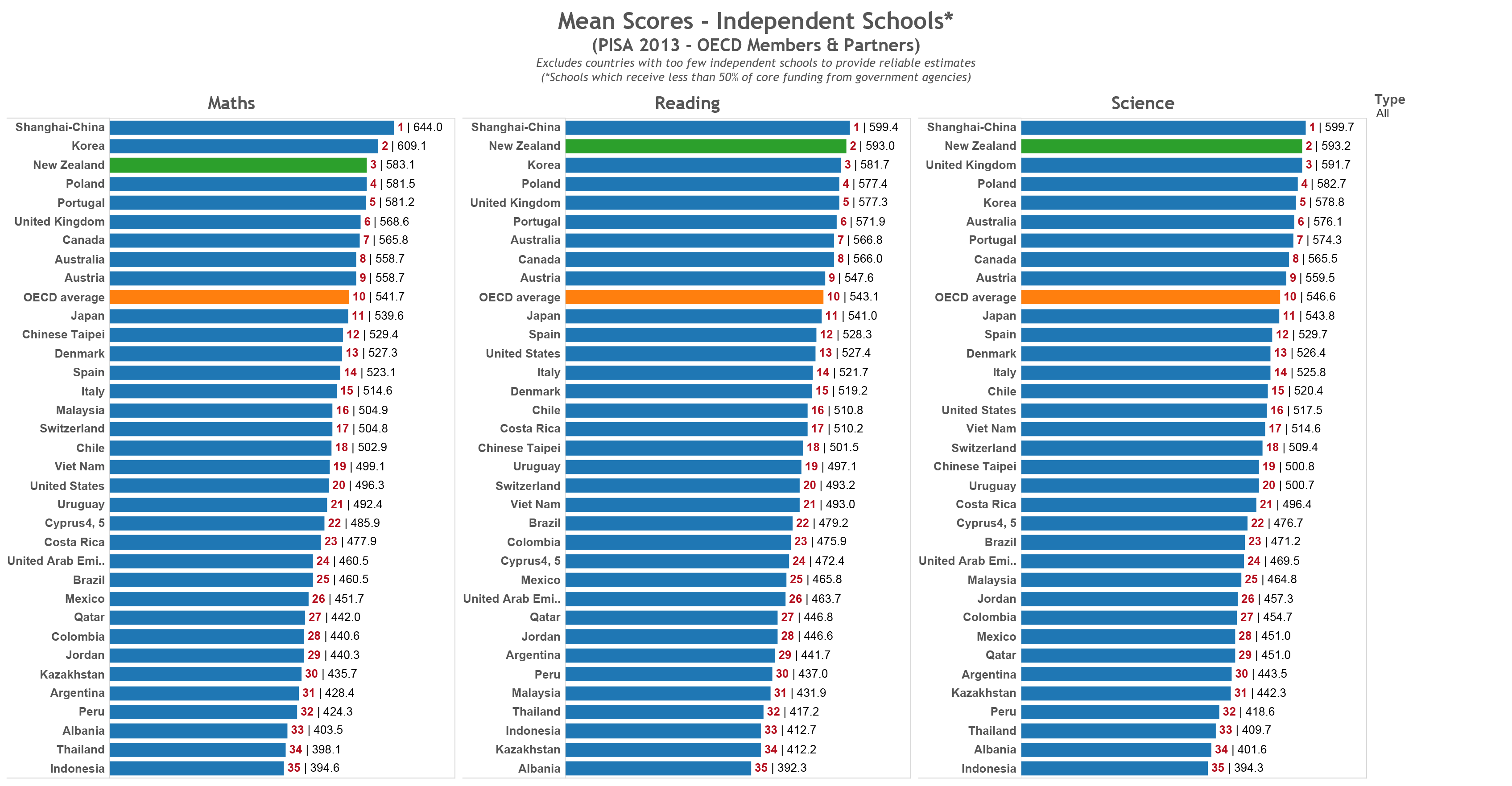

While colleges were fighting over prestige and amenities, the K-12 system was getting its own wake-up call. Enter PISA (the OECD's Programme for International Student Assessment) in 2000.

PISA wasn't the first international test American students had taken (the TIMSS math and science study dates to 1995). But it quickly became the one that made headlines: a standardized way to compare 15-year-olds in the US to those in Finland, Japan, and later Singapore. The results were... not great. In recent cycles, US math scores have landed below the OECD average, while reading scores have come in above it. This fueled the narrative that the American education system was failing.

But here is what most people get wrong about these rankings: the headline averages don't account for poverty.

If you disaggregate the data, US schools in low-poverty areas often score alongside the top-performing countries in the world. Our "average" is low because our inequality is high. When you see a ranking that says the US is 30th in math, you aren't seeing a failure of teaching methods as much as you're seeing a failure of social policy. The US leans unusually hard on local property taxes to fund its schools. That means if you're born in a poor neighborhood, your school's "ranking" is stacked against you before you even walk through the door.
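A national average is just the share-weighted mean of its subgroups, which is why a middling headline number can hide a top-tier group. The sketch below illustrates that arithmetic. The three groups and their scores are made-up round numbers, not PISA results, and the function name is hypothetical.

```python
def weighted_mean(groups: dict[str, tuple[float, float]]) -> float:
    """Return the share-weighted mean score.

    `groups` maps a subgroup name to (share_of_students, mean_score).
    Dividing by the total share keeps this correct even if shares don't sum to 1.
    """
    total_share = sum(share for share, _ in groups.values())
    return sum(share * score for share, score in groups.values()) / total_share


# Hypothetical subgroups split by school poverty level (NOT real PISA data).
hypothetical = {
    "low-poverty schools": (0.25, 540.0),
    "mid-poverty schools": (0.50, 480.0),
    "high-poverty schools": (0.25, 420.0),
}

national = weighted_mean(hypothetical)
top_group = max(score for _, score in hypothetical.values())
print(f"national average: {national:.1f}, top subgroup: {top_group:.1f}")
```

With these invented inputs, the "national" figure comes out at 480 while one group sits at 540. The wider the gap between groups, the more the single headline number hides.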

The Great Ranking Revolt of the 2020s

We are currently in a fascinating moment. After decades of bowing to the gods of U.S. News & World Report, elite schools are finally walking away. In 2022 and 2023, law and medical schools at places like Yale, Harvard, Stanford, and Columbia announced they would no longer participate.

Why? Because the rankings were actually punishing them for doing the right thing.

If a law school funded fellowships for graduates going into low-paying public interest law, U.S. News didn't count those graduates as fully employed. The school's employment numbers, and its ranking, took a hit. And because LSAT scores and GPAs carried so much weight, schools had every reason to spend financial aid on high scorers rather than on students who needed it. In effect, the rankings rewarded schools for favoring well-off, high-testing applicants headed for corporate law. By stepping away, these schools are signaling that the ranking system built up since 1960 has reached a breaking point of absurdity.

A Different Way to Look at the Numbers

If you're looking at these rankings today, you have to be smarter than the algorithm. Look for "value-added" metrics instead. Organizations like the Brookings Institution have started looking at what a school does for a student, rather than just who it lets in.

- Social Mobility: Does the school move kids from the bottom 20% of income to the top 20%?

- Price-to-Earnings: How long does it take to pay off the debt based on the average salary of a graduate?

- Research Output: Is the school actually discovering new things, or just resting on a name from 1950?

Campuses in the CUNY system in New York or the CSU system in California often smoke the Ivy League on social mobility and price-to-earnings. They don't have the prestige, but they have the impact. That is the real evolution of the data since 1960: moving from "who is the most exclusive" to "who is the most effective."
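The social-mobility and price-to-earnings checks come down to simple arithmetic. The sketch below rests on two assumptions:

- **Social mobility.** It follows one well-known formulation, used in the Opportunity Insights "Mobility Report Cards." That formulation multiplies *access* (the share of a school's students from the bottom fifth of the income distribution) by the *success rate* (the share of those students who reach the top fifth).
- **Price-to-earnings.** It assumes a graduate puts a fixed share of salary toward a loan, with interest compounded monthly.

The function names, parameters, and example numbers are hypothetical.

```python
import math


def mobility_rate(access: float, success_rate: float) -> float:
    """Share of ALL students who both came from the bottom income fifth
    and reached the top fifth as adults (access * success rate)."""
    return access * success_rate


def years_to_repay(debt: float, salary: float, share_of_salary: float,
                   annual_rate: float) -> float:
    """Years to pay off `debt` if a fixed share of `salary` goes to
    monthly loan payments, with interest compounded monthly.

    Returns math.inf when the payment never covers the monthly interest.
    """
    payment = salary * share_of_salary / 12  # monthly payment
    r = annual_rate / 12                     # monthly interest rate
    if r == 0:
        return debt / payment / 12
    if payment <= r * debt:
        return math.inf                      # balance never shrinks
    # Standard amortization formula solved for the number of payments.
    months = -math.log(1 - r * debt / payment) / math.log(1 + r)
    return months / 12


# Hypothetical schools and graduate, not real data.
print(mobility_rate(access=0.10, success_rate=0.30))  # broad-access school
print(mobility_rate(access=0.02, success_rate=0.60))  # exclusive school
print(round(years_to_repay(30_000, 50_000, 0.12, 0.06), 1))
```

With these made-up inputs, the broad-access school's mobility rate (about 0.03) beats the exclusive school's (about 0.012), despite a lower success rate. The hypothetical graduate clears a $30,000 loan at 6% in roughly six years by putting 12% of a $50,000 salary toward it.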

Actionable Insights for Navigating Rankings

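Stop looking at the "Top 10" list and start looking at the "Common Data Set." Most universities publish one on their websites. It's a standardized report of raw numbers (admissions, enrollment, class sizes, financial aid) that schools fill out in the same format for publishers and guidebooks. The figures schools report to the federal government go into a separate database, IPEDS. The Common Data Set is dry, it's boring, and it's the closest you'll get to the unfiltered truth.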

- Ignore "Peer Assessment" scores. They are vibes-based. They mean nothing for your actual education.

- Check the "Instructional Spending" per student. If a school is spending more on "Administrative Services" than teaching, run.

- Look at the 10-year salary data via the College Scorecard. This is a US Department of Education tool whose earnings figures come from actual IRS data, though they only cover students who received federal financial aid. It's way more accurate than a magazine's survey.

- Prioritize departmental rankings over institutional ones. A university might be ranked #80 overall, but its Engineering or Nursing program might be top 5 in the world.

- Evaluate the "Yield Rate." This tells you what share of admitted students actually enrolled. It's a great pulse-check on whether a school is a place people genuinely want to be, or just a safety school.
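The yield-rate and spending checks are simple ratios you can compute yourself. The sketch below assumes you have pulled admitted and enrolled counts from a school's Common Data Set and spending totals from its financial reports. How "administrative" spending is defined varies by source, so treat the category, the function names, and the numbers as hypothetical.

```python
def yield_rate(enrolled: int, admitted: int) -> float:
    """Share of admitted students who actually enrolled."""
    if admitted <= 0:
        raise ValueError("admitted must be a positive count")
    return enrolled / admitted


def instruction_to_admin_ratio(instruction: float, administration: float) -> float:
    """Instructional spending divided by administrative spending.

    A value below 1.0 means the school spends more on administration
    than on teaching.
    """
    if administration <= 0:
        raise ValueError("administration spending must be positive")
    return instruction / administration


# Hypothetical figures for an imaginary school, not real data.
admitted, enrolled = 5_000, 1_500
instruction, administration = 80_000_000.0, 100_000_000.0

print(f"yield rate: {yield_rate(enrolled, admitted):.0%}")
ratio = instruction_to_admin_ratio(instruction, administration)
print(f"instruction/admin ratio: {ratio:.2f}")
if ratio < 1.0:
    print("more money goes to administration than to teaching")
```

In this made-up case, 30% of admitted students enroll, and the school spends 80 cents on instruction for every dollar on administration. By the rule of thumb above, that is the signal to run.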

The reality is that US education rankings since 1960 have transitioned from a helpful guide into a distorted mirror. We used to use them to find the best schools; now schools use them to find the "best" (meaning wealthiest or highest-testing) students. If you want a good education in 2026, you have to look past the number on the cover and look at the actual outcomes of students with backgrounds like yours.

The "best" school is the one you can afford, that has the specific professors you need, and that doesn't leave you with a mountain of debt that cancels out your degree's value. Everything else is just marketing.