You know that feeling when you check your phone for "just a second" and suddenly forty minutes have vanished into a void of sourdough recipes and political arguments? It’s gross. We all do it. But back in 2020, Netflix dropped The Social Dilemma documentary, and suddenly we had a name for that hollow feeling in our chests. It wasn't just us being lazy. It was by design.

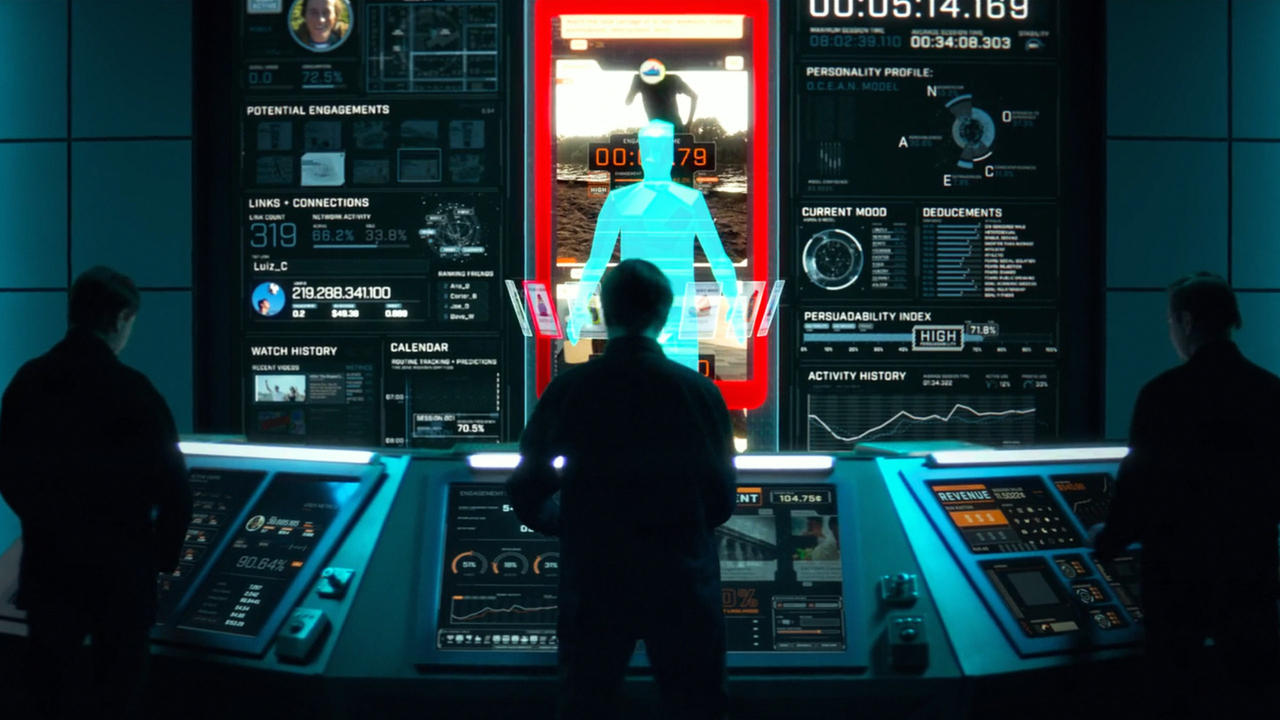

The film didn't just trend; it sparked a massive, global panic about what these glowing rectangles are doing to our brains. Honestly, looking back at it now, some of the dramatizations—like the creepy AI triplets played by Vincent Kartheiser—feel a bit "after-school special." But the experts? The guys who actually built the "Like" button? They were terrified. And their warnings have only aged into more complex, uglier truths.

What the Social Dilemma documentary actually got right (and what it missed)

The core premise of the film is built on the words of Tristan Harris. He’s a former Design Ethicist at Google. That sounds like a fake job, but it’s real, and he spent his time there worrying that a handful of designers in Silicon Valley were essentially "programming" the thoughts of billions. He compares the smartphone to a slot machine. You pull the lever (refresh the feed) and you hope for a hit of dopamine (a like, a comment, a notification).

It’s called intermittent reinforcement.

Behavioral psychologists like B.F. Skinner showed decades ago that if you give a lab rat a reward every single time it hits a lever, it loses interest soon after the rewards stop. But if the reward is random? That rat will keep hammering the lever long after the payouts dry up. That’s your Instagram feed. That’s why you’re scrolling at 2:00 AM.

The film also features Jaron Lanier, the "founding father" of Virtual Reality, who looks like a wizard and talks like a prophet. He argues that the product isn't actually our data. It’s not about Facebook selling your phone number to a car insurance company. It’s deeper. The product is the gradual, slight, imperceptible change in your own behavior and perception. They aren't just selling your info; they are selling the ability to predict—and then alter—what you do next.

The "Engagement" Trap

The algorithm doesn't care if you're happy. It doesn't care if you're learning. It only cares about "time on device."

Think about the YouTube autoplay feature. Aza Raskin, the guy who actually invented the infinite scroll (and says he's deeply sorry for it), explains that these features were designed to remove "stopping cues." In the old days, you finished a chapter in a book and you stopped. You finished a newspaper and you stopped. Now? The "next" thing is always loaded before you have the conscious chance to decide if you actually want to see it.

Does it cause depression?

The documentary points to a sharp spike in anxiety, depression, and self-harm among teenagers, specifically girls, starting around 2011-2013. That timing lines up closely with the years when social media became widely available on smartphones. Dr. Jonathan Haidt, a social psychologist at NYU who appears in the film, has been beating this drum for years.

Critics of the film say this is "correlation, not causation." They argue that the world is just generally a stressful place and the kids are reacting to climate change or economic instability. But the film’s experts argue that the "social comparison" loop—where kids compare their messy "behind-the-scenes" lives to everyone else’s "highlight reels"—is a unique kind of psychological torture that previous generations didn't have to navigate 24/7.

The Business of Polarization

This is where The Social Dilemma documentary gets really dark. It’s not just about you feeling bad about your body. It’s about how we’ve lost the ability to agree on what is true.

Justin Rosenstein, the co-creator of the Like button (he’s also a former Facebook and Google engineer), points out that the algorithms are optimized to show you stuff you already agree with. It’s an echo chamber. If you think the Earth is flat, the algorithm will find ten thousand other people who think so too and feed you "evidence" until you're convinced.

Why? Because conspiracy theories and outrage generate more "engagement" than boring, nuanced facts.

- Anger is the most "viral" emotion.

- Misinformation spreads six times faster than the truth on Twitter (now X), according to an MIT study cited in the film.

- The "Recommended for You" section is essentially a radicalization engine for whatever niche interest or political grievance you happen to click on first.

If two people go to Google and type in "Is climate change...", they will get different results depending on where they live and what they’ve clicked on before. We aren't just seeing different opinions; we are seeing different facts.
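
To make the "optimized for engagement" idea concrete, here's a toy sketch in Python. It is not how any real platform works: the `Post` class, the made-up posts, and the `predicted_engagement` scores are all assumptions invented for illustration. It just shows how sorting the same three posts by a predicted-engagement score, instead of by recency, changes what lands at the top of your feed.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    minutes_ago: int             # how long ago the post went up
    predicted_engagement: float  # hypothetical score between 0 and 1


def chronological(posts):
    """Newest first: the feed simply reflects when things were posted."""
    return sorted(posts, key=lambda p: p.minutes_ago)


def engagement_ranked(posts):
    """Highest predicted engagement first, regardless of age or accuracy."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


# Made-up posts and scores, purely for illustration.
posts = [
    Post("Local library extends opening hours", 5, 0.10),
    Post("Nuanced explainer on a new tax policy", 20, 0.25),
    Post("Outrageous claim about a rival group", 240, 0.90),
]

chrono_titles = [p.title for p in chronological(posts)]
ranked_titles = [p.title for p in engagement_ranked(posts)]

print("Chronological:", chrono_titles)
print("Engagement-ranked:", ranked_titles)
```

In this toy setup the four-hour-old outrage post jumps from the bottom of the chronological feed to the top of the ranked one, simply because the score says people are more likely to react to it.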

The human cost of the "Free" model

"If you're not paying for the product, you are the product."

We've heard this a million times. It's a cliché. But the documentary forces you to actually look at the machinery. These companies have giant "supercomputers" (metaphorically speaking) that are constantly running simulations of your brain to see which notification will get you to open the app.

It’s an unfair fight. On one side, it’s just you, a human with a biological brain that hasn't evolved much in 50,000 years. On the other side, it's a trillion-dollar company with thousands of the smartest engineers in the world using AI to exploit your prehistoric vulnerabilities.

You're going to lose that fight every time.

Is it all doom and gloom?

Honestly? The film is pretty bleak. It paints a picture of a society heading toward total collapse because we can't talk to each other anymore. But since the film came out, there has been some movement. We've seen more "Digital Wellbeing" tools added to phones. We've seen Congressional hearings (though many of those were just old guys who don't understand how WiFi works asking CEOs silly questions).

The real change hasn't come from the top down. It’s coming from people just... getting tired. There is a growing movement of people switching to "dumb phones" or using apps like Opal to literally lock themselves out of their own devices.

How to actually reclaim your brain

If you watched The Social Dilemma documentary and felt like throwing your iPhone into a lake, you aren't alone. But you probably can't do that. You need it for work. You need it for GPS. You need it to see photos of your nephew.

So, how do you live in the world the film describes without losing your mind?

Turn off every single non-human notification. This is the big one. If a person didn't send it, you don't need to see it. You don't need your phone to tell you that "someone you might know" posted a photo. You don't need a notification that a game has a "daily bonus" waiting for you. That’s just the slot machine calling your name.

Never use the "Recommended" feed. Go directly to the people you follow. Don't let the algorithm choose your next video or your next post. If you find yourself scrolling the "Explore" page, stop. You've entered the machine.

Give yourself a "Digital Sabbath." Even just four hours on a Sunday where the phone is in a drawer. You'll feel an itch in your brain for the first hour. That’s the withdrawal. It goes away.

Follow people you disagree with. This is hard. It’s annoying. But it breaks the echo chamber. If you're a hardcore liberal, follow three smart conservatives. If you're a conservative, read some progressive long-form essays. Don't do it to argue. Do it to remind your brain that "the other side" consists of actual humans with logical (to them) reasons for what they believe.

Don't give your kids a smartphone until high school. This is what many of the Silicon Valley executives featured in the film do. They know how the sausage is made, and they don't let their own children near it. If the guys who built it won't let their kids use it, that should tell you everything you need to know.

The bottom line on social media

The Social Dilemma wasn't just a movie about apps; it was a movie about the loss of human agency. We think we're making choices, but we're often just reacting to triggers.

The documentary's greatest strength is that it makes the invisible visible. Once you see the "Matrix," it’s harder to be fooled by it. You start to notice when an app is trying to bait you into an argument. You start to see the "infinite scroll" for the trap it is.

It’s not about deleting everything and moving to a cabin in the woods. It’s about intentionality. Use the tool; don't let the tool use you.

Actionable Next Steps

- Audit your notifications right now. Go to Settings > Notifications. Turn off everything except for Messages, Phone, and maybe your Calendar. Everything else—Instagram, News, YouTube, Games—should be "silent" or off.

- Delete one "infinite scroll" app. Just one. The one that makes you feel the most drained. Try it for 48 hours. See if your life actually gets worse. (Spoiler: it won't.)

- Charge your phone in a different room. Don't let the "slot machine" be the last thing you see at night and the first thing you see in the morning. Buy a $10 alarm clock.

- Watch the film again with a skeptic's eye. Pay attention to the interviews with Tim Kendall (former President of Pinterest) and Cynthia Wong. Look past the dramatized "teenager" storyline and focus on the mechanics of the business models they describe.

The tech isn't going away. It’s only getting more "immersive" with AI and VR. The only defense we have is awareness and a very loud, very human "no" to the algorithms that want to own our attention.