Honestly, if you look at your phone right now, you’re staring at a miracle of math. People ask about the computer graphics definition and usually expect some dry, textbook answer about pixels or "the art of drawing on a screen." But that barely scratches the surface. It’s the science of translating digital data into something the human eye can understand.

Without it? You’d be looking at rows of green text like a scene from The Matrix.

Computer graphics is the branch of computer science that deals with generating and manipulating images. This isn't just about Photoshop. We're talking about the code that tells a GPU how to bounce light off a virtual car hood in Cyberpunk 2077, the algorithms that help a surgeon see a 3D model of a heart before an operation, and even the simple font rendering that makes this article readable. It is the bridge between binary code and human perception.

What is the Computer Graphics Definition anyway?

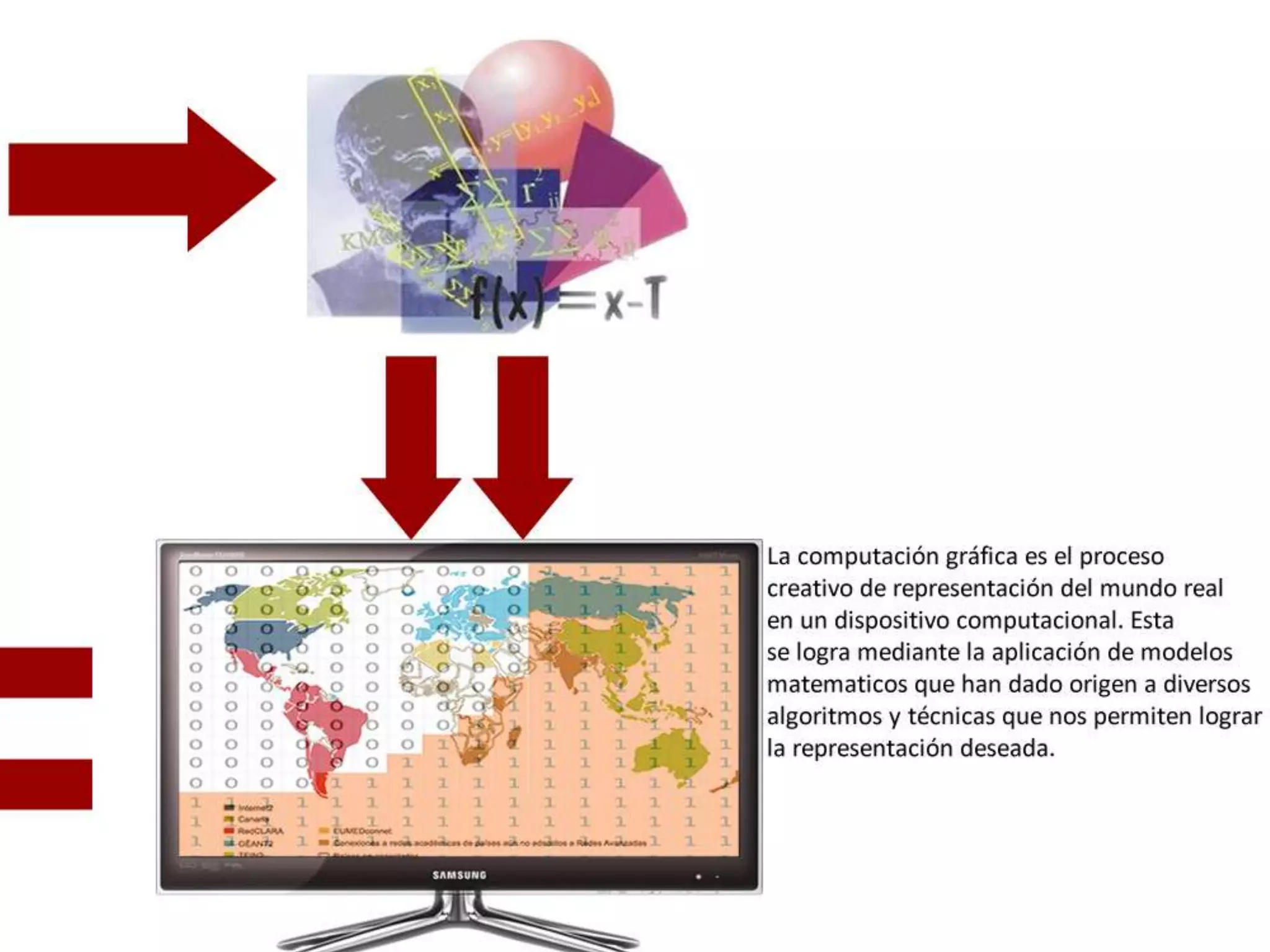

At its core, computer graphics is about representation. It’s how we take a bunch of numbers—vectors, coordinates, color values—and turn them into a visual experience. There’s a distinction you have to make right away: generative vs. cognitive. Generative is about creating the image. Cognitive (often called Image Processing) is about analyzing it.
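
To make "numbers in, picture out" concrete, here is a minimal sketch that builds a tiny image as plain text in the PPM (P3) format, which many image viewers can open. The function name `gradient_ppm` and the gradient itself are just illustrative choices, not anything standard.

```python
def gradient_ppm(width: int, height: int) -> str:
    """Build a small plain-text PPM (P3) image.

    Red grows from left to right, blue grows from top to bottom.
    """
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            # Map the pixel position to a 0..255 colour value.
            r = round(255 * x / max(width - 1, 1))
            b = round(255 * y / max(height - 1, 1))
            row.append(f"{r} 0 {b}")
        rows.append(" ".join(row))
    # PPM header: magic number, dimensions, maximum channel value.
    header = f"P3\n{width} {height}\n255\n"
    return header + "\n".join(rows) + "\n"


image_text = gradient_ppm(4, 2)
print(image_text)
```

Save the printed text to a file ending in `.ppm` and you have an image made out of nothing but coordinates and color values.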

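Think about it this way. When Pixar makes a movie, they are doing "computer graphics." They start with nothing and build a world. When a self-driving Tesla identifies a stop sign, it’s going the other way: it starts with an image and pulls meaning out of it, which is image processing (or computer vision, as that side of the field is usually called today). Both live under the same broad umbrella, but the goals are polar opposites.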

Ivan Sutherland is widely considered the "father" of this whole thing. Back in 1963, he created Sketchpad. It was one of the first programs that let humans interact with a computer visually, drawing directly on the display with a light pen. Before Sketchpad, most people treated computers as giant calculators that spat out paper tape. He proved that computers could be a medium for visual expression.

The two flavors: 2D vs. 3D

You’ve got two main branches here. 2D graphics are your flat images. Think typography, digital painting, or the UI on your microwave. It uses two-dimensional models (like planar shapes and text) and is mostly about layout and color.

Then there’s 3D. This is where things get weirdly complex.

In 3D, the computer isn't just "drawing." It is simulating a world. It tracks objects in a three-dimensional space using $X$, $Y$, and $Z$ coordinates. To see that world, the computer has to perform a "render." It calculates how light hits a surface, how a shadow falls, and how the camera lens distorts the view.
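
One small piece of that render step can be sketched in a few lines: perspective projection, which squashes a 3D point onto a flat image plane. This is a simplified pinhole-camera model, assuming the camera sits at the origin and looks down the positive Z axis; `project` and `focal_length` are hypothetical names for this example.

```python
def project(point, focal_length=1.0):
    """Perspective-project a camera-space point (x, y, z) onto the
    image plane located at z = focal_length."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Dividing by depth is what makes distant objects look smaller.
    return (focal_length * x / z, focal_length * y / z)


near = project((1.0, 1.0, 2.0))
far = project((1.0, 1.0, 4.0))
print(near, far)
```

The same point pushed twice as far away lands half as far from the center of the image, which is exactly the "things shrink with distance" effect your eye expects.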

The stuff that actually makes it work

Geometry.

That’s the foundation. Almost every 3D model you see on a screen is built from polygons. Usually triangles. Why triangles? Because they are the simplest shape that is always flat (coplanar). Any three points in space that don’t sit on one straight line define exactly one flat surface, whereas four points can bend out of plane. Connect a million of those, and you have a dragon or a face.
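
Here is a rough sketch of how that looks as data: a list of vertices plus a list of triangles that index into it. The square below is made of two triangles, and the cross product of two edges gives each triangle's normal (the direction its flat face points). The layout and helper names are assumptions for illustration; real engines pack this into GPU buffers.

```python
def subtract(a, b):
    """Component-wise a - b for 3D tuples."""
    return tuple(ai - bi for ai, bi in zip(a, b))


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


# Four corners of a unit square lying in the z = 0 plane.
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (1.0, 1.0, 0.0),
]

# Each triangle is three indices into the vertex list.
triangles = [(0, 1, 2), (1, 3, 2)]


def face_normal(triangle):
    """Unnormalised normal of a triangle from two of its edges."""
    a, b, c = (vertices[i] for i in triangle)
    return cross(subtract(b, a), subtract(c, a))


normals = [face_normal(t) for t in triangles]
print(normals)
```

Both triangles report a normal pointing straight along +Z, confirming that they lie flat in the same plane.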

But geometry is naked without textures. Texturing is like wrapping a 3D model in digital wallpaper. If you have a sphere, it’s just a gray ball. Apply a "rusty metal" texture map, and suddenly it looks like a relic from a shipwreck.
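
Under the hood, "wrapping" means every point on the surface carries a pair of texture coordinates, usually called (u, v), that say where to look in the image. A minimal sketch of the lookup, using nearest-neighbor sampling and a made-up 2x2 texture (real renderers add filtering and mipmaps on top of this):

```python
RUST = (183, 65, 14)
STEEL = (110, 110, 120)

# A tiny 2x2 texture stored as rows of RGB texels.
texture = [
    [RUST, STEEL],
    [STEEL, RUST],
]


def sample(tex, u, v):
    """Nearest-neighbour lookup: u and v in [0, 1] pick one texel."""
    height = len(tex)
    width = len(tex[0])
    # Clamp so that u == 1.0 or v == 1.0 still lands on the last texel.
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return tex[y][x]


print(sample(texture, 0.1, 0.1))
print(sample(texture, 0.9, 0.1))
```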

Then comes the hard part: Lighting.

In the early days, the go-to model was "Lambertian reflectance." It is basic: brightness depends only on the angle between the surface and the light, so everything looks like matte plastic. It is still used for the diffuse part of most shading, but the big practical leap is Ray Tracing. Ray tracing follows individual rays of light as they bounce around a scene. It’s why modern games have realistic puddles and glass reflections. It’s also why your laptop gets hot enough to fry an egg when you play them.
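
Both ideas fit in a short sketch. The first function is Lambert's rule (brightness is the dot product of the surface normal and the direction to the light, clamped at zero). The second is the most basic building block of a ray tracer: checking where a ray hits a sphere. The function names are hypothetical, and the intersection test deliberately ignores the case where the ray starts inside the sphere.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def lambert(normal, to_light):
    """Diffuse intensity: max(0, N . L) using unit vectors."""
    return max(0.0, dot(normalize(normal), normalize(to_light)))


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along the ray to the nearest hit in front of the
    origin, or None if the ray misses the sphere."""
    d = normalize(direction)
    oc = tuple(o - c for o, c in zip(origin, center))
    # Solve t^2 + b*t + c = 0 for a unit-length direction.
    b = 2.0 * dot(oc, d)
    c = dot(oc, oc) - radius * radius
    discriminant = b * b - 4.0 * c
    if discriminant < 0:
        return None
    t = (-b - math.sqrt(discriminant)) / 2.0
    return t if t > 0 else None


hit = ray_sphere_hit((0, 0, 0), (0, 0, 1), (0, 0, 5), 1.0)
miss = ray_sphere_hit((0, 0, 0), (0, 1, 0), (0, 0, 5), 1.0)
print(hit, miss, lambert((0, 0, 1), (0, 0, 1)))
```

A full ray tracer repeats that intersection test for every pixel and every bounce, which is where the heat comes from.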

Raster vs. Vector

This is a huge point of confusion.

- Raster graphics are made of pixels. Your photos are rasters. If you zoom in too far, they get "crunchy" and blocky.

- Vector graphics are made of math paths. An SVG file or a font is a vector. You can scale a vector to the size of a skyscraper and it will stay perfectly sharp because the computer is just recalculating the math for the lines.
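
The difference is easy to see in a toy example. Below, a circle is stored as math ("centered in a unit square, radius 0.4") and drawn as text art. Enlarging the 4x4 raster just repeats pixels, while re-rendering the vector description at 16x16 recomputes the edge. The function names are made up for this sketch.

```python
def rasterize_circle(size):
    """Render the vector shape 'circle centred in the unit square,
    radius 0.4' into size x size character pixels."""
    rows = []
    for y in range(size):
        row = ""
        for x in range(size):
            # Sample at the pixel centre, in unit-square coordinates.
            px = (x + 0.5) / size
            py = (y + 0.5) / size
            inside = (px - 0.5) ** 2 + (py - 0.5) ** 2 <= 0.4 ** 2
            row += "#" if inside else "."
        rows.append(row)
    return rows


def upscale_nearest(rows, factor):
    """Enlarge a raster by repeating each pixel factor x factor times."""
    out = []
    for row in rows:
        wide = "".join(ch * factor for ch in row)
        out.extend([wide] * factor)
    return out


small = rasterize_circle(4)
blocky = upscale_nearest(small, 4)  # raster zoom: same 16 pixels, bigger
sharp = rasterize_circle(16)        # vector zoom: edge recomputed

print("\n".join(blocky))
print()
print("\n".join(sharp))
```

Both outputs are 16x16, but the first is a chunky octagon and the second is a noticeably rounder circle.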

Why this matters for the future

We aren't just looking at screens anymore. We are wearing them.

Spatial computing, the fancy term Apple uses for the Vision Pro, is the next frontier for computer graphics. In spatial computing, the "screen" is the real world. The computer has to map your living room in real time, understand where your coffee table is, and then "render" a digital object so it looks like it’s sitting on that table.

If the shadows don't match your room's lighting, your brain hates it. The object looks pasted on, you get that "uncanny valley" feeling, and if the image also lags behind your head movement you can end up motion sick.

This requires insane levels of processing power. It is also why "Physically Based Rendering" (PBR) has become the standard approach. This is a workflow where digital materials are defined by their real-world physical properties: how metallic they are, how much light they absorb, how rough they are. It’s less about "faking" it and more about simulating reality.
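
As a rough illustration of what "defined by physical properties" means in code, here is a sketch of a metallic/roughness material plus one commonly used PBR ingredient, Schlick's approximation of Fresnel reflectance. The `Material` class is hypothetical, the 0.04 base reflectance for non-metals is a widely used convention rather than a law of nature, and roughness is only stored here (it would feed the highlight calculation, which is not shown).

```python
from dataclasses import dataclass


@dataclass
class Material:
    base_color: tuple  # linear RGB, each channel in [0, 1]
    metallic: float    # 0.0 = plastic-like, 1.0 = pure metal
    roughness: float   # 0.0 = mirror-smooth, 1.0 = fully rough


def base_reflectance(material):
    """Reflectance when looking straight at the surface: blend 0.04
    (typical non-metal) with the base colour (metal)."""
    m = material.metallic
    return tuple(0.04 * (1.0 - m) + c * m for c in material.base_color)


def fresnel_schlick(cos_theta, f0):
    """Schlick's approximation: reflectance rises toward 1.0 as the
    viewing angle becomes more grazing (cos_theta approaches 0)."""
    k = (1.0 - cos_theta) ** 5
    return tuple(f + (1.0 - f) * k for f in f0)


plastic = Material(base_color=(0.8, 0.1, 0.1), metallic=0.0, roughness=0.5)
gold = Material(base_color=(1.0, 0.77, 0.34), metallic=1.0, roughness=0.2)

print(base_reflectance(plastic), base_reflectance(gold))
print(fresnel_schlick(0.0, base_reflectance(plastic)))
```

Head-on, the plastic reflects almost nothing and the gold reflects its own color; at a grazing angle, both approach mirror-like reflection.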

Real-world impact beyond gaming

It’s easy to think this is all just for fun and games. It isn't.

CAD (Computer-Aided Design) is a big part of why our cars don't fall apart and our bridges stay up. Engineers build a design as a 3D model, run stress simulations on it, and read the results as color-coded visuals before a single piece of steel is cut.

In medicine, "volume rendering" takes 2D slices from a CT scan and stitches them into a 3D model of a patient's skull or lungs. This isn't just a cool visual; it's a diagnostic tool that saves lives. It allows doctors to find tumors that might be hidden in a flat 2D image.
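
One of the simplest ways to turn a stack of slices into a single picture is a maximum intensity projection: for each pixel, keep the brightest value found anywhere along the depth axis. The sketch below uses made-up numbers in place of scan data, and real medical software uses far more sophisticated techniques on top of this idea.

```python
def max_intensity_projection(volume):
    """Collapse a stack of 2D slices into one image by keeping, for
    each pixel, the brightest value along the depth axis."""
    depth = len(volume)
    height = len(volume[0])
    width = len(volume[0][0])
    return [
        [max(volume[z][y][x] for z in range(depth)) for x in range(width)]
        for y in range(height)
    ]


# Three 2x2 slices; a bright spot (9) is hidden in the middle slice.
slices = [
    [[0, 0], [0, 1]],
    [[0, 9], [0, 0]],
    [[2, 0], [0, 0]],
]

image = max_intensity_projection(slices)
print(image)
```

The bright spot that only exists in the middle slice shows up in the final image, which is the whole point: nothing dense gets lost between layers.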

Even the weather forecast depends on this. Those moving heat maps and storm tracks are complex graphical representations of massive datasets. Without the visual layer, the data would be useless to the average person.

Common Misconceptions

People think "more pixels" always means "better graphics."

That’s a trap.

A 4K image with terrible lighting and low-polygon models looks way worse than a 1080p image with high-quality shaders and global illumination. The quality of computer graphics is defined by the sophistication of the "render pipeline," not just the resolution.

Another one: "Graphics cards are just for gamers."

Actually, the GPU (Graphics Processing Unit) is now the backbone of Artificial Intelligence. The math used to calculate how light bounces off a 3D character (matrix multiplication) is the same math used to train Large Language Models. Nvidia didn't become a trillion-dollar company just because of Call of Duty. They did it because they mastered the hardware needed for parallel processing.
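
To see why the hardware overlaps, here is one plain matrix multiply used for both jobs: scaling a pair of 2D points, and pushing inputs through a tiny neural-network layer. The numbers are invented for the example, and a GPU would run the inner sums in parallel rather than in Python loops.

```python
def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


# Graphics: double the size of two 2D points, stored one per column.
scale = [[2, 0], [0, 2]]
points = [[1, 3], [2, 4]]  # columns are the points (1, 2) and (3, 4)
scaled = matmul(scale, points)

# Machine learning: a dense layer is weights times inputs.
weights = [[0.5, -1.0], [1.0, 1.0]]
inputs = [[2.0], [1.0]]
activations = matmul(weights, inputs)

print(scaled, activations)
```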

Getting started with CG

If you’re actually interested in doing this, don't start with code. Start with the "why."

Learn the basics of light and shadow. Pick up a tool like Blender—it’s free, open-source, and honestly better than some software that costs thousands. If you want to go the programming route, look into OpenGL or Vulkan. These are the APIs (Application Programming Interfaces) that act as the middleman between your code and the graphics hardware.

You’ll need to brush up on linear algebra. Sorry. There’s no way around it. Matrices and vectors are the language of 3D space.
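
If that sounds abstract, here is the kind of thing you will be doing constantly: building a rotation matrix and applying it to a vector. This sketch rotates a point 90 degrees around the Z axis; the helper names are just for illustration.

```python
import math


def rotation_z(angle):
    """3x3 matrix that rotates by `angle` radians around the Z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [
        [c, -s, 0.0],
        [s, c, 0.0],
        [0.0, 0.0, 1.0],
    ]


def transform(matrix, vector):
    """Multiply a 3x3 matrix by a 3D vector."""
    return tuple(sum(m * v for m, v in zip(row, vector)) for row in matrix)


rotated = transform(rotation_z(math.pi / 2), (1.0, 0.0, 0.0))
print(rotated)
```

The point that started on the X axis ends up on the Y axis, give or take a tiny floating-point error.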

Actionable Insights for 2026

If you are a creator or a business owner trying to stay relevant in a world dominated by visual tech, here is what you actually need to do:

- Prioritize Vector Assets: For branding and UI, stop using JPEGs where a vector (SVG) will do. For logos and icons the file is usually smaller, which helps site speed, and it stays sharp on high-density displays.

- Understand "Real-Time": The world is moving away from pre-rendered content. Whether it's Unreal Engine in filmmaking (The Mandalorian style) or interactive product demos, real-time rendering is the new standard.

- Optimize for Mobile: A huge share of computer graphics is consumed on phones. High-poly models will tank your user experience. Learn about "Retopology," the process of rebuilding a complex 3D model with a cleaner, lower-polygon mesh so it runs smoothly on a mobile GPU.

- Embrace AI-Assisted Texturing: Tools like Adobe Substance or AI-based upscalers can save hundreds of hours in the creative process. Don't fight the tech; use it to handle the grunt work so you can focus on the art.

Computer graphics is no longer just a sub-field of tech. It is the very lens through which we interact with the digital world. From the font on this page to the VR headsets of tomorrow, it is the art of making the invisible visible.