Everyone acts like the world changed overnight because a text box started talking back. It's wild. You’ve seen the demos where someone generates a whole website in thirty seconds or asks an LLM to write a legal brief that sounds, well, mostly legal. But if you spend enough time behind the keyboard, the cracks start showing. Honestly, the limitations of generative AI aren't just minor bugs that a software update will fix tomorrow; they are baked into the very math that makes these systems work in the first place.

Silicon Valley wants you to think we’re five minutes away from Artificial General Intelligence. We aren't.

Large Language Models (LLMs) like GPT-4, Claude, or Gemini are essentially "autofill on steroids." They don't know what a strawberry is. They just know that in a massive dataset of billions of words, the word "strawberry" often appears near "red," "sweet," and "shortcake." Once you realize that these systems generate text from learned probabilities rather than from facts they've checked, you start to understand why they can fail so spectacularly at things a five-year-old handles with ease. It's all about patterns, not principles.
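To make the "autofill" idea concrete, here's a toy sketch of the kind of statistics involved: count which words show up in the same sentence as "strawberry." Real LLMs learn dense numerical representations with neural networks rather than keeping raw counts, so treat this as an analogy. The corpus, the `STOPWORDS` set, and the `cooccurrence` function are all invented for illustration.

```python
from collections import Counter

# Hypothetical filler words to ignore (a tiny, illustrative list).
STOPWORDS = {"a", "the", "is", "and", "was"}

def cooccurrence(sentences, target):
    """Count the words that appear in the same sentence as `target`."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        if target in words:
            counts.update(w for w in words if w != target and w not in STOPWORDS)
    return counts

corpus = [
    "A ripe strawberry is red and sweet",
    "Strawberry shortcake is a sweet dessert",
    "The red strawberry was sweet",
    "The sky is blue",
]
print(cooccurrence(corpus, "strawberry").most_common(2))
# -> [('sweet', 3), ('red', 2)]
```

The function "knows" strawberries are sweet and red only in the sense that those words keep turning up nearby. Nothing in it represents taste or color.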

The Hallucination Problem is a Feature, Not a Bug

If you ask an AI for a recipe for a cake and it tells you to add a cup of gasoline, that's a problem. But why does it happen?

The industry calls it "hallucination." It sounds like a polite word for lying. But the AI isn't lying, because lying requires intent. It's just predicting the next most likely token. If the statistical path leads to a cliff, the AI walks right off it. This is one of the most persistent limitations of generative AI. These models don't consult a "ground truth" database; they aren't checking a library, they're calculating a sequence. So they have no built-in way to verify whether the facts they're spitting out are real.
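Here's a deliberately tiny next-word predictor that shows how fluency and truth come apart. It's a bigram model (each word is predicted only from the one before it), which is far cruder than a real LLM, and the three-sentence corpus is made up for the example. Notice that nothing in `generate` checks whether the sentence it builds is true.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """For every word, count which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def generate(follows, prompt, max_words=10):
    """Greedily append the most frequent next word. No fact-checking happens."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no known continuation, so stop
        # most_common(1) breaks ties in favor of the continuation seen first
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
    "sydney is the largest city of australia",
]
model = train_bigrams(corpus)
print(generate(model, "sydney is the"))
# -> sydney is the capital of france
```

Every step is locally plausible: in this corpus "the" is usually followed by "capital," and "of" has been followed by "france." Chained together, those steps produce a confident falsehood. Larger models condition on far more context, which makes errors this crude rarer, but the objective is still likelihood, not verification.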

Take the 2023 case of Mata v. Avianca. A lawyer used ChatGPT to research case law and ended up submitting a brief that cited judicial decisions that didn't exist. The AI didn't just get the dates wrong; it invented case names, docket numbers, and quotations from opinions that were never written. It looked perfect. It sounded professional. It was complete fiction. This happens because the model is optimized to be fluent, not factual. Fluency is the mask that hides the lack of actual comprehension.

Logic, Math, and the "Reasoning" Trap

Have you ever tried to play Wordle with an AI? Or asked it a complex riddle? It struggles.

Borrowing psychologist Daniel Kahneman's terms, these models have a hard time with "System 2" thinking: the slow, deliberate logic humans use to solve puzzles. They are great at "System 1" thinking: fast, intuitive association. If you ask a generative AI to solve a math problem unlike anything in its training data, it often fails. It might get the first three steps right because those steps look like common patterns, and then make a basic arithmetic error that ruins the whole result.

Gary Marcus, a cognitive scientist and frequent critic of the current AI hype, often points out that these systems lack a "world model." They don't understand gravity, cause and effect, or the passage of time. They only learn how those things are described. If you tell an AI "I put a ball in a box, moved the box to the kitchen, and took the ball out," it might still say the ball is in the box, because "ball," "in," and "box" are so strongly associated in the words it has just read.
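For contrast, here's what an explicit world model looks like in miniature: a hypothetical state tracker that records where every object is and updates that record by rule. The `(verb, object, target)` event format and the `take_out` rule are assumptions invented for this sketch. The point is that the answer comes from tracked state, not from which words tend to appear together.

```python
def track(events):
    """Replay (verb, object, target) events against an explicit state.

    `location` maps each object to whatever it is directly inside,
    which may be a container (like "box") or a room (like "kitchen").
    """
    location = {}
    for verb, obj, target in events:
        if verb in ("put", "move"):
            location[obj] = target
        elif verb == "take_out":
            container = location[obj]
            # Taking something out leaves it wherever its container is now.
            location[obj] = location.get(container, container)
        else:
            raise ValueError(f"unknown verb: {verb}")
    return location

story = [
    ("put", "ball", "box"),
    ("move", "box", "kitchen"),
    ("take_out", "ball", None),
]
print(track(story))
# -> {'ball': 'kitchen', 'box': 'kitchen'}
```

The rule for moving the box never mentions the ball. The ball travels with it because its recorded location points at the box, and that kind of bookkeeping is what Marcus argues pattern-matching alone doesn't guarantee.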

Data Exhaustion and the Ouroboros Effect

We are running out of internet. Seriously.

Researchers from Epoch AI have estimated that tech companies might exhaust the supply of high-quality public text data as early as 2026. That creates a massive hurdle. If models are trained heavily on content generated by other models, quality can degrade with each generation. It's like making a photocopy of a photocopy. Researchers call this "model collapse," and it happens when the subtle nuances of human language (slang, sarcasm, genuine soul) get filtered out in favor of the bland, averaged-out style that AI tends to produce.

- Degradation: The model loses the ability to represent rare or "tail" data.

- Homogenization: Everything starts to sound the same.

- Error Amplification: One AI's "hallucination" becomes the next AI's "fact."
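You can watch the "Degradation" effect in a toy simulation. Each generation "trains" on the previous corpus by measuring its word frequencies, then produces a new corpus of the same size by sampling from those frequencies. This is a simplified stand-in for real model collapse, not a reproduction of any published experiment, and the corpus of common and rare words is invented.

```python
import random
from collections import Counter

def next_generation(corpus, rng):
    """'Train' on a corpus by measuring its word frequencies, then emit a
    same-sized corpus sampled from those frequencies."""
    counts = Counter(corpus)
    words = list(counts)
    weights = [counts[w] for w in words]
    return rng.choices(words, weights=weights, k=len(corpus))

def simulate(corpus, generations, seed=0):
    """Return the vocabulary size of the corpus after each generation."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    sizes = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        sizes.append(len(set(corpus)))
    return sizes

# Invented "human" corpus: two common words plus 20 rare, one-off words.
human = ["the"] * 50 + ["model"] * 30 + [f"rare{i}" for i in range(20)]
print(simulate(human, generations=10))
```

A word that drops out of one generation has zero weight in the next, so the vocabulary can only shrink. Each rare word starts with roughly a 37% chance, (0.99)^100, of vanishing in the very first generation, which is why the "tail" goes first.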

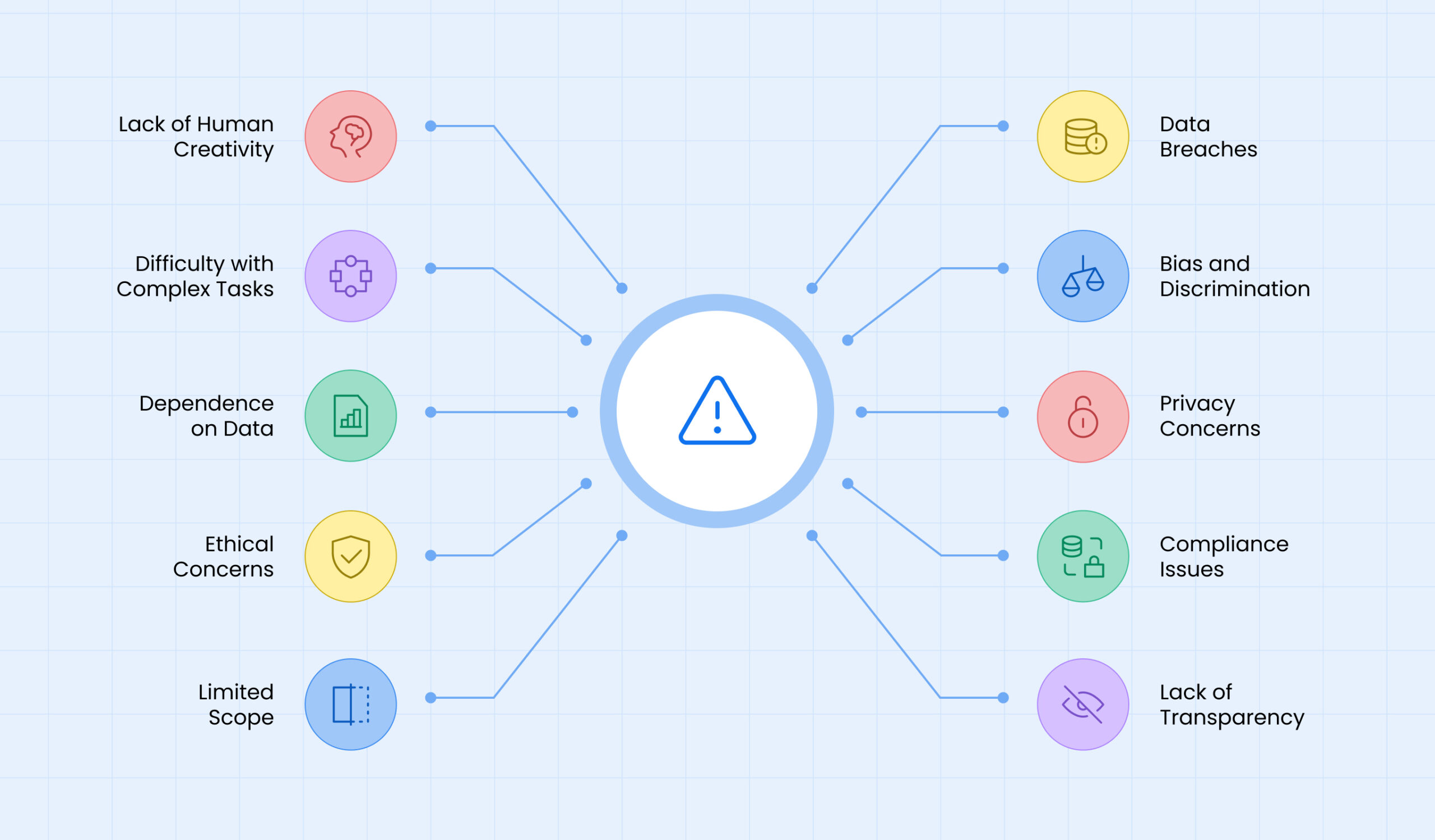

The Ethical Black Box and Biased Outputs

You can't train a model on the internet without training it on the internet's worst impulses. Generative AI inherits the biases of its creators and of the data it consumes. This isn't just about "woke" or "not woke" politics; it's about fundamental skews in what the model treats as normal. If most of the medical data in the training set comes from Western countries, the AI might give subpar advice to patients from populations that are underrepresented in that data.


And then there's the black box problem. Even the engineers at OpenAI or Google can't fully explain why a model gave a specific answer. They can inspect and adjust its billions of numerical parameters (the "weights"), but the internal reasoning those numbers encode remains largely a mystery. That makes these models dangerous to rely on in high-stakes industries like healthcare, finance, or criminal justice. If you can't audit the decision-making process, you can't trust the outcome. Period.

Creativity vs. Compilation

Is AI actually creative? Kinda. But not really.

Generative AI is a remix machine. It takes the collective output of human history and blends it into a smoothie. It can create an image "in the style of Van Gogh," but it could never be Van Gogh because it doesn't have a life to draw from. It doesn't have trauma, joy, or a unique perspective. It has pixels.

This is a huge limitation when it comes to true innovation. AI can help you iterate on an idea, but it rarely generates a "black swan" event—a totally new concept that breaks the mold. It thrives on the average. It lives in the center of the bell curve. If you want something truly groundbreaking, you still need a human brain that is willing to be "wrong" in a way that is actually right.

The Massive Environmental Cost

We talk about the cloud like it’s this ethereal thing. It’s not. It’s rows of screaming servers in a warehouse that require millions of gallons of water for cooling and massive amounts of electricity.

A single prompt to a generative AI model is widely estimated to consume significantly more energy than a standard Google search. According to a study by researchers at Hugging Face and Carnegie Mellon University, generating one image with the most energy-intensive AI models they tested can use about as much energy as fully charging your smartphone. Multiply that by the millions of people using these tools every hour, and the environmental footprint is staggering. This physical limitation is often ignored in the excitement over the software.

Context Windows and Memory Loss

If you've ever had a long conversation with a chatbot, you've probably noticed it starts to "forget" what you said at the beginning. This is the context window limitation: a model can only read a fixed number of tokens at once, so once a conversation outgrows that budget, the oldest parts have to be dropped or compressed. While models are getting better at handling longer threads, they still eventually hit a wall. The model itself doesn't keep a persistent memory of you. Every time you start a new "session," you're talking to a stranger who has read every book in the world but doesn't know your name.

This makes it difficult for AI to act as a true long-term partner in complex projects. It can't learn your specific preferences over months of collaboration without massive, expensive custom fine-tuning. For most users, the AI is a goldfish with a genius-level IQ.
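One simple way a chat app can fit a long conversation into a fixed context window is to keep only the most recent messages that fit the budget. This sketch assumes that strategy (real products may instead summarize or retrieve older turns) and approximates tokens by counting words, whereas real models use subword tokenizers. The `build_context` function and the sample conversation are hypothetical.

```python
def build_context(messages, max_tokens):
    """Keep the most recent messages whose combined size fits `max_tokens`.

    Tokens are approximated as whitespace-separated words (an assumption).
    """
    kept, used = [], 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # everything older than this is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order

history = [
    "my name is Dana and I prefer metric units",
    "what is a good running pace",
    "convert that to something I can use",
]
print(build_context(history, max_tokens=13))
# -> ['what is a good running pace', 'convert that to something I can use']
```

The name and the preference for metric units were in the first message, so they're the first things to fall out of the window. The model then answers "convert that" with no idea which units you wanted.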

Navigating the Practical Reality

So, where does that leave us? Are these tools useless? Of course not. But using them effectively requires knowing exactly where they break.

If you're using generative AI for work, you have to be the editor-in-chief. You cannot "set it and forget it." You're the one with the world model. You're the one who knows that the "facts" it just gave you might be total garbage. The secret to winning with AI isn't finding the perfect prompt; it's having the expertise to know when the output is wrong.

Actionable Next Steps for Using AI Safely

- Verify everything that can be verified. Treat AI output like a draft from a very confident but slightly drunk intern. If it gives you a stat, a name, or a date, check it against a primary source. Every single time.

- Use it for structure, not substance. AI is brilliant at outlining, brainstorming, and formatting. It's much less reliable for providing deep, nuanced analysis or original research.

- Keep the "Human in the Loop." Never automate a customer-facing or high-stakes process without a human checkpoint. The reputation risk of a hallucination is far higher than the efficiency gain of full automation.

- Be specific about constraints. If you need a factual answer, tell the AI to "only use the provided text" or "cite your sources," and then check those sources, because models can invent citations too. This doesn't eliminate hallucinations, but it narrows the playground where they happen.

- Watch the bias. Regularly audit the outputs for skewed perspectives. If you're using it for hiring or sensitive communication, be hyper-aware that the model may be echoing stereotypes from its training data.
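Part of the "verify everything" habit can be automated. The sketch below pulls simple "Party v. Party" case names out of an AI draft and flags any that aren't on a list you already trust, the kind of check that could have flagged invented cases like the ones in Mata v. Avianca. The regex, the `trusted` set, and the case names are hypothetical; in practice the list would come from a legal research database, and a name that passes still needs a human to confirm the case says what the draft claims.

```python
import re

# Illustrative pattern for simple "Party v. Party" names; real legal
# citations come in far messier formats than this handles.
CASE_NAME = re.compile(r"\b([A-Z][a-z]+ v\. [A-Z][a-z]+)\b")

def unverified_citations(ai_text, trusted_cases):
    """Return case names found in `ai_text` that are not in `trusted_cases`."""
    return [case for case in CASE_NAME.findall(ai_text) if case not in trusted_cases]

# Hypothetical stand-in for a lookup against a database you trust.
trusted = {"Smith v. Jones"}
draft = "As held in Smith v. Jones and reaffirmed in Brown v. Acme, the claim fails."
print(unverified_citations(draft, trusted))
# -> ['Brown v. Acme']
```

Anything this returns goes back to a human before the document goes anywhere near a client or a court.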

The future of technology isn't about AI replacing humans. It's about humans who understand the limitations of generative AI outperforming those who think the machine is infallible. The "magic" is just math, and math—while powerful—doesn't have a lick of common sense. Use it as a tool, but never let it hold the steering wheel.