Honestly, most people in the legal world have been treating Large Language Models (LLMs) like a magic wand. You wave ChatGPT over a mountain of M&A documents, hope for the best, and then act surprised when it hallucinates a "change of control" clause that doesn't exist. It’s messy. But the Addleshaw Goddard RAG report, officially titled The RAG Report: Can Large Language Models be good enough for legal due diligence?, pretty much blew the lid off why "out of the box" AI is failing law firms, and it lays out how to actually fix it.

This wasn't just some marketing fluff. The firm's innovation team basically spent months stress-testing Retrieval-Augmented Generation (RAG) to see if it could handle the brutal precision required for M&A due diligence.

The results? Eye-opening.

The 74% Problem

When they first started, the baseline accuracy for identifying specific legal provisions was around 74%. In the world of high-stakes corporate law, 74% is basically a failing grade. You can't tell a client you're "mostly sure" about their liability risks. But through some seriously intense optimization, the team managed to push that accuracy up to over 95%.

That's a massive jump.

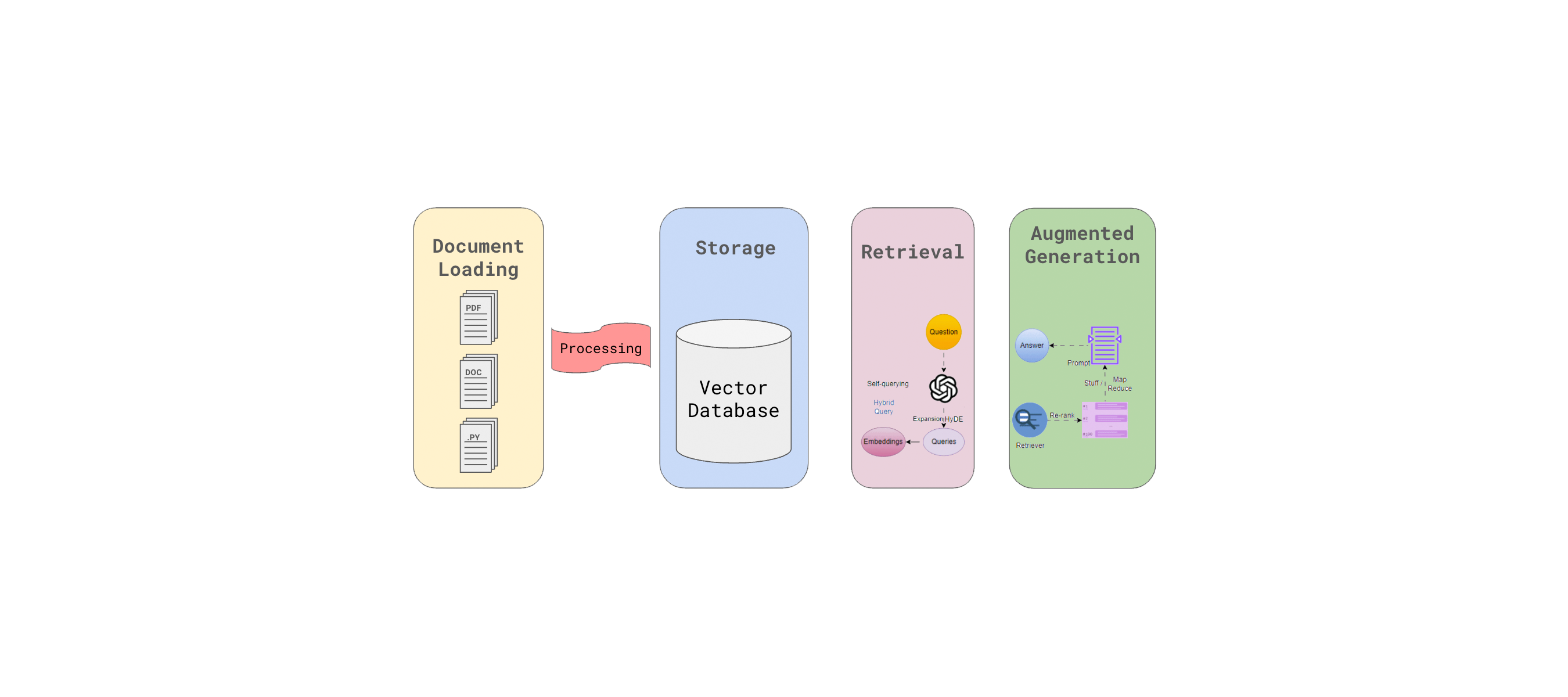

It turns out the "secret sauce" isn't just having a better model. It's the architecture surrounding it. The Addleshaw Goddard RAG report shows that how you retrieve information from a document matters just as much as the AI's ability to "read" it. If the retrieval is sloppy, the LLM never sees the right passage and is just guessing based on bad data.

What Actually Improved the Results?

They didn't just use better prompts like "please be more accurate." They got technical. Very technical.

- Optimized Retrieval: By refining how the system searches for chunks of text, they saw a 20% improvement in finding the right provisions.

- Keyword Guidance: Telling the LLM specifically which keywords to hunt for (think "exclusivity" or "termination") boosted recall by about 16%.

- The Follow-Up Prompt: This is a simple but genius move. By asking the AI a second, clarifying question after the first answer, they gained another 9.2% in accuracy. It’s basically the AI version of "are you sure about that?"
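
To make the keyword guidance and follow-up prompt ideas concrete, here is a minimal sketch in Python. It is not the report's actual prompt wording or code: the function names, the prompt text, and the `ask` callable (a stand-in for whatever LLM client you use) are all assumptions made for illustration.

```python
from typing import Callable, List


def build_extraction_prompt(provision: str, keywords: List[str], chunks: List[str]) -> str:
    """Compose a prompt that names the provision and the keywords to hunt for."""
    # Label each retrieved excerpt so the model can point back to it.
    context = "\n\n".join(f"[Excerpt {i + 1}]\n{c}" for i, c in enumerate(chunks))
    keyword_list = ", ".join(f'"{k}"' for k in keywords)
    return (
        f"Identify any {provision} provision in the excerpts below.\n"
        f"Pay particular attention to these terms: {keyword_list}.\n"
        "Quote the relevant text, or answer NOT FOUND.\n\n"
        f"{context}"
    )


def build_follow_up_prompt(first_prompt: str, first_answer: str) -> str:
    """Compose the 'are you sure about that?' second pass."""
    return (
        f"{first_prompt}\n\n"
        f"Your previous answer was:\n{first_answer}\n\n"
        "Re-read the excerpts. Did you miss any relevant text, or quote "
        "anything that is not actually there? Give your final answer."
    )


def extract_with_follow_up(
    ask: Callable[[str], str],  # hypothetical: sends a prompt, returns the model's reply
    provision: str,
    keywords: List[str],
    chunks: List[str],
) -> str:
    """Run the keyword-guided prompt, then one follow-up, and return the final answer."""
    first_prompt = build_extraction_prompt(provision, keywords, chunks)
    first_answer = ask(first_prompt)
    return ask(build_follow_up_prompt(first_prompt, first_answer))
```

In practice you would pass your own client wrapper as `ask`, for example `extract_with_follow_up(my_llm_call, "exclusivity", ["exclusivity", "termination"], retrieved_chunks)`. Keeping the model call behind a plain callable also makes the prompt logic easy to test without spending tokens.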

The Death of "Off-the-Shelf" AI

One of the most candid parts of the report is the realization that law firms can't just buy a subscription to a generic AI tool and call it a day. The legal industry is too specific. You've got weird formatting, archaic language, and context that spans hundreds of pages.

Addleshaw Goddard didn't just write a paper; they built their own internal tool called AGPT. It’s a sandbox where their lawyers—over 95% of the firm, apparently—can use LLMs safely without leaking client data to the public internet.

But they didn't stop there. By 2025, they actually pivoted away from some earlier tech to adopt a platform called Legora (formerly Leya). Why? Because Legora's "Tabular Review" tool could handle 100,000 documents in parallel. That's the kind of scale you need when a global corporation is being sold and you have three weeks to check every single contract for "poison pills."

Real-World Stakes: The COVID-19 Inquiry

If you think this is all theoretical, look at their work on the UK COVID-19 Inquiry. They had to analyze an "enormous" dataset from over 130 different sub-organisations. Manual review would have taken months.

They deployed an AI-powered workflow that processed 445 reports and extracted 20+ data points from each in just 45 minutes. That’s not just "saving time." That’s doing the impossible.

What You Should Do Next

If you're looking at the Addleshaw Goddard RAG report as a blueprint for your own firm or legal department, here is the reality check: stop chasing the "smartest" model and start focusing on your data pipeline.

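- Stop pasting raw documents into prompts. Don't just dump a PDF into a chat window. You need a RAG system that chunks the data properly, so the model is handed the handful of passages that matter instead of hundreds of pages that bury the clause or overflow the context window.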

- Verify the retrieval. If your AI says a clause isn't there, you need to know if the AI missed it or if the retrieval system failed to find the right page.

- Implement multi-step reasoning. Use follow-up prompts to force the AI to double-check its own logic. Stacked on top of better retrieval and keyword guidance, this second pass is part of how the team got to 95%+ accuracy.

- Prioritize security. Addleshaw's use of AGPT shows that "private" instances are the only way to go. If you're putting client data into a public LLM, you're a liability.
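
The first two points above (chunk properly, then verify the retrieval) can be sketched in a few lines of Python. Treat this as an illustration under loud assumptions: the simple keyword-count scoring stands in for whatever retriever you actually run (the report's own retrieval setup is not reproduced here), and the names `chunk_text`, `retrieve`, and `diagnose_miss` are hypothetical.

```python
from typing import List


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into word-based chunks that overlap, so clauses are not cut in half."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def keyword_score(chunk: str, keywords: List[str]) -> int:
    """Count case-insensitive keyword occurrences (a toy stand-in for a real retriever)."""
    lowered = chunk.lower()
    return sum(lowered.count(k.lower()) for k in keywords)


def retrieve(chunks: List[str], keywords: List[str], top_k: int = 3) -> List[int]:
    """Return the indices of the top_k chunks that mention at least one keyword."""
    scored = [(keyword_score(c, keywords), i) for i, c in enumerate(chunks)]
    hits = [(s, i) for s, i in scored if s > 0]
    hits.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, then document order
    return [i for _, i in hits[:top_k]]


def diagnose_miss(chunks: List[str], retrieved: List[int], keywords: List[str]) -> str:
    """When the model says a clause is absent, work out which stage to blame."""
    mentions = {i for i, c in enumerate(chunks) if keyword_score(c, keywords) > 0}
    if not mentions:
        return "not_in_document"   # no chunk mentions the keywords at all
    if mentions & set(retrieved):
        return "model_miss"        # the right chunk was retrieved; the model overlooked it
    return "retrieval_miss"        # the text exists but never reached the model
```

A "model_miss" points you at prompts and follow-ups, while a "retrieval_miss" points you at chunk sizes and search settings. Keyword matching will not catch clauses phrased in unexpected language, so treat "not_in_document" as a prompt for human review, not a final answer.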

The era of "playing" with AI is over. The report makes it clear: the firms that win won't be the ones with the most AI "users," but the ones with the most robust, optimized technical architectures. High accuracy in legal tech is a choice, not a default setting.