You’ve spent days, maybe weeks, pouring your soul into a new blog post or a product page. It’s perfect. You hit publish, sit back, and wait for the traffic to roll in. But nothing happens. Hours turn into days. You search for your exact headline on Google, and it’s nowhere to be found. It’s like you’re shouting into a void. Honestly, it’s one of the most frustrating parts of being a site owner. You just want to tell Google "crawl my site" and get on with it, but the search giant doesn’t always make it feel that simple.

Google isn't a magic mirror. It's a massive, complex machine that has to prioritize trillions of pages. If you don't show it the door, it might never find your house.

The Reality of How Googlebot Actually Works

Most people think Google is constantly scanning every inch of the internet in real time. That’s not quite right. Think of it more like a librarian with an infinite stack of books to sort through but a limited amount of coffee. The share of that attention your site gets is what SEOs call "Crawl Budget." Google allocates a certain amount of crawling to your site based on how often you update, how fast your server responds, and how much demand there is for your content.

If your site is new, Googlebot might only visit once every few weeks. If you're The New York Times, it's there practically nonstop. When you ask Google to crawl your site, you're basically tapping the librarian on the shoulder and saying, "Hey, I added something important. Look at this first."

Sometimes, the bot gets stuck. It finds a "noindex" tag you forgot to remove after development and dutifully keeps the page out of the index, or it gets trapped in a redirect loop. If your site structure is a mess, the bot might just give up and go somewhere else. It's not personal; it's just efficiency.
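If you want to catch a leftover "noindex" before Google does, a few lines of Python can scan a page's HTML for it. This is a minimal sketch using only the standard library; the names `RobotsMetaParser` and `has_noindex` are made up for illustration, and it only looks at meta tags, not at an `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            # content looks like "noindex, nofollow"
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page's robots meta tags include a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(has_noindex(page))
```

Feed it the HTML of any page you expect to rank. If it reports a noindex you didn't put there on purpose, that's your culprit.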

The Direct Approach: Google Search Console

If you haven't set up Google Search Console (GSC), stop everything. Do it now. It is the only direct line of communication you have with the Big G.

To get a specific page indexed fast, use the URL Inspection Tool. You paste your link into the top bar, wait for the data to load, and then hit "Request Indexing." Does this guarantee instant ranking? No. But it puts you in the priority queue. It’s the digital equivalent of the "Express Lane" at the grocery store.

But don't overdo it. Google puts limits on how many manual requests you can make per day. If you’re trying to index 500 pages one by one, you’re doing it wrong. You need a sitemap for that.

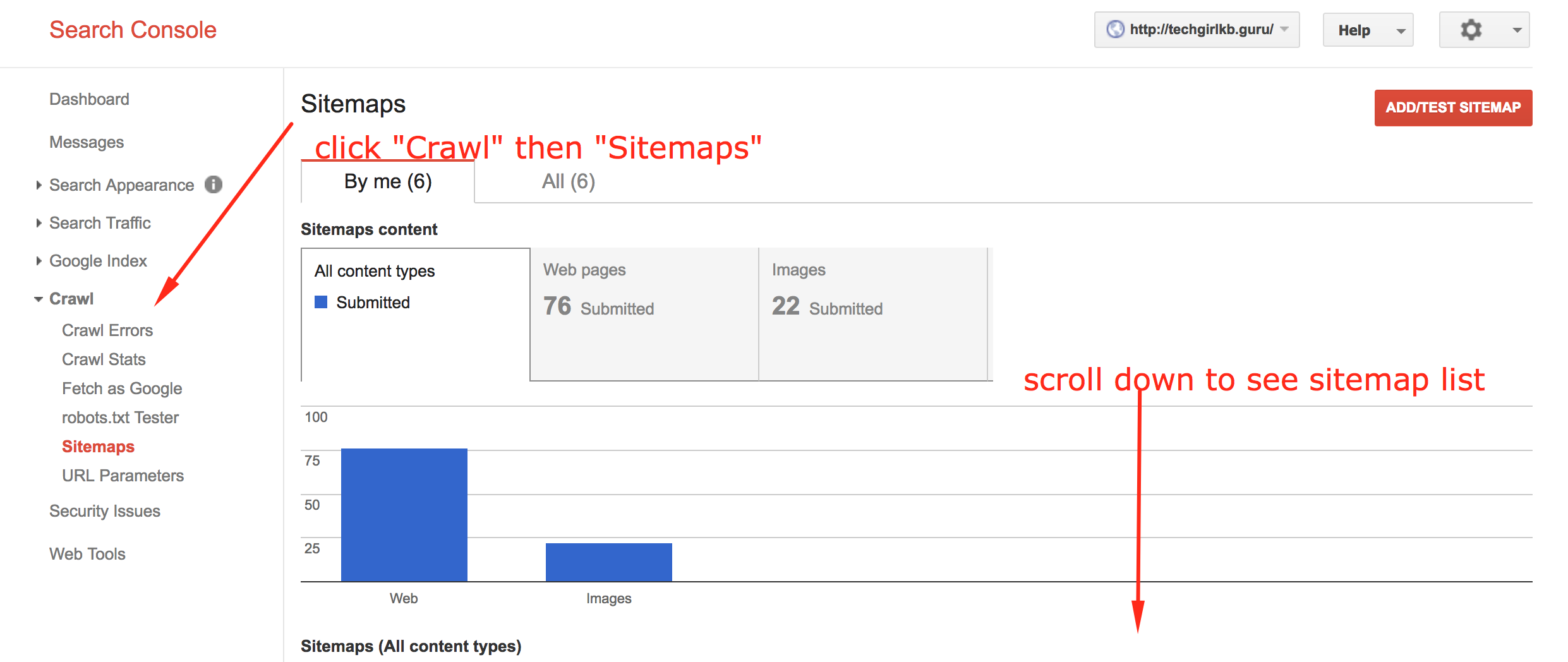

Sitemaps Aren't Optional

A sitemap is basically a GPS map for Googlebot. It’s an XML file that lists every important page on your site. When you submit this in GSC, you aren't just saying "look at this page"; you're saying "here is the entire layout of my digital kingdom."

If you use WordPress, plugins like Yoast or Rank Math do this automatically. If you’re on a custom build, you might need to generate one manually. The key is to make sure your sitemap only includes "200 OK" pages. If you include broken links or redirected pages, you’re wasting the bot's time. And Google hates having its time wasted.
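For a custom build, generating the file yourself is not much work. Here is a rough sketch, assuming you already have a list of your pages along with the HTTP status each one returns; the `build_sitemap` function and its input format are assumptions for this example, not a standard tool.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, status_code, lastmod) tuples.

    Only pages that return 200 are included; redirects and errors are skipped.
    lastmod is an optional date string such as "2024-01-15".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status, lastmod in pages:
        if status != 200:
            continue  # keep broken and redirected pages out of the sitemap
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")


pages = [
    ("https://example.com/", 200, "2024-01-15"),
    ("https://example.com/old-page", 301, None),
    ("https://example.com/missing", 404, None),
]
print(build_sitemap(pages))
```

Save the output as sitemap.xml at the root of your site and submit that URL in GSC.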

Why Your Site Might Be Getting Ignored

You can scream at Google all day, but if your technical foundation is shaky, the bot won't stay. One of the biggest culprits is the robots.txt file. This is a tiny text file on your server that tells bots where they can and cannot go. I’ve seen countless "SEO disasters" where a developer accidentally left a Disallow: / command in the file, effectively telling Google to stay away from the entire site.

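Check your robots.txt right now. Go to yourdomain.com/robots.txt. If you see a line that reads exactly "Disallow: /" under "User-agent: *" or "User-agent: Googlebot," you’ve found your problem. A rule like "Disallow: /wp-admin/" is fine; it only blocks that one folder.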
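If you'd rather not eyeball the file, Python's built-in `urllib.robotparser` can answer the question for you. A small sketch follows; `googlebot_allowed` is a helper name invented for this example, and the standard-library parser is simpler than Google's own matching (it doesn't handle wildcards the same way), so treat the result as a first check rather than the final word.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt text lets Googlebot fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# The classic disaster: the whole site is blocked.
blocked = "User-agent: *\nDisallow: /\n"
print(googlebot_allowed(blocked, "https://example.com/my-new-post"))

# A normal rule that only blocks one folder.
partial = "User-agent: *\nDisallow: /wp-admin/\n"
print(googlebot_allowed(partial, "https://example.com/my-new-post"))
```

Paste in the contents of your own robots.txt and the URL you care about.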

Another issue is Internal Linking. Googlebot finds new pages by following links. If your new post isn't linked from your homepage or a category page, it’s an "orphan page." It’s much harder for Google to find a page that has zero internal paths leading to it. Link your new stuff from your old, high-authority stuff. It works.
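Finding orphans by hand gets tedious on a bigger site. If you can export your internal links (from a crawler tool or your CMS), a short script can walk them the way a bot would. This sketch assumes a simple dictionary that maps each page to the pages it links to; the `unreachable_pages` function and the sample data are hypothetical.

```python
from collections import deque


def unreachable_pages(link_graph, start):
    """Return pages that cannot be reached by following links from the start page.

    link_graph maps each page path to a list of page paths it links to.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return sorted(set(link_graph) - seen)


site = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/old-post"],
    "/about": [],
    "/blog/old-post": ["/"],
    "/blog/new-post": ["/"],  # links out, but nothing links to it
}
print(unreachable_pages(site, "/"))
```

Anything this reports is a page a crawler starting from your homepage would never stumble across through links alone.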

Using the Google Indexing API

This is for the power users. If you run a site with content that changes constantly, like a job board, a news site, or a live event tracker, the standard "Request Indexing" button won't cut it.

The Google Indexing API allows you to programmatically notify Google when pages are added, updated, or removed. It’s typically much faster than waiting for the standard crawl. Two caveats, though. First, Google officially supports it only for pages with JobPosting structured data or livestream videos marked up with BroadcastEvent, so a job board is squarely in scope and a general news site technically is not. Second, it’s technically demanding. You’ll need to set up a project in the Google Cloud Console, get a JSON key, and use a script or a plugin to send the requests. For most small blogs, this is overkill. But for an eligible site with 10,000+ pages that change daily, it’s a lifesaver.
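To give you a feel for what one of those requests looks like, here is a sketch that builds (but does not send) a notification. It assumes you have already exchanged your JSON key for an OAuth access token with the indexing scope, which is the fiddly part and is left out here; `build_notification` is a name chosen for this example.

```python
import json
import urllib.request

ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_notification(page_url, access_token, deleted=False):
    """Build the POST request that tells Google a URL was updated or removed."""
    body = {
        "url": page_url,
        "type": "URL_DELETED" if deleted else "URL_UPDATED",
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
    )


# "YOUR_ACCESS_TOKEN" is a placeholder, not a working credential.
request = build_notification("https://example.com/jobs/123", "YOUR_ACCESS_TOKEN")
print(request.full_url)
print(request.data.decode("utf-8"))
# Sending it would be: urllib.request.urlopen(request)
```

In practice most people let a plugin or Google's client library handle the token and the sending, but the payload is about this simple.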

Social Signals and "Nudging" the Bot

Google says social media likes don't directly improve rankings. That might be true for the "algorithm," but social media does help with discovery. When you share a link on Twitter (X), LinkedIn, or even Pinterest, you’re creating a trail.

There are "ping" services out there, too. Old-school SEOs used to use them all the time. Nowadays, they're less effective, but protocols like IndexNow (used by Bing and Yandex) are gaining traction. Google hasn't adopted IndexNow, so it won't speed up your Google indexing, but it costs little to set up and gets your new pages in front of the other search engines faster.
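For the curious, an IndexNow ping is just a URL with two parameters. The sketch below builds one against the shared api.indexnow.org endpoint. The key shown is a placeholder; as I understand the protocol, you generate your own key and host it in a text file on your site so the search engine can verify you own the domain. `indexnow_ping_url` is a name invented for this example.

```python
from urllib.parse import urlencode

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def indexnow_ping_url(page_url, key):
    """Build the GET URL that notifies IndexNow-enabled search engines of a change."""
    query = urlencode({"url": page_url, "key": key})
    return f"{INDEXNOW_ENDPOINT}?{query}"


# "abc123" is a placeholder key, not a real one.
print(indexnow_ping_url("https://example.com/new-post", "abc123"))
```

Opening the resulting URL (or requesting it from a script) is the whole submission. Remember this reaches Bing and Yandex, not Google.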

The "Quality" Wall

Here is the hard truth: Google might crawl your site and still decide not to index it.

In GSC, you might see a status that says "Crawled - currently not indexed." This is the "maybe later" pile. Usually, this happens because Google thinks the content is too thin, repetitive, or just not valuable enough to store on their servers. If you want Google to crawl your site and actually have it stick, your content needs to be better than what’s already on Page 1.

Check your "Value Add." Are you just repeating what everyone else said? Are you using AI-generated fluff that offers zero new insights? Google’s "Helpful Content" updates are designed specifically to filter out the noise. If your page is just a rehash of a Wikipedia entry, the bot might visit, shrug, and leave without adding you to the index.

Performance Matters More Than You Think

If your site takes six seconds to load on a mobile device, Googlebot will get bored. Speed is a crawl factor. A slow site uses more of the bot's "budget." Use tools like PageSpeed Insights to make sure your images are compressed and your code isn't bloated. A lean, fast site is a crawlable site.

Actionable Steps to Get Indexed Today

First, verify your site in Google Search Console. It’s the foundation. Without it, you’re flying blind.

Second, check your robots.txt file for any "Disallow" rules that shouldn't be there. It's a 10-second check that could save you months of headaches.

Third, submit a clean XML sitemap. Make sure it only includes the pages you actually want people to find.

Fourth, use the URL Inspection tool for your most important 3–5 pages. Don't spam it, just use it for the "big" stuff.

Fifth, build internal links. Go to an old post that already gets traffic and add a link to your new content. This is the "secret sauce" of SEO. It passes authority and gives the bot a direct path to follow.

Finally, ensure your content isn't "Thin." Google has no official minimum word count, but if a page is under 300 words and doesn't answer a specific question, beef it up. Add an image. Add a unique perspective. Give Google a reason to want your page in its index.

Getting Google to notice you isn't about one single trick. It's about making your site easy to read, fast to load, and genuinely useful. When you do those things, you won't have to beg Google to crawl your site; it will come looking for you.