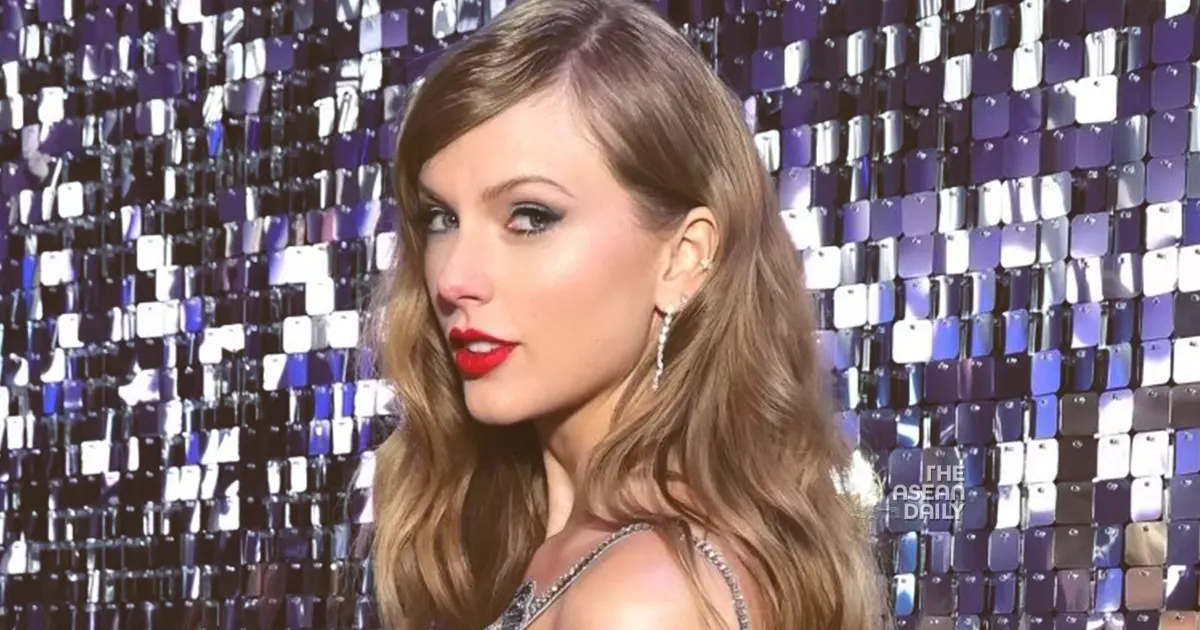

It was a Wednesday in January 2024 when the internet basically broke. You probably remember the headlines. Graphic, AI-generated images of Taylor Swift started flooding X (the platform formerly known as Twitter), and before the moderators could even blink, one of those posts had racked up over 47 million views. It was everywhere.

The Taylor Swift deepfake scandal wasn't just a celebrity gossip item. It was a massive, non-consensual violation that proved even the most powerful person in music wasn't safe from the "dark side" of generative AI.

Honestly, the scale of it was terrifying. It wasn't just one or two bad photos; it was a coordinated deluge. Reports eventually traced the origin back to a 4chan community and a specific Telegram group. These weren't "glitches." They were intentional attacks using tools like Microsoft Designer to bypass safety filters and create realistic, sexualized "deepfakes."

Why the Taylor Swift Deepfake Scandal Changed Everything

Before this happened, deepfake abuse was sort of a "quiet" epidemic. Researchers had been screaming into the void for years: a 2019 report from Deeptrace (now Sensity AI) found that 96% of deepfake videos online were non-consensual pornography. But when it happened to Swift, the world finally stopped and looked.

The reaction was swift—no pun intended.

Within days, the White House called the images "alarming." Microsoft's CEO Satya Nadella had to go on NBC Nightly News to explain how their tools were being weaponized. It forced a conversation about "Section 230" and why tech companies weren't doing more to stop this before it went viral.

But the biggest shift wasn't just corporate PR. It was legal.

The DEFIANCE Act and New Federal Protections

For the longest time, if you were a victim of the kind of deepfake abuse aimed at Taylor Swift, your options were... well, they were pretty bad. You could report it to the platform, sure. But legally? There was no federal law that specifically let you sue the person who made it.

That changed because of the 2024-2026 legislative push.

- The TAKE IT DOWN Act: Signed into law in May 2025, this actually made it a federal crime to knowingly publish non-consensual intimate imagery, including AI fakes.

- The DEFIANCE Act: Just recently, on January 13, 2026, the Senate unanimously passed this bill. It’s a huge deal. Senate passage alone doesn't make it law, but if enacted it would allow victims to sue creators and distributors for up to $150,000 in damages.

It’s about time.

For years, victims had to rely on a patchwork of state laws. If you lived in Tennessee, the rules were different than in California. Now, there’s a standardized path for "Swifties" and everyday people alike to fight back.

How These Fakes Are Actually Made

You might wonder how someone even makes a deepfake that looks so real. It’s not just Photoshop anymore. It’s what’s called a "diffusion model."

These AI models are trained on millions of images. When someone types a prompt, the AI doesn't just "search" for a photo; it starts from random noise and refines it step by step until a brand-new image matching the prompt emerges. The problem is that these tools have become so easy to use that basically anyone with a laptop can generate "spicy" content.

X's own Grok AI even came under fire in late 2025 because users found ways to trick it into making explicit videos. It’s a constant game of cat and mouse between the engineers trying to build guardrails and the trolls trying to break them.

What Should You Do If You See This Content?

Don't share it. Don't even "hate-watch" or click to "see how bad it is." Every click is a signal to the algorithm that the content is "engaging," which helps it spread faster.

- Report immediately: Use the platform's specific "Non-Consensual Intimate Imagery" (NCII) reporting tool.

- Don't engage with the account: Blocking and reporting is better than replying. Replying actually boosts the post's visibility.

- Support the victims: In Taylor's case, fans started the #ProtectTaylorSwift hashtag to bury the bad images under a mountain of concert photos and positive clips. It worked surprisingly well.

The Actionable Bottom Line

The Taylor Swift deepfake era taught us that technology moves way faster than the law. But we are finally catching up.

If you or someone you know is a victim of deepfake abuse, you are no longer powerless. Here is what you can do right now:

- Use Take It Down: This is a free service by the National Center for Missing & Exploited Children. It helps you remove or stay ahead of explicit images of yourself that were made when you were under 18. If you were an adult when the images were made, StopNCII.org offers a similar free service.

- Document everything: If you find a fake, take screenshots and save URLs before they get deleted. You’ll need this evidence if you decide to pursue a civil case, including one under the DEFIANCE Act if it becomes law.

- Check your local laws: While federal laws are catching up, states like New York and Minnesota already have very strong protections that might offer a faster path to justice than a federal suit.

The internet is a wild place, but the days of "anything goes" with AI are ending. We’re moving toward a world where your likeness actually belongs to you again.