You’ve seen the faces. They look perfect. Maybe a bit too perfect? Those hyper-realistic human portraits on "This Person Does Not Exist" aren't actually photos. They’re the result of a massive shift in how we think about computer vision.

Back in the day—which in AI years means like 2014—Generative Adversarial Networks (GANs) were the wild west. Ian Goodfellow’s original concept was brilliant but fickle. It was basically two neural networks screaming at each other until one got smart enough to lie. But those early images? They were blurry. Distorted. Often nightmare fuel.

Then came NVIDIA.

Specifically, Tero Karras and his team decided to flip the script. They realized that if you want a machine to draw a face, you can't just throw a bunch of random numbers at it and hope for the best. You need control. You need a style-based generator architecture that understands the difference between the shape of a nose and the color of someone's hair.

Honestly, it changed everything.


How the Style-Based Generator Architecture Actually Works

Standard GANs are like an artist trying to paint a portrait while someone shakes their arm. The input—that "latent code" $z$—is just a messy pile of variables. If you change one tiny number to try and make the eyes blue, the hair might suddenly turn into a hat. It’s called entanglement. It’s a mess.

The style-based generator architecture solves this by introducing a mapping network. Instead of feeding that random noise directly into the image-making process, the architecture first sends it through a stack of fully connected layers (eight in the original paper). The output lives in a new, intermediate latent space called $W$.
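To make that concrete, here's a minimal NumPy sketch of a mapping network, assuming the shape described in the original StyleGAN paper: a 512-dimensional $z$ that gets normalized first, then eight fully connected layers with leaky ReLU, producing a 512-dimensional $w$. The weights are random placeholders (a trained model learns them), the helper names are hypothetical, and training tricks from the paper are left out, so treat this as a sketch rather than NVIDIA's implementation.

```python
import numpy as np

def pixel_norm(z, eps=1e-8):
    # Rescale each latent vector so its mean squared entry is 1.
    return z / np.sqrt(np.mean(z ** 2, axis=-1, keepdims=True) + eps)

def leaky_relu(x, slope=0.2):
    return np.where(x >= 0, x, slope * x)

def init_mapping(rng, dim=512, n_layers=8):
    # Hypothetical random weights standing in for trained ones.
    return [(rng.standard_normal((dim, dim)) / np.sqrt(dim), np.zeros(dim))
            for _ in range(n_layers)]

def mapping_network(z, params):
    """Map latent codes z of shape (batch, 512) to intermediate latents w."""
    x = pixel_norm(z)
    for weight, bias in params:
        x = leaky_relu(x @ weight + bias)
    return x

rng = np.random.default_rng(0)
params = init_mapping(rng)
z = rng.standard_normal((4, 512))
w = mapping_network(z, params)
print(w.shape)  # (4, 512)
```

Every downstream layer sees $w$, never $z$ directly. That's the whole point: the network is free to learn a $W$ space that's easier to work with than the raw Gaussian input.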

Why does $W$ matter? Because it’s much more "disentangled."

Think of it like a soundboard in a recording studio. In a traditional GAN, the sliders for "volume" and "bass" are glued together. In StyleGAN, they’re much closer to independent. In principle, you can turn up the "freckles" without accidentally changing the person's gender or age. It’s a level of granularity that was basically science fiction a decade ago.
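One hands-on way to feel the difference is interpolation: walk between two latent vectors and render each step. The StyleGAN paper's perceptual path length metric interpolates $z$ spherically (slerp, since Gaussian vectors concentrate on a shell) and $w$ linearly (lerp). Here's a small sketch of both; the random vectors stand in for real latents, and the synthesis network that would turn each step into a face is left out.

```python
import numpy as np

def lerp(a, b, t):
    # Straight-line interpolation: the natural choice in W.
    return a + (b - a) * t

def slerp(a, b, t, eps=1e-7):
    # Spherical interpolation: follows the shell where Gaussian z vectors concentrate.
    cos_omega = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    if omega < eps:  # nearly parallel vectors: fall back to lerp
        return lerp(a, b, t)
    return (np.sin((1 - t) * omega) * a + np.sin(t * omega) * b) / np.sin(omega)

rng = np.random.default_rng(1)
w_a, w_b = rng.standard_normal(512), rng.standard_normal(512)
# Eight evenly spaced stops from face A to face B; each would go to the synthesis network.
path = [lerp(w_a, w_b, t) for t in np.linspace(0.0, 1.0, 8)]
```

In a well-behaved $W$ space, each stop along `path` should change the face gradually instead of lurching through unrelated features.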

The "Aha!" Moment: Adaptive Instance Normalization

The real secret sauce is something called AdaIN (Adaptive Instance Normalization).

Basically, the mapping network produces a vector $w$, and a learned affine transform turns it into a "style" for each layer of the synthesis network. Those styles drive AdaIN after every convolution, so they don't just act at the beginning. It’s a constant guidance system.
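Here's what AdaIN boils down to, as a NumPy sketch. Each feature map $x_i$ is normalized to zero mean and unit variance, then given a per-channel scale and bias computed from $w$: $\mathrm{AdaIN}(x_i, y) = y_{s,i}\,\frac{x_i - \mu(x_i)}{\sigma(x_i)} + y_{b,i}$. The shapes, the initial values, and the function names are assumptions for illustration.

```python
import numpy as np

def style_affine(w, A_s, b_s, A_b, b_b):
    # Learned affine transform: w -> per-channel scale y_s and bias y_b.
    return w @ A_s + b_s, w @ A_b + b_b

def adain(x, y_s, y_b, eps=1e-8):
    """x: features (batch, C, H, W); y_s, y_b: per-channel style (batch, C)."""
    mu = x.mean(axis=(2, 3), keepdims=True)
    sigma = np.sqrt(x.var(axis=(2, 3), keepdims=True) + eps)
    x_norm = (x - mu) / sigma  # each feature map now has mean 0, std ~1
    return y_s[:, :, None, None] * x_norm + y_b[:, :, None, None]

rng = np.random.default_rng(0)
batch, w_dim, channels = 2, 512, 64
w = rng.standard_normal((batch, w_dim))
# Hypothetical affine weights; offsetting the scale by 1 keeps y_s near 1.
A_s = rng.standard_normal((w_dim, channels)) * 0.01
A_b = rng.standard_normal((w_dim, channels)) * 0.01
y_s, y_b = style_affine(w, A_s, np.ones(channels), A_b, np.zeros(channels))
x = rng.standard_normal((batch, channels, 8, 8))
out = adain(x, y_s, y_b)
```

Notice that AdaIN throws away each feature map's own mean and variance and replaces them with the style's. That's why the style has so much control at every layer.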

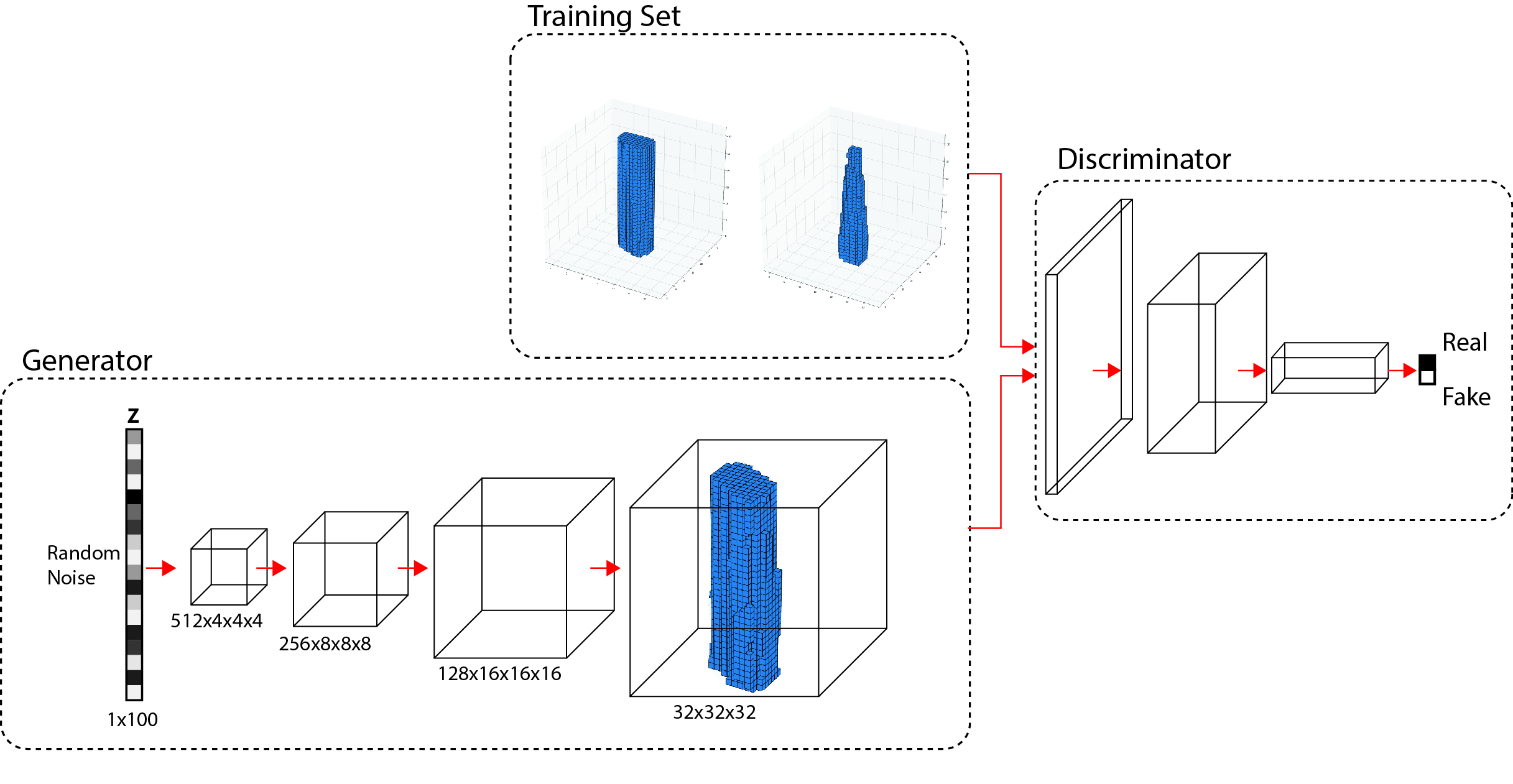

The network starts with a constant: a learned 4×4×512 tensor. This is weird, right? Usually, you feed the random latent code in as the input. Here, the "starting point" is the same for every image, and the styles do the heavy lifting of shaping that block into a human face.
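A rough sketch of that first step, assuming the layout from the original paper: one learned constant block shared by every image, plus a single-channel per-pixel noise image that's broadcast to all 512 feature maps with learned per-channel strengths (that noise is where StyleGAN's random micro-detail comes from). The parameter values and names below are placeholders; in a real generator, the next step would be AdaIN with the layer's style.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for trained parameters.
const = rng.standard_normal((1, 512, 4, 4))     # one learned 4x4x512 block, shared by all images
noise_scale = np.full((1, 512, 1, 1), 0.1)      # learned per-channel noise strength

def synthesis_input(batch_size, gen):
    # Every image starts from the exact same constant...
    x = np.repeat(const, batch_size, axis=0)
    # ...plus one fresh single-channel noise image per sample, broadcast to all channels.
    noise = gen.standard_normal((batch_size, 1, 4, 4))
    return x + noise_scale * noise

x = synthesis_input(3, rng)
print(x.shape)  # (3, 512, 4, 4)
```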

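- Coarse styles (4×4 to 8×8): These affect things like pose, face shape, and general hair style.

- Middle styles (16×16 to 32×32): These handle smaller facial features, hair details, and the eyes.

- Fine styles (64×64 to 1024×1024): These mostly control the color scheme and microstructure. The truly random micro-details, like the exact placement of individual hairs, freckles, and skin pores, come from separate per-pixel noise inputs.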

It’s hierarchical. If you mess with the early layers, the whole person changes. If you mess with the late layers, you’re just changing the color palette. It’s intuitive. It’s elegant.
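That hierarchy is what the paper's "style mixing" experiments exploit: feed one face's $w$ to some layers and another face's $w$ to the rest. The sketch below assumes a 1024×1024 generator with 18 style inputs (two per resolution, from 4×4 up to 1024×1024), grouped into coarse, middle, and fine the way the list above describes. The function names are hypothetical.

```python
import numpy as np

NUM_LAYERS = 18  # two style inputs per resolution, from 4x4 up to 1024x1024

def resolution_of(layer):
    # Layers 0-1 run at 4x4, layers 2-3 at 8x8, doubling every two layers.
    return 4 * 2 ** (layer // 2)

def style_group(layer):
    res = resolution_of(layer)
    if res <= 8:
        return "coarse"
    if res <= 32:
        return "middle"
    return "fine"

def mix_styles(w_a, w_b, group):
    """Give every layer w_a, except layers in `group`, which get w_b."""
    return np.stack([w_b if style_group(i) == group else w_a
                     for i in range(NUM_LAYERS)])

rng = np.random.default_rng(2)
w_a, w_b = rng.standard_normal(512), rng.standard_normal(512)
per_layer = mix_styles(w_a, w_b, "coarse")  # B's pose and face shape, A's everything else
print([style_group(i) for i in range(NUM_LAYERS)].count("fine"))  # 10
```

Swap `"coarse"` for `"fine"` and you'd expect face A with roughly B's color scheme instead.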

Why Real-World Data Is Messier Than the Papers Say

We talk about StyleGAN and its successors (StyleGAN2 and StyleGAN3) like they’re magic wands. They aren't. NVIDIA introduced the FFHQ (Flickr-Faces-HQ) dataset alongside the original and trained on it. That’s 70,000 high-quality 1024×1024 images of human faces.

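But try training a style-based generator architecture on a small dataset of, say, specialized medical imagery or rare architectural styles. It breaks. The discriminator memorizes the few real images it has, its feedback stops being useful, and training falls apart. A related failure is "mode collapse," where the generator keeps making the same handful of images because it found a few outputs that reliably trick the discriminator.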
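NVIDIA's follow-up, StyleGAN2-ADA ("adaptive discriminator augmentation"), attacks this small-data problem by randomly augmenting every image the discriminator sees, with a probability $p$ tuned on the fly. Below is a sketch of that tuning rule, following our reading of the paper's heuristic: track $r_t = \mathbb{E}[\operatorname{sign}(D_{\text{train}})]$ on real images and nudge $p$ up when it exceeds a target. The target of 0.6, the 4-minibatch interval, and the 500k-image ramp are believed to match the paper's defaults but should be treated as assumptions; the clamp to [0, 1] is a simplification, and the augmentations themselves aren't shown.

```python
import numpy as np

def overfitting_heuristic(d_real_logits):
    # r_t = E[sign(D(x_real))]: near 0 while D generalizes, near 1 once it memorizes the reals.
    return float(np.mean(np.sign(d_real_logits)))

def update_augment_p(p, d_real_logits, batch_size, target=0.6, interval=4, ada_kimg=500):
    """Nudge augmentation probability p up when r_t exceeds the target, down otherwise.

    d_real_logits: discriminator outputs on real images from the last `interval` minibatches.
    """
    r_t = overfitting_heuristic(d_real_logits)
    # Step size lets p travel from 0 to 1 over ada_kimg thousand real images.
    step = batch_size * interval / (ada_kimg * 1000)
    p += np.sign(r_t - target) * step
    return float(np.clip(p, 0.0, 1.0))

p = 0.0
p = update_augment_p(p, np.ones(64), batch_size=64)  # D is confident on every real: raise p
print(p)  # 0.000512
```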


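There's also the "droplet" artifact problem. If you look at early StyleGAN images, you’ll sometimes see weird, glossy blobs that look like water stains. The culprit was AdaIN itself: because it normalizes each feature map separately, the generator learned to sneak information past it with a strong, localized spike. StyleGAN2 fixed this by dropping that explicit normalization of activations and instead scaling the convolution weights directly ("modulation" and "demodulation"). It just goes to show that even "perfect" architectures have weird design flaws.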
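For the curious, here's the gist of StyleGAN2's weight modulation and demodulation as a NumPy sketch. Each input channel $i$ of a convolution kernel is scaled by a style value $s_i$, then each output channel $j$ is rescaled to unit norm: $w'_{ijk} = s_i \cdot w_{ijk}$ and $w''_{ijk} = w'_{ijk} \big/ \sqrt{\sum_{i,k} (w'_{ijk})^2 + \epsilon}$, where $k$ runs over kernel positions (here $w$ denotes the convolution weights, not the latent vector). Only the per-sample kernels are computed; the grouped convolution that applies them is omitted, and the shapes are illustrative.

```python
import numpy as np

def modulate_demodulate(weight, styles, eps=1e-8):
    """
    weight: shared conv kernel, shape (out_ch, in_ch, kh, kw)
    styles: per-sample scales s_i derived from w, shape (batch, in_ch)
    returns one kernel per sample, shape (batch, out_ch, in_ch, kh, kw)
    """
    # Modulate: scale every input channel's weights by that sample's style.
    w = weight[None] * styles[:, None, :, None, None]
    # Demodulate: give each output channel's kernel unit L2 norm, so outputs keep
    # roughly unit variance (assuming unit-variance inputs) without touching activations.
    norm = np.sqrt(np.sum(w ** 2, axis=(2, 3, 4), keepdims=True) + eps)
    return w / norm

rng = np.random.default_rng(0)
kernels = modulate_demodulate(rng.standard_normal((32, 16, 3, 3)),
                              rng.uniform(0.5, 2.0, size=(4, 16)))
print(kernels.shape)  # (4, 32, 16, 3, 3)
```

The design choice is the key: the normalization now relies on expected statistics baked into the weights rather than on the actual feature maps, so there's nothing for a droplet-style spike to game.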

The Computation Problem Nobody Likes to Talk About

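Building a style-based generator architecture isn't cheap. NVIDIA's own 1024×1024 FFHQ models took on the order of a week or more to train on a server with eight V100 GPUs. Newer A100s and H100s help, but this is still data-center hardware.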

If you're a hobbyist at home with a single GPU, you’re probably not going to train a high-res StyleGAN from scratch. You’re going to use "transfer learning." You take a model that already knows what a face looks like and you "finetune" it to look like something else—like anime characters or oil paintings.

It’s efficient. But it also means the AI carries the "biases" of the original dataset. If the original model was trained on 70,000 Flickr portraits, your "oil painting" version may still look suspiciously like those photos, down to their poses and demographics.

StyleGAN3 and the Battle Against "Aliasing"

In 2021, NVIDIA dropped StyleGAN3. It was a massive leap because it addressed a specific annoyance: "texture sticking."

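In older versions, if you animated a face moving, details like skin pores and hair would sometimes stay glued to fixed screen coordinates while the face moved underneath them, as if they were painted on the glass instead of the skin. StyleGAN3 traced this to aliasing inside the network and redesigned the generator to treat its internal signals as continuous, with careful low-pass filtering around every upsampling step and nonlinearity. Hence "alias-free."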
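To see what "filtering the nonlinearity" means, here's a 1D toy in NumPy. StyleGAN3 applies each nonlinearity at a higher sampling rate: upsample (here by 2×), apply leaky ReLU (which creates new high frequencies), low-pass filter, then downsample. This sketch uses a single hypothetical Kaiser-windowed sinc filter with a fixed cutoff; the real network works in 2D with per-layer filter designs, so don't read these parameters as StyleGAN3's.

```python
import numpy as np

def lowpass_kernel(cutoff, taps=33, beta=8.0):
    """Kaiser-windowed sinc low-pass; cutoff is a fraction of Nyquist (0 to 1)."""
    n = np.arange(taps) - (taps - 1) / 2
    h = cutoff * np.sinc(cutoff * n) * np.kaiser(taps, beta)
    return h / h.sum()  # unit gain at DC

def upsample2(x, h):
    # Zero-stuff to twice the sampling rate, then interpolate with the low-pass filter.
    up = np.zeros(2 * len(x))
    up[::2] = x
    return 2.0 * np.convolve(up, h, mode="same")  # x2 restores the amplitude lost to zeros

def downsample2(x, h):
    # Remove content above the lower rate's Nyquist limit, then keep every other sample.
    return np.convolve(x, h, mode="same")[::2]

def filtered_leaky_relu(x, slope=0.2):
    """Leaky ReLU applied at 2x the sampling rate; x should be longer than the filter."""
    h = lowpass_kernel(cutoff=0.5)
    y = upsample2(x, h)
    y = np.where(y >= 0, y, slope * y)  # the nonlinearity creates new high frequencies...
    return downsample2(y, h)            # ...which are filtered out before resampling

n = np.arange(128)
x = 2.0 + 0.5 * np.sin(2 * np.pi * 3 * n / 128)  # smooth, strictly positive test signal
y = filtered_leaky_relu(x)
```

For this strictly positive signal the leaky ReLU acts as the identity, so away from the edges `y` comes back almost equal to `x`. Feed it a signal that crosses zero to see the filtering actually do work.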

This matters for movies. For VR. For anything where the image needs to move. If the textures don't stick to the geometry, the illusion of reality shatters instantly.

What’s the Catch?

The elephant in the room is ethics. A style-based generator architecture is the engine behind some of the most convincing deepfakes on the internet. While companies like Adobe and NVIDIA are backing "Content Authenticity" initiatives, the genie is out of the bottle.

We also have to deal with the "uncanny valley." As these models get better, they sometimes hit a point where they are too symmetrical or too clean. Human faces have imperfections that GANs sometimes struggle to replicate naturally without looking like a digital filter.

Getting Hands-On: Your Next Steps

If you actually want to use this stuff, don't just read about it. The math is dense, but the implementation is surprisingly accessible thanks to the open-source community.

- Browse the Latent Space: Go to StyleGAN-themed sites and look at the "interpolation" videos. Watch how one face melts into another. That’s the $W$ space in action.

- Run a Colab Notebook: You don't need a supercomputer. Look for "StyleGAN2-ADA" notebooks on Google Colab. You can upload 500 pictures of your cat and see what the architecture makes of them.

- Study the Discriminator: Most people focus on the Generator, but the Discriminator is the "teacher." If the teacher is bad, the student never learns. Look into how R1 regularization keeps the training stable.

- Check out Diffusion: Just a heads-up: while GANs are incredible for speed and specific styles, "Diffusion Models" (the approach behind tools like Midjourney and DALL-E 2 and 3) are currently winning the popularity contest for general art. However, because a GAN produces an image in a single forward pass, it still has the edge for real-time video and high-speed generation.
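As a footnote to the R1 tip above: R1 penalizes the discriminator's gradient on real images, $\frac{\gamma}{2}\,\mathbb{E}_{x \sim p_{\text{data}}}\big[\lVert \nabla_x D(x) \rVert^2\big]$, which discourages it from reacting sharply to tiny changes in real data. Real training code gets $\nabla_x D$ from autograd; to stay dependency-light, this sketch uses a hypothetical one-hidden-layer discriminator whose gradient can be written by hand, and $\gamma = 10$ is just a placeholder value.

```python
import numpy as np

def toy_discriminator(x, W, v):
    # Hypothetical one-hidden-layer critic: D(x) = v . tanh(W x), one score per row of x.
    return np.tanh(x @ W.T) @ v

def toy_discriminator_grad(x, W, v):
    # Analytic input gradient: dD/dx = W^T (v * (1 - tanh(W x)^2)), one row per sample.
    h = np.tanh(x @ W.T)
    return (v * (1.0 - h ** 2)) @ W

def r1_penalty(x_real, W, v, gamma=10.0):
    """R1 = gamma/2 * E[ ||grad_x D(x)||^2 ], evaluated on real samples only."""
    g = toy_discriminator_grad(x_real, W, v)
    return 0.5 * gamma * np.mean(np.sum(g ** 2, axis=1))

rng = np.random.default_rng(0)
W, v = rng.standard_normal((16, 8)), rng.standard_normal(16)
x_real = rng.standard_normal((32, 8))
penalty = r1_penalty(x_real, W, v)  # added to the discriminator's loss
```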

The style-based generator architecture isn't just a footnote in AI history. It’s the reason digital humans look the way they do today. Whether you're a dev or just curious, understanding that mapping network is the key to seeing how machines finally learned to be creative.

Stay curious. Keep breaking things. It’s the only way to actually learn how the "magic" works.