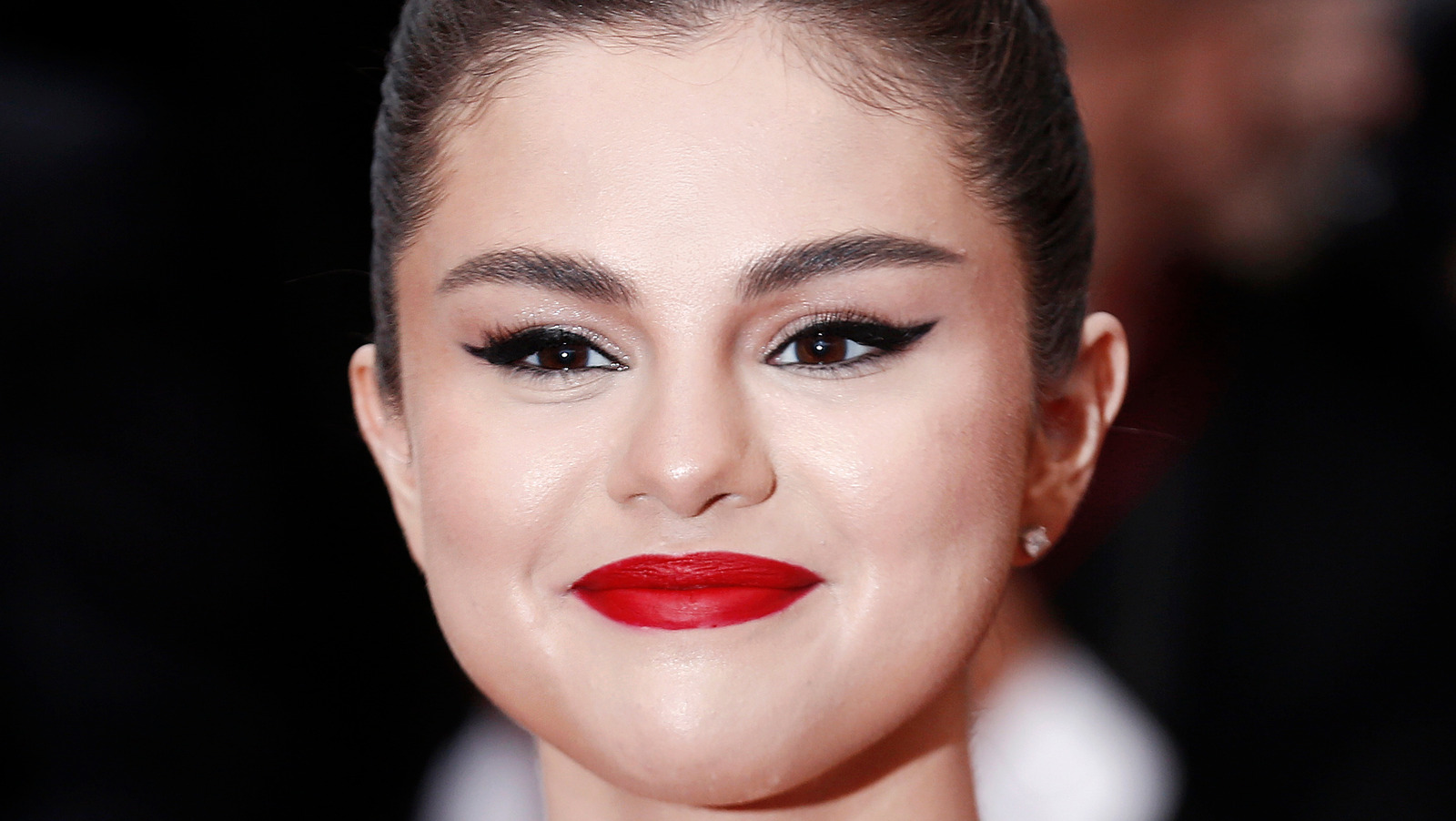

You’ve probably seen the headlines or stumbled across a thumbnail that made you do a double take. It looks exactly like her. The same dark, arched eyebrows. The signature pout. That specific way she tilts her head. But it isn't her. Not really. The "porn Selena Gomez look alike" phenomenon has exploded from a niche corner of the internet into a full-blown digital crisis that involves everything from sophisticated AI deepfakes to look-alike performers specifically marketed to capitalize on her "Only Murders in the Building" fame.

Honestly, it’s a mess.

We aren't just talking about a coincidence anymore. In the old days of the adult industry, you had "parodies" where someone with a passing resemblance would put on a wig. Now? We have algorithms that can map Selena’s face onto another body with such precision that it’s almost impossible for the average person to spot the seams. It’s a strange form of parasocial exploitation that raises massive questions about consent, digital identity, and why our laws are struggling to keep up with 2026 technology.

The Reality Behind the Look-Alike Trend

The adult industry has always chased celebrity aesthetics. It’s basic supply and demand. If a star is trending, the search volume for their "leaked" content skyrockets. Since Selena Gomez is one of the most followed humans on the planet, she’s a primary target. But here’s what most people get wrong: there’s a huge difference between a human performer who happens to look like her and the flood of AI-generated content.

Human look-alikes have existed for decades. Performers like Raquel Roper have been caught in the crossfire. Back in 2019, Roper actually discovered a trailer for one of her own films where her face had been digitally replaced with Selena’s without her knowledge. Think about that for a second. The actual performer was being erased, and the celebrity was being exploited. It’s a double violation.

Fast forward to today, and the "porn Selena Gomez look alike" search isn't just leading people to look-alikes; it's leading them to deepfake marketplaces.


In early 2024, a massive scandal broke when thousands of AI-generated, sexually explicit images of celebrities—including Selena Gomez and Taylor Swift—were found for sale on eBay. People were literally profiting from non-consensual digital clones. It wasn't just a few hobbyists on a forum; it was a commercialized industry. These "look-alikes" are often just digital masks stretched over someone else's life.

Why This Matters More Than You Think

It’s easy to shrug this off as "celebrity problems." You might think, well, she's famous and rich, who cares? But the technology being used to create a porn Selena Gomez look alike is the same tech being used to harass high school students and coworkers. Selena is just the high-profile proof of concept.

The psychological toll is real.

Activists like Noelle Martin have pointed out that this isn't just about "fakes." It’s about the dehumanization of a person’s image. When an AI can generate a convincing video of a star, it erodes the very idea of bodily autonomy. If you can’t control your own face, what do you actually own?

The Tech Gap

- Generative AI: Tools like Grok or open-source Stable Diffusion models have been manipulated to bypass "safety" filters.

- Face-Swapping: Software that used to take a Hollywood budget now runs on a mid-tier gaming laptop.

- Voice Synthesis: It’s not just the look; 2026 AI can mimic her rasp and cadence convincingly.

The adult industry has seen a rise in "AI performers" who aren't even based on real people but are "trained" on celebrity datasets. They are essentially digital chimeras designed to look 80% like Selena Gomez and 20% like a generic model to avoid immediate legal takedowns. It’s a loophole that’s driving her legal team crazy.


New Laws and the Fight for Digital Identity

For a long time, the internet was the Wild West. If a deepfake of you appeared, you had almost no recourse unless you could prove "right of publicity" violations, which is a slow, expensive civil process. But things are finally shifting.

In May 2025, the TAKE IT DOWN Act was signed into law. This was a massive turning point. It made it a federal crime to "knowingly publish" non-consensual intimate imagery, explicitly including AI-generated deepfakes. It also requires covered platforms, such as X (formerly Twitter) and Reddit, to remove this content within 48 hours of a valid report.

Then came the DEFIANCE Act of 2026.

This one is the real kicker. It allows victims—whether they are a global superstar or a private citizen—to sue the individuals who generate the images for statutory damages up to $150,000. You don't just go after the website anymore; you go after the "creep" in the basement making the content.

Selena herself has been quiet on the specifics of these cases, but the strategy many of her peers are adopting is clear. Like Matthew McConaughey, who recently secured landmark trademarks for his "human brand," many stars are now treating their faces like corporate logos. If you use a Selena Gomez look-alike in a commercial adult context, you aren't just violating her privacy; you could also be infringing on a trademarked identity.


Spotting the Fake: How to Tell

If you're browsing the web and see something that claims to be a "leaked" video, it's almost certainly a fake. Here is how the tech usually gives itself away:

- The Blink Test: Many AI models still struggle with natural blinking patterns. The eyes often look "glassy" or static.

- Edge Distortion: Look at the jawline or where the hair meets the forehead. If there’s a slight "shimmer" or blurring when the person moves quickly, it’s probably a face-swap.

- Ear Inconsistency: AI models often struggle with ears. They may look asymmetrical or seem to melt into the neck.

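- Lighting Mismatch: Check whether the lighting on the face matches the body. In a face-swap, the face may be perfectly lit while the body is in shadow, and shadows often fail to move naturally across the facial features as the head turns.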

What Should You Actually Do?

If you stumble upon this kind of content, don't share it. Even "outrage sharing" to point out how bad it is just feeds the algorithms and gives the creators the engagement they want.

If you or someone you know is being targeted by look-alike or deepfake harassment, there are actual tools now. Organizations like StopNCII.org use "hashing" technology: a digital fingerprint of the image is created on your own device, and only that fingerprint is shared with participating platforms, which can then detect and block matching uploads before they spread.
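StopNCII.org's own algorithm isn't covered here, so the sketch below is only a generic illustration of the fingerprinting idea, using a common perceptual-hashing technique called a "difference hash" (dHash). It assumes the photo has already been converted to grayscale and shrunk to 8 rows of 9 brightness values (real tools do that step with an imaging library). The function names `dhash`, `hamming`, and `is_match`, and the 10-bit match threshold, are hypothetical choices for illustration. What it shows is that a platform only needs a 64-bit fingerprint, not the photo itself, to recognize a re-upload.

```python
# Generic "difference hash" (dHash) sketch: an illustration of perceptual
# hashing, NOT StopNCII.org's actual algorithm.
# Assumption: the image is already grayscale and resized to 8 rows x 9 columns
# of brightness values (0-255).


def dhash(pixels: list[list[int]]) -> int:
    """Return a 64-bit fingerprint: one bit per adjacent-pixel comparison."""
    if len(pixels) != 8 or any(len(row) != 9 for row in pixels):
        raise ValueError("expected 8 rows of 9 grayscale values")
    bits = 0
    for row in pixels:
        for left, right in zip(row, row[1:]):
            # Set the bit when brightness increases from left to right.
            bits = (bits << 1) | (1 if right > left else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def is_match(a: int, b: int, threshold: int = 10) -> bool:
    """Hypothetical rule: fingerprints within `threshold` bits count as a match."""
    return hamming(a, b) <= threshold


if __name__ == "__main__":
    # A made-up 8x9 "image" and a uniformly brightened copy (no clipping at 255).
    original = [[(r * 9 + c) * 7 % 200 for c in range(9)] for r in range(8)]
    brighter = [[v + 20 for v in row] for row in original]
    h1, h2 = dhash(original), dhash(brighter)
    print(f"{h1:016x} {h2:016x} match={is_match(h1, h2)}")
```

Because each bit records only whether brightness rises or falls between neighboring pixels, a uniform brightness shift that doesn't clip at pure white leaves the fingerprint unchanged, while a genuinely different image (for example, a mirrored copy) lands far outside the threshold.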

Next Steps for Protecting Your Own Image:

- Audit your "Public" Photos: High-resolution, front-facing photos are the "training data" for deepfakes. If your Instagram is public, an AI can clone you in minutes.

- Use Watermarking: If you're a creator, subtle digital watermarks can make it harder to reuse your face cleanly and easier to show where an image originally came from.

- Stay Informed on Legislation: Keep an eye on the DEFIANCE Act and local "Right of Publicity" laws. For once, the law is starting to catch up with the technology.

- Report Violations: Use each platform's reporting tools and specifically cite "Non-Consensual Intimate Imagery." Most sites now have a dedicated fast-track for these reports thanks to the 2025 federal mandates.

The "porn Selena Gomez look alike" trend isn't going to vanish overnight. As long as there is money to be made from her likeness, people will try to exploit it. But with new federal criminal penalties and the ability to sue for six-figure sums, the risk-reward ratio is finally starting to flip. We're moving toward a world where your digital face is as protected as your physical body. Or at least, that’s the goal.