It’s kind of wild to think about, but the phone sitting in your pocket right now has more raw processing power than the entire guidance system used to land the Apollo 11 mission on the moon. Honestly, it’s not even a close competition. We aren't just talking about a slight upgrade. We are talking about a roughly million-fold increase in performance. This isn't some random accident of history. It’s the result of a very specific, very famous observation made back in the mid-sixties that we’ve come to know as Moore’s Law.

Most people think Moore’s Law is some kind of physical law, like gravity or thermodynamics. It’s not. It was actually just a prediction—a bit of a hunch, really—made by a guy named Gordon Moore. In 1965, Moore was working as the director of R&D at Fairchild Semiconductor before he went on to co-found Intel. He noticed that the number of transistors engineers could cram onto a single silicon chip was doubling roughly every year. He wrote this down in a paper for Electronics magazine, and the world of computing was never the same again.

What is Moore's Law and why did it change everything?

By 1975, Moore tweaked his timeline slightly. He realized that doubling every single year was maybe a bit too aggressive, so he slowed the projection down to doubling every two years. That’s the version that stuck.
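To see why that tweak mattered, here is a minimal Python sketch of the doubling rule. The starting count of 1,000 transistors is a hypothetical round number, not a historical figure, and `projected_transistors` is just an illustrative helper.

```python
def projected_transistors(start_count: float, years: float, doubling_period_years: float) -> float:
    """Exponential growth: the count doubles once every `doubling_period_years`."""
    return start_count * 2 ** (years / doubling_period_years)


# Hypothetical starting chip with 1,000 transistors, projected 10 years ahead.
START_COUNT = 1_000
for period in (1, 2):
    count = projected_transistors(START_COUNT, 10, period)
    print(f"doubling every {period} year(s): {count:,.0f} transistors after 10 years")
```

Over ten years, doubling every year multiplies the count by 1,024, while doubling every two years multiplies it by 32. It's the same idea with wildly different endpoints.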

Transistors are basically microscopic light switches. They represent the 1s and 0s that make up all digital data. If you have more switches in the same amount of space, your computer can do more math, faster, while using less power. It sounds simple, but the implications are staggering. Because the growth is exponential rather than linear, the progress builds on itself like a snowball rolling down a mountain.

Think about it this way. If you take 30 linear steps, you’ve walked across your backyard. If you take 30 exponential steps, where each step is double the length of the previous one and the first is about a meter, you’ve covered roughly a billion meters. That’s enough to walk to the moon and back, with distance to spare. That’s why Moore’s Law feels so magical. It’s the reason why a computer that used to fill an entire air-conditioned room in the 1970s can now fit inside a smartwatch that tracks your heart rate while you sleep.
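Here is the backyard-versus-moon arithmetic as a small Python sketch. It assumes each linear step is one meter, the first exponential step is also one meter, and the Earth-Moon distance is its approximate mean of 384,400 km.

```python
MOON_DISTANCE_KM = 384_400  # approximate mean Earth-Moon distance


def linear_distance_m(steps: int, step_m: float = 1.0) -> float:
    # Every step has the same length.
    return steps * step_m


def exponential_distance_m(steps: int, first_step_m: float = 1.0) -> float:
    # Step k (counting from 0) is first_step_m * 2**k;
    # the geometric series 1 + 2 + 4 + ... sums to 2**steps - 1.
    return first_step_m * (2 ** steps - 1)


linear = linear_distance_m(30)
exponential = exponential_distance_m(30)
round_trip_m = 2 * MOON_DISTANCE_KM * 1_000

print(f"30 linear steps:      {linear:,.0f} m")
print(f"30 exponential steps: {exponential:,.0f} m")
print(f"Moon round trips:     {exponential / round_trip_m:.2f}")
```

Thirty doublings add up to 2^30 - 1 meters, about 1.07 million kilometers, which works out to roughly 1.4 round trips to the moon.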

The economics of shrinking silicon

One thing people often overlook is that Moore's Law isn't just about speed. It’s about money. Gordon Moore wasn't just talking about physics; he was talking about the "cost per component." He realized that as you make transistors smaller, they become cheaper to produce in bulk. This created a virtuous cycle in the tech industry.

- Companies make smaller transistors.

- Chips get more powerful and cheaper.

- More people buy computers.

- Companies use that profit to fund R&D for even smaller transistors.

Wash, rinse, repeat for sixty years. This cycle is exactly why technology is one of the few things in life that actually gets better while getting cheaper. Your car doesn't get 50% faster every two years for the same price, but for most of the last few decades, your laptop basically did.
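The "cost per component" idea can be sketched in a few lines of Python. This is a deliberately simplified model: it assumes a hypothetical chip that always costs $10 to make while its transistor count doubles every generation. In reality, the cost of processing a leading-edge wafer has risen over time, so treat the numbers as illustration only.

```python
def cost_per_transistor(chip_cost_usd: float, transistor_count: int) -> float:
    """Spread the cost of one chip evenly across all of its transistors."""
    return chip_cost_usd / transistor_count


# Hypothetical numbers: the chip always costs $10 to make,
# while its transistor count doubles each generation.
CHIP_COST_USD = 10.0
count = 1_000_000
for generation in range(4):
    each = cost_per_transistor(CHIP_COST_USD, count)
    print(f"generation {generation}: {count:>9,} transistors at ${each:.8f} each")
    count *= 2
```

Under these assumptions, each generation halves the cost of a single transistor, which is the money side of the cycle above.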

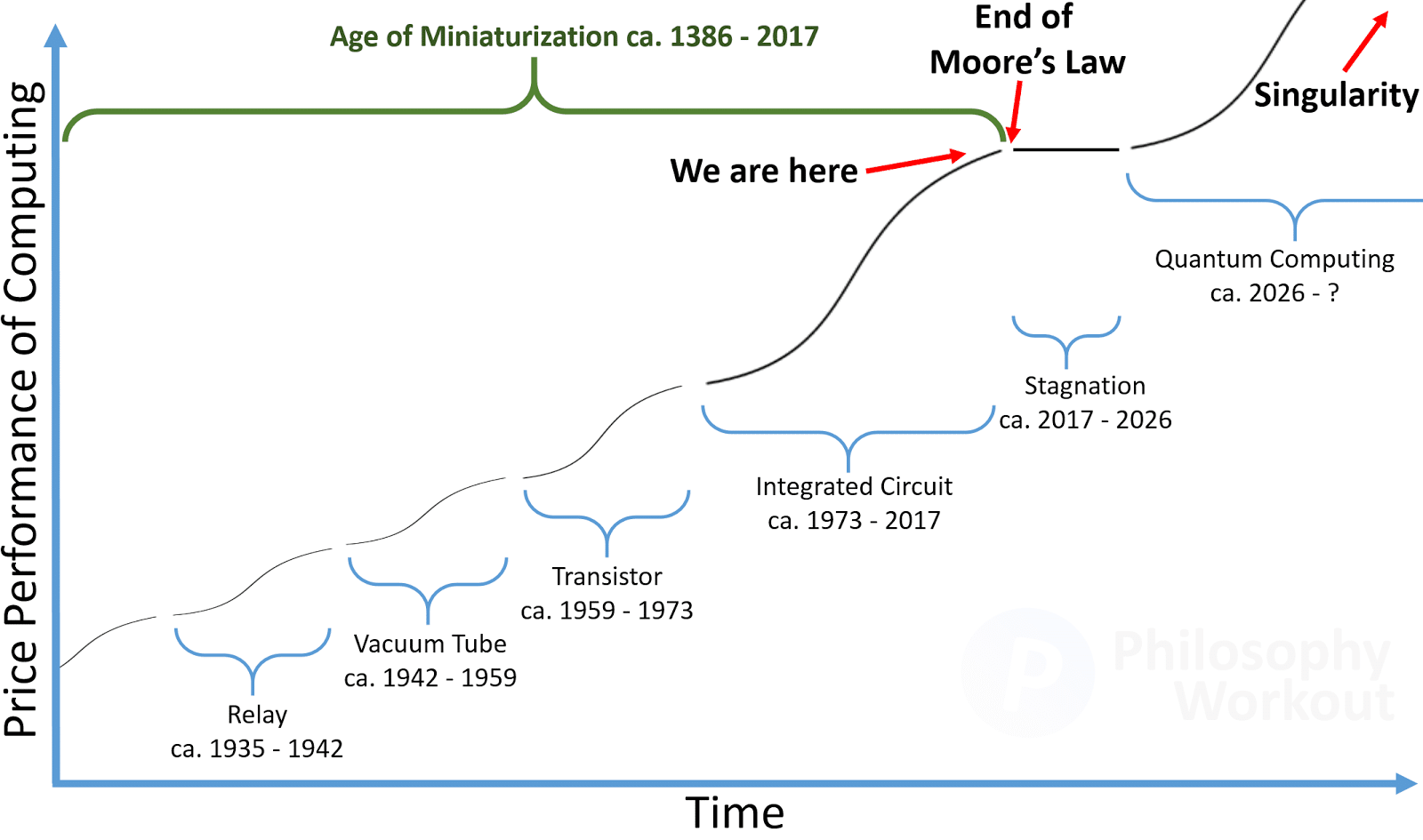

Is Moore's Law finally dead?

If you hang out in tech circles, you've probably heard someone declare that Moore's Law is dead. People have been saying this since the 90s. Even Jensen Huang, the CEO of Nvidia, famously declared it dead in 2022. And honestly? They kind of have a point, but it's complicated.

We are hitting some very real, very annoying walls of physics.

Right now, the most advanced chips are built on process nodes with names like "3 nanometer." Those names are now more marketing label than literal measurement, but some transistor features really are only a few nanometers across. For context, a strand of human DNA is about 2 nanometers in diameter. We are literally manipulating matter at the atomic level. When transistors get that small, weird things start to happen. Electrons start to "leak" straight through the insulating layers inside the transistor because those layers are only a few atoms thick. This is called quantum tunneling. It's basically like trying to keep water in a pipe that's made of tissue paper. It doesn't work very well.
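Why does thinning the walls make the leak so much worse? In the simplest textbook picture, the chance that an electron tunnels through a barrier falls off exponentially with the barrier's thickness, T ≈ exp(-2κd). The Python sketch below uses that rough approximation under loudly simplified assumptions: a rectangular barrier 3 eV high (an illustrative value), the free-electron mass, and no real device geometry. Modeling leakage in an actual transistor is far more involved.

```python
import math

# Physical constants (SI units).
HBAR = 1.054571817e-34          # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # free-electron mass, kg (simplifying assumption)
EV_TO_J = 1.602176634e-19       # joules per electron-volt


def tunneling_probability(barrier_nm: float, barrier_height_ev: float = 3.0) -> float:
    """Rough estimate T ~ exp(-2 * kappa * d) for a rectangular barrier.

    barrier_height_ev is the barrier height above the electron's energy;
    3 eV is an illustrative assumption, not a measured device value.
    """
    # Decay constant of the electron's wavefunction inside the barrier, in 1/m.
    kappa = math.sqrt(2 * ELECTRON_MASS * barrier_height_ev * EV_TO_J) / HBAR
    return math.exp(-2 * kappa * barrier_nm * 1e-9)


for thickness_nm in (3.0, 2.0, 1.0):
    print(f"{thickness_nm:.1f} nm barrier: T ~ {tunneling_probability(thickness_nm):.1e}")
```

In this toy model, each nanometer shaved off the barrier raises the estimated tunneling probability by many orders of magnitude, which is why leakage turns from a nuisance into a wall.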

Furthermore, the heat is a massive problem. When you pack billions of tiny heat-generating switches into a space the size of a fingernail, things get hot. Fast. If we had kept shrinking transistors and cranking up clock speeds at the old rate without changing the architecture, chips would have run far too hot to cool. This is why your CPU doesn't just keep getting "faster" in terms of gigahertz (GHz, its clock speed). If you look at the specs, clock speeds have hovered around 3GHz to 5GHz for well over a decade. Instead of making one "brain" faster, engineers started putting more brains (cores) on a single chip.
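The heat problem, and the move to multiple cores, can be illustrated with the classic estimate of a chip's switching power: P ≈ α·C·V²·f (activity factor × switched capacitance × voltage squared × clock frequency). The sketch below compares one hypothetical core at 4 GHz with two cores at 2 GHz each. The capacitance and voltages are made-up round numbers. It also assumes that the lower clock allows a lower supply voltage (0.8 V here) and that the workload splits perfectly across both cores.

```python
def dynamic_power(capacitance_f: float, voltage_v: float, frequency_hz: float,
                  activity: float = 1.0) -> float:
    """Classic CMOS switching-power estimate in watts: P = activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical core: 1 nF of switched capacitance at 1.0 V and 4 GHz.
C, V, F = 1e-9, 1.0, 4e9

one_fast_core = dynamic_power(C, V, F)
# Assumption: halving the clock lets each core run at a lower voltage (0.8 V).
two_slow_cores = 2 * dynamic_power(C, 0.8, F / 2)

print(f"one core  @ 4 GHz: {one_fast_core:.2f} W")
print(f"two cores @ 2 GHz: {two_slow_cores:.2f} W")
```

Both setups deliver the same total number of clock cycles per second. In this toy model, though, the two slower cores draw 2.56 W versus 4 W, because power grows with the square of the voltage.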

The shift from "smaller" to "smarter"

So, if we can't make the transistors much smaller, is the party over? Not exactly. The industry is just moving the goalposts.

Instead of just shrinking the 2D footprint, companies like TSMC, Intel, and Samsung are going 3D. They are stacking transistors on top of each other. It’s like moving from a sprawling suburban neighborhood to a high-rise skyscraper in Manhattan. You get more density in the same zip code.

They are also using new materials. Silicon has had a great run, but we are looking at things like graphene or gallium nitride to handle higher voltages and better heat dissipation. We are also seeing a huge rise in "domain-specific" chips. Instead of a general-purpose processor that’s okay at everything, we have AI chips (like Nvidia’s H100s) that are specifically designed to do the heavy lifting for neural networks.

Real-world impact you can actually see

It’s easy to get lost in the jargon, but Moore’s Law has real-world consequences that affect your daily life. It’s the reason why "smart" devices exist.

Back in the day, if you wanted to do voice recognition, you needed a server rack. Now, your toaster can probably do it. This explosion of the "Internet of Things" is a direct byproduct of Moore’s prediction. We’ve reached a point where we have so many transistors to spare that we put them in lightbulbs and refrigerators.

It also changed the way we build software. Software developers used to have to be incredibly stingy with code. Every byte mattered. Today? Not so much. Because hardware has become so powerful, developers can afford to write "heavy" code that focuses on user experience and features rather than just raw efficiency. It’s a bit of a double-edged sword, though—it's why some apps feel sluggish even on brand-new hardware.

The Environmental Elephant in the Room

We have to talk about the downside. This relentless pace of "newer, faster, better" creates a staggering amount of e-waste. Every time a new chip generation makes the old one obsolete, millions of devices end up in landfills. The energy required to manufacture these chips is also immense. Fabrication plants (fabs) use billions of gallons of water and massive amounts of electricity. As we push the limits of Moore’s Law, the environmental cost of that "extra 10% performance" is going up.

What comes after the silicon era?

We are approaching the endgame for traditional silicon. Many experts expect conventional silicon transistors to run into hard physical limits sometime in the 2030s. But that doesn't mean progress stops. It just means we need a new trick.

- Quantum Computing: Instead of bits (0 or 1), quantum computers use qubits, which can hold a superposition of both states. For certain kinds of problems, this could let them find answers that would take a traditional supercomputer thousands of years. It won't replace your laptop, but it could transform fields like drug discovery and encryption.

- Neuromorphic Computing: This is a fancy way of saying "chips that act like brains." Instead of rigid logic, these chips mimic the way human neurons fire, which could make AI far more energy-efficient.

- Optical Computing: Using light (photons) instead of electricity (electrons) to move data. Light can carry huge amounts of data with little loss, and it avoids much of the resistive heating that comes from pushing electrons through wires.
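Here is one way to see why qubits are hard to imitate on ordinary hardware. A classical simulation of n qubits has to track 2^n complex amplitudes. The sketch below assumes each amplitude is stored as a 16-byte complex number (two 64-bit floats) and simply counts the memory needed. It says nothing about which problems a real quantum computer can solve. It only shows how fast brute-force simulation blows up.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store all 2**n complex amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    print(f"{n:>2} qubits: {statevector_bytes(n):,} bytes")
```

Ten qubits fit in 16 KiB, 30 qubits need 16 GiB, and 50 qubits need 16 PiB. Every extra qubit doubles the total.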

Actionable Takeaways: What this means for you

Understanding Moore's Law helps you make better decisions as a consumer and a professional. You shouldn't just look at it as a history lesson.

- Don't overbuy hardware: Unless you are doing heavy video editing or AI training, you probably don't need the "latest and greatest" every year. The gains are becoming more incremental for average users.

- Focus on Software and Optimization: Since hardware gains are slowing down, the real "speed" in the next decade will come from better-written code and specialized AI acceleration.

- Invest in "Good Enough": We are in a plateau period for many devices. A high-end laptop from three years ago is still remarkably capable today, which wasn't true in the 90s.

- Watch the Cloud: Because local hardware is hitting limits, more "heavy lifting" is moving to data centers. For many tasks, reliable high-speed internet is becoming as important as the processor inside your actual device.

The spirit of Moore's Law—the idea that human ingenuity will always find a way to do more with less—is very much alive. Even if the physical shrinking of transistors stops, the evolution of how we use them is only just getting started.

Next Steps for Deepening Your Tech Knowledge:

- Audit your current hardware: Check if your bottleneck is actually your CPU or if it's simply RAM or slow storage.

- Research "System-on-a-Chip" (SoC) architecture to see how Apple and others are bypassing traditional limits by integrating components.

- Explore cloud computing options like GeForce NOW or AWS to offload demanding workloads.
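For the hardware audit, a quick first check on macOS or Linux is the system load average compared with the number of CPU cores. The Python sketch below uses only the standard library. `cpu_load_per_core` is a hypothetical helper name, and the "1.0 per core" threshold is a common rule of thumb, not a hard rule. On Linux the load average also counts tasks waiting on disk I/O, so a high number can point at slow storage too. Treat it as a rough first signal, not a diagnosis.

```python
import os
from typing import Optional


def cpu_load_per_core() -> Optional[float]:
    """1-minute load average divided by logical CPU count, or None if unavailable."""
    try:
        one_minute, _five, _fifteen = os.getloadavg()  # not available on Windows
    except (AttributeError, OSError):
        return None
    cores = os.cpu_count() or 1  # cpu_count() can return None
    return one_minute / cores


ratio = cpu_load_per_core()
if ratio is None:
    print("Load average is not available on this platform.")
elif ratio >= 1.0:
    print(f"Load per core is {ratio:.2f}: the CPU looks busy and may be your bottleneck.")
else:
    print(f"Load per core is {ratio:.2f}: the CPU has headroom, so check RAM or storage next.")
```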