Machine learning models are basically toddlers. You spend months teaching them exactly how the world works, feeding them data, and patting them on the back when they get a prediction right. Then you turn your back for five minutes, and suddenly, they’re eating paste.

That’s model drift.

It’s the silent killer of AI projects. Honestly, it’s the reason why that high-tech recommendation engine your company built last year is now suggesting winter parkas to people in Miami during a July heatwave. Everything was perfect in the lab. The accuracy scores were through the roof. But out in the wild? Reality changed, and your model didn't get the memo.

So, What Is Model Drift Anyway?

At its simplest, model drift is the decay of a machine learning model's predictive power due to changes in the environment. It isn't a bug in your code. The math is still doing exactly what you told it to do. The problem is that the relationship between your input data and the target you're trying to predict has shifted.

Think of it like a map. If you have a map of London from 1920, the map itself isn't "broken." The ink is still there. The paper is fine. But if you try to use it to find a Starbucks in 2026, you're going to have a bad time. The world moved; the map stayed still. That’s drift.

The Two Main Flavors of Decay

Most people in the industry, including researchers like those at Databricks or Google Cloud, split this headache into two primary categories: Concept Drift and Data Drift.

Concept Drift is the really sneaky one. This happens when the very definition of what you’re trying to predict changes. A classic example is fraud detection. In 2020, a "fraudulent transaction" might have looked like a sudden $5,000 purchase in a foreign country. But hackers are smart. They evolve. By 2026, fraud might look like a series of $2.00 transactions across forty different accounts. The concept of fraud has evolved. Your model is still looking for the $5,000 "big hit," so it misses the small-scale siphoning entirely.

Data Drift (or Feature Drift) is a bit more straightforward. The "logic" of the world remains the same, but the data coming into the system changes. Imagine a predictive maintenance model for a factory. It was trained on sensors from machines made in Germany. Suddenly, the factory replaces those parts with new ones from a supplier in Japan. The new sensors might measure temperature in Celsius instead of Fahrenheit, or they might just be more sensitive. The model gets confused because the "features" it's seeing now don't look like the "features" it saw during training.

Why This Actually Happens in the Real World

Things break. It’s a fact of life.

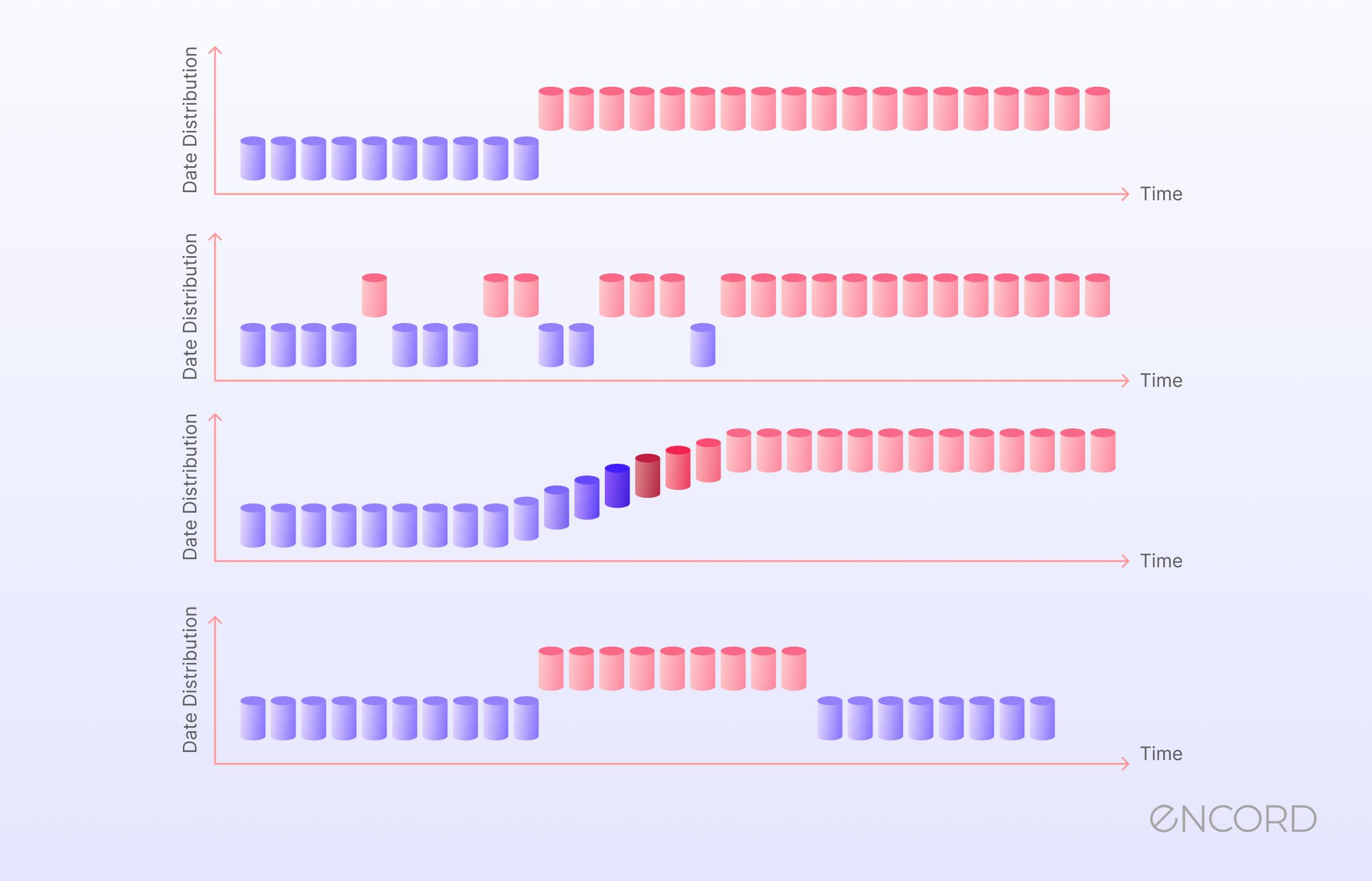

Sometimes drift is sudden. Think about March 2020. Almost every retail forecasting model on the planet broke overnight because of COVID-19. Nobody was buying office clothes; everyone was buying toilet paper and yeast. That’s a black swan event causing instantaneous drift.

Other times, it's a "gradual drift." This is more like a slow leak in a tire. Consumer preferences shift over years. Language evolves—slang that was "fire" three years ago is now "cringe." If you’re running a sentiment analysis model on social media posts and you haven't retrained it lately, it’s probably misreading everything.

There’s also seasonal drift. This is the one that trips up juniors. If you train a model on energy consumption data during the winter, it will be hilariously wrong when July hits. It’s not that the model is bad; it’s just that it’s a "winter specialist" being asked to play a summer game.

💡 You might also like: Why the blue thumbs up emoji is actually ruining your group chats

The High Cost of Ignoring the Decay

You might think, "Okay, so the accuracy drops from 95% to 88%. Big deal."

Actually, it is a big deal.

In healthcare, model drift in diagnostic AI can lead to missed tumors. In finance, it can lead to massive credit risks that go undetected until a bank's balance sheet is in shambles. Zillow’s famous "iBuying" collapse is often cited as a cautionary tale here. They used algorithms to flip houses, but the models couldn't keep up with the rapidly shifting real estate market dynamics in 2021. They ended up overpaying for thousands of homes, leading to a $400 million loss and massive layoffs.

That wasn't just a "math error." That was model drift meeting a lack of human oversight.

How to Spot Drift Before It Ruins Your Tuesday

You can't just wait for your customers to complain. By then, the damage is done. You need a monitoring strategy.

1. Statistical Checks

Experts like Chip Huyen, author of Designing Machine Learning Systems, often suggest using statistical tests to compare your training data distribution to your live production data.

- Kolmogorov-Smirnov (K-S) test: Good for seeing if two distributions differ.

- Population Stability Index (PSI): A classic in the banking world to see how much a distribution has shifted over time.

- Jensen-Shannon Divergence: A way to measure the "distance" between two probability distributions.

2. Performance Monitoring

This is the most obvious one. You track your F1 score, Precision, Recall, or Mean Absolute Error (MAE) in real-time. The catch? You often don't get "ground truth" labels immediately. If you predict whether a customer will churn, you won't actually know if you were right for 30 or 60 days. This lag is why statistical checks on the input data are often better early-warning signs than performance metrics.

3. Adversarial Validation

This is a clever trick. You try to train a new model to see if it can tell the difference between your training data and your current production data. If the new model can easily distinguish between them, you have drift. If the model is confused, your data is still relatively stable.

The Great Retraining Myth

"Just retrain the model every week!"

I hear this a lot. It sounds like a silver bullet, but it’s often a waste of money. Retraining is expensive. It requires compute power, engineer hours, and—most importantly—clean, labeled data.

Sometimes, retraining on the "new" data makes the model worse because the new data is just a temporary spike or noise. You don't want to retrain your stock market bot because of a one-day flash crash. You’d be "overfitting" to an anomaly.

Instead of blind retraining, smart teams use trigger-based retraining. You set a threshold. If the PSI hits 0.2, or if accuracy drops below a certain percentile for three consecutive days, then you kick off the pipeline.

💡 You might also like: Internet Connected Smoke Alarms: Why Your Old Beeping Detector is Actually Kinda Useless Now

Human-in-the-Loop: The Unsung Hero

We like to think AI is autonomous. It’s not.

The best defense against model drift is a human who actually understands the business. An analyst who says, "Hey, why are we suddenly rejecting every loan application from Ohio?" is worth more than ten automated dashboards.

There is a concept called Active Learning. This is where the model identifies the cases it's most "unsure" about and sends them to a human expert for labeling. This ensures that the model is constantly learning from the most difficult, most current examples, rather than just chewing on old data it already understands.

Actionable Steps to Protect Your Systems

If you're managing a model right now, don't wait for it to break. Start here:

- Audit your training data: Look at the date range. If your model was trained on data older than six months and your industry moves fast (like fashion or tech), you’re likely already drifting.

- Implement a "Shadow Model": Run your new, retrained model alongside the old one in production. Don't let it make decisions yet. Just compare its predictions to the old model. This "A/B testing" approach lets you see if the new model is actually better before you give it the keys to the kingdom.

- Check your data pipeline: Sometimes "drift" is actually just a broken sensor or a changed API schema. Before you blame the math, check the plumbing. If a field that used to be "0 or 1" is now "Yes or No," your model is going to have a meltdown.

- Document your assumptions: Write down what the world looked like when you built the model. "We assume interest rates stay between 3% and 5%." When those assumptions break, you know it's time to re-evaluate the model's validity.

The reality is that no model is "set it and forget it." In the world of machine learning, entropy always wins. Your job isn't just to build a great model; it's to build a system that knows when it's no longer great. Stop looking at your model as a static product and start treating it like a living organism that needs constant feeding, monitoring, and the occasional trip to the doctor.