Mathematics isn't really about numbers. It's about shorthand. If you had to write out "the square root of the sum of two squares" every time you wanted to find the hypotenuse of a triangle, you’d probably just give up and go into marketing. We use symbols because humans are fundamentally lazy, and symbols let us pack massive, complex ideas into tiny little ink marks. But here is the thing: if you don’t know the names of the symbols, the page looks like an alien manuscript.

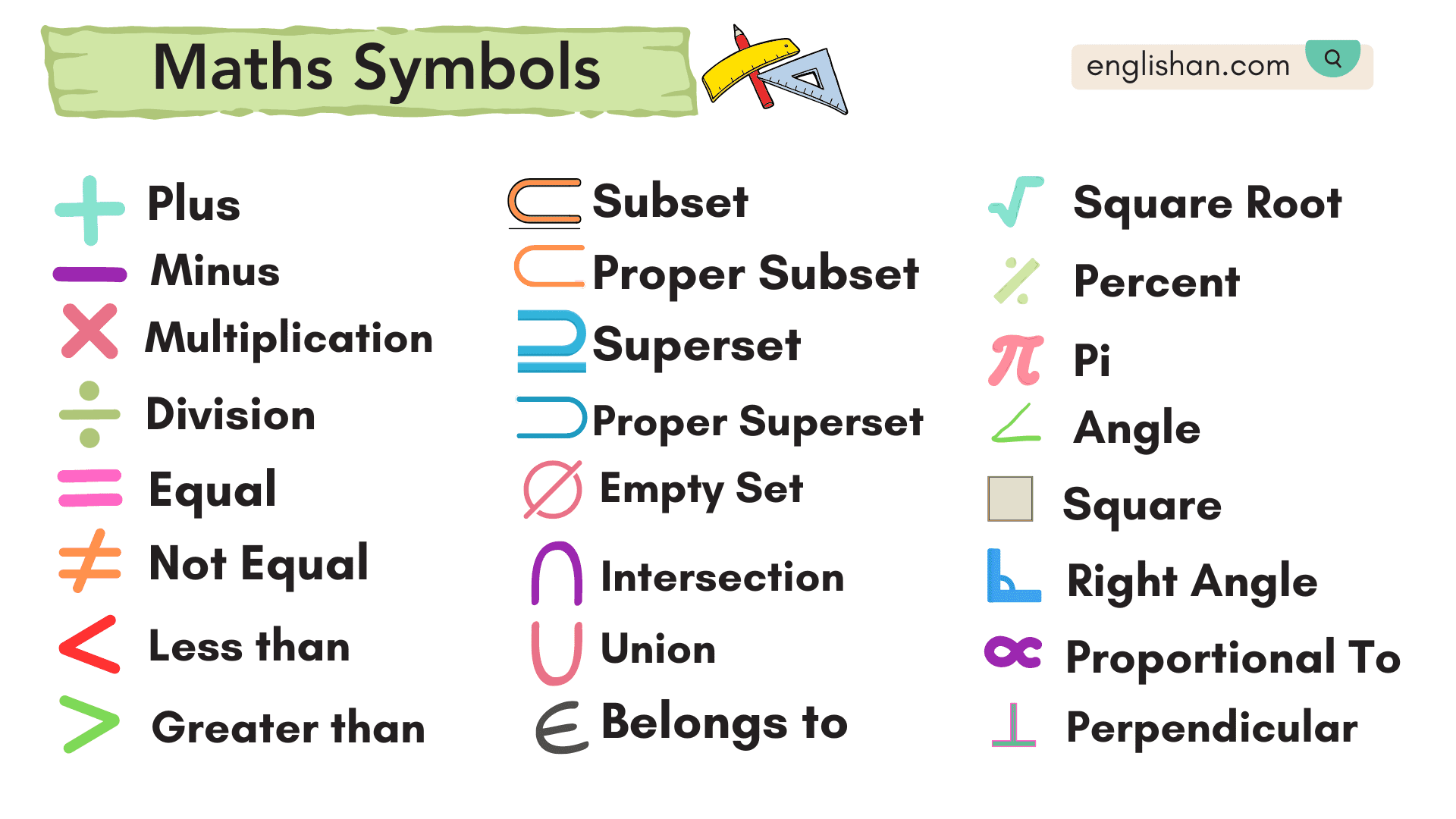

Most people stop learning new math symbols around age 16. You know the plus, the minus, maybe the "greater than" sign if you were paying attention. Then you hit college or a technical job and suddenly there’s a squiggle that looks like a dying snake (the integral) or a Greek letter that looks like a fancy 'E' (sigma). Honestly, it's intimidating. But these aren't just decorations. They are instructions.

Basic Arithmetic and The Equality Problem

We start with the "equals" sign ($=$). It’s two parallel lines. Simple, right? Robert Recorde invented it in 1557 because he was tired of writing "is equal to" over and over. He chose parallel lines because "no two things can be more equal."

Then you’ve got the plus ($+$) and minus ($-$). The plus is just a contraction of the Latin word "et," meaning "and." The minus sign's history is a bit murkier, likely coming from a bar written over a letter to show subtraction in merchant records.

Multiplication is where it gets messy. You have the cross ($\times$), which we call the "times" sign or the "multiplication sign." But in higher math, we ditch the cross because it looks too much like the letter $x$. We use a dot ($\cdot$) or just smash the letters together. Division follows a similar path. You have the obelus ($\div$), but most mathematicians just use a fraction bar or a slash ($/$).

- $<$ : Less than

- $>$ : Greater than

- $\neq$ : Not equal to

- $\approx$ : Approximately equal to (the "wavy" equals)

People often mix up the inequality signs. Just remember the "alligator" metaphor from primary school—the mouth always eats the bigger number. It’s a bit childish, sure, but it works even when you're doing complex calculus.
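In code, these comparison symbols become operators, and $\approx$ turns out to be surprisingly practical. Here is a minimal Python sketch, assuming ordinary floating-point numbers (the values are arbitrary examples): `!=` plays the role of $\neq$, and the standard library's `math.isclose` is one common way to say "approximately equal" when exact equality fails because of rounding.

```python
import math

a = 0.1 + 0.2
b = 0.3

# < and > work exactly as they do on paper
print(2 < 5, 5 > 2)        # True True

# = on paper becomes == in code; floating-point rounding makes this False
print(a == b)              # False

# "not equal to" is written != in Python
print(a != b)              # True

# "approximately equal to": equal within a relative tolerance (default 1e-09)
print(math.isclose(a, b))  # True
```

The last line is why $\approx$ matters outside the classroom: computers store most decimals only approximately, so "close enough" is often the honest comparison.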

The Greek Alphabet Takeover

If you're learning the names of math symbols, you eventually have to face the Greeks. Mathematics hijacked their alphabet centuries ago.

$\pi$ (Pi) is the celebrity of the group. It’s the ratio of a circle's circumference to its diameter. It's an irrational number, meaning its decimal expansion goes on forever without repeating. Most people know it as 3.14, but it’s actually an infinite mess of digits.

Then there’s $\Sigma$ (Sigma). When you see a giant $\Sigma$, it means "summation." It's an instruction to add up a whole bunch of numbers in a sequence. If you're into statistics or data science, you'll see this everywhere. Similarly, $\Delta$ (Delta) usually means "change." If you see $\Delta t$, it just means the change in time.
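To see that $\Sigma$ and $\Delta$ really are instructions, here is a small Python sketch. The expression $\sum_{i=1}^{5} i^2$ becomes a sum over the index $i$, and $\Delta t$ is simply a later value minus an earlier one. The time readings are made-up values for illustration.

```python
# Sigma: add up i^2 for i = 1..5, i.e. 1 + 4 + 9 + 16 + 25
total = sum(i ** 2 for i in range(1, 6))
print(total)    # 55

# Delta: change in a quantity = final value - initial value
t_start = 2.0   # hypothetical start time, in seconds
t_end = 7.5     # hypothetical end time, in seconds
delta_t = t_end - t_start
print(delta_t)  # 5.5
```

Note that `range(1, 6)` stops before 6, which matches the $i = 1$ to $5$ written below and above the $\Sigma$.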

$\theta$ (Theta) is the go-to symbol for an unknown angle. Why? Honestly, probably because it looks cool. It's the standard in trigonometry. If you’re measuring the slope of a roof or the trajectory of a rocket, you’re probably looking for $\theta$.
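As a concrete example of finding $\theta$, suppose a roof rises 4 units over a horizontal run of 12 (hypothetical measurements). Then $\tan\theta = \frac{4}{12}$, so $\theta = \arctan\left(\frac{4}{12}\right)$. A minimal Python sketch using the standard `math` module:

```python
import math

rise = 4.0   # hypothetical vertical rise of the roof
run = 12.0   # hypothetical horizontal run of the roof

# atan2(rise, run) returns the angle theta in radians
theta = math.atan2(rise, run)

# Convert to degrees, the unit most builders use
print(math.degrees(theta))  # about 18.43 degrees
```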

Sets, Logic, and Why They Matter

Logic symbols are the "grammar" of math. They tell you how ideas connect.

Take the "for all" symbol ($\forall$). It looks like an upside-down 'A'. It's used in formal proofs to say that something is true for every single member of a group. Its counterpart is the "there exists" symbol ($\exists$), which is a backwards 'E'. It means at least one thing in the group fits the description.
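Over a finite collection, Python's built-in `all` and `any` behave like $\forall$ and $\exists$. In the sketch below, the set of numbers $S$ is an arbitrary example: the first check reads "$\forall n \in S$, $n > 0$" and the second reads "$\exists n \in S$ such that $n$ is even".

```python
S = [3, 7, 10, 15]

# "for all n in S, n > 0"
every_positive = all(n > 0 for n in S)

# "there exists n in S such that n is even"
some_even = any(n % 2 == 0 for n in S)

# "there exists n in S such that n > 100"
some_huge = any(n > 100 for n in S)

print(every_positive, some_even, some_huge)  # True True False
```

This only works because $S$ is finite. A statement about every integer or every real number can't be checked with a loop; that's what a proof is for.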

Then you have set theory.

$\in$ means "is an element of."

$\subset$ means "is a subset of."

$\cup$ is the "union" (everything that is in either group).

$\cap$ is the "intersection" (only the stuff that overlaps).

Basically, if you’re trying to program an AI or organize a database, you are using set theory. You might not use the symbols in your daily Python code, but the logic is exactly the same. The symbols are just shorthand for that logic.
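Python's built-in `set` type lines up closely with this notation. In this minimal sketch with three arbitrary example sets, `in` plays $\in$, `<` tests for a proper subset (`<=` also allows the two sets to be equal), `|` is $\cup$, and `&` is $\cap$.

```python
A = {1, 2, 3, 4}
B = {3, 4, 5}
C = {1, 2}

print(2 in A)         # True             (2 ∈ A)
print(C < A)          # True             (C ⊂ A: all of C is in A, and C ≠ A)
print(sorted(A | B))  # [1, 2, 3, 4, 5]  (A ∪ B: in either set)
print(sorted(A & B))  # [3, 4]           (A ∩ B: in both sets)
```

The `sorted` calls are only there to make the printed order predictable; sets themselves have no order.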

The "Scary" Calculus Symbols

Calculus is usually where people tap out. But the symbols are actually quite descriptive once you stop panicking.

The integral symbol ($\int$) is basically just a stylized 'S'. It stands for "sum." It’s what happens when you try to add up an infinite number of infinitely small things to find the area under a curve.
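One way to see the "add up infinitely many tiny pieces" idea is to add up a finite number of thin rectangles and watch the total settle down as they get thinner. This sketch uses a midpoint Riemann sum (one of several ways to approximate an integral) for $\int_0^1 x^2\,dx$, whose exact value is $\frac{1}{3}$. The function names are illustrative.

```python
def riemann_midpoint(f, a, b, n):
    """Approximate the integral of f from a to b using n midpoint rectangles."""
    width = (b - a) / n
    # Each rectangle has height f(midpoint) and the same width; sum() adds them up
    return sum(f(a + (i + 0.5) * width) * width for i in range(n))

def square(x):
    return x * x

for n in (4, 100, 10_000):
    print(n, riemann_midpoint(square, 0.0, 1.0, n))
```

With 4 rectangles the result is 0.328125; as `n` grows, the printed values creep closer to $\frac{1}{3}$. The integral sign is what you get in the limit, when the rectangles become infinitely thin.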

Then there’s the "del" or "nabla" ($\nabla$). It looks like an upside-down triangle. Applied to a function, it gives the gradient. If you’re standing on a hill, the gradient points in the direction where the ground rises most steeply, and its size tells you how steep that climb is; the fastest way down is the exact opposite direction. It’s fundamental in physics, especially when dealing with electromagnetism or fluid dynamics.
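For a function of two variables, $\nabla f = \left(\frac{\partial f}{\partial x}, \frac{\partial f}{\partial y}\right)$. The sketch below estimates it numerically with central differences on a hypothetical "hill" $f(x, y) = -(x^2 + y^2)$, whose peak sits at the origin. The hill and the step size `h` are illustrative assumptions; for this function the exact gradient is $(-2x, -2y)$.

```python
def hill(x, y):
    # Hypothetical hill: height is highest (0) at the origin
    return -(x ** 2 + y ** 2)

def gradient(f, x, y, h=1e-5):
    """Estimate (df/dx, df/dy) at (x, y) with central differences."""
    dfdx = (f(x + h, y) - f(x - h, y)) / (2 * h)
    dfdy = (f(x, y + h) - f(x, y - h)) / (2 * h)
    return dfdx, dfdy

gx, gy = gradient(hill, 1.0, 2.0)
print(round(gx, 6), round(gy, 6))  # -2.0 -4.0
```

Standing at $(1, 2)$, the gradient $(-2, -4)$ points back toward the origin, which is uphill toward the peak; stepping along $-\nabla f$ instead takes you downhill fastest.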

$\infty$ is the infinity symbol. It’s a lemniscate. It’s not a number; it’s a concept. It represents something that grows without bound. You can't "reach" infinity, but you can let a quantity grow toward it and ask what an expression settles down to, which is one of the core ideas behind limits ($\lim$).
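As a concrete example of approaching without arriving, $\left(1 + \frac{1}{n}\right)^n$ never equals $e$ for any finite $n$, yet $\lim_{n \to \infty} \left(1 + \frac{1}{n}\right)^n = e$. This short Python sketch, with arbitrarily chosen values of $n$, prints how the gap shrinks as $n$ grows.

```python
import math

def approx_e(n):
    # (1 + 1/n)^n gets closer to e as n grows, but never equals it for finite n
    return (1 + 1 / n) ** n

for n in (1, 10, 1_000, 1_000_000):
    print(n, approx_e(n), "gap:", math.e - approx_e(n))
```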

Practical Cheat Sheet for Everyday Use

If you're reading a technical paper or trying to help a kid with homework, here’s a quick-fire list of the heavy hitters you’ll encounter.


$\therefore$ : Therefore. It’s three dots in a triangle. Use it when you’ve finished an argument and you’re about to drop the final answer.

$\because$ : Because. The upside-down version of "therefore."

$\pm$ : Plus or minus. Used when an answer could be either positive or negative, like in the quadratic formula.

$\sqrt{x}$ : Square root. This symbol is called a "radical." It asks: what non-negative number multiplied by itself gives me $x$?

$!$ : Factorial. No, the math isn't shouting at you. $5!$ means $5 \times 4 \times 3 \times 2 \times 1$.
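Three of these translate directly into Python's standard library. In the sketch below, the equation $x^2 - 5x + 6 = 0$ is an arbitrary example run through the quadratic formula $x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}$: the $\pm$ becomes two separate calculations, the radical is `math.sqrt`, and $!$ is `math.factorial`. The helper name `solve_quadratic` is hypothetical.

```python
import math

def solve_quadratic(a, b, c):
    """Return both roots of ax^2 + bx + c = 0 (assumes a != 0 and real roots)."""
    root = math.sqrt(b * b - 4 * a * c)  # the radical
    # The ± sign means "do it once with +, once with -"
    return (-b + root) / (2 * a), (-b - root) / (2 * a)

print(solve_quadratic(1, -5, 6))  # (3.0, 2.0)
print(math.sqrt(49))              # 7.0
print(math.factorial(5))          # 120, i.e. 5 × 4 × 3 × 2 × 1
```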

Why We Still Use These Symbols in 2026

In a world of Large Language Models and AI, you might think symbols are becoming obsolete. It’s actually the opposite. LaTeX, the standard language for typesetting math, names its commands after these symbols. If you want to tell an AI to generate a complex formula, you need to know that $\lambda$ is "lambda" and that $\partial$ is the "partial" symbol used for partial derivatives.

Mathematical notation is a universal language. A mathematician in Tokyo can read a paper by a mathematician in Berlin without speaking a word of German or Japanese. The symbols bridge the gap. They are about the purest form of communication we have because they leave far less room for the ambiguity of spoken language.

When you learn the names of these symbols, you aren't just memorizing vocabulary. You are gaining access to a toolkit that has been under construction for three thousand years.

Next Steps for Mastering Notation

- Print a physical reference sheet. Keep a list of the Greek alphabet and common set theory symbols on your desk. Don't rely on your memory; the visual association helps it stick over time.

- Learn LaTeX basics. If you do any technical writing, learn how to type these symbols using code (e.g., `\sigma` for $\sigma$). It forces you to learn the actual names of the characters.

- Read symbols aloud. When looking at an equation, don't just scan it. Say the names: "The integral of $f$ of $x$ with respect to $x$ from $a$ to $b$." It bridges the gap between the visual symbol and the logical concept.

- Use a math OCR tool. Apps like Mathpix let you take a photo or screenshot of a formula and convert it into LaTeX, which tells you the name of each symbol. This is the fastest way to learn on the fly when you encounter something weird in a PDF.
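To tie the LaTeX and read-aloud tips together, here is how a few of the symbols from this article might be typed in LaTeX, with the spoken form of each line as a comment. Most command names are simply the symbol's name (or a short form of it) with a backslash in front. These lines are meant to sit inside a math environment such as `$...$` or `\[ ... \]`.

```latex
% "The integral of f of x with respect to x from a to b"
\int_a^b f(x)\,dx

% "The sum from i equals 1 to n of x sub i"
\sum_{i=1}^{n} x_i

% "Sigma, lambda, theta, nabla, partial"
\sigma \quad \lambda \quad \theta \quad \nabla \quad \partial

% "For all x in A, there exists y in B"
\forall x \in A,\ \exists y \in B
```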