Math is just another language. Honestly, if you look at it that way, the scary-looking squiggles on a chalkboard start to feel a lot less like a threat and more like shorthand for people who were too lazy to write out long sentences. We use mathematical symbols and meanings to compress massive, universe-sized ideas into tiny little ink marks. But here is the thing: most of us stop learning these symbols after high school geometry, and that’s where the trouble starts.

If you can’t read the symbols, you can’t read the logic.

Think about the first time you saw a summation sign. That giant, jagged $\sum$. It looks like something out of a horror movie for anyone who struggled with algebra. But all it really means is "add everything up." That's it. We complicate things by assuming there is some mystical secret behind the notation, but usually, it's just a shortcut.
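To see how literally $\sum$ means "add everything up," here is a small Python sketch of $\sum_{i=1}^{n} i$ with $n = 100$. The `summation` helper is a made-up name for illustration. It does exactly what Python's built-in `sum()` already does.

```python
def summation(terms):
    """Read the Σ sign literally: start from zero and add each term in turn."""
    total = 0
    for term in terms:
        total += term
    return total

n = 100
# Σ_{i=1}^{n} i: add up i for i = 1, 2, ..., n
by_hand = summation(i for i in range(1, n + 1))
# Python's built-in sum() does the same job in one call
built_in = sum(range(1, n + 1))

print(by_hand, built_in)  # 5050 5050
```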

The Secret Life of Basic Notation

You’ve known the plus sign since you were five. But have you ever thought about why it looks like that? It’s actually a shorthand for the Latin word "et," which means "and." Over centuries of monks and scholars scribbling in the margins of manuscripts, the "e" and the "t" fused together into the cross we see today.

Then there is the equals sign ($=$). Robert Recorde, a Welsh physician and mathematician, invented it in 1557 because he was tired of writing "is equal to" over and over again. He chose two parallel lines because, in his words, "no two things can be more equal."

It’s almost poetic.

But as we move into higher-level mathematical symbols and meanings, the poetry gets a bit more abstract. Take the asterisk ($*$). In most programming languages it's the multiplication sign, since a standard keyboard has no $\times$ key and a letter "x" would be confused with the variable $x$. Elsewhere in computer science and logic, it takes on entirely different roles. Context is everything. If you see a dot ($\cdot$) between two numbers, it's multiplication. If you see it between two vectors in a vector calculus textbook, it's a dot product, which is a completely different beast involving angles and magnitudes.
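For vectors, the dot product multiplies matching components and adds the results. Geometrically it also equals $|\mathbf{a}||\mathbf{b}|\cos\theta$, which is where the angles and magnitudes come in. Here is a minimal Python sketch, assuming vectors are plain tuples of numbers (an illustrative choice, not a library API):

```python
import math

def dot(a, b):
    """Dot product: multiply matching components, then add them up."""
    return sum(x * y for x, y in zip(a, b))

def magnitude(v):
    """Length of a vector: the square root of its dot product with itself."""
    return math.sqrt(dot(v, v))

def angle_between(a, b):
    """Recover the angle in degrees from a·b = |a||b|cos(θ)."""
    cos_theta = dot(a, b) / (magnitude(a) * magnitude(b))
    # Clamp to [-1, 1] so tiny rounding errors can't break acos
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))

a = (1, 0)
b = (1, 1)
print(dot(a, b))            # 1
print(angle_between(a, b))  # approximately 45 degrees
```

Compare that with the ordinary dot between two numbers, where $3 \cdot 4$ is simply $12$: same mark, different operation.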

When Logic Meets Symbols

Logic symbols are where things get kinda weird for most people. If you’ve ever looked at a philosophy paper or a complex piece of code, you’ve probably seen the upside-down "A" ($\forall$) or the backwards "E" ($\exists$).

These are quantifiers.


- $\forall$ means "for all."

- $\exists$ means "there exists."

Imagine you are trying to prove that every person in a room has a phone. Instead of writing that whole sentence out, a mathematician would write something like $\forall x \in P, \text{hasPhone}(x)$. It’s cleaner. It’s faster. But if you don't know the code, it looks like gibberish.
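Programming languages have close counterparts to the quantifiers. In Python, `all()` plays the role of $\forall$ and `any()` plays the role of $\exists$. The room of people below and its phone data are invented for illustration.

```python
# A hypothetical room P: each person is a (name, has_phone) pair.
room = [
    ("Ana", True),
    ("Ben", True),
    ("Caro", False),
]

def has_phone(person):
    _name, phone = person
    return phone

# ∀x ∈ P, hasPhone(x): true only if every person has a phone
everyone_has_phone = all(has_phone(x) for x in room)

# ∃x ∈ P, hasPhone(x): true if at least one person has a phone
someone_has_phone = any(has_phone(x) for x in room)

print(everyone_has_phone)  # False (Caro has no phone)
print(someone_has_phone)   # True
```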

The "implies" arrow ($\rightarrow$ or $\Rightarrow$) is another big one. It’s the backbone of every "if-then" statement ever made. If it rains, then the ground is wet. $P \Rightarrow Q$. This is the stuff that drives the logic gates in your smartphone and the algorithms that decide what you see on social media. We aren't just talking about schoolwork here; we are talking about the literal architecture of the modern world.
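The arrow has a precise truth table that surprises many people: $P \Rightarrow Q$ is false only when $P$ is true and $Q$ is false. If it isn't raining, the statement "if it rains, then the ground is wet" isn't broken, whatever the ground looks like. This is the same as $\neg P \lor Q$, which the Python sketch below uses to print all four cases (the `implies` helper is an illustrative name):

```python
from itertools import product

def implies(p, q):
    """Material implication P ⇒ Q, computed as (not P) or Q."""
    return (not p) or q

# Print every combination of truth values for P and Q
for p, q in product([True, False], repeat=2):
    print(f"P={p!s:5}  Q={q!s:5}  P⇒Q={implies(p, q)}")
```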

Why the Greek Alphabet Hijacked Math

You can’t talk about mathematical symbols and meanings without mentioning the Greeks. Why do we use $\pi$? Why $\theta$?

Essentially, the early pioneers of modern mathematics were steeped in classical Greek and Latin, and Greek letters made a handy supply of extra symbols. Take $\pi$ for the ratio of a circle's circumference to its diameter. William Jones used it in 1706, and Leonhard Euler, arguably the most prolific mathematician ever to live, popularized it in the 18th century. Before the symbol caught on, people described the ratio in words or used clunky fractional approximations.

$\Delta$ (Delta) usually stands for "change." If you see $\Delta x$, it just means the change in the value of $x$. In physics, $\frac{\Delta v}{\Delta t}$ (change in velocity divided by change in time) tells you how fast a car is accelerating; in economics, $\Delta$ shows up when measuring how a shift in price affects demand.
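As a worked example with made-up numbers: a car that speeds up from 10 m/s to 30 m/s over 4 seconds has an average acceleration of

$$a = \frac{\Delta v}{\Delta t} = \frac{30\ \text{m/s} - 10\ \text{m/s}}{4\ \text{s}} = 5\ \text{m/s}^2$$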


Then you have $\infty$, sometimes called the lemniscate. John Wallis introduced it in 1655. It’s not a number; it’s a concept. That is a distinction that trips people up constantly. You can’t "reach" infinity. You can only approach it. When you see that sideways eight, you’re looking at a process that never ends.

The Symbols That Scare Everyone (But Shouldn't)

Let's talk about the big ones: Integrals ($\int$) and Derivatives ($\frac{dy}{dx}$).

Calculus is basically the study of change. The derivative symbol looks like a fraction, and in many ways, it behaves like one, but it represents a "rate." It’s how fast the "y" is changing compared to the "x."
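You can watch the "rate" idea numerically: over a very small step, the ratio $\frac{\Delta y}{\Delta x}$ gets close to $\frac{dy}{dx}$. This Python sketch uses a symmetric (central) difference on $f(x) = x^2$, whose exact derivative is $2x$. The example function and the step size `h` are arbitrary choices for illustration.

```python
def f(x):
    """Example function y = x^2 (its exact derivative is 2x)."""
    return x ** 2

def approx_derivative(func, x, h=1e-6):
    """Approximate dy/dx as Δy / Δx across a tiny step centered on x."""
    return (func(x + h) - func(x - h)) / (2 * h)

print(approx_derivative(f, 3.0))  # very close to 6.0, since d(x^2)/dx = 2x
```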

The integral symbol is actually just a long, stylized "S." It stands for "summa," or sum. It’s used to find the area under a curve. If you break an irregular shape into an infinite number of tiny rectangles and add their areas together, you get the total area. The $\int$ symbol is just a way of saying "add up all these tiny bits."
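The "tiny rectangles" picture translates almost directly into code. This sketch approximates the area under $y = x^2$ from $0$ to $1$ with a left Riemann sum. The exact answer is $\frac{1}{3}$, and the estimate creeps toward it as the number of rectangles grows. The function and interval are illustrative choices.

```python
def riemann_sum(func, a, b, n):
    """Approximate the integral of func from a to b by adding up n thin rectangles."""
    width = (b - a) / n
    # Each rectangle's height is the function's value at its left edge
    return sum(func(a + i * width) * width for i in range(n))

def f(x):
    return x ** 2

for n in (10, 100, 10_000):
    print(n, riemann_sum(f, 0.0, 1.0, n))  # approaches 1/3 as n grows
```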

It's actually quite beautiful when you realize that all of calculus is just fancy addition and subtraction performed on things that are infinitely small.

Common Misconceptions That Mess Everything Up

A huge mistake people make is thinking that a symbol always means the same thing.

It doesn't.


In some contexts, the exclamation point ($!$) means you’re excited. In math, it’s a factorial. $5!$ isn't a loud "five"; it’s $5 \times 4 \times 3 \times 2 \times 1$.

[Image: calculating 5 factorial]
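Writing the product out in code makes the definition concrete. This Python sketch builds $5!$ with a loop (the `factorial` helper is an illustrative name) and checks it against the standard library's `math.factorial`.

```python
import math

def factorial(n):
    """n! = n × (n-1) × ... × 2 × 1, with 0! defined as 1."""
    result = 1
    for k in range(2, n + 1):
        result *= k
    return result

print(factorial(5))       # 120
print(math.factorial(5))  # 120, the standard-library version
```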

Also, the way we write "division" changes depending on where you are in the world. The obelus ($\div$) is common in US elementary schools, but almost no professional mathematician uses it. They use the solidus ($/$) or just write things as fractions. The colon ($:$) is often used for ratios, but in some European countries, it’s the standard sign for division.

Then there's the "not equal to" sign ($\neq$). It’s simple, right? A slash through the equals sign. But in programming, that same concept might be written as `!=` or `<>`. If you're moving between math and tech, you have to be bilingual in symbols.

Practical Steps to Master Math Symbols

You don't need a PhD to stop being intimidated by these marks. If you're looking to actually improve your fluency with mathematical symbols and meanings, start with these specific actions:

- Learn the Greek alphabet: You don't need all 24 letters right away. Knowing alpha, beta, gamma, delta, epsilon, theta, lambda, pi, sigma, and omega will cover most of what you'll see in science and math.

- Translate symbols into plain English: When you see an equation, try to say it out loud as a sentence. Instead of just seeing $f(x) = y$, read it as "f of x equals y," meaning the function $f$ takes the input $x$ and gives back $y$. This grounds the abstract symbols in real-world logic.

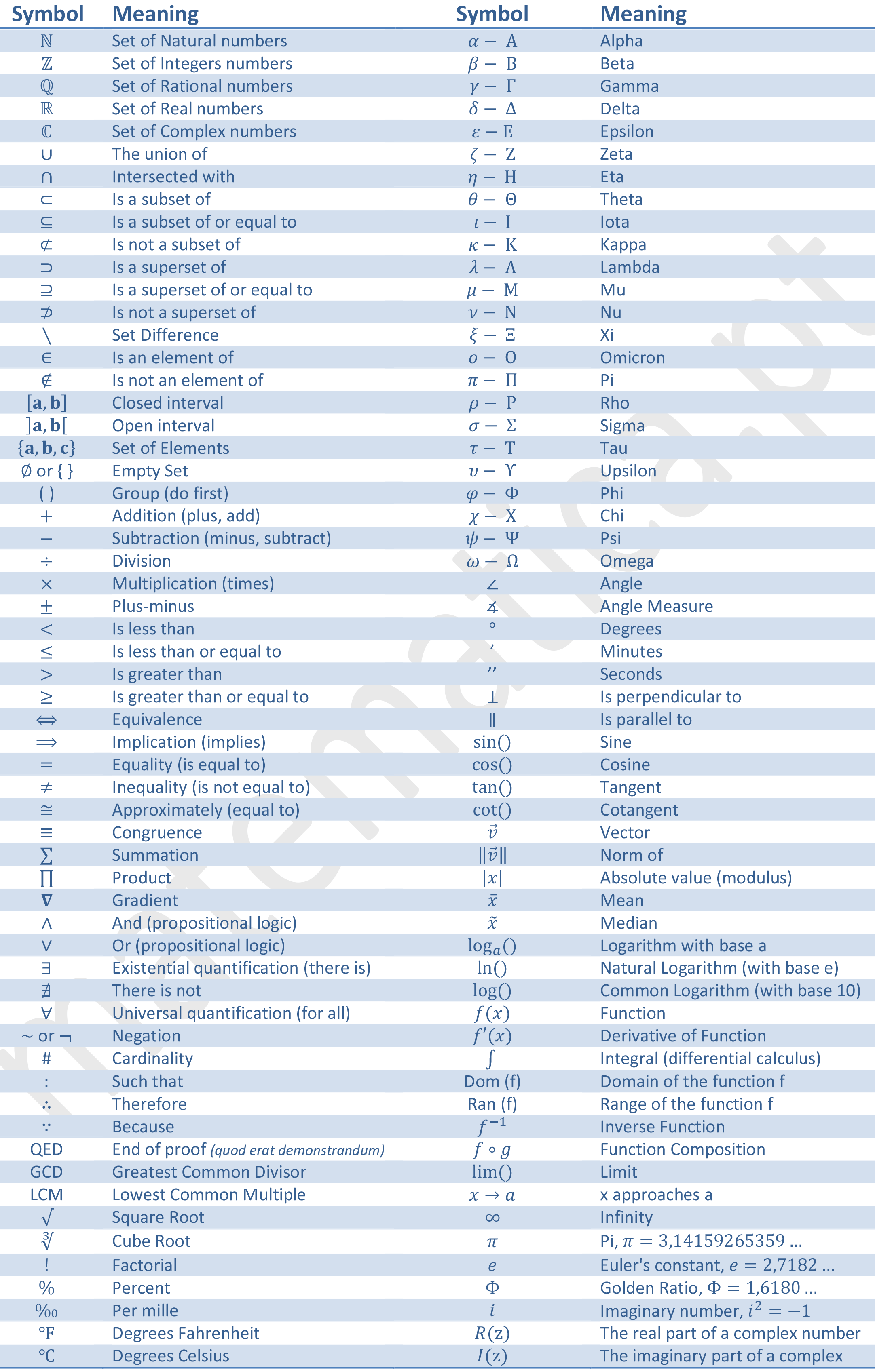

- Use a reference sheet: Don't try to memorize everything at once. Keep a "cheat sheet" of symbols nearby when you're reading technical papers. The Wolfram MathWorld site is an incredible resource for looking up obscure symbols.

- Focus on the "Why": Don't just learn that $\sqrt{}$ means square root. Understand that it’s asking: "What non-negative number multiplied by itself gives me the value inside?"

- Practice notation by hand: Many learners find that drawing a symbol helps it stick in a way that typing doesn't. If you're learning a new concept, write the equations out by hand rather than typing them.
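Two of the tips above translate neatly into code. A Python function is a direct reading of $f(x) = y$ ("f applied to x gives y"), and checking a square root means confirming that the answer, multiplied by itself, gives back the value inside. The function `f` below is an arbitrary example.

```python
import math

def f(x):
    """An example function f(x) = 2x + 1, read as "f of x equals 2x plus 1"."""
    return 2 * x + 1

y = f(3)
print(y)  # 7, so f(3) = 7

# √49 asks: "what non-negative number times itself gives 49?"
root = math.sqrt(49)
print(root, root * root == 49)  # 7.0 True
```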

Math notation isn't a barrier; it’s a tool. It was built by people who wanted to communicate complex ideas as efficiently as possible. Once you stop seeing symbols as "math" and start seeing them as "vocabulary," the whole world of logic opens up.

If you want to dive deeper, your next step should be looking into Set Theory. It’s the foundation of almost all modern math and uses some of the coolest symbols, like $\cup$ (union), $\cap$ (intersection), and $\subset$ (subset). Mastering those will give you a massive leg up in understanding how data is organized in the real world.
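Python's built-in `set` type has operators that mirror these symbols: `|` for $\cup$, `&` for $\cap$, and `<=` or `<` for subset tests. Authors differ on whether $\subset$ means "subset" ($\subseteq$, Python's `<=`) or "proper subset" (Python's `<`); the sketch uses the proper version. The two sets are invented for illustration.

```python
# Two hypothetical sets of people
likes_tea = {"Ana", "Ben", "Caro"}
likes_coffee = {"Ben", "Dev"}

# A ∪ B: everyone in either set
print(likes_tea | likes_coffee)   # Ana, Ben, Caro, Dev (print order may vary)
# A ∩ B: only the people in both sets
print(likes_tea & likes_coffee)   # {'Ben'}
# Proper subset: every element of the left set is in the right set, and they differ
print({"Ben"} < likes_tea)        # True
print(likes_coffee < likes_tea)   # False, because "Dev" is not in likes_tea
```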