You've probably been there. You're listening to a track (maybe a rare B-side or a classic 1970s soul record) and you think, "Man, I wish I could hear just the singer." Maybe you're a producer looking for that perfect acapella for a remix. Or maybe you just want to settle a bet about what the lyrics actually are. Whatever the reason, the quest to isolate vocals from song files used to be a fool's errand. It was messy. The results sounded like underwater garble.

Honestly? It's still kinda hard to get it perfect, despite what the "one-click" advertisements tell you.

Most people assume that there is some magic "undo" button in digital audio. They think the vocal is just a separate layer sitting on top of the music. In reality, once a song is mixed down to a stereo file, the vocal and the instruments are summed into the same waveform and baked together like eggs in a cake. You can't just "un-bake" the eggs without some serious science. But things changed around 2018, when deep-learning approaches to a problem called source separation started to hit the mainstream. Since then, we've moved from phase cancellation tricks to models that have learned what a human voice looks like in the data compared with, say, a snare drum.

The Old School Way vs. The AI Revolution

Back in the day, if you wanted to isolate vocals from song tracks, you relied on phase inversion. This basically involved taking a karaoke version (if you had it), flipping the polarity, and hoping the instruments cancelled out. If you didn't have the instrumental, you were stuck using "Center Channel Extraction." Since vocals are usually panned dead center, you'd tell the software to kill everything on the sides. The result? A thin, robotic vocal that sounded like it was being screamed through a tin can at the bottom of a swimming pool.
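
Both of those old-school tricks are just arithmetic on samples. Here's a toy sketch, assuming perfectly aligned tracks stored as plain lists of floats. The function names and the three-sample "song" are made up for illustration; real audio never lines up this neatly.

```python
def cancel_with_instrumental(mix, instrumental):
    """Phase inversion: flip the instrumental's polarity and sum it with the mix."""
    return [m + (-i) for m, i in zip(mix, instrumental)]


def split_mid_side(left, right):
    """Return (mid, side). Mid keeps centre-panned content, side cancels it."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side


# Toy song: vocal dead centre, guitar hard left, keys hard right.
vocal = [0.5, -0.25, 0.125]
guitar = [0.25, 0.25, -0.5]
keys = [-0.125, 0.5, 0.25]

# Trick 1: subtract a matching instrumental from a mono mix.
mono_mix = [v + g + k for v, g, k in zip(vocal, guitar, keys)]
instrumental = [g + k for g, k in zip(guitar, keys)]
recovered = cancel_with_instrumental(mono_mix, instrumental)

# Trick 2: mid/side split of a stereo mix.
left = [v + g for v, g in zip(vocal, guitar)]
right = [v + k for v, k in zip(vocal, keys)]
mid, side = split_mid_side(left, right)
```

In this idealized case `recovered` is exactly the vocal, which only works because the instrumental matches the mix sample for sample. The `side` signal contains no vocal at all (that's the karaoke effect), while `mid` still carries half of each instrument along with the voice, which is why center extraction never sounded clean.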

Then came Spleeter.

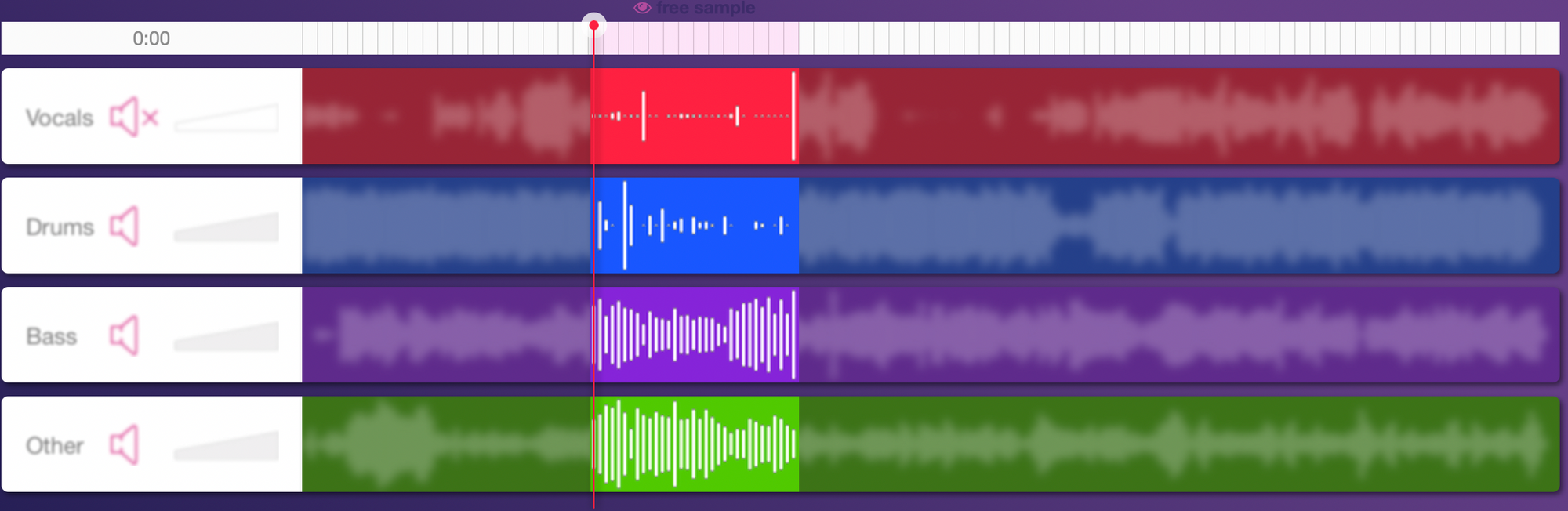

In 2019, the research team at Deezer released an open-source tool called Spleeter. It was a game-changer. Suddenly, anyone with a bit of command-line knowledge could split a song into two, four, or five "stems." It wasn't perfect, but it was light-years ahead of the old "OOPS" (Out Of Phase Stereo) effect. It used a U-Net architecture, a type of convolutional neural network. Basically, the AI was trained on thousands of songs where it had access to both the full mix and the isolated tracks. It learned to recognize the visual patterns in a spectrogram (a picture of frequency content over time) that represent a human voice.
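
To make the spectrogram idea concrete, here's a minimal sketch of the masking step that spectrogram-based separators rely on. It is not Spleeter's code. The magnitude estimates below are hard-coded stand-ins for what a trained network would predict, and `soft_mask` and `apply_mask` are hypothetical helper names.

```python
def soft_mask(vocal_est, accomp_est, eps=1e-10):
    """Ratio mask per time-frequency bin: the vocal's share of the estimated energy."""
    return [
        [v * v / (v * v + a * a + eps) for v, a in zip(v_row, a_row)]
        for v_row, a_row in zip(vocal_est, accomp_est)
    ]


def apply_mask(mix_mag, mask):
    """Scale each bin of the mixture spectrogram by its mask weight."""
    return [
        [m * w for m, w in zip(m_row, w_row)]
        for m_row, w_row in zip(mix_mag, mask)
    ]


# Two time frames, three frequency bins each (toy numbers).
mix_mag = [[1.0, 0.8, 0.2], [0.9, 0.1, 0.7]]
vocal_est = [[0.9, 0.0, 0.1], [0.6, 0.0, 0.7]]   # pretend network output
accomp_est = [[0.0, 0.8, 0.1], [0.3, 0.1, 0.0]]  # pretend network output

mask = soft_mask(vocal_est, accomp_est)
vocal_mag = apply_mask(mix_mag, mask)
```

Bins the model thinks are all voice get a weight near 1, bins it thinks are all instruments get 0, and contested bins land somewhere in between. Those in-between bins are where most of the audible leftovers come from.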

Today, the gold standard is arguably the Demucs model, developed by Alexandre Défossez at Meta AI. If you've used a website like LALAL.AI or Moises, there's a good chance the engine under the hood builds on the same ideas as these open-source models, even where the exact model is proprietary. These tools don't just "filter" the sound; they actually reconstruct the vocal based on what the AI predicts should be there. It's more like a restoration than a simple extraction.
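
If you'd rather run Demucs yourself than go through a website, it ships with a command-line tool. The sketch below only builds the command. It assumes Demucs is installed and on your PATH, the helper name `build_demucs_command` and the file name are hypothetical, and `htdemucs` is used here as the model name.

```python
import shlex


def build_demucs_command(track, out_dir="separated", model="htdemucs"):
    """Build a Demucs CLI call that splits a track into vocals and everything else."""
    return [
        "demucs",
        "--two-stems=vocals",  # only produce a vocal stem and a no-vocals stem
        "-n", model,           # which pretrained model to use
        "-o", str(out_dir),    # where the stems get written
        str(track),
    ]


cmd = build_demucs_command("my_song.wav")
print(shlex.join(cmd))

# To actually run it (needs Demucs installed):
# import subprocess
# subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string keeps file names with spaces from breaking the call.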

Why Your Isolations Still Sound Like Garbage

You try to isolate vocals from song files and it sounds... crunchy. Why? Artifacts.

Artifacts are the digital leftovers—the "ghosts" of the drums or the shimmering metallic noise that happens when the AI can't quite distinguish a high-frequency vocal fry from a hi-hat hit. This happens most often with older recordings. If you’re trying to pull a vocal from a 1940s jazz record, the AI is going to struggle because the noise floor is high and the vocal frequencies overlap heavily with the brass section.

Bitrate matters too. If you’re trying to rip a vocal from a 128kbps MP3 you found on a dusty hard drive, stop. The compression has already destroyed the data the AI needs to make a clean break. You need WAV or FLAC. High-quality source material is the difference between a pro-level acapella and something that sounds like a glitchy radio transmission.
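
To get a rough feel for how much a low-bitrate MP3 throws away, compare it with the raw data rate of uncompressed audio. This is back-of-the-envelope arithmetic only. MP3 is a perceptual codec, so the ratio is not a quality score, and the function name is made up for this example.

```python
def pcm_bitrate_kbps(sample_rate_hz, bit_depth, channels):
    """Data rate of uncompressed PCM audio in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000


cd_quality = pcm_bitrate_kbps(44_100, 16, 2)   # 16-bit, 44.1 kHz, stereo
hi_res = pcm_bitrate_kbps(48_000, 24, 2)       # 24-bit, 48 kHz, stereo

# How many times smaller a 128 kbps MP3 is than the CD-quality original.
ratio_vs_128 = cd_quality / 128

print(f"CD-quality WAV: {cd_quality:.1f} kbps")
print(f"24-bit/48 kHz WAV: {hi_res:.1f} kbps")
print(f"A 128 kbps MP3 keeps roughly 1/{ratio_vs_128:.0f} of the CD data rate")
```

The encoder has to decide which details to drop to hit that target, and the details it drops are often the same subtle cues a separation model leans on.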

Another big hurdle is reverb.

Reverb is the enemy of isolation. When a singer records in a big hall, that echo isn't just on the vocal; it's smeared across the entire frequency spectrum. Most AI models struggle to decide if the "tail" of a reverb belongs to the singer or the room. This is why you often hear a weird, watery echo trailing off even after the vocal has been "isolated." Some newer tools, like RipX DAW, allow you to manually go in and "paint" out these frequencies, but it takes a lot of patience.

Choosing the Right Tool for the Job

There isn't a one-size-fits-all solution here.

If you're a casual listener just wanting to hear a lyric, a browser-based tool like Vocal Remover (vocalremover.org) is fine. It’s fast. It’s free. It gets the job done. But if you are a professional DJ or a remixer, you need something with more "meat" on the bones.

- Ultimate Vocal Remover (UVR5): This is the holy grail for nerds. It's free, open-source, and runs locally on your computer. It gives you access to dozens of different models across several architectures (MDX-Net, VR Architecture, Demucs). You can "ensemble" them, meaning you run the song through several models and let the software combine their outputs into a single result. It's heavy on your GPU, but the results are among the best you can get without paying.

- SpectraLayers (Steinberg): This is for the surgeons. It treats audio like a photograph in Photoshop. You can see the vocals and literally "un-erase" parts that the AI missed.

- Moises.ai: Great for musicians. It’s an app that lets you mute the vocals in real-time so you can sing along. It’s less about "high-fidelity extraction" and more about "practice utility."

Honestly, I've found that running a track through UVR5 using the "Kim_Vocal_2" model (itself an MDX-Net model) usually yields the cleanest results for modern pop, whereas some of the other MDX-Net models tend to handle rock and distorted guitars a bit better.
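
The "ensemble" idea is easier to picture with numbers. Below is a minimal sketch that simply averages several vocal estimates sample by sample. This is plain averaging chosen for illustration, not a description of how UVR5 combines models internally, and the three "model outputs" are invented toy data.

```python
def average_ensemble(estimates):
    """Average several equal-length vocal estimates sample by sample."""
    if not estimates:
        raise ValueError("need at least one estimate")
    length = len(estimates[0])
    if any(len(e) != length for e in estimates):
        raise ValueError("all estimates must have the same length")
    return [sum(samples) / len(estimates) for samples in zip(*estimates)]


true_vocal = [0.5, -0.5, 0.25]

# Pretend each model gets the vocal right apart from its own small errors.
model_a = [0.56, -0.50, 0.19]
model_b = [0.44, -0.44, 0.25]
model_c = [0.50, -0.56, 0.31]

combined = average_ensemble([model_a, model_b, model_c])
worst_single_error = max(abs(a - t) for a, t in zip(model_a, true_vocal))
worst_combined_error = max(abs(c - t) for c, t in zip(combined, true_vocal))
```

When the models make different mistakes, the errors partly cancel and the combined estimate lands closer to the real vocal than any single model. When they all make the same mistake, averaging won't save you.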

The Ethics and Legality of the "Un-Mix"

We have to talk about the elephant in the room. Just because you can isolate vocals from song files doesn't always mean you should—at least not if you plan on releasing it.

The music industry is currently in a fever dream over AI. In 2023, we saw the "Heart on My Sleeve" Drake/The Weeknd AI track go viral, which sparked a massive legal debate. While isolating a vocal for personal use (like making a "clean" version for your kids or practicing guitar) is low-risk and may be covered by fair use or similar exceptions depending on where you live, using that isolated vocal in a commercial remix is a copyright minefield.

Sampling laws haven't changed just because the tech got better. You still need a mechanical license and a master use license if you're going to put that acapella on Spotify. The AI isn't "creating" a new vocal; it's extracting a copyrighted performance. Companies like Apple and Sony are already working on "watermarking" technology that can survive AI isolation, making it easier for them to track down unauthorized uses of their artists' stems.

Beyond the Vocal: The Future of Stem Separation

Where is this going? It's not just about the voice anymore. We are seeing a move toward "un-mixing" entire soundscapes.

Imagine being able to take a mono recording of The Beatles from 1963 and turn it into a full Dolby Atmos surround sound mix. Actually, you don't have to imagine it. Peter Jackson's team built this kind of de-mixing for the Get Back documentary, and it was used again for the "Now and Then" release. They used a proprietary AI they nicknamed "MAL" (after Mal Evans) to de-mix the messy rehearsal tapes.

This tech is democratizing music education. Bassists can solo the bass line of a Motown track to hear exactly what James Jamerson was doing. Drummers can remove the percussion to play along with the original masters. We are effectively entering an era where the "final mix" is no longer final.

Actionable Steps for Cleanest Isolation

If you want to try this yourself and get professional-grade results, don't just drag an MP3 into the first website you find. Follow this workflow:

- Source the highest quality file possible. A 24-bit WAV is the dream. A 320kbps MP3 is the bare minimum.

- Use Ultimate Vocal Remover (UVR5). It is free. If you have a decent PC with an NVIDIA card, use the MDX-Net models.

- Process in passes. Sometimes it's better to remove the drums first, then take that "no-drums" file and try to isolate the vocal from the remaining instruments.

- Post-process. Once you have your vocal, it will likely have some "fizz" in the high end. Use a de-esser or a dynamic EQ (like TDR Nova, which is free) to smooth out those digital artifacts.

- Check for "Bleed." Listen to the silent parts of the vocal track. If you hear a ghost of a snare drum, use a gate or manually cut those sections out.
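
The bleed check in the last step can be sketched as a very crude noise gate. This is a toy version that assumes the vocal is a list of float samples. Real gates smooth the transitions with attack and release times so the cuts don't click, and the threshold and window size here are arbitrary.

```python
import math


def gate(samples, threshold=0.02, window=4):
    """Silence any window whose RMS level falls below the threshold."""
    out = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        rms = math.sqrt(sum(s * s for s in chunk) / len(chunk))
        # Keep loud windows untouched, replace quiet ones with digital silence.
        out.extend(chunk if rms >= threshold else [0.0] * len(chunk))
    return out


# Sung phrase, then a quiet gap with faint snare bleed, then another phrase.
vocal_take = [
    0.4, -0.3, 0.5, -0.2,
    0.01, -0.005, 0.008, -0.01,
    0.3, 0.2, -0.4, 0.1,
]

gated = gate(vocal_take)
```

The two sung phrases pass through unchanged while the quiet gap in the middle is zeroed out. Set the threshold too high and you'll start chopping off breaths and the tails of words, so listen before you commit.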

Isolating vocals isn't just a gimmick anymore; it's a legitimate tool in the modern producer's kit. It takes a mix of the right AI model and some manual cleanup, but we've finally reached the point where the "un-mix" is a reality.