You’re sitting in a third-grade classroom. The teacher draws a long horizontal line on the chalkboard. There’s a big fat dot right in the middle labeled "0." To the right, the numbers grow into a land of "more." To the left, they shrink into a frozen tundra of "less." It seems so simple. But then the question hits: is zero positive or negative?

Most of us just assume it's one or the other, or maybe it's both? Honestly, it’s neither. Zero is the ultimate fence-sitter of the mathematical world. It’s the Switzerland of the number line.

In the world of real numbers, zero is defined as the additive identity. That's a fancy way of saying if you add it to anything, nothing happens. It doesn’t push the value toward the sun or pull it into the abyss. It just exists. It is an integer with no sign. While that might sound like a technicality, this weird "neutrality" is actually the backbone of everything from the GPS on your phone to the way your bank account calculates interest (or lack thereof).

Why we struggle with the "neutral" label

Humans love binaries. Left or right. Up or down. Win or lose. When we see a number, our brains instinctively want to put it into a category. Positive numbers represent "having" something—three apples, ten dollars, a hundred likes. Negative numbers represent a "debt" or a "deficiency"—owing five bucks or being ten floors below ground.

Zero is the absence. How do you categorize the absence of something?

Mathematically, a positive number is defined as any value $x$ such that $x > 0$. A negative number is any value $x$ such that $x < 0$. If you look at those two definitions, zero is excluded from both by design. It cannot be greater than itself, and it certainly cannot be less than itself.
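As a minimal sketch, the two strict inequalities above translate directly into code. The function name `sign_label` is made up for illustration, and the sketch assumes ordinary numeric input (it does not handle NaN).

```python
def sign_label(x: float) -> str:
    """Classify a number using the strict inequalities x > 0 and x < 0."""
    if x > 0:
        return "positive"
    if x < 0:
        return "negative"
    # For ordinary numbers, failing both tests means x is zero.
    return "neither"


for value in (3, -5, 0):
    print(value, sign_label(value))
```

Zero falls through both tests, which is exactly what "excluded by design" means.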

The "Non-Negative" work-around

You’ve probably seen the term "non-negative" in a programming manual or a tax document. This is where things get slightly messy for the average person. When a computer scientist says they want a "non-negative" integer, they mean $\{0, 1, 2, 3, \dots\}$. In this specific context, zero is grouped with the positive crowd, but it doesn't actually become positive. It’s just not negative.

It’s like being at a party where the host says, "Everyone who isn't wearing a red shirt, come into the kitchen." If you're naked, you're going into the kitchen. You aren't wearing a blue shirt or a green shirt—you're just "not wearing red." Zero is "not negative," and it is also "not positive."

The history of the "nothing" that changed everything

For a huge chunk of human history, zero didn't even exist as a number. The Greeks were geniuses at geometry, but they found the concept of "nothing" as a "something" deeply unsettling. How can nothing be a number?

It wasn't until the 7th century in India, with the mathematician Brahmagupta, that zero started to be treated as a number in its own right rather than just a placeholder. Brahmagupta actually wrote down rules for dealing with it. He called it shunya. He understood that if you subtract a number from itself, you get zero.

But even then, the concept of "negative" was still weird. People viewed numbers as physical quantities. You can have three cows. You can't have "negative three cows." Zero was the floor. It was the absolute bottom.

Zero in the age of Calculus

Fast forward to the 17th century. Gottfried Wilhelm Leibniz and Isaac Newton are fighting over who invented calculus. They needed zero to be a very specific kind of nothing. They dealt with "infinitesimals"—values so incredibly close to zero that they might as well be zero, but they aren't quite there yet.

Limits depend on zero belonging to neither side. Think about the graph of $f(x) = \frac{1}{x}$. As $x$ approaches zero from the positive side, the value shoots up toward infinity. As it approaches from the negative side, it plunges toward negative infinity. Zero is the "wall" where these two worlds meet but never touch. If zero took a side, that symmetry would break.
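A rough numerical sketch of that behavior, assuming nothing beyond plain Python floats. The helper name `reciprocal` and the step sizes are chosen purely for illustration.

```python
def reciprocal(x: float) -> float:
    """f(x) = 1/x, defined for every x except zero."""
    return 1.0 / x


# Step sizes shrinking toward zero: 0.1, 0.01, ..., 0.00001
steps = [10.0 ** -k for k in range(1, 6)]

from_right = [reciprocal(h) for h in steps]    # x approaches 0 from the positive side
from_left = [reciprocal(-h) for h in steps]    # x approaches 0 from the negative side

for h, right, left in zip(steps, from_right, from_left):
    print(f"x = +/-{h:g}: f(+x) = {right:.0f}, f(-x) = {left:.0f}")
```

The right-hand values keep growing and the left-hand values keep falling, and the two lists never meet.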

Signed Zero: When computers get weird

Now, if you want to get your head spinning, let's talk about computer science. While pure mathematics says zero has no sign, your laptop might disagree.

The IEEE 754 standard for floating-point arithmetic—which is basically how almost every computer handles decimals—actually allows for something called signed zero.

- Positive Zero (+0)

- Negative Zero (-0)

Wait, didn't I just say that's impossible? In the realm of abstract math, yes. But in the world of bits and bytes, it’s a tool. Computers use signed zero to indicate how a number arrived at zero. If a value was so small and negative that the computer couldn't track it anymore (underflow), it labels it as -0. If it was a tiny positive value that disappeared, it’s +0.

For most tasks, the computer treats them as equal ($+0 == -0$ returns true). But in specific calculations, like dividing by zero, the sign matters. Under IEEE 754, dividing a positive number by +0 gives you positive infinity. Dividing the same number by -0 gives you negative infinity.

It’s a hack. A very useful, very technical hack. But it doesn't change the fundamental nature of the number.
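Here is a small sketch of signed zero in Python, whose `float` type is an IEEE 754 double on typical platforms. One caveat: Python itself raises `ZeroDivisionError` on float division by zero rather than returning an infinity, so the sign is exposed here through `math.copysign` and `math.atan2` instead.

```python
import math

pos_zero = 0.0
neg_zero = -0.0

# The two zeros compare as equal...
print(pos_zero == neg_zero)             # True

# ...but the sign bit is still there if you ask for it.
print(math.copysign(1.0, pos_zero))     # 1.0
print(math.copysign(1.0, neg_zero))     # -1.0

# Underflow: a negative result too small to represent collapses to -0.0.
tiny_negative = -1e-200 * 1e-200
print(tiny_negative)                    # -0.0

# Some functions give different answers depending on the sign of zero.
print(math.atan2(0.0, pos_zero))        # 0.0
print(math.atan2(0.0, neg_zero))        # 3.141592653589793

# Python raises an exception here instead of producing -inf.
try:
    1.0 / neg_zero
except ZeroDivisionError:
    print("float division by zero raises ZeroDivisionError in Python")
```

Languages that follow the standard's default behavior more directly, such as C or JavaScript, return the signed infinities described above.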

Parity: The one thing zero actually chooses

While zero refuses to be positive or negative, it does take a side on the even vs. odd debate.

Zero is even.

This bothers people way more than it should. People think of even numbers as $2, 4, 6, 8$. But the definition of an even number is any integer that can be expressed as $2n$, where $n$ is also an integer.

$2 \times 0 = 0$.

Boom. Even.

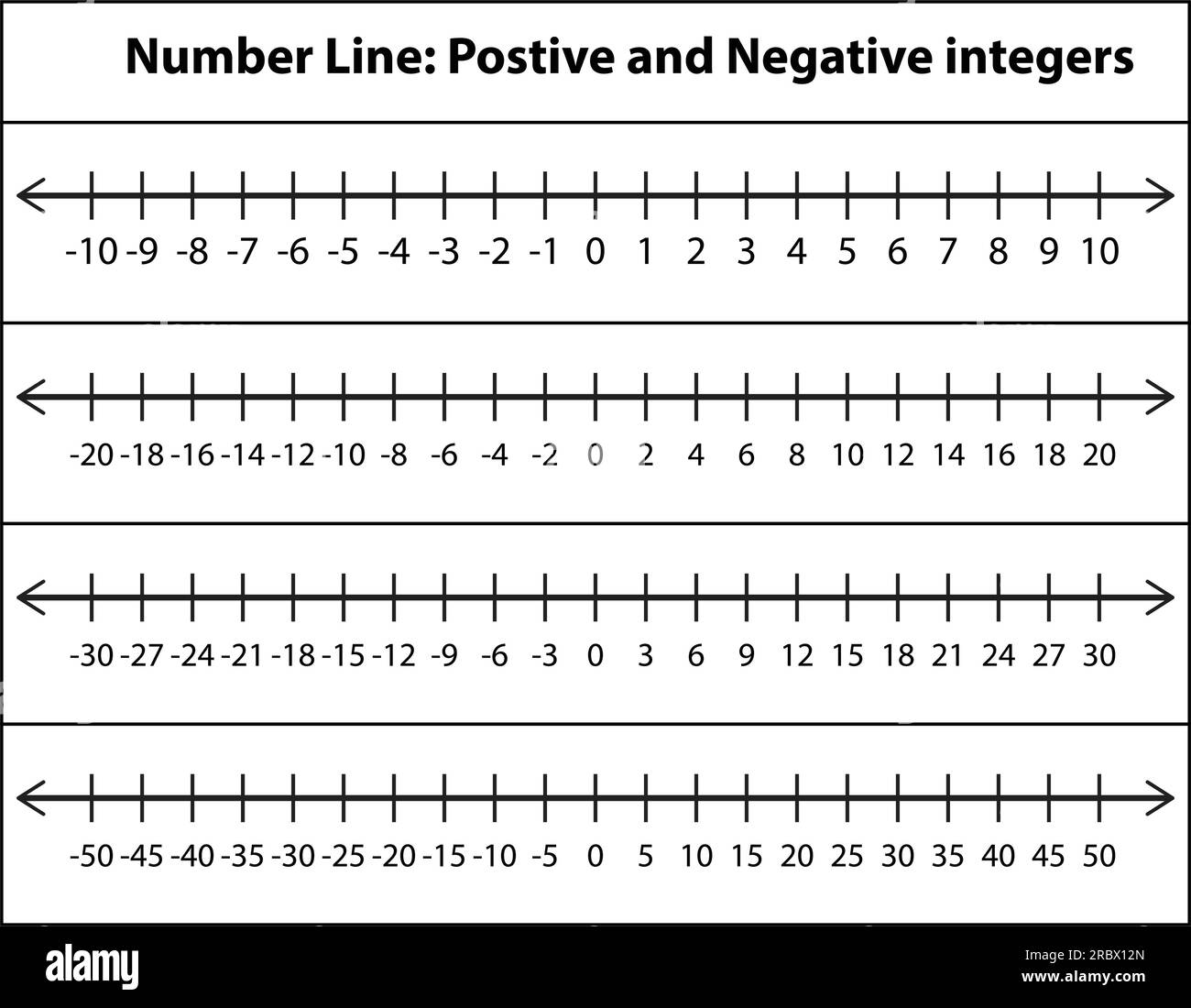

Also, if you look at a number line, even and odd numbers must alternate. Seven is odd. Six is even. Five is odd. Four is even. Three is odd. Two is even. One is odd. What comes next? It has to be even.
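Both arguments can be checked in a few lines. This is only a sketch, and `is_even` is an illustrative name rather than anything built in.

```python
def is_even(n: int) -> bool:
    """An integer is even when it equals 2 * k for some integer k."""
    return n % 2 == 0


print(is_even(0))   # True

# Walk the number line from -3 to 3 and record each number's parity.
numbers = list(range(-3, 4))
parities = [is_even(n) for n in numbers]

# Neighbors on the number line always have opposite parity.
alternates = all(a != b for a, b in zip(parities, parities[1:]))
print(alternates)   # True
```

If zero were odd, the alternation check would fail between -1, 0, and 1.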

The psychological weight of zero

We treat zero differently in real life than we do on paper. Consider temperature.

In Celsius, $0^\circ$ is the freezing point of water. It feels "cold." If the temperature goes from $-2^\circ$ to $0^\circ$, it feels like a "positive" change. But $0^\circ$ Celsius isn't "zero" energy. That would be Absolute Zero ($-273.15^\circ \text{C}$), which is a different beast entirely.

In finance, zero is the terrifying line between solvent and broke. If you have \$0.00, you aren't "positive." You’re just... waiting. But if you owe a penny, you’re suddenly in the negative. This is why we have such a hard time seeing zero as neutral; in our lives, it's usually a warning sign or a goalpost.

Common misconceptions that still float around

- Misconception: "Zero is a positive number because it isn't negative."

Reality: Incorrect. It's neither. It is the boundary.

- Misconception: "You can't have zero of something."

Reality: Of course you can. Ask my bank account. Zero is a cardinal number that represents the size of an empty set.

- Misconception: "Zero is basically just a placeholder."

Reality: It was used that way in ancient Babylon (like the 0 in 105), but as a standalone number, it's a fully functional mathematical object with its own rules.

Can zero ever be "both"?

In some very niche algebraic structures, you might find people playing with the definitions, but for 99.9% of the world—including every math test you will ever take—the answer is a hard no. Zero is the only real number that is neither positive nor negative.

Even the Complex Plane doesn't change this. Complex numbers have no natural ordering, so "positive" and "negative" only make sense along the real axis, and zero remains the origin. It's the point where the real axis and the imaginary axis intersect. It remains the anchor of the entire system.

Actionable steps for mastering zero

If you're dealing with zero in a professional or academic setting, here is how to handle it without looking like an amateur:

- Specify your sets: If you are writing code or a technical paper, don't just say "positive numbers" if you mean to include zero. Use the term "non-negative integers" (which includes 0) or "natural numbers" (though be careful, as some definitions of natural numbers start at 1).

- Watch the division: In standard arithmetic, division by zero is undefined, so never build a formula that relies on it. In code, integer division by zero typically raises an error, while IEEE 754 floating-point division by +0 or -0 can quietly return an infinity (or NaN for 0/0) and hide the bug. Check the divisor before you divide.

- Check your inequalities: When writing formulas, pay close attention to the difference between $>$ and $\ge$. That little line under the "greater than" symbol is the difference between including zero and leaving it out in the cold.

- Think in vectors: If you’re struggling with the "neutral" concept, think of zero as a vector with no length. It has no direction (no positive or negative orientation). It's just a point in space.
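A few of the tips above can be sketched in code. The function names here (`is_positive`, `is_non_negative`, `safe_divide`) are hypothetical helpers written for this example, and returning `None` for a zero divisor is just one possible design choice.

```python
from typing import Optional


def is_positive(x: float) -> bool:
    """Strict inequality: zero is excluded."""
    return x > 0


def is_non_negative(x: float) -> bool:
    """Non-strict inequality: zero is included."""
    return x >= 0


def safe_divide(numerator: float, denominator: float) -> Optional[float]:
    """Return numerator / denominator, or None when the divisor is zero."""
    # This comparison is true for 0, 0.0 and -0.0 alike.
    if denominator == 0:
        return None
    return numerator / denominator


print(is_positive(0), is_non_negative(0))   # False True
print(safe_divide(10, 4))                   # 2.5
print(safe_divide(10, -0.0))                # None
```

The only difference between the first two functions is `>` versus `>=`, and that single character decides whether zero gets in.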

Zero is arguably the most powerful tool in the mathematician's toolkit. It’s the balance point of the universe. By refusing to take a side between positive and negative, it allows every other number to have a meaningful place. It isn't a void; it's the center of everything.