You're staring at a screen. The sentences are perfect. Maybe too perfect. There's a specific kind of rhythm, a steady, unrelenting hum of competence that feels slightly... off. You find yourself asking: is this essay AI-generated, or did the writer just have a really good cup of coffee?

Honestly, it’s getting harder to tell.

The gap between human creativity and Large Language Models (LLMs) like GPT-4 or Claude 3.5 is shrinking every single day. We used to look for "hallucinations" or weird facts about George Washington inventing the internet. Now? Now we're looking for subtle patterns in syntax and a lack of "burstiness." It's a weird world.

The Myth of the 100% Accurate Detector

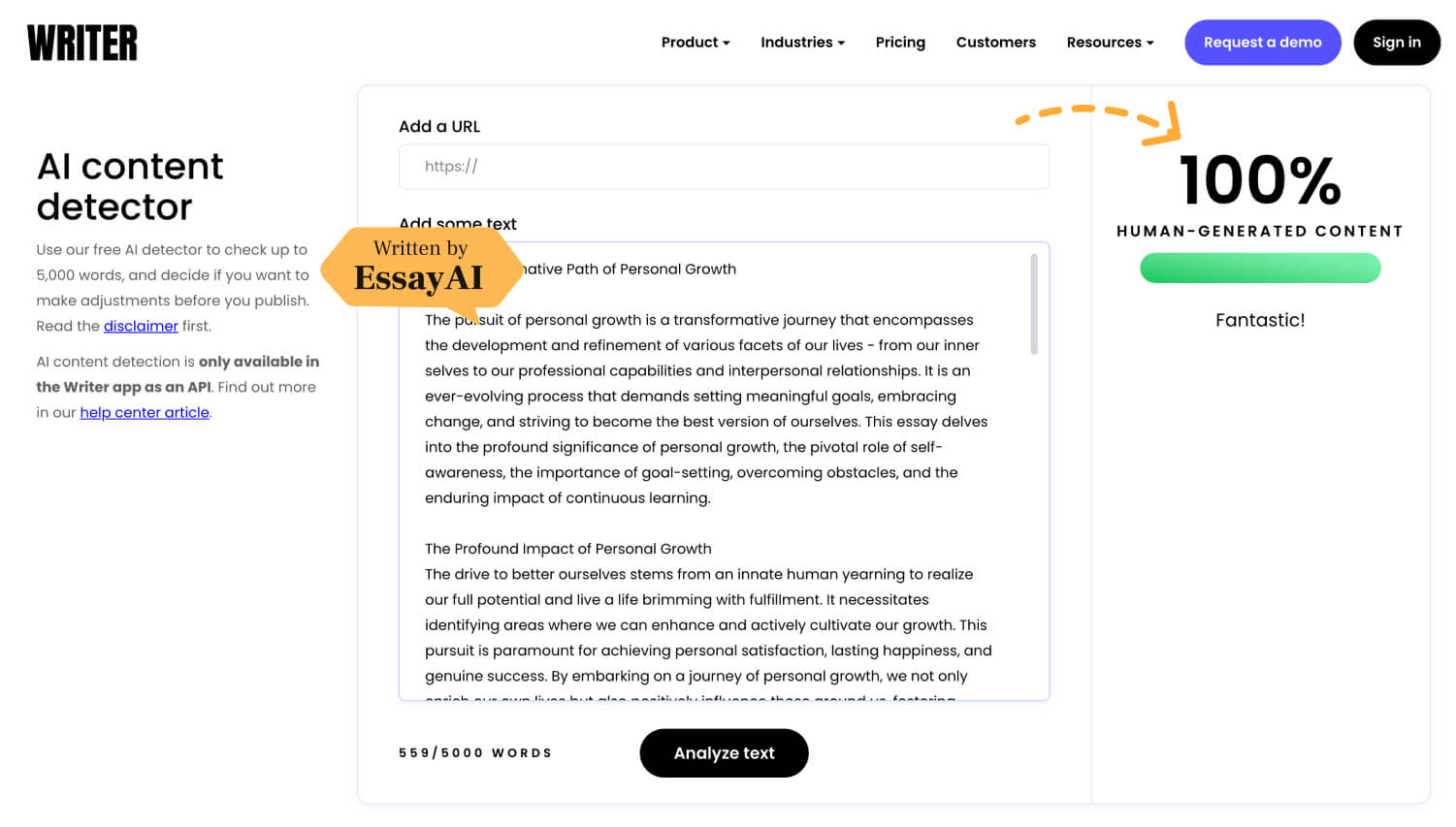

Let's get one thing straight: no tool can tell you with absolute certainty if a piece of writing came from a machine. Not Turnitin. Not GPTZero. Not Originality.ai.

They work on probability, not proof.

These detectors look for two main things: perplexity and burstiness. Perplexity is basically a measure of how "surprising" the text is to a language model. If the model can predict each next word easily, the detector reads that as a sign of AI. Burstiness refers to the variation in sentence length and structure. Humans are messy. We write a long, rambling sentence about our childhood dog and then follow it up with: "It sucked." AI doesn't usually do that. It stays middle-of-the-road.
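To make those two ideas concrete, here is a rough sketch in Python. It is not how Turnitin or GPTZero work internally. Commercial detectors score text with a neural language model, while this toy version swaps in a simple word-frequency (unigram) model so it runs with nothing but the standard library. The function names and sample sentences are made up for illustration.

```python
import math
import re
from collections import Counter
from statistics import mean, pstdev


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! and ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Spread of sentence lengths relative to their average (std / mean)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under a word-frequency model fit on `reference`.

    Assumption: add-one smoothing with a single extra slot for unseen words.
    A real detector would use a neural language model here instead.
    """
    ref_tokens = reference.lower().split()
    counts = Counter(ref_tokens)
    vocab = len(counts) + 1  # +1 slot shared by all unseen words
    total = len(ref_tokens)
    tokens = text.lower().split()
    if not tokens:
        return float("inf")
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


human = ("I had a dog when I was small and he followed me "
         "everywhere I went for years. It sucked.")
flat = "The cat sat down. The dog ran off. The bird flew away."

print(burstiness(human) > burstiness(flat))  # uneven sentences score higher
print(unigram_perplexity("the cat", "the cat sat on the mat")
      < unigram_perplexity("quantum zebra", "the cat sat on the mat"))
```

Low numbers on both measures are what a detector reads as "machine-like," which is exactly why a tidy, predictable human writer can get caught in the net.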

But here’s the kicker. Highly skilled academic writers often have low perplexity. They write clearly and predictably. This leads to the "false positive" nightmare. A study from Stanford University actually found that AI detectors are biased against non-native English speakers. Because these writers often use simpler, more predictable sentence structures, the software flags their original work as machine-made. That’s a massive problem in classrooms.

What Real AI Writing Actually "Feels" Like

If you want to know whether an essay is AI-generated, stop looking for typos. Look for the "sandwich" structure.

AI loves a good sandwich. It starts with a broad introductory statement, gives you three neatly packaged points (often with those annoying bold headers), and wraps it up with a summary that starts with "In conclusion" or "Ultimately." It's incredibly polite. Too polite. It lacks an edge.

Have you ever noticed how ChatGPT refuses to take a controversial stand unless you practically bully it into doing so? If an essay reads like a corporate HR manual—bland, balanced, and utterly devoid of a soul—your "bot-dar" should be going off.

The "Vibe Check" List

- The Adverb Overload: AI loves words like "seamlessly," "intricately," and "comprehensively."

- The Lack of Personal Anecdote: Unless prompted, AI rarely includes a specific, gritty detail that doesn't quite fit the narrative but feels real.

- The Perfect Transition: Every paragraph flows into the next like a paved highway. Real human thought is more like a dirt path with some tripping hazards.
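If you want a crude, mechanical version of the vibe check, something like the sketch below is a starting point. The word lists come straight from the examples in this article and are nowhere near complete, the function name is invented, and a high score is a reason to read more closely, not a verdict.

```python
import re

# Assumption: tiny illustrative lists taken from the examples in this article.
STOCK_ADVERBS = {"seamlessly", "intricately", "comprehensively"}
STOCK_CLOSERS = ("in conclusion", "ultimately")


def vibe_check(text: str) -> dict:
    """Count stock adverbs and check whether the last paragraph opens with a stock closer."""
    words = re.findall(r"[a-z']+", text.lower())
    adverb_hits = sum(1 for w in words if w in STOCK_ADVERBS)
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    last = paragraphs[-1].lower() if paragraphs else ""
    return {
        "stock_adverbs_per_100_words": 100 * adverb_hits / len(words) if words else 0.0,
        "stock_closer": last.startswith(STOCK_CLOSERS),
    }


sample = "The tools integrate seamlessly.\n\nIn conclusion, it works comprehensively."
print(vibe_check(sample))
```

Personal anecdotes and bumpy transitions are much harder to count, which is part of the point: the most human signals are the ones a script can't easily measure.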

Why Technical Evidence Isn't Always Enough

Researchers like Irene Solaiman have pointed out that as models get better at mimicking "human" flaws, detection becomes a game of cat and mouse. You can literally ask an AI to "write with high burstiness and varied perplexity," and it will bypass many filters.

Then there's the "Cyborg" problem.

What happens when a student writes an outline, asks AI to flesh out the middle, and then rewrites the intro? Is that AI-generated? Is it AI-assisted? The lines are blurry. Most universities are moving away from "gotcha" detection and toward "process-based" assessment. If a student can't explain their thesis in person, it doesn't matter what the software says.

We also have to talk about watermarking. Google and OpenAI have toyed with the idea of "digital watermarking": embedding invisible patterns in the word choices that only a scanner can see. But it's not a silver bullet. If I take an AI essay and swap out or paraphrase enough of the words, the watermark can fade below what the scanner picks up.

The Future of "Is This Essay AI Generated"

We are heading toward a world where the "humanity" of a text is found in its errors and its weirdness.

If you're an educator or an editor, look for the "Why." AI is great at the "What" and the "How." It can summarize the French Revolution in seconds. But it struggles to connect the French Revolution to a specific, niche feeling you had while walking through a park in 2024.

Nuance is the final frontier.

Actually, let's look at the data. OpenAI shuttered its own "AI Classifier" tool in 2023, citing its low rate of accuracy: in OpenAI's own evaluation it correctly flagged only about 26% of AI-written text. When the creators of the tech can't even catch it, we have to admit that the "Is this essay AI generated" question might be the wrong one to ask. Maybe we should be asking: "Is this essay insightful?"
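A quick back-of-the-envelope calculation shows why a hit rate like that makes a flag such weak evidence. The sketch below applies Bayes' rule. The 26% detection rate is the figure above; the 9% false-positive rate comes from OpenAI's announcement of the classifier and is not otherwise discussed in this article; the share of essays that are actually AI-written is a pure assumption you should swap for your own guess.

```python
def prob_ai_given_flag(base_rate: float,
                       true_positive_rate: float = 0.26,
                       false_positive_rate: float = 0.09) -> float:
    """Chance that a flagged essay really is AI-written, by Bayes' rule.

    base_rate: assumed share of all essays that are AI-written (a guess).
    """
    flagged_ai = base_rate * true_positive_rate
    flagged_human = (1 - base_rate) * false_positive_rate
    return flagged_ai / (flagged_ai + flagged_human)


# Assumption: 10% of submitted essays are AI-written.
print(round(prob_ai_given_flag(0.10), 3))  # 0.243
```

Under that assumed 10% base rate, roughly three out of four flagged essays would have been written by a human. The number improves if AI use is more common in your pile, but it never turns into proof.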

Practical Steps for Verifying Content

If you're suspicious of a piece of writing, don't just dump it into a detector and call it a day. Do a bit of manual sleuthing.

- Check the References: AI is much better at facts now, but it still "hallucinates" sources. Look up the specific page numbers or DOI links. If they lead to 404 errors or books that don't exist, you've probably found a bot, or at least a writer who never opened the source.

- Look for the "Mid-Point" Slump: AI tends to get repetitive in the middle of long-form content. It will restate the same three points using slightly different synonyms.

- Run a Google Search on Unique Phrases: AI often pulls from its training data in ways that create "cliché clusters." If you see a phrase that feels like a marketing slogan, search for it.

- The "Live" Test: If you're a teacher, ask the student to write one paragraph on a related but different topic right in front of you. The difference in "voice" is usually jarring if the original was faked.
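The reference check is the easiest of these steps to speed up with a script. Here is a minimal sketch that pulls anything DOI-shaped out of an essay and turns it into a doi.org link you can open by hand. The regular expression is a common loose pattern, not an official DOI grammar, so it may miss unusual identifiers, and the DOIs in the sample text are invented.

```python
import re

# Assumption: a loose "10.<registrant>/<suffix>" pattern; not a full DOI grammar.
DOI_PATTERN = re.compile(r"\b10\.\d{4,9}/[^\s\"<>]+")


def extract_doi_urls(text: str) -> list[str]:
    """Return de-duplicated https://doi.org/ links for every DOI-like string in `text`."""
    urls: list[str] = []
    for match in DOI_PATTERN.findall(text):
        doi = match.rstrip(".,;:)]")  # drop trailing sentence punctuation
        url = f"https://doi.org/{doi}"
        if url not in urls:
            urls.append(url)
    return urls


# Made-up citations, for illustration only.
essay = ("See Smith (2020), doi:10.1234/abcd.5678. "
         "Also https://doi.org/10.1234/abcd.5678 and (10.5555/xyz-99).")
for url in extract_doi_urls(essay):
    print(url)
```

A link that resolves only tells you the source exists. You still have to open it and confirm it says what the essay claims it says.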

The reality is that AI is a tool. Using it to generate a first draft isn't "cheating" in every context, but passing it off as original thought is a breach of trust. As these models evolve, our ability to detect them will rely less on software and more on our own intuition for what makes a human voice unique. We have to look for the scars, the weird metaphors, and the opinions that aren't "balanced."

Don't rely on a percentage score from a website. Pay attention to the lack of personality. If it feels like it was written by a committee of a thousand polite robots, it probably was.

Actionable Next Steps

- Audit your own writing: If you're worried about being flagged, intentionally vary your sentence lengths and use more idiosyncratic language.

- Cross-reference detectors: If you must use software, run the text through at least three different tools (e.g., GPTZero, Copyleaks, and Originality.ai) to see if there is a consensus.

- Focus on citations: Verify every single quote and date manually; this is the quickest way to spot high-level AI generation that hasn't been fact-checked.

- Adopt a "Human-in-the-Loop" philosophy: If you use AI for work, rewrite at least 50% of the output to ensure your specific "voice" and "expert perspective" are the dominant features of the text.
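For the cross-referencing step, it helps to decide in advance what counts as "consensus" so you aren't tempted to cherry-pick the scariest score. The sketch below assumes you have typed in each tool's result by hand as a number between 0 and 1. It does not call any detector's API, and the 0.8 threshold and the example scores are arbitrary placeholders.

```python
def detector_consensus(scores: dict[str, float], threshold: float = 0.8) -> str:
    """Summarize hand-entered 'likely AI' scores (0 to 1) from several tools.

    Assumption: every tool's score is on the same 0-1 scale, which real tools
    do not guarantee; the threshold is an arbitrary placeholder.
    """
    if len(scores) < 3:
        raise ValueError("need scores from at least three detectors")
    flags = [score >= threshold for score in scores.values()]
    if all(flags):
        return "flagged"       # every tool agrees: worth a manual review
    if not any(flags):
        return "clear"         # no tool raised a flag
    return "inconclusive"      # tools disagree: do not act on the scores


# Hypothetical scores, typed in by hand.
print(detector_consensus({"GPTZero": 0.92, "Copyleaks": 0.35, "Originality.ai": 0.88}))
```

Even a unanimous "flagged" is only a prompt for the manual checks above, not a replacement for them.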