Wait. Let’s stop right there, because if you’re asking how many bytes make a bit, you’re actually looking at the digital world through the wrong end of the telescope. It's a classic mix-up. Most people get it flipped.

In the world of computing, the bit is the absolute smallest atom of data. It’s a single binary choice. Yes or no. On or off. Black or white. A byte, on the other hand, is a collection of those bits. So, to answer the question directly: no whole number of bytes makes a bit. A single bit is just a fraction of a byte: 0.125 bytes, or one-eighth.

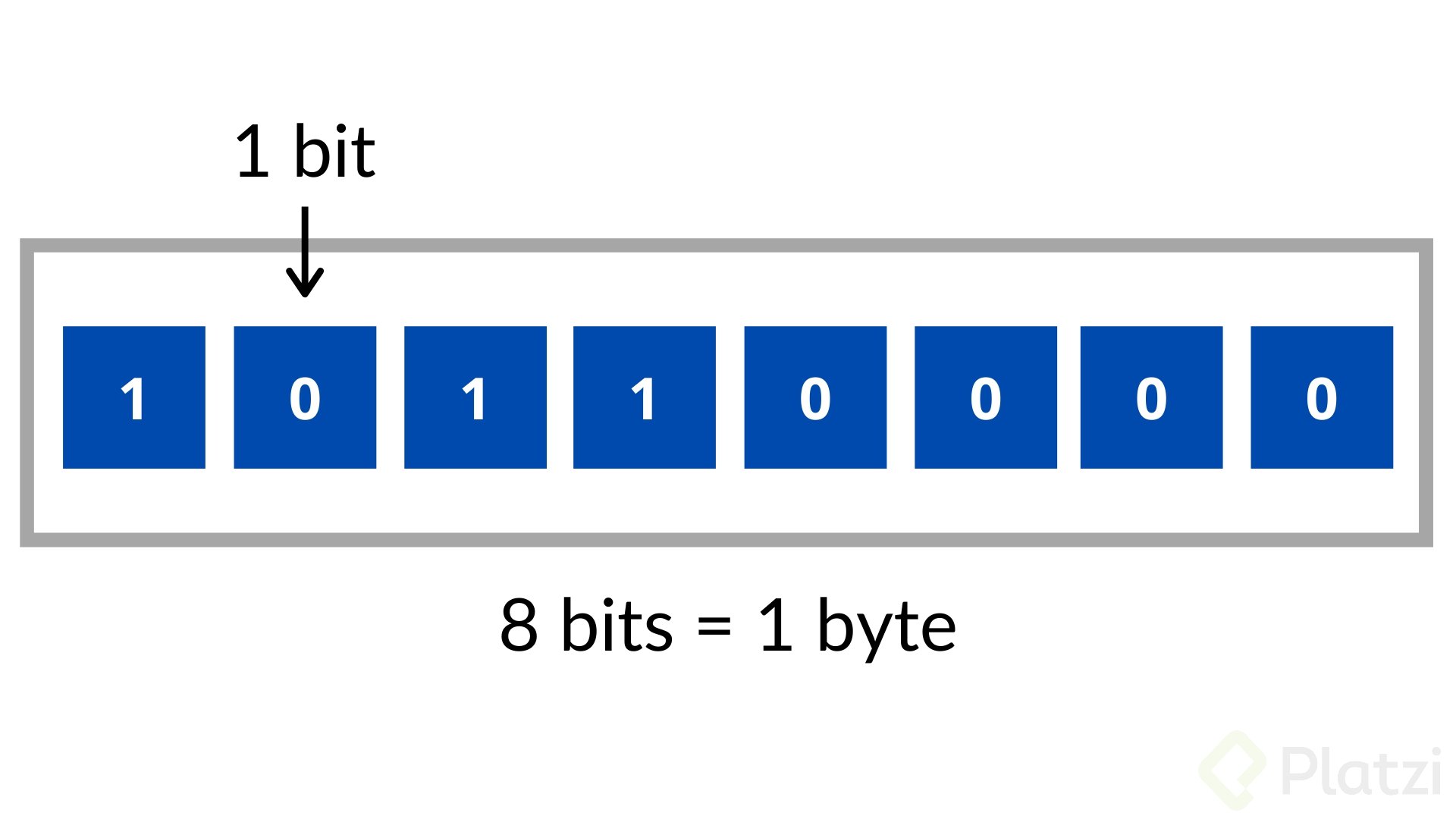

Flip that around, and you get the standard rule we all live by: 8 bits make exactly 1 byte.

It seems simple. But the history of why we ended up with eight (and not four, six, or ten) is a messy, human story involving early telecommunications, 1950s and 1960s hardware limitations, and a guy named Werner Buchholz who just wanted to keep "bite" from being mistaken for "bit."

Why the 8-Bit Byte Became the Global Standard

Computers don't think in numbers. They think in electricity. High voltage or low voltage. That’s your bit. But a single bit can only tell you two things. That’s not enough to write a book or even spell your name. You need groups.

In the early days of computing, there wasn't a "universal" size for these groups. Some early systems used 6-bit chunks. Why? Because 6 bits give you 64 possible combinations ($2^6$), which is just enough for the English alphabet (26), some numbers (10), and a handful of punctuation marks. It was efficient. It was lean. It was also incredibly limited once the world realized computers needed to do more than just crunch lab data.
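To make the arithmetic concrete, here is a small Python sketch (the function name `distinct_values` is illustrative, not from any library): an n-bit group can represent 2 to the power n patterns. It also shows why 6 bits felt cramped. Uppercase letters plus digits leave 28 slots for punctuation, but adding lowercase leaves just 2.

```python
def distinct_values(bits: int) -> int:
    """Number of different patterns an n-bit group can represent (2**n)."""
    return 2 ** bits


uppercase, lowercase, digits = 26, 26, 10

six_bit = distinct_values(6)  # 64 patterns
print(six_bit - (uppercase + digits))              # 28 slots left for punctuation
print(six_bit - (uppercase + lowercase + digits))  # only 2 left once lowercase is added
print(distinct_values(8))                          # 256 patterns in an 8-bit byte
```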


IBM changed everything.

During the development of the IBM System/360, announced in 1964, engineers settled on a standard 8-bit byte to give data more "room." Eight bits open up 256 possible values ($2^8$). That was enough for upper and lowercase letters and more complex symbols, and it comfortably held IBM's own EBCDIC character codes as well as the 7-bit ASCII (American Standard Code for Information Interchange), with a bit to spare. The word itself is a little older: Werner Buchholz, an IBM scientist, coined "byte" in 1956 while working on IBM's Stretch computer. He deliberately spelled it with a "y" so that "bite" couldn't accidentally be shortened to "bit."
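ASCII itself defines 128 codes, which needs only 7 bits, so any ASCII character fits in one 8-bit byte with the top bit left at 0. A quick Python sketch using the built-in `str.encode` shows this for the word "Byte":

```python
# Encode a short word as ASCII: one byte per character.
word = "Byte"
encoded = word.encode("ascii")  # a bytes object

for char, value in zip(word, encoded):
    # format(value, "08b") pads the binary form out to a full 8 bits
    print(char, value, format(value, "08b"))  # first line: B 66 01000010

# Every ASCII code is below 128, so the leading bit of each byte is 0.
print(all(value < 128 for value in encoded))  # True
```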

The Math: Converting Bits to Bytes (And Why Your Internet Speed Feels Slow)

If you're trying to figure out how many bytes make a bit for a homework assignment or a technical spec, the math is simple but easy to mess up.

- 1 Bit = 0.125 Bytes

- 8 Bits = 1 Byte

- 1,024 Bytes = 1 Kilobyte (KB), by the traditional binary convention (officially a Kibibyte, or KiB)
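The conversions in the list boil down to dividing or multiplying by 8. A minimal Python sketch (the helper names are hypothetical):

```python
BITS_PER_BYTE = 8


def bits_to_bytes(bits: float) -> float:
    """Convert a count of bits to bytes (divide by 8)."""
    return bits / BITS_PER_BYTE


def bytes_to_bits(num_bytes: float) -> float:
    """Convert a count of bytes to bits (multiply by 8)."""
    return num_bytes * BITS_PER_BYTE


print(bits_to_bytes(1))     # 0.125
print(bits_to_bytes(8))     # 1.0
print(bytes_to_bits(1024))  # 8192 bits in 1,024 bytes
```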

Here is where it gets annoying for the average consumer. Your internet service provider (ISP) sells you speed in bits, but your computer measures file sizes in bytes.

Ever notice that?

When Comcast or AT&T tells you that you have "1,000 Mbps" (Megabits per second) internet, you might think you can download a 1,000 MB (1 Gigabyte) file in one second. You can't. You have to divide that 1,000 by 8. In reality, your theoretical peak download speed is 125 Megabytes per second, and protocol overhead shaves off a little more. Networks have always measured speed in bits per second, but the convention has a convenient side effect for marketing: the numbers look eight times larger than they would in bytes.
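The ISP conversion is the same divide-by-8, applied to a rate. A small sketch, assuming both figures use the decimal "mega" (1,000,000), which is how network speeds are normally quoted; the function name is made up for illustration:

```python
def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Megabits per second -> megabytes per second (same decimal prefix, so divide by 8).

    This is the theoretical ceiling; real transfers lose some of it to protocol overhead.
    """
    return mbps / 8


print(mbps_to_megabytes_per_second(1000))  # 125.0
print(mbps_to_megabytes_per_second(300))   # 37.5
```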

The "Nibble" and Other Weird Sizes

The digital world isn't just bits and bytes. There's a middle ground that almost sounds like a joke. It’s called a nibble (sometimes spelled nybble).

A nibble is 4 bits. Since a byte is 8 bits, a nibble is exactly half a byte.

You’ll rarely hear a software engineer talk about nibbles unless they are working in low-level assembly language or dealing with hexadecimal (Base-16) values. In hex, one digit represents exactly four bits: one nibble. So a full byte like 1111 1111 in binary becomes FF in hex. It’s clean. It’s symmetrical. The byte is also why old-school gamers talk about "8-bit" consoles like the NES. Those machines processed data in 8-bit chunks, meaning the processor's registers could hold values only from 0 to 255.
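Splitting a byte into its two nibbles takes a shift and a mask. A Python sketch (the helper `split_nibbles` is illustrative) that also shows each nibble mapping to one hex digit:

```python
def split_nibbles(byte: int) -> tuple[int, int]:
    """Return the (high, low) 4-bit halves of a value in 0..255."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value that fits in one byte (0-255)")
    high = (byte >> 4) & 0xF  # top 4 bits, shifted down
    low = byte & 0xF          # bottom 4 bits
    return high, low


value = 0b1111_1111            # one byte, all bits set
print(value)                   # 255, the largest 8-bit value
print(split_nibbles(value))    # (15, 15)
print(format(value, "02X"))    # FF: one hex digit per nibble
print(format(0xA7, "08b"))     # 10100111: A -> 1010, 7 -> 0111
```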

The "Kibibyte" Controversy You Probably Missed

Wait. There’s a catch.

Technically, "kilobyte" hasn't always meant the same number of bytes. In decimal (Base-10), "kilo" means 1,000. But computers operate in binary (Base-2). For decades, we all just agreed that a kilobyte was $2^{10}$, or 1,024 bytes.

Then the International Electrotechnical Commission (IEC) stepped in. They decided this was confusing. They introduced the "Kibibyte" (KiB) to represent 1,024, leaving "Kilobyte" (KB) to mean exactly 1,000.

Most people ignored them.

Microsoft Windows still calculates file sizes using 1,024-byte increments but labels them as "KB." macOS switched to the 1,000-byte standard in 2009 (with Snow Leopard), and many Linux desktop tools report decimal units too, though plenty of Linux command-line tools still count in 1,024s. This is why a hard drive you buy at Best Buy says "1 Terabyte" on the box, but when you plug it into a Windows PC, it shows up as "931 GB." The manufacturer is using the 1,000-count (decimal), while Windows is using the 1,024-count (binary).

You aren't actually losing space. You're just caught in a linguistic war between two different ways of counting the same pile of bits.
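The "931 GB" figure falls straight out of the two counting systems. A sketch, assuming the drive holds exactly 10^12 bytes and ignoring the small amount of space the file system itself uses (the function name is hypothetical):

```python
def advertised_tb_to_windows_gb(advertised_tb: float) -> float:
    """Decimal terabytes on the box -> binary gigabytes (2**30 bytes) that Windows labels 'GB'."""
    total_bytes = advertised_tb * 10**12  # manufacturer's count: 1 TB = 10^12 bytes
    return total_bytes / 2**30            # Windows' count: 1 "GB" = 1,073,741,824 bytes


print(round(advertised_tb_to_windows_gb(1), 2))  # 931.32
```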

Real-World Scaling: From Bits to Zettabytes

To put the scale into perspective, consider how far we've come. A single bit is a grain of sand. A byte is a small pebble.

If a single byte were a snowflake, a Megabyte would be a large snowball and a Gigabyte a snowdrift big enough to bury a car. By the Exabyte you'd have a genuine glacier. By the time you get to a Zettabyte (the scale at which we measure global internet traffic), you’re looking at thousands of cubic kilometers of ice: a whole ice cap.

We are currently moving toward the "Yottabyte" era. That’s $10^{24}$ bytes. It’s a number so large it’s basically incomprehensible to the human brain. And yet, every single one of those trillions of terabytes is still just a massive, organized collection of single bits.
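Each decimal prefix step multiplies by 1,000, which is why a yottabyte works out to a trillion terabytes. A short Python sketch of the ladder, using decimal (SI) prefixes only; the `PREFIXES` list and `bytes_in` helper are illustrative:

```python
# Decimal (SI) prefixes, each one 1,000 times the previous.
PREFIXES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]


def bytes_in(prefix: str) -> int:
    """Bytes in one unit of the given decimal prefix, e.g. 'giga' -> 10**9."""
    power = PREFIXES.index(prefix) + 1
    return 1000 ** power


for prefix in PREFIXES:
    n = bytes_in(prefix)
    print(f"1 {prefix}byte = {n:,} bytes = {n * 8:,} bits")

print(bytes_in("yotta") // bytes_in("tera"))  # 1000000000000 terabytes per yottabyte
```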

Putting the Knowledge to Work

Understanding the bit-to-byte ratio isn't just for trivia night. It's practical.

If you are building a website, every byte matters. A high-resolution image might be 5 Megabytes (MB). That’s 40,000,000 bits that a user has to download over their connection. If they are on a 40 Mbps mobile connection, that one image takes at least a full second to load. In the world of SEO and user experience, a second is an eternity.
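The page-speed math is the same bits-versus-bytes conversion, turned into a time. A best-case Python sketch that ignores latency, protocol overhead, and congestion; the function name is hypothetical, and the 0.5 MB in the second call is an illustrative compressed size, not a measured result:

```python
def transfer_seconds(size_megabytes: float, link_mbps: float) -> float:
    """Best-case download time: megabytes -> megabits (x8), then divide by the link rate."""
    size_megabits = size_megabytes * 8
    return size_megabits / link_mbps


print(transfer_seconds(5, 40))    # 1.0  (the 5 MB image on a 40 Mbps link)
print(transfer_seconds(0.5, 40))  # 0.1  (a hypothetical 500 KB compressed version)
```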

Next time you're looking at your phone's storage or your internet bill, remember the 8-to-1 rule.

Actionable Insights for the Tech-Savvy:

- Check your ISP speeds: Divide the "Mbps" number by 8 to see your actual "MB/s" transfer rate. This manages expectations for large downloads.

- Audit your site assets: If you're a creator, use tools like TinyPNG to shave kilobytes off your images. Every byte saved is eight fewer bits for the browser to download.

- Storage Reality Check: When buying a drive, multiply the advertised capacity by about 0.93 to get a realistic idea of how much space Windows will report in GB (for drives big enough that Windows reports them in TB, the factor is closer to 0.91).

- Coding efficiency: If you're learning to code, remember that using an `integer` (usually 32 or 64 bits) to store a simple true/false value is overkill when a `boolean` will do. A boolean carries only 1 bit of information, though most languages pad it to at least a full byte in memory.
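To see how much room a boolean's single bit can save, here is a Python sketch of bit-packing: eight true/false flags stored in one byte instead of, say, eight 32-bit integers (32 bytes). The helper names are hypothetical, and this is a manual technique for when memory really matters; in everyday code a plain boolean is the right choice.

```python
def pack_flags(flags: list[bool]) -> int:
    """Pack up to 8 true/false values into one byte; flag i goes into bit i."""
    if len(flags) > 8:
        raise ValueError("a byte only has 8 bits")
    packed = 0
    for i, flag in enumerate(flags):
        if flag:
            packed |= 1 << i  # switch on bit i
    return packed


def unpack_flags(packed: int, count: int) -> list[bool]:
    """Read `count` flags back out of a packed byte."""
    return [bool((packed >> i) & 1) for i in range(count)]


flags = [True, False, True, True, False, False, False, True]
packed = pack_flags(flags)
print(packed, format(packed, "08b"))        # 141 10001101
print(unpack_flags(packed, 8) == flags)     # True
```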

Digital literacy starts with the smallest unit. Now that you know a bit is just 0.125 bytes, you're already ahead of most people staring at their router in confusion.