You're looking at a file on your computer. It says 1 KB. You think, "Okay, that's 1,000 bytes." Then you talk to a software engineer who insists it’s 1,024. Who’s lying? Honestly, both of them and neither of them. It's a mess. This tiny discrepancy—just 24 bytes—is the reason your 500GB hard drive looks like 465GB the second you plug it into a Windows machine.

Understanding how many bytes are in a kilobyte isn't just a math trivia question. It's a decades-long tug-of-war between the decimal prefixes of the International System of Units (SI) and the binary habits of the computing industry, with the International Electrotechnical Commission (IEC) eventually stepping in to referee. We've spent forty years using the same words to mean different things, and it's finally time to sort out why your computer's math feels like it's gaslighting you.

The 1,000 vs. 1,024 Identity Crisis

In the world of standard weights and measures, "kilo" means 1,000. Period. A kilometer is 1,000 meters. A kilogram is 1,000 grams. If you go to the grocery store and buy a kilo of flour, and they give you 1,024 grams, you’d be confused, though maybe slightly happier.

But computers don't think in base-10. They think in binary.

Everything in a computer is a switch: on or off, 1 or 0. Because of this, memory addresses and storage capacities naturally scale by powers of two. When early computer scientists saw that $2^{10}$ was 1,024, they realized it was remarkably close to 1,000. They got lazy. Instead of inventing a new word, they just hijacked "kilobyte."

This worked fine when we were dealing with tiny amounts of data. In the 1970s, a 24-byte difference was a rounding error. But the gap compounds with every step up the prefix ladder. At megabytes, the difference is about 4.9%. At gigabytes, it's over 7%. At terabytes, it's nearly 10%: a tebibyte is almost 100GB bigger than a terabyte, which is why a 1TB drive seems to be "missing" about 70GB because of a naming convention.
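If you'd rather check those percentages than take them on faith, here is a minimal Python sketch. The function name `prefix_gap_percent` and the `PREFIXES` list are made up for this example; the math is just $1024^n$ compared with $1000^n$.

```python
# How much bigger is the binary unit than the decimal one at each prefix?
# PREFIXES and prefix_gap_percent are illustrative names, not a standard API.
PREFIXES = ["kilo", "mega", "giga", "tera", "peta"]


def prefix_gap_percent(power: int) -> float:
    """Return how much larger 1024**power is than 1000**power, in percent."""
    return (1024 ** power / 1000 ** power - 1) * 100


for power, name in enumerate(PREFIXES, start=1):
    print(f"{name:>4}: {prefix_gap_percent(power):.2f}%")
```

Each step up multiplies the ratio by another 1.024, which is why the gap keeps widening instead of staying at 2.4%.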

Why binary matters for hardware

If you're building a RAM chip, you literally cannot make it "exactly" 1,000 bytes efficiently. The physical architecture of the circuitry—the rows and columns of transistors—dictates a binary structure. To a programmer working close to the hardware, 1,024 is the only number that makes sense. It’s "clean" in binary: 10000000000.
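A quick way to see why 1,024 is the "natural" size: ten address bits can select exactly 1,024 locations, and a 1,000-byte memory would still need all ten. The variable names below are illustrative only.

```python
# Ten address lines can select 2**10 distinct memory locations.
ADDRESS_BITS = 10
locations = 1 << ADDRESS_BITS

print(locations)       # number of addressable locations
print(bin(locations))  # a 1 followed by ten zeros in binary

# A 1,000-byte memory has addresses 0..999, and 999 still needs 10 bits,
# so capping at 1,000 would leave some addresses unused.
bits_for_1000 = (1000 - 1).bit_length()
unused_addresses = locations - 1000
print(bits_for_1000, unused_addresses)
```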

Meet the Kibibyte (The word everyone hates)

In 1998, the IEC tried to fix this. They realized the confusion was costing people money and causing lawsuits. Their solution was the kibibyte (KiB).

- Kilobyte (KB): 1,000 bytes (Base-10)

- Kibibyte (KiB): 1,024 bytes (Base-2)

It sounds like something a toddler would say. "Kibibyte." It's a portmanteau of "kilo" and "binary." While it's technically the "correct" way to refer to 1,024 bytes, almost nobody uses it in casual conversation. However, if you use a Linux distribution like Ubuntu, you'll see "KiB" and "MiB" in plenty of tools and technical specs. They're trying to be precise. macOS took the other route: since Mac OS X 10.6 it reports file and disk sizes in decimal units, so a 1TB drive actually shows up as 1 TB.

Windows, on the other hand, is the holdout. Windows still calculates everything in binary (1,024) but labels it as "KB." This is the primary reason for the "disappearing storage" phenomenon. When you buy a "1TB" Western Digital drive, the manufacturer is using the SI definition (1,000,000,000,000 bytes). When you plug it into Windows, the OS divides that number by 1,024 repeatedly: three times ($1024^3$) to report gigabytes, four times ($1024^4$) to report terabytes. The result is about 931 "GB" or 0.91 "TB," making the drive appear much smaller than the box promised.
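Here is a rough sketch of that division, assuming the drive holds exactly the advertised number of bytes (real drives vary slightly, and formatting takes a little more). The function name `reported_capacity` is invented for this example.

```python
def reported_capacity(advertised_bytes: int) -> tuple[float, float]:
    """Return the capacity in binary gigabytes and binary terabytes.

    These are the numbers a binary-counting OS would label "GB" and "TB".
    """
    binary_gb = advertised_bytes / 1024 ** 3
    binary_tb = advertised_bytes / 1024 ** 4
    return binary_gb, binary_tb


# A "1TB" drive as the manufacturer defines it: 10**12 bytes.
gb, tb = reported_capacity(10 ** 12)
print(f'{gb:.2f} "GB" or {tb:.3f} "TB"')
```

Feed it 500 × 10⁹ bytes and you get roughly 465, which is the 500GB-to-465GB shrinkage from the top of this article.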


Real-world impact: Why should you care?

Imagine you’re a network engineer. Bandwidth is almost always measured in decimal. A "100 Mbps" connection moves 100,000,000 bits per second. But your file transfer software might be measuring speed in binary (MiB/s). If you try to calculate how long a download will take without accounting for the 1,000 vs 1,024 difference, your estimate will be wrong every single time.
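To make that concrete, here is a small sketch of a download-time estimate that keeps the units straight. It assumes the file size is given in MiB (binary) and the link speed in Mbps (decimal), and it ignores protocol overhead, so treat the result as a best case. `download_seconds` is a hypothetical helper, not part of any networking library.

```python
def download_seconds(file_size_mib: float, link_mbps: float) -> float:
    """Best-case transfer time for a file sized in MiB over a link rated in Mbps."""
    total_bits = file_size_mib * 1024 ** 2 * 8   # MiB -> bytes -> bits
    bits_per_second = link_mbps * 1_000_000      # Mbps is decimal
    return total_bits / bits_per_second


correct = download_seconds(1024, 100)  # a 1 GiB file on a 100 Mbps link
naive = 1024 * 8 / 100                 # pretends a MiB is a million bytes
print(f"correct: {correct:.1f} s, naive: {naive:.1f} s")
```

The naive shortcut comes out about 4.9% too optimistic, the same megabyte-scale gap as before.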

It also matters for cloud billing. Companies like AWS or Google Cloud sometimes bill based on binary Gigabytes (GiB) while others use decimal. If you are moving petabytes of data, that "small" difference represents thousands of dollars in unbudgeted costs.
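As a back-of-the-envelope illustration, the sketch below prices the same pile of bytes two ways. The rate is completely made up and does not reflect any real provider's pricing; the only point is how the unit definition changes the bill.

```python
# HYPOTHETICAL_RATE is invented for illustration; it is not a real price.
HYPOTHETICAL_RATE = 0.02  # dollars per billed unit per month


def billable_units(num_bytes: int, binary: bool) -> float:
    """Return the number of GiB (binary=True) or GB (binary=False) in num_bytes."""
    return num_bytes / (2 ** 30 if binary else 10 ** 9)


five_petabytes = 5 * 10 ** 15  # 5 PB, decimal
cost_per_gb = billable_units(five_petabytes, binary=False) * HYPOTHETICAL_RATE
cost_per_gib = billable_units(five_petabytes, binary=True) * HYPOTHETICAL_RATE
print(f"billed per GB:  ${cost_per_gb:,.2f}")
print(f"billed per GiB: ${cost_per_gib:,.2f}")
print(f"difference:     ${cost_per_gb - cost_per_gib:,.2f}")
```

Same data, same sticker price per unit, and the monthly totals still differ by several thousand dollars under these assumed numbers.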

Historical context: The 1990s and the transition

There was a time when the industry tried to ignore this. In the early 90s, the confusion was actually used as a marketing tactic. Hard drive manufacturers realized they could make their drives sound bigger by using the 1,000-byte definition while their competitors might still be using 1,024. Eventually, this led to class-action lawsuits.

In Cho v. Seagate Technology (2006), consumers sued because they felt cheated by the capacity labels. Seagate didn't win in court, though. The case ended in a settlement, with Seagate admitting no wrongdoing but offering affected buyers a small refund or free backup software. This is why you now see tiny asterisks on every hard drive box saying "1GB = 1 billion bytes."

How to calculate it yourself

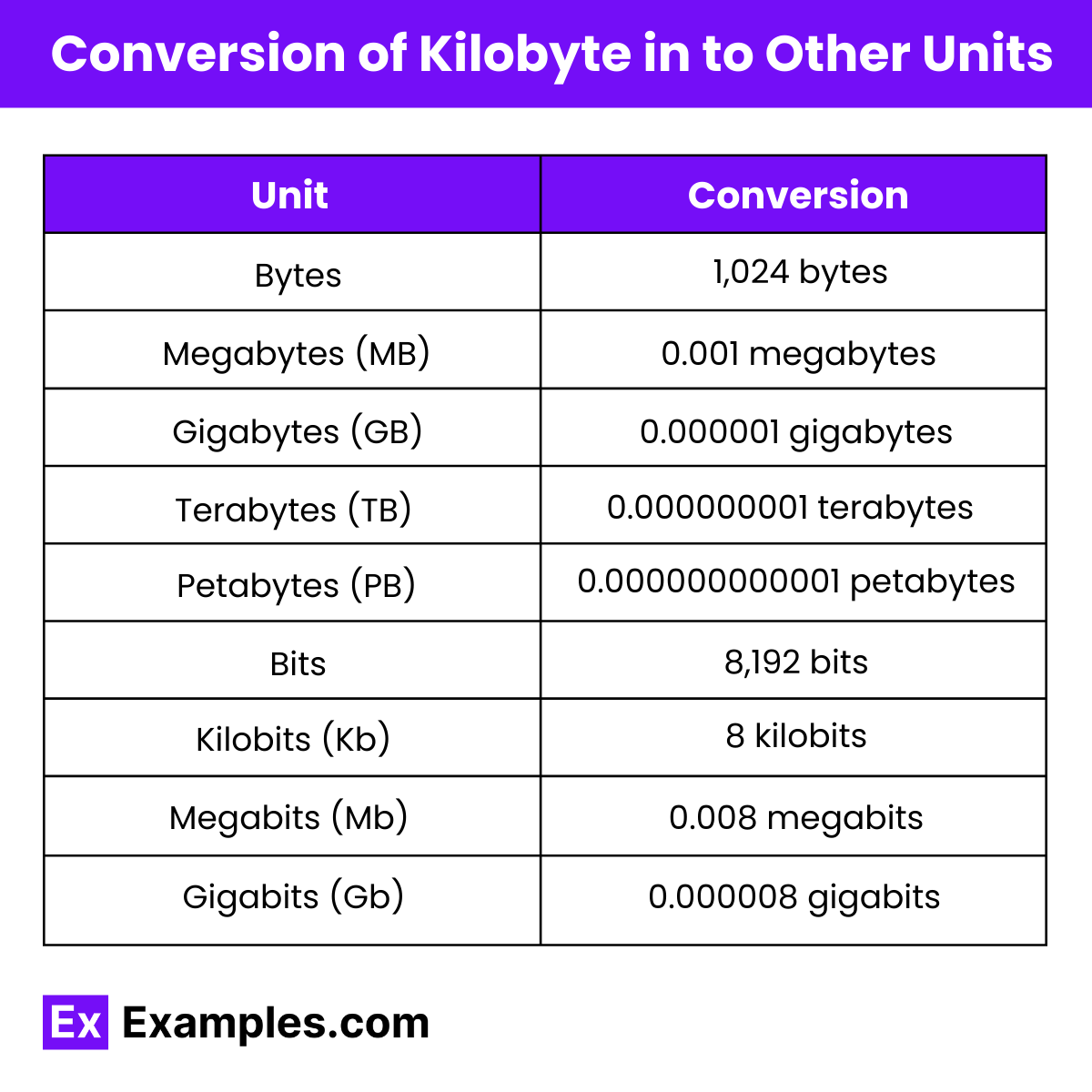

If you want to be a nerd about it, here is how the math breaks down:

To find how many bytes are in a kilobyte (the SI version), you multiply by 1,000, which is $10^3$. To find the binary version (kibibyte), you multiply by 1,024, which is $2^{10}$.

- Take your number of Kilobytes.

- If you want the SI total, multiply by $10^3$.

- If you want the Binary total, multiply by $2^{10}$.

It seems simple, but when you scale up to a Yottabyte, the gap between the two definitions is massive. A Yottabyte (decimal) is $10^{24}$ bytes. A Yobibyte (binary) is $2^{80}$ bytes. The difference between those two numbers is greater than the total amount of data that existed in the world in the early 2000s.
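Python's integers don't overflow, so it can do this arithmetic exactly. The sketch below is one way to write the conversion; the `SI` and `IEC` tables and the `to_bytes` helper are names chosen for this example, and only a handful of units are included.

```python
# Multipliers for a few decimal (SI) and binary (IEC) units.
SI = {"KB": 10 ** 3, "MB": 10 ** 6, "GB": 10 ** 9, "TB": 10 ** 12, "YB": 10 ** 24}
IEC = {"KiB": 2 ** 10, "MiB": 2 ** 20, "GiB": 2 ** 30, "TiB": 2 ** 40, "YiB": 2 ** 80}


def to_bytes(amount: int, unit: str) -> int:
    """Convert an amount in the given unit to an exact byte count."""
    factor = SI.get(unit) or IEC.get(unit)
    if factor is None:
        raise ValueError(f"unknown unit: {unit}")
    return amount * factor


print(to_bytes(1, "KB"), to_bytes(1, "KiB"))

# The yottabyte vs. yobibyte gap, in bytes and in (decimal) zettabytes.
gap = to_bytes(1, "YiB") - to_bytes(1, "YB")
print(gap, gap // 10 ** 21)
```

The gap works out to a little over 200 zettabytes, roughly 21% of a yottabyte.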

Practical steps for managing data

Stop trusting the "label" on your files and start looking at the raw byte count if you need precision.

Right-click any file in Windows and select "Properties." You’ll see two numbers. One is the size in the "user-friendly" format (like 1.2 MB), and right next to it, in parentheses, will be the exact byte count. That raw number is the only truth.

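When buying storage, always assume you will "lose" about 7-10% of the advertised space to this "conversion tax": roughly 7% on drives sold in gigabytes and 9% on drives sold in terabytes. The bytes are all still there; only the unit changed. A 1TB drive shows up as roughly 931 GB in Windows, which is really 931 GiB.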

If you are a developer, use libraries that handle these conversions for you. Don't hardcode 1024 into your apps unless you are specifically working with memory-addressing logic. If you are building a UI for a general user, stick to the 1,000-byte standard; it's what they expect, even if it feels "wrong" to your CS degree.
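If you do end up writing the formatting yourself, a minimal sketch might look like the one below. `format_size` is a hypothetical helper, not from any particular library; it defaults to the 1,000-byte convention and only switches to 1,024 (with the matching KiB/MiB labels) when asked. It doesn't handle negative sizes or values right at a rounding boundary, so a maintained library is still the safer choice.

```python
def format_size(num_bytes: int, binary: bool = False) -> str:
    """Format a byte count using decimal (default) or binary units."""
    base = 1024 if binary else 1000
    units = (
        ["B", "KiB", "MiB", "GiB", "TiB"]
        if binary
        else ["B", "KB", "MB", "GB", "TB"]
    )
    size = float(num_bytes)
    for unit in units:
        # Stop at the first unit that fits, or at the largest unit we know.
        if size < base or unit == units[-1]:
            return f"{int(size)} B" if unit == "B" else f"{size:.1f} {unit}"
        size /= base


print(format_size(1536))                  # decimal label
print(format_size(1536, binary=True))     # binary label
print(format_size(10 ** 12, binary=True)) # a "1TB" drive in binary units
```

The important design choice is that the label always matches the math: the function never divides by 1,024 and then prints "KB."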

The fight between 1,000 and 1,024 isn't going away. It’s baked into the history of computing. Just remember: kilo means 1,000 in the street, but 1,024 in the sheets (of a spreadsheet).