It’s the first thing they teach you in any Intro to CS class. You ask how many bits in a byte, and the professor barks back "eight" before you can even finish the sentence. It feels like a fundamental law of the universe, right up there with gravity or the speed of light.

But it hasn't always been that way.

The "eight-bit byte" is actually a historical accident that just happened to win the tech wars of the 1960s and 70s. If you were sitting in a computer lab fifty years ago, that answer might have been six, or nine, or even something totally bizarre like twelve. Today, we treat the 8-bit byte as the atom of computing, but understanding why it stuck—and how it actually works—is the difference between just using a computer and actually understanding the machine.

The Basic Math: How Many Bits in a Byte Right Now?

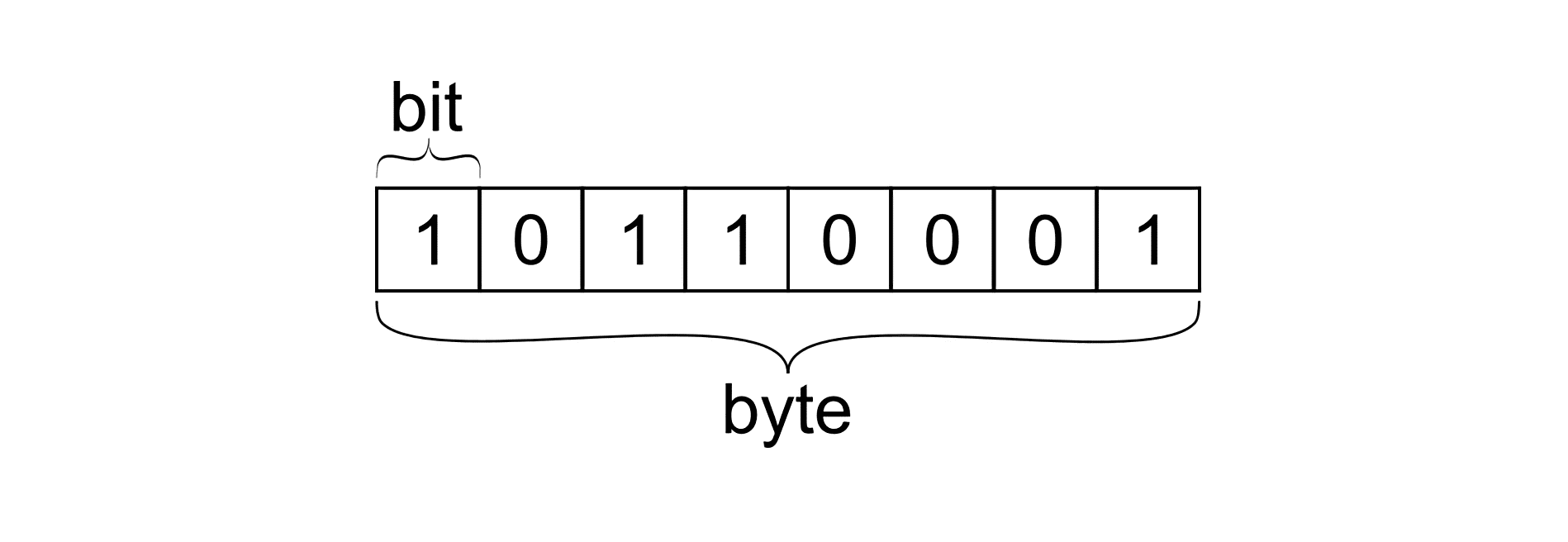

Let’s get the direct answer out of the way for the sake of your sanity. In modern computing, there are 8 bits in 1 byte.

A bit is a binary digit. It is the smallest possible unit of data, representing either a 0 or a 1. Think of it like a light switch that is either off or on. When you group eight of these switches together, you get a byte.

Why eight?

Well, it comes down to combinations. With 8 bits, you can create $2^8$ different patterns. That equals 256 unique combinations. This turned out to be the "sweet spot" for early computer scientists. It was more than enough space to encode the entire English alphabet (both upper and lower case), the digits 0-9, and a bunch of punctuation marks and control characters. ASCII (American Standard Code for Information Interchange) actually squeezes all of that into 7 bits, or 128 codes, so an 8-bit byte holds any ASCII character with one bit to spare for error checking or extra symbols. That comfortable fit is a big part of why eight stuck.

Honestly, if we had stayed with 6-bit bytes, we’d only have 64 combinations ($2^6$). Upper case, lower case, and the ten digits already eat up 62 of those, leaving just two codes for spaces, punctuation, and everything else. That is why 6-bit character sets typically dropped lowercase letters altogether. Eight just worked.
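
The counting argument above is easy to check for yourself. Here is a minimal Python sketch; the helper name `patterns` is made up for illustration, and the "alphabet" is just the upper case letters, lower case letters, and digits mentioned in the text.

```python
def patterns(bits: int) -> int:
    """Number of distinct values a group of `bits` binary digits can hold."""
    return 2 ** bits

# Upper case + lower case + the digits 0-9.
letters_and_digits = 26 + 26 + 10

for width in (6, 8):
    spare = patterns(width) - letters_and_digits
    print(f"{width} bits: {patterns(width)} patterns, {spare} left over for punctuation and control codes")
```

Six bits leave 2 spare codes, while eight bits leave 194.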

Werner Buchholz and the Birth of the Byte

The word "byte" itself isn't some ancient mathematical term. It was coined in 1956 by a guy named Werner Buchholz. He was working on the IBM Stretch computer.

He actually spelled it with a "y" on purpose.

Why? Because he was worried that if he spelled it "bite," it would be too easy to turn into "bit" by accident, whether through a typo or a hurried note. Back then, a byte was just defined as a "group of bits used to encode a character." In the early IBM Stretch design, a byte could actually be any length from one to six bits.

It wasn't until IBM announced the System/360 in 1964 that the 8-bit byte started to become the industry standard. IBM was the 800-pound gorilla in the room. Whatever they did, everyone else had to follow if they wanted their hardware to talk to each other. Over the next decade or so, people stopped asking how many bits in a byte because the answer was effectively locked in.

Is a Byte Always Eight Bits?

Technically, no.

If you want to be a real pedant at a nerd party, you should use the word "octet." In networking protocols and formal international standards (like those from the IEC), an octet specifically refers to exactly eight bits.

Why the different word? Because in the early days of the internet, some computers, like the PDP-10, used 36-bit words and had no fixed 8-bit byte at all. Programs on those machines commonly packed 7-bit or 9-bit bytes into each word. If you sent a "byte" from an 8-bit machine to one of them, nobody could be sure both sides meant the same thing. Engineers started using "octet" to be 100% clear they meant eight bits, regardless of what the hardware thought.

You’ll still see the term "octet" in RFCs (Request for Comments) and technical manuals for routers and switches. But for 99% of the world, a byte is an octet.

Bits, Nibbles, and Shifting Scales

Digital storage moves fast. It’s not just about the single byte anymore; it’s about the massive prefixes we attach to them. But before we get to Gigabytes, we have to talk about the "Nibble."

Yes, a nibble is a real thing. It’s half a byte. Four bits.

It’s a bit of a joke name that stuck. Since a byte has $2^8$ possible values, a nibble has $2^4$, which gives you 16 combinations. This is perfect for representing a single hexadecimal digit (0-F), so any byte can be written as exactly two hex digits.
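
To see the two halves of a byte, here is a small Python sketch. The function name `split_nibbles` is hypothetical, chosen just for this example.

```python
def split_nibbles(byte: int) -> tuple[int, int]:
    """Return the (high, low) 4-bit halves of one 8-bit value."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value that fits in one byte (0-255)")
    high = byte >> 4      # shift the top four bits down
    low = byte & 0x0F     # mask off everything but the bottom four bits
    return high, low

high, low = split_nibbles(0xA7)
print(f"{high:X} {low:X}")   # prints "A 7"
```

Each nibble maps to one hex digit, which is why the byte `0xA7` reads as "A" followed by "7".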

When we scale up, things get confusing because humans like base-10 (powers of 10) but computers love base-2 (powers of 2).

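- A Kilobyte (KB) is 1,000 bytes in the decimal world, but 1,024 bytes ($2^{10}$) in the binary world.

- A Megabyte (MB) is a million bytes in decimal, or 1,048,576 bytes ($2^{20}$) in binary.

- A Gigabyte (GB) is a billion bytes in decimal, or 1,073,741,824 bytes ($2^{30}$) in binary.

- A Terabyte (TB) is a trillion bytes in decimal, or 1,099,511,627,776 bytes ($2^{40}$) in binary.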

This is why when you buy a 1TB hard drive, your computer may say you only have about 931GB of space. The manufacturer is counting in base-10 (1 TB = $10^{12}$ bytes) while operating systems such as Windows count in base-2 (1 GB = $2^{30}$ bytes, strictly speaking a "gibibyte" or GiB). You aren't being scammed; they're just using different math.
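
The 931GB figure falls straight out of the arithmetic. Here is a rough Python sketch, assuming the drive maker uses decimal terabytes and the operating system reports binary gigabytes. The function name `reported_gb` is made up for this example.

```python
DECIMAL_TB = 1000 ** 4   # bytes in one terabyte as the drive maker counts it
BINARY_GB = 1024 ** 3    # bytes in one "gigabyte" as a base-2 operating system counts it

def reported_gb(advertised_tb: float) -> float:
    """Capacity a base-2 operating system would show for a decimal-TB drive."""
    return advertised_tb * DECIMAL_TB / BINARY_GB

print(f"{reported_gb(1):.1f}")   # prints "931.3"
```

The gap grows with each prefix: about 2% at the kilobyte level, about 7% at the gigabyte level, and about 9% at the terabyte level.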

Why Does This Matter for You?

Understanding how many bits in a byte isn't just trivia. It affects your daily life in ways you might not realize, especially when it comes to internet speeds.

Have you ever noticed that your "100 Megabit" internet connection only downloads files at about 12 Megabytes per second?

That’s because ISPs (Internet Service Providers) advertise speeds in bits (small 'b'), while your browser shows download speeds in bytes (capital 'B'). Since there are 8 bits in a byte, you have to divide that 100Mbps by 8 to get the best-case speed in bytes. Protocol overhead usually shaves off a little more.

- 100 Megabits per second / 8 = 12.5 Megabytes per second.

Network speeds have traditionally been quoted in bits, and that convention also happens to flatter the marketing: 100 looks way bigger than 12.5. Always check the casing of the "B." If it’s lowercase, you’re looking at bits. If it’s uppercase, you’re looking at bytes.
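
If you want to run the conversion for your own plan, a tiny Python helper does it. This is only a sketch: the name `megabits_to_megabytes` is invented here, and the result is a theoretical ceiling that ignores network overhead.

```python
BITS_PER_BYTE = 8

def megabits_to_megabytes(mbps: float) -> float:
    """Convert an advertised speed in megabits/s to megabytes/s."""
    return mbps / BITS_PER_BYTE

for plan in (100, 500, 1000):
    print(f"{plan} Mbps is at most {megabits_to_megabytes(plan)} MB/s")
```

A "gigabit" connection, for example, tops out at 125 MB/s.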

The Future: Will the Byte Ever Change?

Is it possible we’ll move away from the 8-bit byte?

Probably not.

Modern processors are 64-bit, meaning they can handle data in chunks of 64 bits at a time. However, those 64 bits are still just eight 8-bit bytes grouped together. The entire infrastructure of the internet, virtually every file format (like .jpg or .mp3), and nearly every mainstream programming language is built on the foundation of the 8-bit byte. Changing it now would be like trying to change the width of every railroad track in the world simultaneously. It would be a total catastrophe.
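
You can watch a 64-bit value fall apart into eight bytes using Python's built-in integer methods. The number below is arbitrary, picked only so that each byte is easy to spot.

```python
value = 0x0123456789ABCDEF   # an arbitrary 64-bit number

# Split it into bytes, most significant byte first.
chunks = value.to_bytes(8, byteorder="big")

print(len(chunks))       # prints 8
print(chunks.hex(" "))   # prints "01 23 45 67 89 ab cd ef"

# Reassembling the eight bytes gives back the original number.
assert int.from_bytes(chunks, byteorder="big") == value
```

The `byteorder` argument matters because different machines store those eight bytes in different orders, but either way there are exactly eight of them.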

We are stuck with eight. And honestly, it works pretty well.

Actionable Takeaways for the Digital Age

- Check your "B"s: When looking at data plans or SSD speeds, remember that 1 Byte (B) = 8 bits (b). Don't let marketing numbers confuse you.

- The 1024 Rule: If your storage looks "short," remember that drive makers count in steps of 1,000 while many operating systems count in steps of 1,024.

- Hexadecimal Awareness: If you're doing any basic coding or color picking (CSS), remember that those 6-digit hex codes (like #FFFFFF) are just three 8-bit bytes representing Red, Green, and Blue.

- Octet vs. Byte: If you're reading a technical networking manual and see "octet," don't panic. It's just a fancy way of saying a byte to ensure there's no confusion with legacy systems.

- Character Encoding: Most of the web uses UTF-8 now. It's a "variable-width" encoding, meaning a character can be one byte, or it can be up to four bytes. Plain ASCII characters still take exactly one byte each, which is how we get emojis and non-English characters to work alongside text originally designed around one byte per character.
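
Two of the takeaways above, hex colors and UTF-8, are easy to poke at in Python. This is a minimal sketch: `hex_to_rgb` and `utf8_length` are hypothetical helper names, and the sample characters are arbitrary picks.

```python
def hex_to_rgb(code: str) -> tuple[int, int, int]:
    """Split a 6-digit hex color such as '#FFFFFF' into three byte values."""
    raw = bytes.fromhex(code.lstrip("#"))
    if len(raw) != 3:
        raise ValueError("expected exactly six hex digits")
    return raw[0], raw[1], raw[2]

def utf8_length(ch: str) -> int:
    """Number of bytes UTF-8 needs to store the given text."""
    return len(ch.encode("utf-8"))

print(hex_to_rgb("#FFFFFF"))   # prints (255, 255, 255)

# One plain letter, one accented letter, one currency sign, one emoji.
for ch in ("A", "é", "€", "😀"):
    print(ch, utf8_length(ch))
```

The four sample characters take 1, 2, 3, and 4 bytes respectively.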

The 8-bit byte is the unsung hero of the information age. It's the standard that didn't have to be, but because it was "good enough" for IBM in the 60s, it's the heartbeat of every device in your pocket today.