You’re looking at a screen right now. Whether it’s a high-end OLED or a cracked smartphone display, everything you see is built on a lie—or at least, a very specific, agreed-upon fiction. We're taught from day one that there are exactly eight bits in a byte. It's the gospel of the digital age. But honestly, if you stepped into a time machine and headed back to the 1960s, that answer would get you laughed out of a research lab.

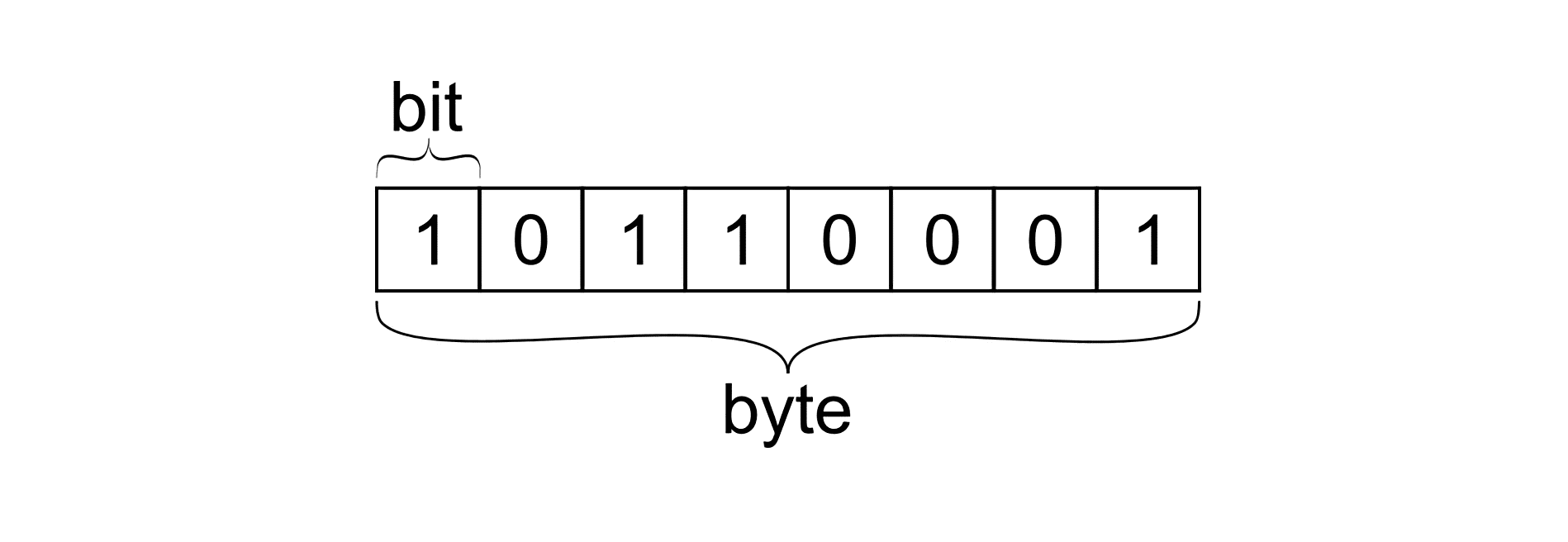

Computers don't actually care about the number eight. They care about electricity. Specifically, is the gate open or closed? That’s your bit—the binary digit. It's the smallest, most annoying little atom of data. But a single bit can’t do much. It’s like a single letter in an alphabet that only has two characters. To say anything meaningful, you have to group them. And for a long time, how we grouped them was basically the Wild West of engineering.

Why We Settled on 8 Bits in a Byte

The "8-bit byte" wasn't some divine revelation from the heavens. It was a business decision. Specifically, it was an IBM decision. Back in the mid-1950s, Werner Buchholz, a computer scientist working on the IBM Stretch project, coined the term "byte." He misspelled it on purpose so people wouldn't confuse it with "bit." At the time, a byte could be anything from one to six bits.

Then came the IBM System/360. This was the mainframe that changed everything. IBM decided to move away from 6-bit characters (which couldn't handle lowercase letters very well) to an 8-bit system. Why eight? Because it’s a power of two ($2^3$). It’s elegant for hardware. It allowed for 256 different combinations, which was more than enough for the entire English alphabet, numbers, punctuation, and a few weird symbols for good measure. Because IBM dominated the market, everyone else eventually had to fall in line or risk being incompatible.

The Rivals: 6, 7, and 9-Bit Bytes

It’s easy to forget that the 36-bit architecture was once a huge deal. Machines like the PDP-10 used 36-bit words. On those systems, you might find bytes that were 6 bits or 9 bits long. If you were working on a Honeywell 6000 series, your "byte" might look nothing like the one on your modern MacBook.

Even the early internet wasn't sure. The original TCP/IP protocols used the term "octet" instead of byte. Go read an RFC (Request for Comments) document from the 70s or 80s. You’ll see "octet" everywhere. Engineers used that word because they couldn't guarantee that the computer on the other end of the connection agreed that a byte was eight bits. An octet, by definition, is always eight. Today, "byte" and "octet" are effectively synonyms in 99.9% of contexts, but that "octet" label is a scar from a time when the 8-bit standard was still winning its fight for dominance.

Bits, Nibbles, and the Math of Memory

Let's get small for a second. Half a byte is called a nibble. I'm serious. That is the actual technical term.

A nibble is 4 bits. While you won't see "nibble" on a spec sheet for a new gaming PC, it's still vital in hexadecimal notation. One hex digit (0-F) represents exactly four bits, so two hex digits represent one 8-bit byte. This is why colors in CSS look like #FFAA00. That's just three bytes of data: one for Red, one for Green, and one for Blue.
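To make the hex-to-byte mapping concrete, here is a minimal Python sketch that splits a six-digit CSS color into its three bytes. The function name `hex_color_to_bytes` is made up for this illustration, and it only handles the six-digit form.

```python
def hex_color_to_bytes(color: str) -> tuple[int, int, int]:
    """Split a 6-digit CSS hex color like '#FFAA00' into (red, green, blue)."""
    digits = color.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected exactly six hex digits")
    raw = bytes.fromhex(digits)  # every pair of hex digits becomes one byte
    return raw[0], raw[1], raw[2]


red, green, blue = hex_color_to_bytes("#FFAA00")
print(red, green, blue)          # 255 170 0
print(f"{green:08b}")            # 10101010, the eight bits behind "AA"
print(green >> 4, green & 0x0F)  # 10 10, the high and low nibbles
```

Each hex digit covers one nibble, so the "AA" in the middle is two nibbles that both hold the value 10.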

- 1 Bit: A single switch. 0 or 1.

- 4 Bits: A nibble. Can represent 16 values.

- 8 Bits: The standard byte. 256 values.

- 16 Bits: A "word" (on many systems). 65,536 values.
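The value counts in the list above all come from the same rule: n bits give $2^n$ combinations. A quick Python sketch, with `distinct_values` as a name invented here, reproduces them:

```python
def distinct_values(bit_count: int) -> int:
    """Number of different patterns that bit_count binary digits can hold."""
    return 2 ** bit_count


for name, bits in [("bit", 1), ("nibble", 4), ("byte", 8), ("16-bit word", 16)]:
    print(f"{name}: {bits} bits -> {distinct_values(bits):,} values")
```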

The jump from 8 bits to 16, 32, and 64 is where things get interesting. When people talk about a "64-bit processor," they aren't talking about the size of the byte. A byte is still 8 bits. They're talking about the "word size": how many bits the CPU handles as one natural unit in its registers and memory addresses. It's like the difference between the size of a bite of food and how big the actual spoon is.
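If you're curious what your own machine reports, the rough Python check below uses the size of a C pointer as a stand-in for word size. That is an approximation rather than a formal definition, and the constant `BITS_PER_BYTE` is an assumption that holds on mainstream platforms.

```python
import struct

BITS_PER_BYTE = 8  # assumption: 8-bit bytes, as on mainstream platforms

pointer_bytes = struct.calcsize("P")  # size of a C pointer (void *) in bytes
pointer_bits = pointer_bytes * BITS_PER_BYTE

print(f"Pointers here are {pointer_bytes} bytes, or {pointer_bits} bits, wide.")
```

On a typical 64-bit system this reports 8 bytes: the word got wider, but each byte inside it is still 8 bits.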

The Massive Scale: From Bytes to Yottabytes

We’ve reached a point where talking about a single byte is almost pointless. Your average "low-quality" photo is a million bytes. A short 4K video? Billions. This is where the prefixes come in, and where most people (and even some tech companies) get the math wrong.

There is a long-standing feud between "decimal" and "binary" measurements. Hard drive manufacturers love decimal. To them, 1 Kilobyte is 1,000 bytes. It makes the numbers on the box look bigger. But your operating system—Windows, specifically—usually thinks in binary. To Windows, 1 Kilobyte is 1,024 bytes ($2^{10}$).

This is why you buy a 1TB drive, plug it in, and your computer tells you it only has about 931GB. The drive really does hold $10^{12}$ bytes, but Windows divides that by $2^{30}$ before printing "GB," which gives roughly 931. You didn't get ripped off; you're just caught in a crossfire between base-10 and base-2 math. To fix this confusion, the IEC (International Electrotechnical Commission) introduced "kibibytes" (KiB) for 1,024 bytes and kept "kilobytes" (kB) for 1,000. Hardly anyone uses kibibyte in conversation because it sounds like a breakfast cereal, but it's the technically "correct" way to distinguish the two.
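The 931 figure is easy to check yourself. This small Python sketch assumes the drive label uses decimal terabytes and the operating system divides by $2^{30}$; the function name is invented for the example.

```python
TB = 10 ** 12   # decimal terabyte, as printed on the box
GIB = 2 ** 30   # binary gigabyte (gibibyte), which Windows labels "GB"


def advertised_tb_to_reported_gb(terabytes: float) -> float:
    """Convert a drive's advertised decimal TB into binary gigabytes."""
    return terabytes * TB / GIB


print(f"{advertised_tb_to_reported_gb(1):.1f}")  # 931.3
```

The same bytes are on the disk either way; only the divisor changes.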

Real-World Data Sizes in 2026

- A single character in a text file: Usually 1 byte (if using ASCII) or up to 4 bytes in UTF-8 (most single emoji take 4, and emoji built from several code points take more).

- A typical email (text only): About 10 KB to 50 KB.

- A high-res JPEG: 2 MB to 5 MB.

- One minute of uncompressed, CD-quality stereo audio: Roughly 10 MB.

- A modern AAA video game: 100 GB to 200 GB.
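Two of the figures above can be checked in a few lines of Python. The audio numbers assume CD-quality sound (44,100 samples per second, 2 bytes per sample, 2 channels); the list itself doesn't say which format it has in mind, so that is my assumption.

```python
def utf8_length(character: str) -> int:
    """Bytes needed to store the text in UTF-8."""
    return len(character.encode("utf-8"))


# "A", "é", "€" and a grinning-face emoji, written as escapes to avoid ambiguity
for ch in ["A", "\u00e9", "\u20ac", "\U0001F600"]:
    print(f"U+{ord(ch):04X} takes {utf8_length(ch)} byte(s)")

# Assumed CD-quality parameters (not stated in the list above)
SAMPLES_PER_SECOND = 44_100
BYTES_PER_SAMPLE = 2
CHANNELS = 2

one_minute_audio_bytes = SAMPLES_PER_SECOND * BYTES_PER_SAMPLE * CHANNELS * 60
print(f"{one_minute_audio_bytes / 10**6:.1f} MB")  # 10.6 MB
```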

Why 8 Bits Still Rules

You might wonder why we don't just move to 10-bit bytes or something "cleaner" for our base-10 brains. We can't. The entire foundation of modern computing, from compilers to operating systems to file systems, is built around the 8-bit byte. Changing the definition of a byte now would be like trying to change the length of a second or the distance of a meter. The "technical debt" would be catastrophic.

We’ve optimized our hardware to move these 8-bit chunks with incredible efficiency. Modern CPUs use SIMD (Single Instruction, Multiple Data) to process dozens of bytes at the exact same time. We've built massive highways designed specifically for 8-wheeled trucks. Switching to 10-wheeled trucks would mean rebuilding every road on earth.

Moving Forward with This Knowledge

Understanding that there are 8 bits in a byte is the starting point for really grasping how your digital life works. It explains why your internet speed is measured in bits (Mbps) but your file sizes are in Bytes (MB).

Remember: Internet speed is usually small 'b' (bits), and storage is big 'B' (Bytes). Since there are 8 bits in a byte, a 100 Mbps internet connection will actually download a file at a maximum of 12.5 MB per second. Knowing that simple division ($100 / 8$) prevents a lot of frustration when you're wondering why your "fast" internet is taking forever to download a 1GB game.
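Here is that division as a tiny Python sketch. It assumes a perfectly steady connection with no protocol overhead and treats 1 GB as 1,000 MB, so real downloads will take longer; both function names are invented for illustration.

```python
BITS_PER_BYTE = 8


def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert a link speed in megabits/s to megabytes/s."""
    return mbps / BITS_PER_BYTE


def download_seconds(file_megabytes: float, mbps: float) -> float:
    """Best-case time to fetch a file, ignoring overhead and congestion."""
    return file_megabytes / mbps_to_megabytes_per_second(mbps)


print(mbps_to_megabytes_per_second(100))  # 12.5
print(download_seconds(1000, 100))        # 80.0 seconds for a 1 GB file
```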

If you want to apply this practically, start looking at your file metadata. Look at a simple .txt file. Type one letter. Save it. Check the size. As long as the editor saves plain ASCII or UTF-8 without a byte order mark or trailing newline, it'll be 1 byte. Type another. 2 bytes. It's the most direct way to see the "ghost in the machine" and realize that everything, from movies to AI models to video calls, is just a massive string of those eight-bit groups.
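You can run the same experiment without opening an editor. The sketch below writes text to a temporary file as UTF-8 with no byte order mark and asks the file system for its size; `saved_size` and the file name are made up for this example.

```python
import os
import tempfile


def saved_size(text: str) -> int:
    """Write text to a throwaway UTF-8 file and return its size in bytes."""
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "note.txt")
        # newline="" stops Python from translating line endings on write
        with open(path, "w", encoding="utf-8", newline="") as handle:
            handle.write(text)
        return os.path.getsize(path)


print(saved_size("a"))   # 1
print(saved_size("ab"))  # 2
```

Try a non-ASCII character such as "é" and the count goes up by two, because UTF-8 spends more than one byte on it.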

To get a better handle on your own data, take these steps:

- Check your ISP contract: Look for "Mbps" vs "MB/s." Divide the Mbps figure by 8 to get your maximum download speed in MB/s; real-world speeds will usually come in a little lower.

- Audit your storage: Use a tool like WinDirStat or DaisyDisk to see where your Gigabytes are going. You'll see how small text files compare to bloated video "blobs."

- Learn Hexadecimal basics: If you’re into coding or design, understanding how two hex characters represent one 8-bit byte will make debugging CSS or low-level code feel like a superpower.