Ever stared at a spec sheet and wondered why everything in computing seems to revolve around these weird, specific numbers? You see 32-bit, 64-bit, 4-byte chunks, and it feels like a math test you didn't study for. Honestly, converting 32 bits to bytes is one of the most basic operations in computing, yet it’s often explained with so much jargon that people just tune out.

It’s simple. 4 bytes.

That’s the "too long; didn't read" version. But if you stop there, you miss why this ratio defined an entire era of gaming, why your old Windows XP machine couldn't use all your RAM, and how modern processors actually "talk."

The Math Behind 32 Bits to Bytes

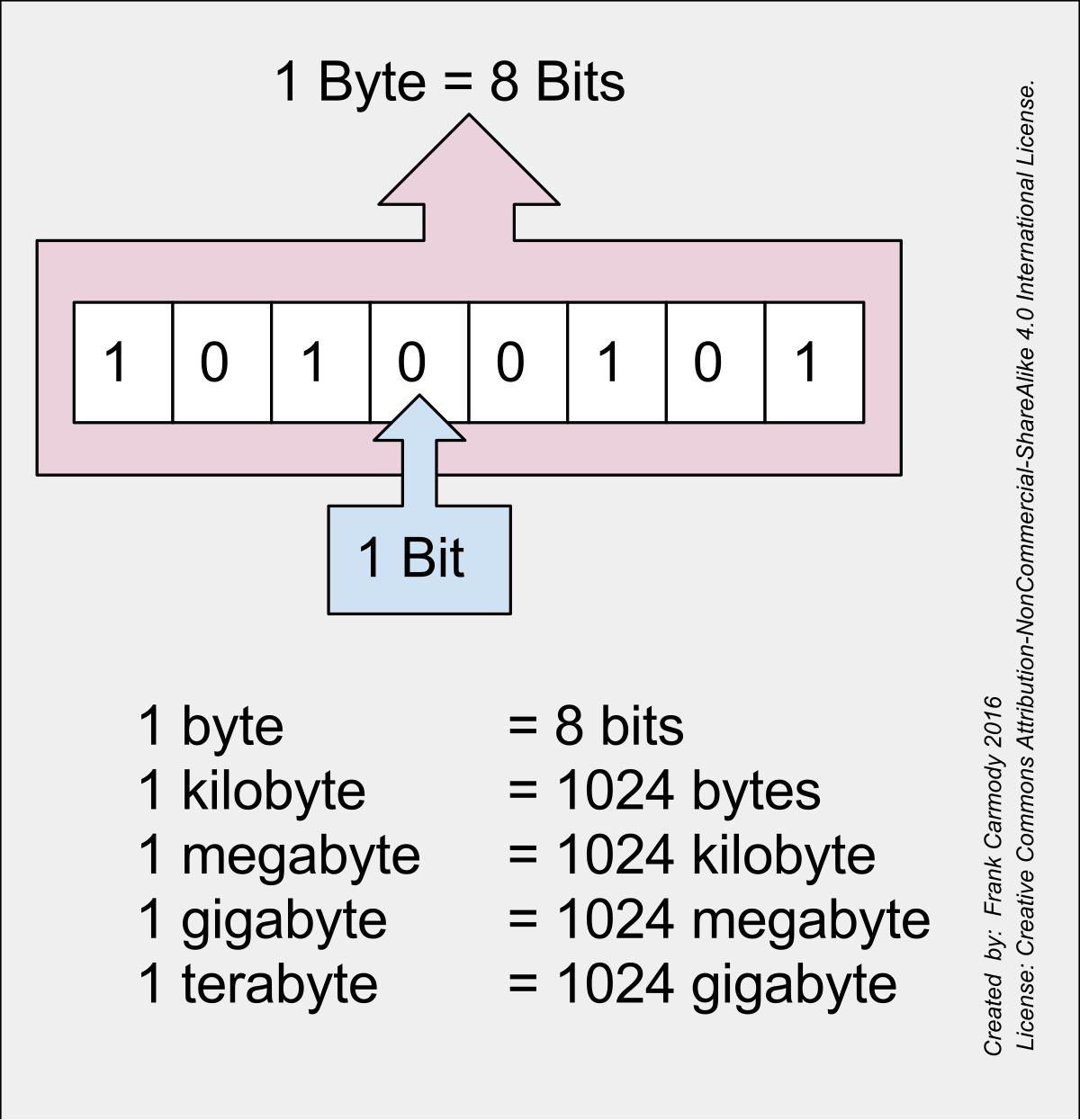

Computer memory is just a massive grid of light switches. On or off. 1 or 0. That's a bit. But a single bit is useless for anything complex. To make it functional, we group them.

Starting in 1964, when the IBM System/360 hit the scene, the industry gradually settled on the idea that 8 bits make 1 byte. Why eight? It was a compromise between efficiency and the ability to represent enough characters (like the alphabet and numbers) in a single block.

So, when you do the math for 32 bits to bytes, you’re just dividing 32 by 8.

$32 \div 8 = 4$

You’ve got 4 bytes. In C or C++, this is typically the size of an "int" on modern platforms (the language standards themselves only guarantee at least 16 bits). When a programmer tells the computer to remember a whole number, the computer carves out a 4-byte hole in the RAM to stick that number in.
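The division above can be checked in a few lines of Python. This is a minimal sketch: `bits_to_bytes` is a hypothetical helper name used only for illustration, and the `struct` check relies on the module's standard size for a C `int`, which is 4 bytes.

```python
import struct

BITS_PER_BYTE = 8


def bits_to_bytes(bits: int) -> int:
    """Convert a bit count to whole bytes (hypothetical helper for illustration)."""
    if bits % BITS_PER_BYTE != 0:
        raise ValueError("bit count is not a whole number of bytes")
    return bits // BITS_PER_BYTE


print(bits_to_bytes(32))      # 4

# "<i" asks struct for the standard-size C int, which is 4 bytes.
print(struct.calcsize("<i"))  # 4
```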

The 4GB Ceiling: Why 32 Bits Ruined Your RAM Upgrade

Here is where it gets spicy. For decades, "32-bit" was the gold standard for operating systems. If you remember the transition from Windows XP to Windows 7, you might remember the heartbreak of buying 8GB of RAM and seeing your computer only recognize about 3.5GB.

This isn't a glitch. It’s a mathematical wall.

A 32-bit processor uses those bits as "addresses." Imagine a mailman trying to deliver mail. If his clipboard only has room for a 32-digit binary number, he can only find a certain number of houses.

Specifically, $2^{32}$ houses.

That equals 4,294,967,296 unique locations. Since each location holds one byte of data, a 32-bit system can only "see" 4,294,967,296 bytes.

Convert that back: 4,294,967,296 bytes divided by 1,024 (to get KB), then by 1,024 (to get MB), then by 1,024 (to get GB). You get exactly 4GB (strictly speaking 4 GiB, since we divided by 1,024 rather than 1,000).

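Because of how hardware reserved some of those addresses for things like video cards, you usually ended up with even less. For a flat 32-bit address space, it was a hard limit. You could stick 128GB of RAM in that motherboard, but a 32-bit CPU would just stare at it, confused, only using the first 4GB. (Workarounds like Physical Address Extension let some 32-bit systems reach more physical memory, but each program was still stuck with 32-bit addresses.) This is why we eventually had to move to 64-bit architecture, which can in theory address up to 16 exabytes of data.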
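The address-space arithmetic can be reproduced directly. The sketch below assumes one byte per address, as described above. The 512 MiB reserved for hardware is a made-up figure chosen only to show how a number like 3.5GB can come about; the real amount varies by machine.

```python
ADDRESS_BITS = 32

# One byte per address, so the address count is also the byte count.
addressable_bytes = 2 ** ADDRESS_BITS
print(addressable_bytes)                 # 4294967296

gib = addressable_bytes / 1024 / 1024 / 1024
print(gib)                               # 4.0

# Assumption for illustration only: 512 MiB of addresses reserved for devices.
reserved_bytes = 512 * 1024 * 1024
visible_gib = (addressable_bytes - reserved_bytes) / 1024 ** 3
print(visible_gib)                       # 3.5

# For comparison, a full 64-bit address space measured in EiB (1024**6 bytes).
print(2 ** 64 // 1024 ** 6)              # 16
```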


Does Anyone Still Use 32-Bit?

Surprisingly, yeah. While your iPhone and your MacBook are firmly in the 64-bit camp, the world of "Embedded Systems" is crawling with 32-bit logic.

Think about your microwave. Or a simple thermostat. Or the controller in a cheap drone. These devices don't need 16GB of RAM. They barely need 1MB. Using a 32-bit architecture is cheaper, consumes less power, and is more than enough to handle the simple task of "is the popcorn done?"

Microcontrollers like the STM32 family, built on ARM Cortex-M cores, are the backbone of modern electronics. They are 32-bit because a 4-byte "word" is a sweet spot for processing sensor data without burning through a battery.

Color Depth and the 32-Bit Lie

If you’ve ever messed with Photoshop or video game settings, you’ve seen "32-bit color." This is actually a bit of a misnomer compared to CPU architecture.

In digital imaging, color is usually 24-bit.

- 8 bits for Red

- 8 bits for Green

- 8 bits for Blue

$8 + 8 + 8 = 24$. That gives you about 16.7 million colors. So where does the 32-bit come from?

The "Alpha Channel."

Those extra 8 bits (1 byte) are used for transparency. So, 24 bits of color plus 8 bits of "how see-through is this pixel" equals 32 bits. When a GPU processes a 32-bit pixel, it’s reading 4 bytes of data per dot on your screen.
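Here is a rough sketch of how four 8-bit channels fit into one 32-bit value. The `pack_rgba` helper and the `0xRRGGBBAA` channel order are assumptions made for illustration; real pixel formats order the channels differently (RGBA, BGRA, ARGB, and so on).

```python
import struct


def pack_rgba(r: int, g: int, b: int, a: int) -> int:
    """Pack four 8-bit channels into one 32-bit integer as 0xRRGGBBAA (assumed order)."""
    for channel in (r, g, b, a):
        if not 0 <= channel <= 255:
            raise ValueError("each channel must fit in 8 bits (0-255)")
    return (r << 24) | (g << 16) | (b << 8) | a


pixel = pack_rgba(255, 128, 0, 255)      # opaque orange
print(hex(pixel))                        # 0xff8000ff

# Serialized as an unsigned 32-bit integer, the pixel occupies 4 bytes.
print(len(struct.pack(">I", pixel)))     # 4

# 24 bits of color alone give about 16.7 million combinations.
print(2 ** 24)                           # 16777216
```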

This is why high-resolution textures and framebuffers eat up so much VRAM. Every single pixel on a 4K display is pulling 4 bytes. 4K (3840 × 2160) is roughly 8.3 million pixels.

$8,300,000 \times 4$ bytes = roughly 33.2MB per single frame.


At 60 frames per second, that works out to roughly 2GB of 4-byte "chunks" moving through the bus every second.
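The frame-size arithmetic can be reproduced as follows. This sketch assumes an uncompressed 3840 × 2160 frame at 4 bytes per pixel and uses decimal megabytes and gigabytes. Actual GPU traffic depends on compression, overdraw and other factors it ignores.

```python
WIDTH, HEIGHT = 3840, 2160   # assumed "4K" resolution
BYTES_PER_PIXEL = 4          # 32-bit color: 8 bits each for R, G, B, alpha
FPS = 60

pixels = WIDTH * HEIGHT
frame_bytes = pixels * BYTES_PER_PIXEL
bytes_per_second = frame_bytes * FPS

print(pixels)                        # 8294400
print(frame_bytes / 1_000_000)       # 33.1776 (MB per frame)
print(bytes_per_second / 1e9)        # 1.990656 (GB per second)
```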

The Year 2038 Problem

You've heard of Y2K? The "Year 2038" problem is the sequel, and it all comes down to a 4-byte, 32-bit integer.

Many Unix-like systems (including Linux and macOS) store time as the number of seconds that have passed since January 1st, 1970. Historically, they stored this number in a (you guessed it) 32-bit signed integer.

A signed integer uses 1 bit for the plus/minus sign and 31 bits for the number. The maximum number you can fit in 31 bits is $2^{31} - 1$, or 2,147,483,647.

On January 19, 2038, at 03:14:07 UTC, the number of seconds since 1970 will hit 2,147,483,647.

One second later? The counter overflows. The sign bit flips and the number becomes -2,147,483,648. Suddenly, these computers will think it’s December 13, 1901.

Engineers are currently racing to move legacy systems to a 64-bit "time_t" type to avoid the digital meltdown. It's a literal countdown set by the largest number that fits in 4 bytes of memory.
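The rollover can be simulated without waiting for 2038. In this sketch, `wrap_int32` is a hypothetical helper that mimics how a 32-bit signed counter wraps around. The dates are computed by adding seconds to the 1970 epoch rather than by calling any platform time function.

```python
import struct
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2 ** 31 - 1


def wrap_int32(value: int) -> int:
    """Reinterpret the low 32 bits of value as a signed two's-complement integer."""
    return struct.unpack("<i", struct.pack("<I", value & 0xFFFFFFFF))[0]


# The last second a signed 32-bit counter can represent.
print(EPOCH + timedelta(seconds=INT32_MAX))   # 2038-01-19 03:14:07+00:00

# One second later the counter wraps to the most negative value.
wrapped = wrap_int32(INT32_MAX + 1)
print(wrapped)                                # -2147483648
print(EPOCH + timedelta(seconds=wrapped))     # 1901-12-13 20:45:52+00:00
```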

How to Handle Data Conversions

If you are working in Excel, Python, or just trying to calculate data rates for a network, you need to be careful with the "b" and the "B."

- Small "b" = bits.

- Big "B" = Bytes.

Internet service providers love this trick. They sell you "1,000 Mbps" (Megabits). People see the 1,000 and think they can download a 1,000MB file in one second. Nope. You have to divide by 8. Your 1,000Mbps line is actually a 125MB/s line.

If you have a data packet that is 32 bits wide, and you’re trying to calculate how much storage it takes in a database, always remember the 4-byte rule.
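The divide-by-8 rule is easy to wrap in a helper. This is a minimal sketch with hypothetical function names. It uses decimal units (1 MB = 1,000,000 bytes) and ignores protocol overhead, so real downloads will be somewhat slower.

```python
BITS_PER_BYTE = 8


def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert a link speed in megabits per second to megabytes per second."""
    return mbps / BITS_PER_BYTE


def download_seconds(file_megabytes: float, link_mbps: float) -> float:
    """Best-case time to move a file over the link, ignoring overhead."""
    return file_megabytes / mbps_to_megabytes_per_second(link_mbps)


print(mbps_to_megabytes_per_second(1000))   # 125.0
print(download_seconds(1000, 1000))         # 8.0
```

So the 1,000MB file takes about 8 seconds on a 1,000 Mbps line, not 1.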

Actionable Steps for Technical Management

Understanding how 32 bits map to 4 bytes isn't just trivia; it changes how you buy or build tech.

Check your Legacy Apps

If you are running an old business server, check if the software is 32-bit. If it is, each process cannot address more than 4GB of RAM regardless of your hardware, and on many operating systems the practical limit is closer to 2GB or 3GB. You are literally wasting money on RAM sticks the software can't see.
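How you check depends on the software and operating system. As one narrow illustration, a Python program can report whether the interpreter running it is a 32-bit or 64-bit build. This sketch only tells you about that Python process, not about any other application on the server.

```python
import struct
import sys

# "P" is the struct code for a C pointer; its size reveals the address width.
pointer_bits = struct.calcsize("P") * 8
print(pointer_bits)             # 32 or 64, depending on the interpreter build

# sys.maxsize exceeds 2**32 only on 64-bit builds.
is_64_bit = sys.maxsize > 2 ** 32
print(is_64_bit)
```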

Audit Your IoT Devices

If you're developing hardware, don't automatically reach for 64-bit processors. A 32-bit ARM chip is often considerably cheaper and more power-efficient for tasks that only need 4-byte integers.

Validate Your Database Types

In SQL, using a BIGINT (8 bytes/64 bits) when an INT (4 bytes/32 bits) will suffice doubles the storage for that column, which adds up over millions of rows. If your ID column will never exceed 2,147,483,647 (about 2.1 billion), stick to the 4-byte 32-bit integer.
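A back-of-the-envelope estimate makes the difference concrete. This sketch counts only the raw bytes of the column itself. It ignores row headers, indexes, alignment and compression, all of which vary by database engine. The function names and the 100-million-row figure are hypothetical.

```python
INT_BYTES = 4       # 32-bit signed integer column
BIGINT_BYTES = 8    # 64-bit signed integer column


def column_bytes(rows: int, bytes_per_value: int) -> int:
    """Raw storage for one fixed-width column, excluding any engine overhead."""
    return rows * bytes_per_value


def fits_in_int32(value: int) -> bool:
    """True if value is within the signed 32-bit range."""
    return -2 ** 31 <= value <= 2 ** 31 - 1


rows = 100_000_000  # assumed table size for illustration
print(column_bytes(rows, INT_BYTES))      # 400000000
print(column_bytes(rows, BIGINT_BYTES))   # 800000000

print(fits_in_int32(2_147_483_647))       # True
print(fits_in_int32(2_147_483_648))       # False
```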

Mind the Network Throughput

When calculating throughput, always convert your bits to bytes immediately. A common source of surprise bottlenecks is a "budget" calculated in bytes for hardware that is capped in bits.

The relationship between 32 bits and 4 bytes runs through all of computer architecture. It defines the limits of our memory, the depth of our colors, and even the "end of time" for legacy servers. Four bytes might seem small, but in the right context, they are everything.