You've probably been there. Your app is flying during development, but the moment you hit production-level data, everything drags. It’s frustrating. Often, the culprit isn't a complex algorithm or a slow database. It’s a simple ratio you might've ignored in your computer science 101 class. We call it the hash table load factor.

Basically, it's the measure of how full your hash table is. If you treat it like a suitcase, the load factor tells you if you're neatly packed or if you're sitting on the lid trying to get the zipper to close. Most devs just use the default settings in their language of choice and hope for the best. That’s a mistake.

What Actually Is a Hash Table's Load Factor?

Think of a hash table as a row of lockers. When you want to store something, a hash function tells you exactly which locker number to use. Ideally, every item gets its own locker. But life isn't perfect. Sometimes two items want the same locker. That’s a collision.

Mathematically, the load factor is defined as:

$$\alpha = \frac{n}{k}$$

where $n$ is the number of entries stored in the table and $k$ is the total number of buckets (slots).

If you have 70 items in 100 slots, your load factor is 0.7. Simple, right? But the implications are massive. As $\alpha$ increases, collisions become more and more likely, and every collision adds work to a lookup. The expected cost climbs with $\alpha$, and in the worst case, when most keys land in the same few buckets, the $O(1)$ constant-time lookup we all love decays into $O(n)$ linear time. At that point your "fast" hash table acts like a slow, clunky linked list.
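To put rough numbers on how quickly lookups degrade, here are the classic textbook estimates (from Knuth's analysis, assuming every key hashes uniformly and independently). $C$ is the expected number of entries examined (chaining) or slots probed (linear probing) for a successful lookup ("hit") or a failed one ("miss"). These are idealized averages, not guarantees for any particular library:

$$C_{\text{hit}}^{\text{chain}} \approx 1 + \frac{\alpha}{2}, \qquad C_{\text{miss}}^{\text{chain}} \approx \alpha$$

$$C_{\text{hit}}^{\text{linear}} \approx \frac{1}{2}\left(1 + \frac{1}{1-\alpha}\right), \qquad C_{\text{miss}}^{\text{linear}} \approx \frac{1}{2}\left(1 + \frac{1}{(1-\alpha)^2}\right)$$

Plugging in numbers for linear probing, a miss costs about 2.5 probes at $\alpha = 0.5$, about 8.5 at $\alpha = 0.75$, and about 50.5 at $\alpha = 0.9$. Chaining degrades far more gently (a hit at $\alpha = 0.9$ still examines only about 1.45 entries), which is one reason open-addressed tables are usually kept sparser.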

Honestly, it's the difference between grabbing your keys off the hook and digging through a junk drawer to find them.

The Magic Number 0.75

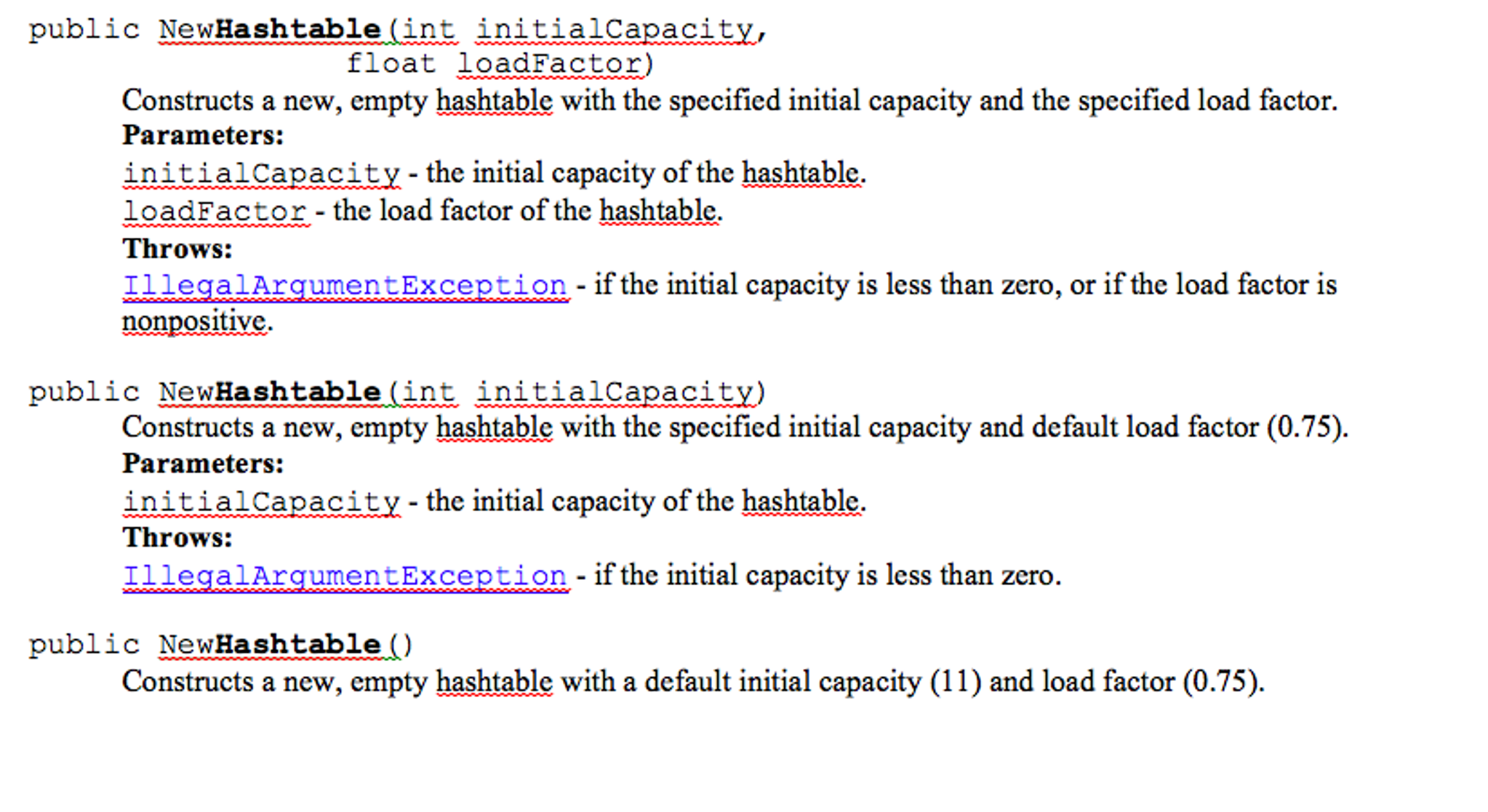

Java’s HashMap defaults to 0.75. CPython’s dict doesn't expose a load factor setting at all, but internally it resizes once it is about two-thirds full, keeping the table sparse to maintain speed. Why 0.75? It's not a random guess. It's a trade-off.

If you set the load factor too low, say 0.1, you’re wasting a ton of memory. You’d have 1,000 slots just to store 100 items. RAM isn't free. On the flip side, if you wait until 0.9 or 1.0 to resize, performance degrades because the "chains" of items in each bucket get longer, and in open-addressed tables the probe sequences get much longer still.

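Java's own documentation describes 0.75 as "a good tradeoff between time and space costs." The implementation notes in HashMap's source (Doug Lea, who contributed heavily to the Java collections framework, is one of its authors) supply the statistical intuition: with well-distributed hash codes and a 0.75 threshold, the number of entries per bucket roughly follows a Poisson distribution with a mean of about 0.5, so most buckets hold zero or one entry. That keeps the expected number of probes low while keeping memory overhead manageable.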
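To see what that Poisson model implies, the sketch below simply evaluates the distribution for a mean of 0.5. The helper name `poisson_pmf` and the constant `LAMBDA` are our own; the 0.5 mean is the idealized average described in Java's HashMap source notes, and real tables fluctuate around it as they resize.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """Probability that a bucket holds exactly k entries under a Poisson(lam) model."""
    return math.exp(-lam) * lam**k / math.factorial(k)


# Idealized mean bucket occupancy under the 0.75 resize threshold.
LAMBDA = 0.5

for k in range(5):
    print(f"{k} entries: {poisson_pmf(k, LAMBDA):.4f}")
```

Under this model about 61% of buckets are empty, about 30% hold exactly one entry, under 8% hold two, and fewer than 1 in 50 hold three or more, which is why a lookup rarely has to walk past the first entry.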

But here is the kicker: 0.75 isn't a law. It's a suggestion.

When the Defaults Fail You

If you're working at either extreme, whether that's a machine with memory to burn or an environment with tight constraints (think embedded systems, or high-frequency trading where every byte of L3 cache counts), the default load factor might be your enemy.

- High-Memory Environments: If you have terabytes of RAM and speed is the only thing that matters, drop that load factor. Setting it to 0.5 makes collisions much less frequent and keeps chains and probe sequences short.

- Memory-Constrained Systems: If you're running on a tiny chip, you might crank it up to 0.9. Just be ready for the CPU to work harder.

- The Resize Penalty: This is what kills people in real-time systems. When a hash table hits its load factor limit, it has to "rehash."

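Rehashing means the system allocates a new, larger array (usually double the size), recomputes each item's bucket for the new size, and moves every single item from the old array to the new one. Averaged over many inserts, this still works out to $O(1)$ per insertion, but the cost arrives in one lump: if your table has 10 million items, your program will "freeze" while all of them are moved. In a video game or a high-speed trading bot, even a short stall like that is a disaster.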
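To make the resize step concrete, here is a minimal separate-chaining map in Python. The class and its method names are hypothetical, chosen for illustration; it is not modeled on any specific library. It doubles its bucket array whenever the load factor passes `max_load` and counts how many entries the rehashes have to move.

```python
from typing import Any, Hashable


class ChainedHashMap:
    """Teaching sketch of a separate-chaining hash map that doubles on overflow."""

    def __init__(self, capacity: int = 16, max_load: float = 0.75) -> None:
        self.buckets: list[list[tuple[Hashable, Any]]] = [[] for _ in range(capacity)]
        self.max_load = max_load
        self.size = 0
        self.rehashed_entries = 0  # total entries moved across all resizes

    def load_factor(self) -> float:
        return self.size / len(self.buckets)

    @staticmethod
    def _index(key: Hashable, capacity: int) -> int:
        return hash(key) % capacity

    def put(self, key: Hashable, value: Any) -> None:
        bucket = self.buckets[self._index(key, len(self.buckets))]
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)  # overwrite in place; size unchanged
                return
        bucket.append((key, value))
        self.size += 1
        if self.load_factor() > self.max_load:
            self._resize(2 * len(self.buckets))

    def get(self, key: Hashable) -> Any:
        for k, v in self.buckets[self._index(key, len(self.buckets))]:
            if k == key:
                return v
        raise KeyError(key)

    def _resize(self, new_capacity: int) -> None:
        # Allocate the larger array, then recompute every entry's bucket,
        # because hash % new_capacity can differ from hash % old_capacity.
        new_buckets: list[list[tuple[Hashable, Any]]] = [[] for _ in range(new_capacity)]
        for bucket in self.buckets:
            for k, v in bucket:
                new_buckets[self._index(k, new_capacity)].append((k, v))
                self.rehashed_entries += 1
        self.buckets = new_buckets


m = ChainedHashMap()
for i in range(1000):
    m.put(i, i * i)
print(len(m.buckets), m.rehashed_entries)  # 2048 1531
```

Starting from 16 buckets, those 1,000 inserts trigger seven resizes that together move 1,531 entries, more than the map actually holds at the end. Each resize is a burst of work proportional to the current size, which is exactly the stall that real-time code notices.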

Real-World Collision Scenarios

Let's look at how different languages handle the mess when the hash table load factor gets too high.

- Chaining: This is what Java's HashMap does. Each bucket holds the head of a linked list (since Java 8, a bucket that grows too long can be converted into a balanced tree). If two things land in the same spot, they just hang out together.

- Open Addressing: CPython's dict uses this. There are no chains: if a slot is taken, the table tries other slots in a fixed probe sequence until it finds a free one. Having no linked nodes to chase is part of why Python dictionaries are fast, but it's also why they're sensitive to the load factor. Once an open-addressed table gets too full, finding an empty spot becomes an expensive scavenger hunt.
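Here is a small sketch of open addressing using linear probing (if a slot is taken, try the next one, wrapping around). CPython's dict uses a different, perturbation-based probe sequence, so treat this as an illustration of the general behavior, not of Python's implementation. The helper `average_probes` is hypothetical: it fills a table to a target load factor with random keys (standing in for a well-mixed hash) and reports how many slots an average insertion had to inspect.

```python
import random
from typing import Optional


def average_probes(capacity: int, load_factor: float, seed: int = 42) -> float:
    """Fill a linear-probing table to `load_factor` and return the
    mean number of slots inspected per insertion."""
    rng = random.Random(seed)
    n_keys = int(capacity * load_factor)
    keys = rng.sample(range(1 << 62), n_keys)  # distinct random keys
    slots: list[Optional[int]] = [None] * capacity
    total_probes = 0
    for key in keys:
        index = key % capacity
        probes = 1
        while slots[index] is not None:
            index = (index + 1) % capacity  # linear probing: step to the next slot
            probes += 1
        slots[index] = key
        total_probes += probes
    return total_probes / n_keys


for alpha in (0.5, 0.75, 0.9):
    knuth = 0.5 * (1 + 1 / (1 - alpha))  # Knuth's estimate for a successful search
    print(f"alpha={alpha}: measured {average_probes(10_000, alpha):.2f}, "
          f"estimate {knuth:.2f}")
```

The measured averages should land near Knuth's estimates of 1.5, 2.5, and 5.5 probes, and the jump between 0.75 and 0.9 is the scavenger hunt in action.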

I remember a project where we were processing genomic data. We used a standard hash map to count k-mers. Because we didn't pre-size the table, the map kept hitting its load factor and resizing. The process took 40 minutes. After we manually set the initial capacity and lowered the load factor to 0.6, it finished in under 12 minutes. That's the power of understanding what's happening under the hood.

Practical Steps to Optimize Your Tables

Don't just take the defaults. Use these strategies to make your code actually perform.

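Pre-size your tables. If you know you’re going to store about 1,000 items, don’t start with a default size of 16. If you use a load factor of 0.75, initialize your table with at least $\lceil 1000 / 0.75 \rceil = 1334$ slots, rounding up so all 1,000 items fit under the resize threshold. This avoids the chain of "stop-the-world" rehash events the table would otherwise go through as it grows from 16.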
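A minimal helper for that arithmetic, assuming a table that (like Java's HashMap) rounds its bucket count up to a power of two. The function names are illustrative, not from any library. In Java 19 and later, `HashMap.newHashMap(numMappings)` performs this sizing for you.

```python
import math


def required_capacity(expected_entries: int, max_load: float = 0.75) -> int:
    """Smallest slot count that holds expected_entries without exceeding max_load."""
    return math.ceil(expected_entries / max_load)


def round_up_to_power_of_two(n: int) -> int:
    """Next power of two >= n (needed by tables that index with a bit mask)."""
    return 1 if n <= 1 else 1 << (n - 1).bit_length()


slots = required_capacity(1000)            # 1334
buckets = round_up_to_power_of_two(slots)  # 2048
print(slots, buckets, buckets * 0.75)      # 1334 2048 1536.0
```

With 2,048 buckets the table only resizes after 1,536 entries, so 1,000 inserts never trigger a rehash.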

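Choose the right hash function. A bad hash function that clusters data in one corner of the table makes the load factor irrelevant. Even at 0.1 load, if everything hashes to the same three buckets, you’re in trouble. If distribution is your main worry, use a well-mixed non-cryptographic hash such as MurmurHash3. If you also need protection against hash flooding attacks, where an attacker deliberately submits colliding keys, use a keyed hash such as SipHash, which CPython uses for str and bytes.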
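The clustering problem is easy to reproduce. In this sketch (the helpers and the key set are hypothetical), 100 keys that share a stride of 64 go into 1,024 buckets, a load factor of about 0.1. Indexing by the raw key uses only 16 buckets, with chains up to 7 long; running each key through a well-mixed hash first spreads them across much more of the table. `hashlib.blake2b` is only a stand-in here, because MurmurHash3 and SipHash aren't exposed as standalone functions in Python's standard library.

```python
import hashlib
from collections import Counter


def bucket_stats(keys, index_fn, capacity):
    """Return (number of buckets used, longest chain) for keys placed by index_fn."""
    counts = Counter(index_fn(k, capacity) for k in keys)
    return len(counts), max(counts.values())


def identity_index(key: int, capacity: int) -> int:
    # Weak hash: use the key itself, so only its low bits pick the bucket.
    return key % capacity


def mixed_index(key: int, capacity: int) -> int:
    # Well-mixed stand-in hash: every input bit affects every output bit.
    digest = hashlib.blake2b(key.to_bytes(8, "little"), digest_size=8).digest()
    return int.from_bytes(digest, "little") % capacity


CAPACITY = 1024
keys = [i * 64 for i in range(100)]  # e.g. aligned addresses or strided IDs

print("identity:", bucket_stats(keys, identity_index, CAPACITY))  # (16, 7)
print("mixed:   ", bucket_stats(keys, mixed_index, CAPACITY))
```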

Profile, don't guess. Use tools like VisualVM for Java or tracemalloc for Python. Look for spikes in memory allocation and CPU usage that correlate with map insertions. If memory climbs in sudden steps that line up with bursts of insertions, you're probably watching the table resize, and pre-sizing is usually the fix.
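For a quick look without a full profiler, you can watch a CPython dict's footprint jump each time it resizes. This sketch uses `sys.getsizeof` instead of `tracemalloc` to keep it short, and `dict_resize_points` is a hypothetical helper. The exact byte counts, and the entry counts at which they jump, depend on your Python version, so treat the output as illustrative.

```python
import sys


def dict_resize_points(n_inserts: int) -> list[tuple[int, int]]:
    """Insert n_inserts keys into a dict and record (entries, bytes)
    every time sys.getsizeof reports a new footprint, i.e. a resize."""
    d: dict[int, int] = {}
    points = []
    last_size = sys.getsizeof(d)
    for i in range(n_inserts):
        d[i] = i
        size = sys.getsizeof(d)
        if size != last_size:
            points.append((len(d), size))
            last_size = size
    return points


for entries, nbytes in dict_resize_points(100_000):
    print(f"grew to {nbytes:>9,} bytes at {entries:>7,} entries")
```

The gaps between jumps keep widening, the signature of a table that grows by a multiplicative factor; every one of those lines is a rehash that pre-sizing would have avoided.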

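Consider the Load Factor's cousin: the Growth Factor. Most tables double in size ($2\times$) when they resize. Some use $1.5\times$ to be more memory-efficient, at the price of resizing more often. If you are extremely tight on space, C++ gives you some control: std::unordered_map lets you set max_load_factor() and call reserve() or rehash() to decide when and how far the table grows, although the growth policy itself is left to the implementation. Abseil's hash maps manage their load factor internally, so a truly custom growth factor usually means writing or adapting your own table.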
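A back-of-the-envelope model of that trade-off: the hypothetical helper below tracks only counts, not real keys. It inserts 100,000 items into a table that starts at 16 slots with a 0.75 threshold and compares doubling with 1.5x growth.

```python
def simulate_growth(n_items: int, growth: float,
                    initial_capacity: int = 16,
                    max_load: float = 0.75) -> tuple[int, int, int]:
    """Count resizes and entries moved while inserting n_items into a table
    that grows by `growth` whenever the next insert would exceed `max_load`.
    Returns (resizes, entries_moved, final_capacity)."""
    capacity = initial_capacity
    size = resizes = moved = 0
    for _ in range(n_items):
        while size + 1 > capacity * max_load:
            moved += size  # every existing entry is rehashed into the new array
            capacity = max(capacity + 1, int(capacity * growth))
            resizes += 1
        size += 1
    return resizes, moved, capacity


for g in (2.0, 1.5):
    resizes, moved, cap = simulate_growth(100_000, g)
    print(f"growth {g}x: {resizes} resizes, {moved:,} entries moved, "
          f"final capacity {cap:,}")
```

In this model, 1.5x growth ends with a smaller table (177,513 slots versus 262,144) but needs 23 resizes instead of 14. That is the memory-for-rehashing trade in miniature.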

The goal isn't to have a "perfect" table. The goal is to have one that doesn't surprise you when the data gets big. Start by checking the documentation for your specific language's implementation—you'll be surprised how much control you actually have.