You're standing in a crowded terminal at Tokyo's Haneda Airport. Someone is speaking to you rapidly in Japanese, and all you've got is your smartphone. You open the app, tap the mic, and pray that Google Translate's audio-to-English mode actually works this time. It's a scene played out thousands of times a day. We live in an era where universal translators aren't just sci-fi tropes from Star Trek; they're sitting in our pockets, usually for free. But if you've ever used one to translate a lecture, a business meeting, or a complex medical conversation, you know the results can be... well, messy. Honestly, it's kinda miraculous and frustrating all at once.

The tech behind turning sound waves into English text isn't just one piece of software. It's a multilayered sandwich of Automatic Speech Recognition (ASR) and Neural Machine Translation (NMT). When you ask Google to handle audio, it first has to "hear" the speech and turn it into text in the original language, and only then flip that text into English. Every step is a chance for a glitch, and a mistake in the first step carries straight into the second. If the speaker has a thick accent or there's a bus screeching in the background, the whole thing can fall apart.
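To make the two-stage idea concrete, here is a minimal Python sketch of a cascaded pipeline. Everything in it is hypothetical: `toy_asr` and `toy_nmt` are lookup tables standing in for real models, the confidence numbers are invented, and multiplying them assumes the two stages fail independently. It is not how Google's system is implemented; it only shows how a mishearing in the first stage gets faithfully translated by the second.

```python
from typing import Callable, Tuple

# A stage takes a string and returns (output_text, confidence between 0 and 1).
Stage = Callable[[str], Tuple[str, float]]


def run_cascade(audio_id: str, asr: Stage, nmt: Stage) -> Tuple[str, float]:
    """Run speech recognition first, then translate whatever text it produced."""
    source_text, asr_conf = asr(audio_id)
    english_text, nmt_conf = nmt(source_text)
    # Assumption: stage errors are independent, so confidences multiply.
    return english_text, asr_conf * nmt_conf


def toy_asr(audio_id: str) -> Tuple[str, float]:
    """Hypothetical recognizer: the noisy clip is misheard ('ist' vs 'isst')."""
    transcripts = {
        "clean": ("wo ist der bahnhof", 0.95),
        "noisy": ("wo isst der bahnhof", 0.60),
    }
    return transcripts[audio_id]


def toy_nmt(text: str) -> Tuple[str, float]:
    """Hypothetical translator: it translates exactly what it is given."""
    table = {
        "wo ist der bahnhof": ("where is the train station", 0.90),
        "wo isst der bahnhof": ("where does the train station eat", 0.90),
    }
    return table[text]


for clip in ("clean", "noisy"):
    english, confidence = run_cascade(clip, toy_asr, toy_nmt)
    print(clip, "->", english, round(confidence, 3))
```

The translator in the noisy case did nothing wrong. It was handed the wrong sentence, which is why fixing the transcription matters more than anything downstream.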

The Reality of Google Translate Audio to English Accuracy

Let’s be real. Most people think Google Translate is a dictionary. It’s not. It’s a prediction engine. When you feed it audio, it’s basically guessing what the next most likely word is based on billions of lines of previously translated text.

Back in 2016, Google Translate switched to a Neural Machine Translation system. Before that, it translated phrase by phrase, which is why older translations sounded like a broken robot. Now, it looks at the whole sentence. This is huge for English because our language is obsessed with word order. In a language like German, the verb might hide at the very end of a long sentence, so the NMT system needs that verb before it can produce a sensible English sentence.

But here is where it gets tricky. Google's AI is trained heavily on formal documents: think UN transcripts and European Parliament records. It's great at "The delegate from France proposes a motion." It's significantly worse at "Yo, let's grab some grub at that spot around the corner." Slang is the kryptonite of audio-to-English translation. If you're trying to translate a casual conversation, the AI often defaults to the most literal, formal version of a word, which can completely change the vibe of what's being said.

The Problem with "Transcribe" Mode

If you’re using the "Transcribe" feature for long-form audio, you’ve probably noticed it gets "tired." It doesn't actually get tired, obviously, but the context window—the amount of previous text the AI can "remember" to inform the current translation—is limited.
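A rough way to picture a limited context window is a fixed-size buffer of recent words. The sketch below is an illustration only: the function name `with_rolling_context`, the six-word limit, and the sample sentences are all made up, and real systems measure context in tokens and handle it very differently.

```python
from collections import deque
from typing import Iterable, Iterator, List, Tuple


def with_rolling_context(
    sentences: Iterable[str], max_words: int
) -> Iterator[Tuple[str, List[str]]]:
    """Pair each sentence with the most recent words still 'remembered'."""
    window = deque(maxlen=max_words)  # old words fall off the left side
    for sentence in sentences:
        yield sentence, list(window)
        window.extend(sentence.split())


talk = [
    "Dr. Sato will speak first",
    "she studies marine biology",
    "her talk starts at noon",
]

for sentence, context in with_rolling_context(talk, max_words=6):
    print(repr(sentence), "| context:", context)
```

By the third sentence the name "Sato" has already dropped out of the buffer, so nothing in the remembered context says who "her" refers to. That is the "tired" effect in miniature.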

- Background noise is the biggest killer. A humming air conditioner can turn "I'd like a seat" into "I like the meat."

- Multiple speakers confuse the engine. The standard mobile app isn't very good at "diarization" (the ability to distinguish between different voices).

- Homophones are a nightmare. In English, "their," "there," and "they're" sound identical, and every source language has its own versions. The AI has to use context clues to pick the right one. If the sentence is short, it's a coin flip.
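The homophone problem can be shown with a toy scorer that counts how many nearby words hint at each spelling. This is a deliberately crude sketch: the `CUES` table and `pick_homophone` are invented for illustration, and real recognizers use learned language models rather than hand-written word lists.

```python
from typing import Dict, List, Set

# Hypothetical cue words that tend to appear near each spelling.
CUES: Dict[str, Set[str]] = {
    "their": {"car", "house", "luggage", "tickets"},
    "there": {"over", "is", "are", "go"},
    "they're": {"going", "coming", "late", "happy"},
}


def pick_homophone(context_words: List[str]) -> List[str]:
    """Return every spelling tied for the best score; one result means a clear win."""
    seen = set(context_words)
    scores = {word: len(cues & seen) for word, cues in CUES.items()}
    best = max(scores.values())
    return sorted(word for word, score in scores.items() if score == best)


print(pick_homophone(["luggage", "and", "tickets"]))  # enough context: one winner
print(pick_homophone(["running", "late"]))            # enough context: one winner
print(pick_homophone([]))                             # no context: three-way tie
```

With no surrounding words, all three spellings tie and the choice is effectively random, which is why very short utterances are the riskiest.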

How the Pros Actually Use Google Translate Audio

If you think professional interpreters are worried about their jobs, you're half right. For basic transactional stuff—buying a train ticket or asking where the bathroom is—the app is king. But for anything involving legalities or deep emotion, humans are still the gold standard.

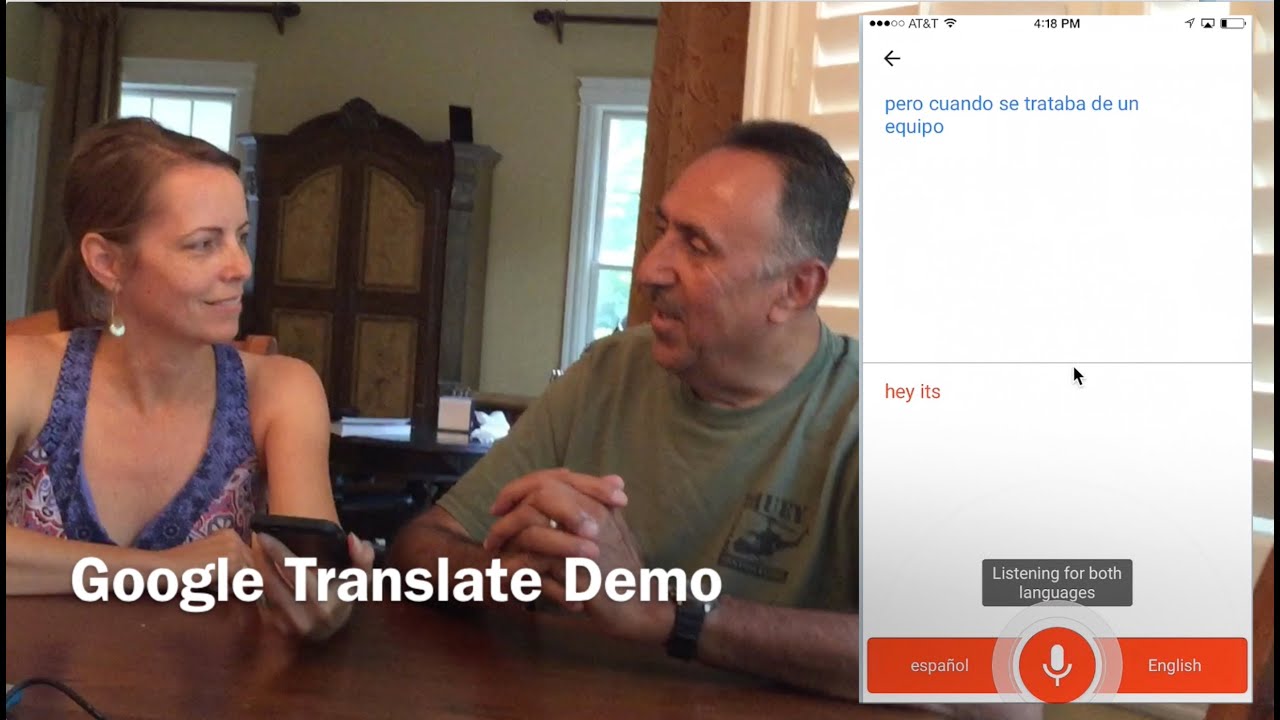

I've seen travelers use the "Conversation" mode, which is the split-screen interface. It's designed to be a bridge. You speak, it translates. They speak, it translates. The trick is brevity. If you give the app a three-minute monologue about your life story, it will choke. If you speak in five-to-seven-word bursts, the accuracy of the English output goes up noticeably.
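If you want to rehearse the short-burst habit, a few lines of Python can break a prepared sentence into chunks. This is only a practice aid under simple assumptions: `split_into_bursts` is a made-up helper, it breaks at punctuation first and then caps each chunk at seven words, and it knows nothing about grammar.

```python
import re
from typing import List


def split_into_bursts(text: str, max_words: int = 7) -> List[str]:
    """Split text at punctuation, then cap each piece at max_words words."""
    bursts: List[str] = []
    for clause in re.split(r"[.,;!?]+", text):
        words = clause.split()
        for start in range(0, len(words), max_words):
            bursts.append(" ".join(words[start:start + max_words]))
    return bursts


request = (
    "I need a ticket to Kyoto, and I would like a window seat "
    "on the left side of the train."
)
for burst in split_into_bursts(request):
    print(burst)
```

Reading each printed line as its own utterance, with a pause in between, gives the app a complete thought to translate before the next one arrives.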

There's also the "Live Translate" feature on Pixel phones. This is a bit different because it uses on-device processing. It’s faster and more private, but because it’s not tapping into the massive server-side power of Google’s main data centers, it can sometimes be slightly less "smart" than the cloud-based version. It’s a trade-off. Speed versus depth.

Regional Dialects: The Unspoken Barrier

Spanish isn't just Spanish. The Spanish spoken in Madrid is a different beast than the Spanish spoken in Mexico City or Buenos Aires. Google is decent at detecting these broad differences, but it struggles with rural dialects. If you're in a small village in the mountains of Peru, the local speech might differ enough in pronunciation and vocabulary that the audio-to-English engine produces total gibberish.

The same goes for English outputs. Sometimes the English it gives you is technically correct but sounds "uncanny valley." It might use a British idiom when an American one was expected, or vice versa. It’s a reminder that language is a living, breathing thing, not just a set of rules for an algorithm to solve.

Technical Workarounds for Better Audio Results

If you're serious about getting a clean translation from a recording or a live speaker, you can't just hold your phone up in the air and hope for the best. You need to be deliberate.

First, use an external mic if you can. Even a cheap pair of wired earbuds with an inline mic will perform better than the pinhole microphone at the bottom of your phone, which is mostly designed to catch your voice from three inches away. If you're trying to capture audio from a speaker across a room, the "noise floor" will be too high. The AI will try to translate the echoes instead of the words.
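The "noise floor" point is really about signal-to-noise ratio (SNR), which is usually quoted in decibels as 20 times the base-10 logarithm of the ratio between the speech level and the noise level. The sketch below uses made-up sample values to show why distance hurts; it is a worked calculation, not a measurement of any real phone.

```python
import math
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of a block of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def snr_db(speech: Sequence[float], noise: Sequence[float]) -> float:
    """Signal-to-noise ratio in decibels: 20 * log10(rms_speech / rms_noise)."""
    return 20 * math.log10(rms(speech) / rms(noise))


# Invented amplitudes: same room noise, speaker close to the mic vs. across the room.
room_noise = [0.05, -0.05, 0.05, -0.05]
speech_close = [0.5, -0.5, 0.5, -0.5]
speech_far = [0.1, -0.1, 0.1, -0.1]

print(round(snr_db(speech_close, room_noise), 1))  # 20.0
print(round(snr_db(speech_far, room_noise), 1))    # 6.0
```

The room noise is identical in both cases. Only the speech got quieter, and the ratio collapsed with it, which is why moving the microphone closer usually helps more than any app setting.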

Second, check your settings. Most people don't realize you can download languages for offline use. While the online version is technically more powerful, the offline models are surprisingly robust now. If you're in an area with spotty 4G, audio translation will be laggy and prone to cutting out. Downloading the English and source language packs ensures the processing happens locally without the "stutter" of a bad connection.

- Speak like a news anchor. Enunciate. Avoid "um" and "uh."

- Eliminate idioms. Don't say "it's raining cats and dogs." Say "it is raining heavily." The AI will thank you.

- Watch the screen. Google Translate shows you the text it thinks it heard before it translates it. If the transcription is wrong, the translation will be garbage. Correct the transcription first if you're in a position to do so.
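The first two tips above amount to cleaning up your own script before you say it. As a small sketch of that idea, the snippet below strips filler words and swaps idioms for literal phrasing in a prepared line. The `IDIOMS` table and `plain_speech` helper are hypothetical, and the one idiom listed is the example from the tip itself.

```python
import re

# Filler words to drop, with an optional trailing comma and whitespace.
FILLERS = re.compile(r"\b(?:um+|uh+)\b,?\s*", re.IGNORECASE)

# Hypothetical idiom table; extend it with your own phrases.
IDIOMS = {
    "it's raining cats and dogs": "it is raining heavily",
}


def plain_speech(text: str) -> str:
    """Remove fillers and replace known idioms with literal wording."""
    cleaned = FILLERS.sub("", text)
    for idiom, literal in IDIOMS.items():
        cleaned = re.sub(re.escape(idiom), literal, cleaned, flags=re.IGNORECASE)
    return " ".join(cleaned.split())  # collapse leftover double spaces


print(plain_speech("Um, it's raining cats and dogs uh today"))
```

Running a rehearsed script through something like this before a meeting is a cheap way to spot phrases that are likely to trip up the translation.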

The Future of Live Translation

We're moving toward a world of "seamless" translation. Google's "Interpreter Mode" on Nest devices and the integration with Assistant are getting closer to real-time. The aim is latency under about 500 milliseconds, which is fast enough to hold a nearly natural conversation.

But we aren't there yet. The biggest hurdle isn't vocabulary; it's pragmatics. Pragmatics is the study of how context contributes to meaning. If I say "Thanks a lot" with a sarcastic tone, a human knows I'm annoyed. Google Translate might just see the words and translate them as a genuine expression of gratitude. Until AI can reliably "hear" sarcasm, irony, and cultural subtext, audio-to-English translation will remain a tool for information, not necessarily for connection.

It's also worth noting the privacy aspect. When you use the cloud-based version of the tool, your audio snippets are often processed on Google servers. While Google has strict data handling policies, if you're translating sensitive corporate secrets or private medical data, you might want to look into "On-Device" translation options or enterprise-grade tools that offer end-to-end encryption and don't use your data to train their models.

Practical Steps for High-Accuracy Translation

Stop treating the app like a magic wand and start treating it like a specialized tool. If you need to translate audio to English for a project, a trip, or a meeting, follow these specific steps to ensure you don't end up with a nonsensical mess.

Prepare your environment.

If you are recording a speaker, get as close to the sound source as possible. Use a dedicated recording app first, then play that audio back into Google Translate in a quiet room. This "double-hop" method often works better than trying to translate live in a noisy hall because you can control the playback volume and clarity.

Verify with "Reverse Translation."

This is a classic pro tip. Take the English text that Google gave you and translate it back into the original language. If the meaning stays the same, you’re probably safe. If the reverse translation comes back as something completely different, the original translation was likely flawed.
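The reverse-translation check can be sketched as a round trip with a similarity score at the end. The translators here are hypothetical lookup tables passed in as plain functions, not a real API, and character-level similarity from Python's `difflib` is a crude proxy: a rewording with the same meaning will score below 1.0, and a literal mistranslation can still round-trip cleanly.

```python
from difflib import SequenceMatcher
from typing import Callable

Translator = Callable[[str], str]


def round_trip_score(source: str, to_english: Translator, from_english: Translator) -> float:
    """Translate to English and back, then compare with the original (0.0 to 1.0)."""
    back = from_english(to_english(source))
    return SequenceMatcher(None, source.lower(), back.lower()).ratio()


# Hypothetical translators: the second forward entry mishears 'aplazó' (postponed).
FORWARD = {
    "dónde está la estación": "where is the station",
    "la reunión se aplazó": "the meeting was applauded",
}
BACKWARD = {
    "where is the station": "dónde está la estación",
    "the meeting was applauded": "la reunión fue aplaudida",
}

good = round_trip_score("dónde está la estación", FORWARD.__getitem__, BACKWARD.__getitem__)
bad = round_trip_score("la reunión se aplazó", FORWARD.__getitem__, BACKWARD.__getitem__)
print(good, bad)
```

A perfect score does not prove the English is right, but a noticeably lower score on one sentence is a good signal to re-record it or ask a human.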

Use the "History" feature.

Don't let a good translation vanish. If you get a complex sentence right, star it in your history. You can export these later into a Google Sheet or a text file. This is incredibly helpful for students or researchers who are using audio translation for fieldwork.

Leverage Google Lens for context.

Sometimes the audio isn't enough. If you’re at a conference, use Google Lens to snap a photo of the PowerPoint slides while the audio is running. Having the visual text and the audio translation side-by-side will help you fill in the gaps where the audio engine might have tripped over technical jargon.

Check for app updates.

Google updates its models constantly. Make sure your app is updated before a big trip. New transcription engines that improve how the software handles long-form speech versus short commands are rolled out regularly. Using a version of the app from six months ago may mean you're missing out on real gains in NMT accuracy.