You've probably tried it. You type something simple like "a cat in a space suit" into the chat box, hit enter, and wait. What comes back is... fine. It’s a cat. It’s in a suit. But it looks like every other AI image clogging up your social feed. It’s plastic. It’s a bit soulless. The truth is that getting a high-quality Gemini prompt for image generation right isn't about being a "prompt engineer" with a fake degree; it's about talking to the model like it’s a director who’s had too much caffeine and needs specific instructions to stay on track.

Google’s Imagen 3 model, which powers the generation inside Gemini, is incredibly capable. It understands nuance better than the old versions. But if you give it a lazy prompt, you get a lazy result.

The "Vibe" Problem in Gemini Image Generation

Most people treat AI like a search engine. They use keywords. "Sunset, beach, 4k, realistic." Stop doing that. Gemini is a Large Language Model (LLM) first. It wants prose. It wants a story. When you're crafting a Gemini prompt for image generation, you need to describe the mood, not just the objects.

Think about lighting. Is it "golden hour" or is it "the harsh, flickering fluorescent hum of a 1980s office building"? Those two descriptions change the entire DNA of the image. Gemini thrives on texture. Tell it about the grit in the sidewalk or the way the silk fabric catches the light. If you don't specify these things, the AI defaults to its "average," which is that clean, sterile look we’re all tired of seeing. Honestly, the best images come from prompts that sound like they were ripped out of a gothic novel or a gritty screenplay.

Stop Using "Photorealistic"

It’s a trap. Using the word "photorealistic" is one of the worst things you can do for a Gemini prompt for image generation. Why? Because the model already knows it’s supposed to make an image. In practice, that word often seems to push results toward a recognizable "AI-style" look, where skin resembles polished marble and hair looks like plastic threads.

Instead, talk about the camera. You don't need to be a pro photographer, but knowing a few basics helps.

- Aperture: Mention a "shallow depth of field" if you want that blurry background (bokeh) that makes portraits pop.

- Film Stock: Ask for "grainy 35mm film" or "Polaroid aesthetics" to get rid of the digital sheen.

- Lighting Gear: Use terms like "rim lighting," "cinematic backlighting," or "softbox diffusion."

If you tell Gemini to "photograph this on a Fujifilm X-T4 with a 35mm lens," it interprets the request through the lens of millions of real photos taken with that gear. It’s a shortcut to authenticity.

Dealing with the "Safety" Refusals

We have to talk about the elephant in the room. Google is terrified of a PR nightmare. Because of this, Gemini can be a bit... sensitive. You might find your Gemini prompt for image generation blocked because it thinks you're trying to create a celebrity or something "harmful."

Sometimes it triggers a refusal for no clear reason. If you’re trying to generate a historical battle and it says no, try shifting the focus to the architecture or the landscape of the era rather than the conflict itself. Avoid names of real people entirely. Instead of asking for a specific actor, describe their features: "A middle-aged man with salt-and-pepper hair, deep-set eyes, and a weathered face reflecting years of sea travel." This usually bypasses the "no celebrities" hard-stop while still getting you the look you want.

Composition and the Rule of Thirds

A common mistake is putting the subject right in the middle. It’s boring. It looks like a passport photo. To make your Gemini prompt for image generation stand out, dictate the composition. Use phrases like:

- "Wide-angle shot from a low perspective looking up at a giant."

- "Close-up macro shot focusing only on the iris of the eye."

- "Bird's eye view of a sprawling neon city at night."

- "Dutch angle to create a sense of unease and disorientation."

By moving the "camera," you force the AI to calculate shadows and perspectives it wouldn't normally use. This is how you get those "how did they do that?" images.
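If you want to try several camera positions on the same subject, one option is to build the prompt variants in a few lines of Python and paste each one into Gemini. This is only a sketch of the idea: the helper name `composition_variants` and the `FRAMINGS` list are made up for illustration, and nothing here talks to Gemini itself.

```python
# Hypothetical helper: pairs one subject with several camera framings.
# It only builds prompt text; it does not call Gemini or any other API.
FRAMINGS = [
    "Wide-angle shot from a low perspective looking up at",
    "Close-up macro shot focusing only on",
    "Bird's eye view of",
    "Dutch angle view of",
]


def composition_variants(subject, framings=FRAMINGS):
    """Return one prompt per framing, each ending with a period."""
    return [f"{framing} {subject}." for framing in framings]


# Print every variant so each can be pasted into the chat box in turn.
for prompt in composition_variants("a lighthouse keeper on a storm-battered pier"):
    print(prompt)
```

Running the same subject through every framing makes it easier to see which "camera" move actually changes the image and which ones the model ignores.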

Structure Matters (But Not the Way You Think)

You’ve likely seen those massive prompts that are 500 words long. They don't always work better. Gemini can read a long prompt, but in practice the details at the beginning tend to get the most attention, and whatever you bury at the end is the first thing to get lost.

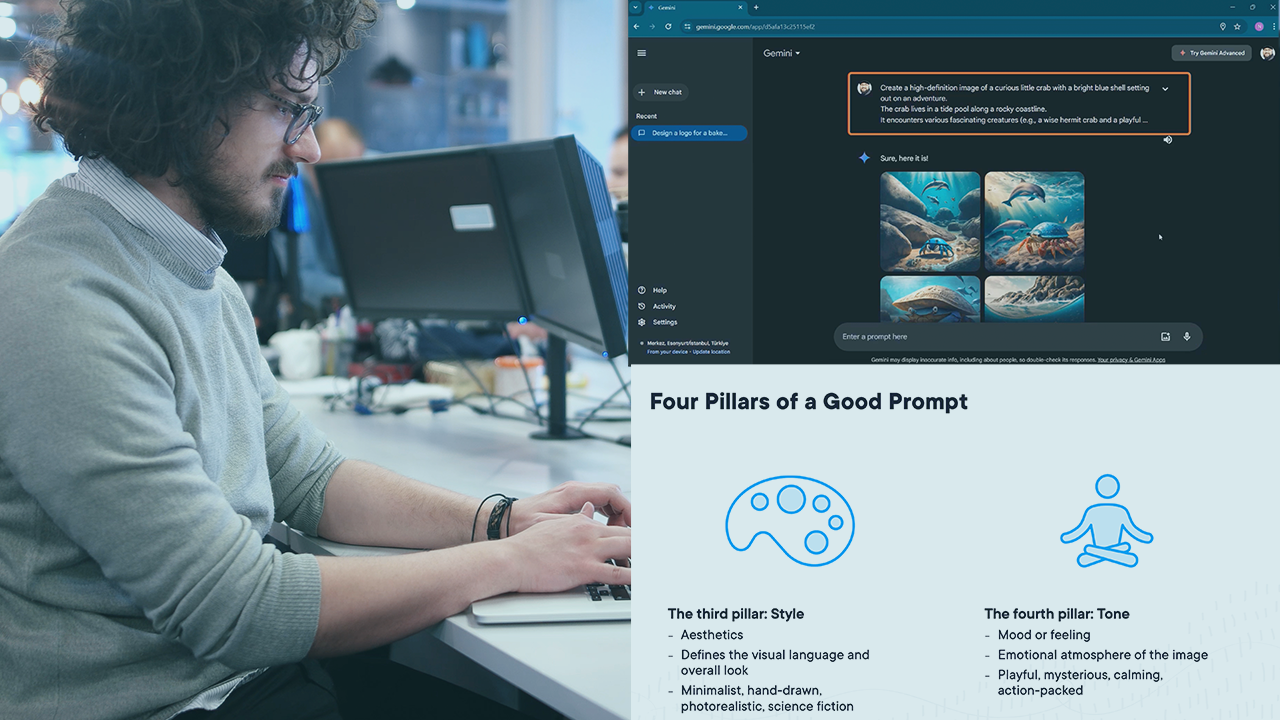

If you want a dragon in a library, but you spend the first four sentences describing the dust motes and the type of wood the shelves are made of, you might end up with a very detailed library and a tiny, weird-looking dragon. Lead with the subject. Subject first, environment second, style third, lighting last. Here is a real-world example of a "bad" vs "good" prompt:

Bad: A futuristic city with flying cars, high quality, 8k.

Good: A street-level view of a rain-slicked cyberpunk alleyway. Neon signs in teal and magenta reflect in deep puddles. In the background, a rusted flying vehicle is parked lopsidedly near a noodle stand. The atmosphere is heavy with fog and steam. Shot on 35mm film with heavy grain.

See the difference? The second one gives the AI a "mood" to aim for. It’s not just a city; it’s a specific kind of city.
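If you reuse the same ordering a lot, the "subject first, environment second, style third, lighting last" habit can be captured in a tiny template. The sketch below is one possible way to do that; the `ImagePrompt` class and its field names are assumptions for illustration, not anything Gemini requires.

```python
from dataclasses import dataclass


@dataclass
class ImagePrompt:
    """Hypothetical template that keeps prompt parts in a fixed order."""
    subject: str
    environment: str = ""
    style: str = ""
    lighting: str = ""

    def render(self):
        # Order matters here: subject, then environment, style, lighting.
        parts = [self.subject, self.environment, self.style, self.lighting]
        # Skip empty parts and make sure each one ends with a single period.
        sentences = [p.strip().rstrip(".") + "." for p in parts if p.strip()]
        return " ".join(sentences)


prompt = ImagePrompt(
    subject="A copper-scaled dragon curled around a reading desk",
    environment="An old library with dust motes drifting between oak shelves",
    style="Shot on 35mm film with heavy grain",
    lighting="Warm candlelight with deep shadows",
)
print(prompt.render())
```

The point of the template is only to stop the environment description from creeping ahead of the subject; the wording inside each field still has to carry the mood.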

The Secret of Negative Prompting (The Gemini Way)

Gemini doesn't have a dedicated "negative prompt" box like Stable Diffusion does. You can't just type "--no clouds." However, you can bake it into your natural language. You can say, "Create a minimalist room with absolutely no furniture or clutter, just bare white walls and a single window." Or, "A portrait of a woman where the background is completely dark and void of any detail."

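Basically, you have to tell the AI what not to do by describing the absence of those things. It sounds counterintuitive, but it tends to work because Gemini reads the whole sentence as language instead of matching isolated keywords, so "no furniture" is understood as an instruction and not just as the word "furniture."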
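As a rough illustration of baking exclusions into natural language, here is a small sketch that turns a list of unwanted elements into a plain sentence and appends it to a prompt. The function name `describe_absence` and the exact phrasing are assumptions; Gemini has no special syntax for this, so treat the wording as something to experiment with.

```python
def describe_absence(base_prompt, unwanted):
    """Append a natural-language sentence listing what should be absent.

    Hypothetical helper: it only formats text and does not call Gemini.
    """
    if not unwanted:
        return base_prompt
    if len(unwanted) == 1:
        listed = unwanted[0]
    else:
        # Join as "a, b or c" so the sentence reads naturally.
        listed = ", ".join(unwanted[:-1]) + " or " + unwanted[-1]
    return f"{base_prompt.rstrip('.')}. The scene contains no {listed}."


print(describe_absence(
    "A minimalist room with bare white walls and a single window",
    ["furniture", "clutter", "wall art"],
))
```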

Why 2026 is the Year of Iteration

We're past the "wow" phase of AI art. Now, it's about control. Google is constantly updating the weights behind Imagen. What worked last month might feel different today. The best way to use a Gemini prompt for image generation right now is to treat the first result as a draft.

If the image is almost perfect but the colors are too bright, don't start over. Type: "Keep the same layout, but mute the colors to a sepia tone and make the lighting much darker." Gemini is getting better at "image-to-image" consistency within the chat interface. Use that to your advantage.
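If you find yourself typing the same kind of follow-up over and over, the "keep this, change that" pattern can be sketched as a small formatter. The name `refinement_message` is hypothetical, and the output is just text to paste into the same chat as the original image.

```python
def refinement_message(keep, changes):
    """Build a follow-up message: what to preserve, then what to change.

    Hypothetical helper for illustration; it only formats a string.
    """
    if not keep or not changes:
        raise ValueError("Provide at least one thing to keep and one change.")
    keep_clause = "Keep the same " + " and ".join(keep)
    change_clause = " and ".join(changes)
    return f"{keep_clause}, but {change_clause}."


print(refinement_message(
    ["layout"],
    ["mute the colors to a sepia tone", "make the lighting much darker"],
))
```

Naming what should stay the same before listing the changes mirrors the example above and gives the model less room to reinvent the whole scene.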

Actionable Steps for Better Results

- Describe the Texture: Use words like "oxidized metal," "porous stone," "damp wool," or "iridescent scales."

- Set the Time: "3:00 AM blue light" looks vastly different from "high noon desert sun."

- Reference Art Movements: Instead of "cool painting," try "in the style of 1920s Art Deco" or "reminiscent of Caravaggio’s chiaroscuro."

- Use Extreme Perspectives: Ask for an "extreme close-up" or an "ultra-wide panoramic."

- Humanize the Subject: If drawing a person, give them an emotion. "A tired chef leaning against a brick wall" is a better prompt than "a chef."

Experiment with adding one weird, unrelated detail. Tell Gemini there’s a "single red balloon stuck in a tree" in your gritty war scene. These "anchor points" often force the AI to render the rest of the scene with more intentionality because it has to figure out how that weird object fits into the lighting and physics of the world.

Stop settling for the first thing the "Generate" button gives you. Twist the prompt. Change the lens. Make it weird. That's where the real art happens.