You’re staring at a screen. Maybe it’s a calculus problem involving a rotating solid, or perhaps it’s just a tricky percentage increase that doesn’t quite make sense in your head. You do what everyone does. You type the problem into a search bar. Finding reliable answers for math questions has become a digital reflex, but honestly, the results are getting weirder.

Google’s snippets might give you a direct number, but where did it come from? Photomath gives you a step-by-step, but does it explain why it chose the substitution method over elimination? We’ve reached this bizarre point where we have all the answers and none of the understanding. It’s frustrating. It's also why so many students—and engineers, for that matter—end up with "correct" results that are fundamentally broken when applied to the real world.

The Problem With Instant Answers

We have to talk about Large Language Models (LLMs) like GPT-4o and Claude 3.5. They are incredible at poetry. They are okay at coding. They are, quite frequently, terrible at arithmetic. That's because these models predict the next "token" (a word or a fragment of a word) based on probability, not by carrying out a calculation. If you ask an AI for answers for math questions involving large prime numbers or complex multi-step word problems, it might hallucinate a digit simply because that digit looks like it belongs there.
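When a chatbot says a big number is prime, you don't have to take its word for it. A few lines of code can settle the question exactly, because Python integers never lose digits. The sketch below uses plain trial division. That is our choice for illustration, not the only method: it is slow for truly enormous numbers but simple enough to check by eye. `is_prime` is a hypothetical helper name.

```python
import math

def is_prime(n: int) -> bool:
    """Exact primality test by trial division (practical for n up to ~10**12)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    # Only odd divisors up to the integer square root need checking
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True

# 2**31 - 1 is a known (Mersenne) prime; 2**31 + 1 is divisible by 3
print(is_prime(2**31 - 1))  # True
print(is_prime(2**31 + 1))  # False
```

Nothing here is predicted. Every divisor is actually tested, so the answer is either provably right or the code has a bug you can find.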

In 2024, researchers described a "reversal curse" in even top-tier models: if a model was trained on the fact that $A$ is $B$, it doesn't always grasp that $B$ is $A$ without specific training. The study tested factual statements rather than equations, but that kind of one-way recall is exactly what math punishes. This is a huge deal. If you're relying on a chatbot to help you study, you're basically asking a very well-read librarian to perform surgery. They might have read the textbook, but they don't have the "hands-on" logical framework to notice when a decimal point is in the wrong place.

How Search Engines Actually Index Math

When you search for answers for math questions on Google, you aren't just searching for text. You're often searching pages whose equations are written in LaTeX, the typesetting language used for math.

Many high-ranking math sites, such as Math Stack Exchange, write their equations in LaTeX. If you want better search results, it helps to search like a mathematician. Instead of typing "how to find the area of a circle," typing "Area = $\pi r^2$ derivation" tends to lead you to lecture notes and academic material rather than SEO-optimized "homework helper" sites that are often riddled with ads and half-baked explanations.


WolframAlpha remains the gold standard here. Unlike Google, Wolfram is a computational knowledge engine. It doesn't "search" the web for your answer; it computes it using a massive internal library of algorithms and curated data. It’s the difference between asking someone what the weather is and looking at a thermometer yourself.

The "Check My Work" Fallacy

Everyone wants the shortcut. I get it. But there’s a psychological trap called the "fluency heuristic." When you see answers for math questions laid out in a clean, digital font with a green checkmark next to them, your brain thinks, "Oh, I totally get that."

You don't.

Usually, you've just recognized the pattern of the solution, which is not the same as being able to replicate it. Real learning happens in the "stuck" phase. If you bypass the struggle by jumping straight to a solution app, you're essentially watching someone else lift weights at the gym and wondering why your own muscles aren't growing.

Common Pitfalls in Digital Math Help

- Rounding Errors: Many automated solvers round intermediate steps, leading to a final answer that’s slightly off. In engineering or chemistry, that's a disaster.

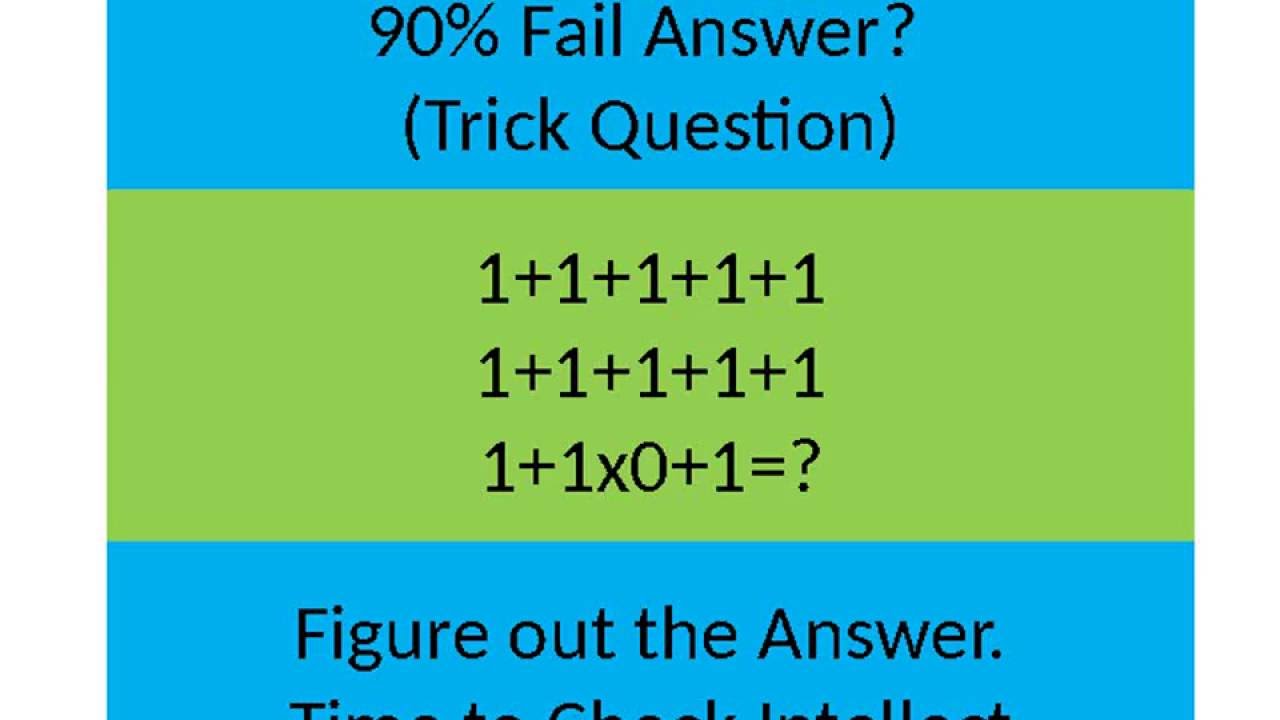

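- Order of Operations: Believe it or not, some basic calculators still ignore PEMDAS/BODMAS. They apply each operation the moment you press the next key, so 2 + 3 × 4 comes out as 20 instead of 14. Complex fractions, where the whole numerator and denominator need implied parentheses, make the problem worse.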

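- Assumed Units: If you don't specify "radians" or "degrees," an automated solver has to assume one. Programming languages and many solvers default to radians, and a handheld calculator silently uses whatever mode it was last left in, which may not be what your problem needs.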
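All three pitfalls are easy to reproduce yourself. The Python sketch below is illustrative only: `future_value`, `immediate_execution`, and `with_precedence` are hypothetical helpers written for this example, the scenario of $1,000 at 5% compounded monthly for 30 years is made up, and `immediate_execution` models the general behavior of a simple four-function calculator rather than any specific product.

```python
import math
import operator

# Pitfall 1: rounding an intermediate step
def future_value(principal, annual_rate, years, rate_digits=None):
    """Monthly compound growth; optionally round the monthly rate first."""
    monthly_rate = annual_rate / 12
    if rate_digits is not None:
        # Simulates a solver that rounds an intermediate result
        monthly_rate = round(monthly_rate, rate_digits)
    return principal * (1 + monthly_rate) ** (12 * years)

exact = future_value(1000, 0.05, 30)
rounded = future_value(1000, 0.05, 30, rate_digits=4)

# Pitfall 2: order of operations
OPS = {"+": operator.add, "-": operator.sub,
       "*": operator.mul, "/": operator.truediv}

def immediate_execution(tokens):
    """Apply each operator as soon as its right operand arrives."""
    result = tokens[0]
    for op, value in zip(tokens[1::2], tokens[2::2]):
        result = OPS[op](result, value)
    return result

def with_precedence(tokens):
    """Do * and / first, then + and -, left to right within each level."""
    terms, add_ops = [tokens[0]], []
    for op, value in zip(tokens[1::2], tokens[2::2]):
        if op in ("*", "/"):
            # Fold multiplication/division into the current term
            terms[-1] = OPS[op](terms[-1], value)
        else:
            add_ops.append(op)
            terms.append(value)
    result = terms[0]
    for op, value in zip(add_ops, terms[1:]):
        result = OPS[op](result, value)
    return result

expr = [2, "+", 3, "*", 4]

# Pitfall 3: assumed angle units
as_radians = math.sin(30)                # 30 treated as radians
as_degrees = math.sin(math.radians(30))  # 30 degrees converted first

print(f"rounding error: {rounded - exact:.2f}")
print(immediate_execution(expr), with_precedence(expr))  # 20 14
print(as_radians, as_degrees)
```

Rounding the monthly rate to four decimal places (0.0042) looks harmless, but compounded over 360 months it moves the result by roughly $54. The sine of "30" comes out negative when the input is read as radians, and very close to 0.5 when it is read as degrees.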

Why Symbols Matter More Than Words

Think about the last time you used an app to scan a worksheet. These apps use Optical Character Recognition (OCR). OCR is brilliant until it hits a smudge, a curly bracket that looks like a 1, or a variable like '$v$' that looks like a '$\nu$' (nu).

If you're looking for answers for math questions that involve Greek letters or subscripts, manual entry into a tool like Desmos or Symbolab is far more reliable than a camera scan. These platforms let you build the equation block by block, which forces you to acknowledge the structure of the problem. You start seeing the "skeleton" of the math.

The Professional Context: Beyond the Classroom

If you're in business or data science, "math answers" aren't about solving for $x$. They’re about interpreting a p-value or understanding the variance in a supply chain model. Here, the "answer" is often a "maybe."

Take the Monty Hall Problem. It's a classic probability puzzle where the intuitive answer (two doors are left, so switching can't matter) is wrong: switching wins two-thirds of the time. It's so counter-intuitive that even Paul Erdős, one of the most prolific mathematicians of the 20th century, remained skeptical until he saw a computer simulation. If a genius can get it wrong, your first Google result definitely can.
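You can run the same kind of experiment that convinced Erdős. The Python simulation below is a minimal sketch under the standard rules, which are assumptions you should check against any version of the puzzle you meet: the car is placed uniformly at random, the host knows where it is, always opens a goat door the contestant didn't pick, and always offers the switch. `monty_hall_trial` and `win_rate` are names chosen for this sketch.

```python
import random

def monty_hall_trial(switch: bool, rng: random.Random) -> bool:
    """Play one game; return True if the contestant wins the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # Host opens a door that is neither the contestant's pick nor the car
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Move to the one remaining closed door
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car

def win_rate(switch: bool, trials: int = 100_000, seed: int = 42) -> float:
    rng = random.Random(seed)  # seeded so the run is reproducible
    wins = sum(monty_hall_trial(switch, rng) for _ in range(trials))
    return wins / trials

print(f"stay:   {win_rate(switch=False):.3f}")
print(f"switch: {win_rate(switch=True):.3f}")
```

With 100,000 games the "stay" rate lands near 1/3 and the "switch" rate near 2/3. Change the host's rules (say, he opens a door at random and might reveal the car) and the numbers change, which is exactly why the setup matters more than the headline answer.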

When searching for professional-grade answers for math questions, you should be looking at sites like arXiv for preprint papers or specialized forums like MathOverflow. This is where the real nuance lives. It's where people argue about the validity of a proof rather than just handing out a number.

Trust, But Verify

The best way to use the internet for math is as a tutor, not a ghostwriter. If you find an answer, try to work backward. If you’re solving a quadratic equation, plug your result back into the original equation. If the two sides of the equals sign don't match, the internet lied to you.


It happens more than you'd think.
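Here is what "plug it back in" looks like as code. This is a sketch: `residual` and `is_root` are hypothetical helper names, and the tolerance of `1e-9` is an arbitrary choice meant to absorb floating-point noise, not a universal standard.

```python
import math

def residual(a, b, c, x):
    """Left-hand side of a*x**2 + b*x + c = 0, evaluated at x."""
    return a * x**2 + b * x + c

def is_root(a, b, c, x, tol=1e-9):
    # Floating-point answers rarely give exactly 0, so allow a small tolerance
    return math.isclose(residual(a, b, c, x), 0.0, abs_tol=tol)

# x^2 - 5x + 6 = 0 has roots 2 and 3; suppose a website also claimed 4
for candidate in (2, 3, 4):
    print(candidate, is_root(1, -5, 6, candidate))

# A float answer such as sqrt(2) for x^2 - 2 = 0 still passes the check
print(is_root(1, 0, -2, math.sqrt(2)))
```

The bogus answer 4 leaves a residual of 2, so it fails immediately. No appeal to authority required.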

Actionable Steps for Better Math Accuracy

Stop treating Google like a calculator and start treating it like a library. Here is how to actually get reliable results:

- Use Search Operators: If you need help with a specific topic like Taylor Series, search `site:edu "Taylor Series"` to skip the fluff and get straight to university lecture notes.

- Cross-Reference Solvers: Run your problem through both WolframAlpha and Symbolab. If they disagree, the problem is likely in how you've input the syntax or a specific "edge case" in the formula.

- Check the "Talk" Pages: On Wikipedia, the "Talk" tab at the top of a math entry is a goldmine. It’s where experts debate errors in the article. If a formula is controversial or has a common typo, you'll find it there.

- Visualize First: Before looking for a numerical answer, use Desmos to graph the function. If the graph doesn't look like what you expected, the "answer" doesn't matter because you've likely set up the problem incorrectly.

- Identify the Source: If an answer comes from a site that requires a subscription to see the "steps," be wary. These are often AI-generated on the fly and aren't peer-reviewed.

Math isn't a spectator sport. The tools we have now are meant to be the bicycle for the mind, not the engine. Use them to check your logic, explore visualizations, and access high-level proofs, but always keep your own "sanity check" running in the background. If a result looks too simple or wildly complex for the context, it is probably wrong.

Verify the variables. Check your units. Never trust a single source without a second opinion.