You’re scrolling. It’s 11:00 PM. You see a headline that makes your blood boil or your jaw drop. Maybe it’s a politician saying something truly unhinged, or a "miracle cure" for a disease that doctors have been fighting for decades. You share it. Your aunt shares it. Within three hours, it’s everywhere. Then the correction comes out two days later. By then, nobody cares. The damage is done. False news on social media isn’t just a "glitch" in our digital lives; it feeds the same engagement machine that makes many of the internet’s biggest platforms so profitable.

It’s frustrating.

We like to think we’re too smart to be fooled, but the data says otherwise. A massive study by MIT researchers, published in Science in 2018, found that on Twitter (now X) true stories took about six times as long as false ones to reach 1,500 people. Bots weren't the main culprit, either: the researchers found that bots spread true and false stories at about the same rate. It was because of us. Real people. We are attracted to the "novelty" and the emotional punch of a lie. Truth is often boring. Lies are cinematic.

The Viral Architecture of Deception

The platforms aren't neutral observers. Whether we’re talking about TikTok, Meta, or X, the algorithms are designed to keep you looking at the screen. Engagement is the currency that matters most. If a false story generates a thousand angry comments, the algorithm sees that as a "success" and pushes it to ten thousand more people. It doesn't have a moral compass. It just has a spreadsheet.
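To make that "spreadsheet" concrete, here is a minimal sketch of an engagement-only ranker. It is not any real platform's algorithm: the `Post` fields, the weights, and the sample posts are all invented for illustration. The point is simply that accuracy never enters the score.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    likes: int
    comments: int
    shares: int
    accurate: bool  # we know this, but the ranker never looks at it


def engagement_score(post: Post) -> int:
    # Hypothetical weights: comments and shares count for more than likes.
    return post.likes + 2 * post.comments + 3 * post.shares


def rank_feed(posts: list[Post]) -> list[Post]:
    # Highest engagement first; accuracy plays no part in the ordering.
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("City council approves budget", likes=120, comments=10, shares=5, accurate=True),
    Post("Outrageous fabricated claim", likes=300, comments=1000, shares=400, accurate=False),
]

feed = rank_feed(posts)
for post in feed:
    print(engagement_score(post), post.title)
```

With these made-up numbers, the fabricated post lands at the top of the feed purely because a thousand angry comments count as engagement.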

Gordon Pennycook, a psychologist who has spent years studying why people believe weird stuff, points out that it’s not always about a lack of intelligence. It’s about a lack of attention. We’re "lazy" thinkers when we’re on our phones. We lean on our intuition rather than our analytical brain. If a headline fits what we already believe about the world, we hit share without even clicking the link. Honestly, plenty of people never read past the headline before passing it on to their entire contact list.

Think about the "Pope Francis Endorses Donald Trump" story from 2016. It was a total fabrication. Yet it racked up nearly a million engagements on Facebook. Or consider the AI-generated image of an explosion near the Pentagon that circulated in May 2023. It went viral in minutes, caused a brief dip in the stock market, and was debunked by the Arlington County Fire Department shortly after. The speed of the lie outpaced the physical reality of the situation.

Why Fact-Checking Often Fails

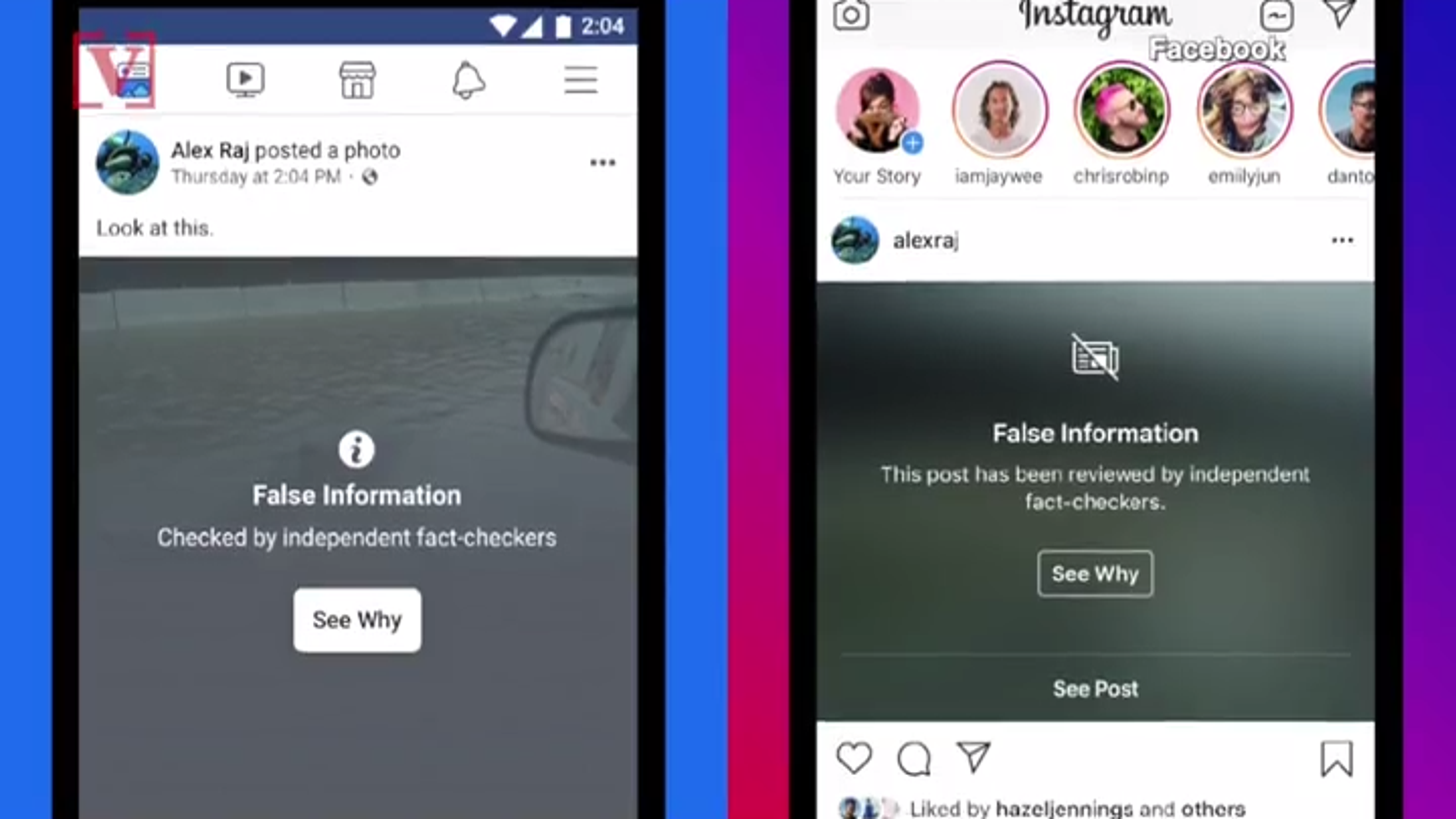

You’d think a simple "Fact Check" label would fix things. It doesn't. Sometimes, it actually makes it worse. This is known as the "backfire effect," though recent social science suggests it’s more about "identity-protective cognition." Basically, if you tell someone their favorite political meme is false, they don't always thank you for the correction. Often, they dig their heels in deeper. They see the fact-checker as part of a "biased establishment."

It’s a mess.

We’ve moved into an era where "truth" is becoming decentralized. When everyone has a printing press in their pocket, the gatekeepers are gone. That’s good for democracy in some ways, but it’s a nightmare for shared reality.

The Business of Lies: Follow the Money

False news isn't always about politics. A lot of the time, it's just about a paycheck. In the lead-up to the 2016 US election, a group of teenagers in Veles, Macedonia, ran over 100 websites churning out sensationalist American political news. They didn't care about the candidates. They cared about Google AdSense revenue. They found that pro-Trump stories performed better than pro-Clinton stories in terms of clicks, so they pivoted. It was a business model built on friction and fabrication.

Today, that model has evolved. We have "pink slime" journalism: sites that look like local news outlets but are actually funded by political dark money. They use names like The Lansing Sun or The Arizona Monitor to sound legitimate, but they’re just content farms for slanted or fabricated stories. They exploit the trust people still have in local reporting to slip in biased or outright false narratives.

The Role of Generative AI

If 2016 was the year of the "troll farm," 2024 and 2025 have been the years of the "AI deluge." It’s never been easier to create high-quality nonsense. Deepfakes used to require a Hollywood-level studio. Now? You can make a video of a world leader saying something scandalous using a free app and 30 seconds of audio.

The problem isn't just that we'll believe the fake stuff. It’s that we’ll stop believing the real stuff. This is called the "liar’s dividend." When everything could be fake, a politician caught in a real scandal can just say, "That’s an AI deepfake," and their supporters will believe them. It erodes the very foundation of evidence-based debate.

How to Spot the Grift Without Losing Your Mind

You don't need a PhD in communications to navigate this. You just need to slow down. The biggest enemy of false news on social media is a five-second pause.

First, check the source. Is it a URL you recognize? Is it bbc.com or is it bbc-news-reports-site.xyz? Scammers love to "typosquat" or use slightly off-brand domains to trick your brain. If the "About Us" page is vague or non-existent, that’s a massive red flag.
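If you like to automate your paranoia, the domain check can be roughed out in a few lines of Python. This is only a sketch: the `KNOWN_DOMAINS` allowlist and the `classify_domain` helper are made up for illustration, and the "contains a brand name" test is a crude heuristic, not a real typosquat detector.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; a real one would be much longer.
KNOWN_DOMAINS = {"bbc.com", "nytimes.com", "apnews.com"}


def get_host(url: str) -> str:
    # urlparse only finds the hostname when the URL includes a scheme (https://...).
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host


def classify_domain(url: str) -> str:
    host = get_host(url)
    # Exact match, or a genuine subdomain such as news.bbc.com.
    for domain in KNOWN_DOMAINS:
        if host == domain or host.endswith("." + domain):
            return "known"
    # Crude check: the host borrows a known brand name but is not that brand's domain.
    for domain in KNOWN_DOMAINS:
        brand = domain.split(".")[0]
        if brand in host:
            return "lookalike"
    return "unknown"


print(classify_domain("https://www.bbc.com/news"))
print(classify_domain("https://bbc-news-reports-site.xyz/story"))
print(classify_domain("https://example.org/post"))
```

The first URL matches the allowlist, the second borrows "bbc" without being bbc.com, and the third is simply unfamiliar. An "unknown" result doesn't mean a site is lying; it means you should go read the "About Us" page before trusting it.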

Second, look for the "primary source." If an article says "Study finds that eating chocolate cures cancer," find the actual study. Most of the time, the study says something like "High doses of a specific compound found in cocoa beans showed a 2% reduction in cell growth in a petri dish." The jump from the lab to the headline is where the lie lives.

Third, use lateral reading. This is a technique used by professional fact-checkers. Instead of staying on the page and trying to figure out if it's true, open a new tab and search for the topic. See what other, reputable outlets are saying. If a major event happened, The New York Times, Wall Street Journal, and Associated Press will be talking about it. If only "TruthEagleDaily.net" has the scoop, it’s probably not a scoop. It’s a fabrication.

The Emotional Trigger Test

This is the most important one. Ask yourself: "How does this make me feel?"

If a post makes you feel incredibly angry, smug, or scared, it was likely designed to do exactly that. Professional manipulators know that when we are in a high-arousal emotional state, our critical thinking centers shut down. We stop being skeptics and start being soldiers for our "side."

Actionable Steps to Clean Up Your Feed

We can’t wait for the tech giants to save us. They’ve shown time and again that their loyalty is to their shareholders, not to the truth. You have to take control of your own information diet.

- Diversify your follows. If your entire feed is an echo chamber of people you agree with, you are the perfect target for false news on social media. Follow a few people you disagree with (not the trolls, but the smart, principled ones). It forces your brain to stay sharp.

- Check the date. Old news is often recirculated as "breaking" to spark outrage. A photo of a riot from 2014 might be shared today to make it look like a city is currently on fire.

- Reverse image search. If a photo looks too perfect or too shocking, right-click it and "Search Image with Google." You’ll often find it’s from a completely different context or a different country.

- Support local journalism. Real reporters with names and reputations on the line are the best defense against anonymous digital lies. If you can afford it, pay for a subscription to a newspaper that actually employs editors and fact-checkers.

- Report, don't just ignore. Most platforms have a "misinformation" reporting tool. It’s not perfect, but it flags the content for human or AI review. If enough people flag a blatant lie, it can limit its reach.

The internet is a wild place. It’s basically the Wild West, but with fiber-optic cables and better graphics. Staying informed requires work. It requires us to be more than just passive consumers. It requires us to be citizens who value reality over clicks.

Start by being the person who doesn't share the "outrage of the day" until you've spent three minutes verifying it. You might not get as many likes, but you'll have your integrity. And in an era of digital chaos, that's worth a lot more.


Verify the timestamp on any viral video before sharing to ensure it isn't "zombie content" from years ago. Use tools like the InVID verification plugin or Snopes to cross-reference breaking claims. Finally, set a "2-minute rule" for any political or health-related post: if you haven't read the linked source for at least two minutes, do not hit the share button.
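For the spreadsheet-minded, those last two habits boil down to a tiny pre-share checklist. The sketch below is purely illustrative: the `share_warnings` helper and the 30-day cutoff for "zombie content" are assumptions, and only the two-minute threshold comes from the rule above.

```python
from datetime import date

MAX_AGE_DAYS = 30        # hypothetical cutoff for "zombie content"
MIN_READ_SECONDS = 120   # the 2-minute rule


def share_warnings(published: date, today: date, seconds_read: int) -> list[str]:
    """Return the reasons to pause before sharing; an empty list means go ahead."""
    warnings = []
    age_days = (today - published).days
    if age_days > MAX_AGE_DAYS:
        warnings.append(f"published {age_days} days ago")
    if seconds_read < MIN_READ_SECONDS:
        warnings.append("source read for under two minutes")
    return warnings


# A 2014 riot photo resurfacing as "breaking" after a 15-second skim.
print(share_warnings(date(2014, 8, 10), date(2024, 8, 10), seconds_read=15))

# Yesterday's article, read for three minutes.
print(share_warnings(date(2024, 8, 9), date(2024, 8, 10), seconds_read=180))
```

The first call comes back with two warnings and the second with none. No code can tell you whether a story is true, but it can remind you to look at the date and actually read the thing.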