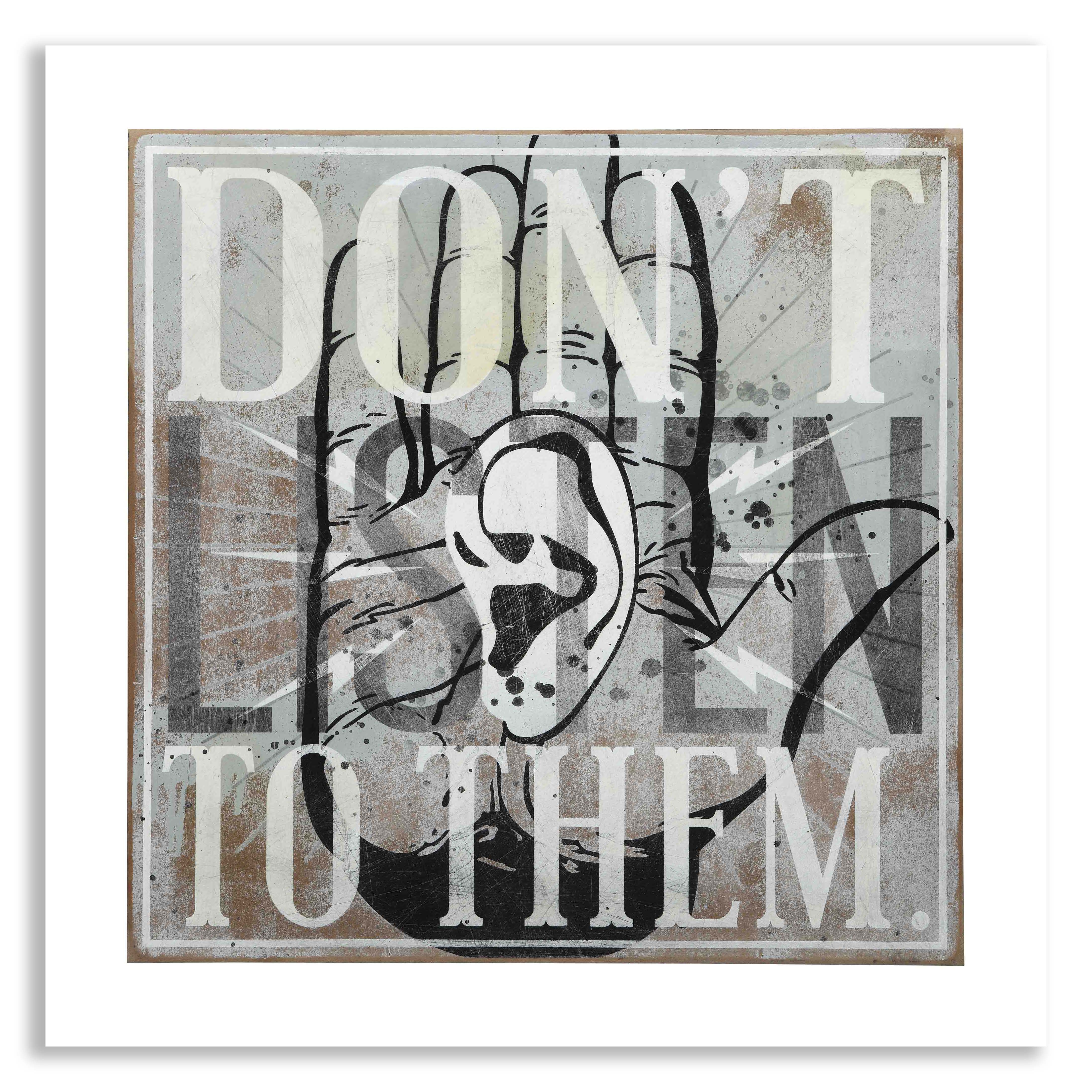

You've probably heard the hype. Every "thought leader" on LinkedIn is screaming that if you aren't using a trillion-parameter LLM for your local bakery's inventory system, you're basically living in the Stone Age. It's exhausting. Honestly, the loudest voices in the machine learning space are often the ones furthest from the actual code. When people say "don't listen to them" about ML, they aren't just being contrarian for the sake of it. They're reacting to a massive disconnect between academic research and the messy, high-latency, budget-constrained reality of production environments.

Machine learning has a noise problem.

The field moves so fast that "best practices" from six months ago are now considered "technical debt." If you listen to every influencer or venture-backed researcher, you’ll end up over-engineering a solution that costs $50,000 a month in inference fees just to categorize emails. It’s overkill. Pure and simple.

The Myth of "Bigger is Always Better"

There is this pervasive idea that state-of-the-art (SOTA) benchmarks are the only thing that matters. If a paper comes out showing a 0.2% increase in top-1 accuracy on ImageNet, the hype train leaves the station immediately. But here is the thing: SOTA doesn't mean "good for your business."

Most of these massive models are trained on clean, curated datasets that look nothing like the "garbage in, garbage out" data you're likely dealing with. If you're building a recommendation engine for a niche e-commerce site, a well-tuned XGBoost model on tabular features will often match or beat a complex transformer architecture. It's cheaper. It's faster. You can actually explain to your boss why it made a specific decision. That last part is huge. Interpretability isn't just a buzzword; it's increasingly a legal requirement in regulated industries, especially with the EU AI Act and similar regulations worldwide.

Why do they keep pushing the big stuff? Because big models sell GPUs and cloud credits. It’s an ecosystem designed to keep you spending. When you hear that "linear regression is dead," remember that most of the world's most successful financial systems still run on versions of it. Don't let the shiny new toy distract you from the tool that actually works.


Stop Obsessing Over Clean Data

You’ll hear data scientists say they spend 80% of their time cleaning data. Then they tell you that you must have a perfectly labeled, pristine dataset before you even think about training.

That's a fantasy.

In the real world, data is a disaster. It has missing values, it has duplicates, and half the timestamps are in the wrong time zone. If you wait for "perfect" data, you'll never ship anything. Modern techniques like weak supervision (Snorkel-style programmatic labeling, for example) allow you to move forward with "noisy" labels. Some of the most robust systems in production today (think Google's search ranking or Amazon's logistics) thrive on the very noise that academics try to prune away. The noise is often where the signal lives. It represents the actual chaos of the human experience.
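To make the weak supervision idea concrete, here is a minimal sketch in plain Python. The three labeling functions and the spam/ham task are hypothetical, invented for illustration. Each function encodes one cheap, imperfect rule and may abstain, and the votes are combined by simple majority. Tools such as Snorkel go further and fit a model over how the functions agree and disagree, so treat this as the simplest possible version of the technique, not a substitute for it.

```python
from collections import Counter

ABSTAIN = None  # returned when a labeling function has no opinion

# Hypothetical labeling functions for a spam task. Each one is a cheap,
# imperfect rule that is allowed to abstain.
def lf_mentions_prize(text):
    return "spam" if "prize" in text.lower() else ABSTAIN

def lf_many_exclamations(text):
    return "spam" if text.count("!") >= 3 else ABSTAIN

def lf_mentions_invoice(text):
    return "ham" if "invoice" in text.lower() else ABSTAIN

LABELING_FUNCTIONS = [lf_mentions_prize, lf_many_exclamations, lf_mentions_invoice]

def weak_label(text):
    """Majority vote over non-abstaining functions; ties and no votes abstain."""
    votes = [lf(text) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v is not ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN  # conflicting evidence, leave the example unlabeled
    return ranked[0][0]

noisy_labels = [
    weak_label("You won a PRIZE!!!"),
    weak_label("Please find the invoice attached"),
    weak_label("hello there"),
]
```

Examples where every function abstains simply stay unlabeled, which is the point: you label what you can cheaply and ship, instead of waiting for a pristine dataset.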

The Problem With Automated Everything

AutoML is the latest "miracle" tool. "Just drop your data in, and we'll find the best model!" they say.

It sounds great on paper. In practice? It’s a black box that frequently overfits to the most irrelevant features in your dataset. If your AutoML tool decides that "user ID" is the best predictor of "churn," you haven't found a breakthrough. You've found a leak. You still need a human in the loop who understands the domain. Machine learning is a tool for experts, not a replacement for them.
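A human in the loop can start with something as crude as the check below. It is a sketch, not a real leakage detector: it flags columns where almost every row has its own value, which is what an ID column looks like. The `threshold` value and the sample rows are assumptions, and a real-valued feature such as a price would trip the same check, so the output is a list of candidates to review, not a verdict.

```python
def identifier_like_columns(rows, threshold=0.95):
    """Return column names whose share of distinct values is at least `threshold`."""
    if not rows:
        return []
    flagged = []
    for column in rows[0]:
        distinct = len({row[column] for row in rows})
        if distinct / len(rows) >= threshold:
            flagged.append(column)
    return flagged

# Hypothetical churn data: user_id is unique per row, the other columns are not.
rows = [
    {"user_id": 101, "plan": "free", "churned": 0},
    {"user_id": 102, "plan": "pro", "churned": 0},
    {"user_id": 103, "plan": "free", "churned": 1},
    {"user_id": 104, "plan": "pro", "churned": 1},
]

suspects = identifier_like_columns(rows)  # columns to inspect before training
```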

Don't Listen to Them: The Case for Small Data

We are told we need "Big Data." Millions of rows. Terabytes of logs.

Actually, you can do a lot with a few hundred high-quality examples. Transfer learning changed the game here, but people still act like it's 2012. You can take a pre-trained model, freeze most of its weights, and fine-tune the remaining layers on a tiny, specific dataset to get strong results. This is how medical AI is actually making progress. You don't have a billion X-rays of a rare lung condition. You might have fifty. Using specialized architectures like U-Nets or few-shot learning techniques is far more effective than trying to "feed the beast" with more mediocre data.

Small data is also more ethical. It’s easier to audit. You can actually look at every single row and see if there’s a bias problem. When you have a billion rows, bias is a ghost in the machine—you know it's there, but good luck finding exactly where it’s hiding.

The "Real-Time" Fallacy

"Your model needs to update in real-time!"

Does it? Really?

Implementing online learning—where the model updates with every new piece of data—is an absolute nightmare for stability. One bad batch of data can "poison" the model and tank your performance across the board. Most businesses are perfectly fine with batch processing. If your recommendation engine updates once every 24 hours, your users probably won't even notice. The cost and complexity of building a real-time streaming ML pipeline are rarely worth the marginal gains in accuracy.

Stick to what you can maintain. A model that works 95% of the time and is easy to fix is infinitely better than a "real-time" model that breaks every Sunday at 3:00 AM.

Technical Realities vs. Marketing Speak

Let's talk about the hardware. The "them" in "don't listen to them" usually want you to rent an A100 cluster.

- Quantization: Storing weights as 8-bit integers instead of 32-bit floats shrinks a model by about 4x, often with very little loss in accuracy.

- CPU Inference: For many tasks, a modern CPU is plenty fast. You don't always need a dedicated GPU.

- Pruning: Removing "dead" neurons from a network can make it run significantly faster on edge devices.

These aren't the things that get the most clicks on tech news sites because they aren't "revolutionary." They are just good engineering. If your model takes three seconds to respond, it doesn't matter how smart it is; the user has already closed the tab. Latency is a feature.
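The 4x figure for quantization is just arithmetic: four bytes per 32-bit float versus one byte per 8-bit integer. The sketch below shows symmetric per-tensor quantization on a made-up list of weights. Real toolchains add calibration, per-channel scales, and quantized kernels, so this only illustrates where the size saving and the rounding error come from.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]

# Made-up weights for illustration.
weights = [0.8, -1.27, 0.05, 0.0, 0.635]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# 4 bytes per float32 weight versus 1 byte per int8 weight.
compression = (4 * len(weights)) / (1 * len(weights))
```

Whether that rounding error matters is an empirical question for your model, which is why you measure accuracy before and after instead of trusting the rule of thumb.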

The Evaluation Trap

Don't trust a single metric. Accuracy is the most lied-about stat in the industry. If 99% of your users don't churn, and your model predicts "no one will ever churn," your model is 99% accurate.

It’s also completely useless.

You need to look at precision, recall, and specifically the confusion matrix. You need to understand the cost of a False Positive versus a False Negative. In cancer detection, a False Negative is a catastrophe. In spam filtering, a False Positive (a legitimate email sent to the junk folder) is arguably worse than seeing a little extra junk. The "experts" will give you a generic score. You need to define what "success" looks like for your specific business case.
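Here is the churn example worked through in plain Python. The 1,000 users follow the 99% figure above; the two cost constants are hypothetical numbers chosen only to show how a cost-weighted view differs from accuracy.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn

# 1,000 users, 10 of whom churn (label 1). The "model" predicts nobody churns.
y_true = [1] * 10 + [0] * 990
y_pred = [0] * 1000

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
accuracy = (tp + tn) / len(y_true)                 # 0.99
recall = tp / (tp + fn) if (tp + fn) else 0.0      # 0.0, every churner is missed
precision = tp / (tp + fp) if (tp + fp) else 0.0   # 0.0, nothing was flagged

# Hypothetical business costs: a missed churner costs far more than a
# retention offer sent to someone who was going to stay anyway.
COST_FALSE_NEGATIVE = 500
COST_FALSE_POSITIVE = 20
total_cost = fn * COST_FALSE_NEGATIVE + fp * COST_FALSE_POSITIVE  # 5000
```

The accuracy looks great and the recall is zero. Once you attach costs to each cell of the confusion matrix, the "99% accurate" model is visibly the expensive one.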

Moving Toward Pragmatic Machine Learning

So, how do you actually apply this? Start by ignoring the "all-in" approach. You don't need an "AI Strategy." You need to solve a specific problem.

- Start with a Heuristic: Can you solve this with an `if/else` statement? If yes, do that first. It’s your baseline. If your "advanced" ML model can’t beat a simple rule-based system, scrap the model.

- Focus on Features, Not Architectures: Spending a week engineering a better feature (like "time since last login") will almost always yield better results than spending a week tuning hyperparameters on a neural network.

- Deploy Early: Don't wait for the perfect model. Put a "dumb" model in production and see how it handles real-world traffic. This will teach you more about your data than any offline validation ever could.

- Monitor Drift: Models decay. The world changes. Many models trained on pre-2020 data degraded badly once the pandemic changed how people behaved. Build monitoring systems that tell you when the model's environment has shifted.
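For the drift monitoring item, one common and cheap signal is the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in production. The sketch below is a plain-Python version under simple assumptions: equal-width bins taken from the training data, out-of-range values clipped into the edge bins, and a small floor to avoid dividing by zero. The 0.25 alert level is a widely used rule of thumb, not a law, and the data is synthetic.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a new sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the feature is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            index = int((v - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clip into the edge bins
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic feature values: production traffic has shifted upward by 50.
training = list(range(100))
production = [v + 50 for v in training]

psi_same = population_stability_index(training, training)
psi_shifted = population_stability_index(training, production)
needs_review = psi_shifted > 0.25  # rule-of-thumb alert level
```

Run something like this per feature on a schedule and you get an early warning that does not depend on waiting for labels to arrive.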

The most successful ML practitioners are the ones who are the most skeptical. They treat every new "breakthrough" with a grain of salt. They prioritize reliability over flashiness. When you stop listening to the noise, you can finally start building things that actually provide value. Machine learning is just software. It’s high-stakes software with a lot of math involved, but it’s still just a tool to get a job done.

Keep your models lean. Keep your data understood. And for heaven's sake, keep your costs under control. That's how you actually win in this field.

Actionable Next Steps:

- Audit your current ML pipeline for "complexity debt"—identify one part of the system that could be simplified without losing performance.

- Set up a "baseline" comparison using a simple linear model to see if your complex deep learning model is actually earning its keep.

- Interview your end-users to see if the "accuracy" they care about matches the metrics you are tracking in your dashboard.
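The baseline comparison in the second step can be as small as the sketch below. It fits a one-feature least-squares line, scores it on held-out points, and asks whether the complex model beats it by a meaningful margin. All the numbers are made up, `complex_predictions` stands in for whatever your deep learning model outputs, and the 5% `min_relative_gain` is an arbitrary threshold you should set from your own costs.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def earns_its_keep(baseline_error, complex_error, min_relative_gain=0.05):
    """True only if the complex model cuts error by at least `min_relative_gain`."""
    return complex_error <= baseline_error * (1 - min_relative_gain)

# Made-up training and held-out data.
train_x, train_y = [1, 2, 3, 4], [2.1, 3.9, 6.2, 7.8]
test_x, test_y = [5, 6], [10.1, 11.9]

slope, intercept = fit_line(train_x, train_y)
baseline_predictions = [slope * x + intercept for x in test_x]
complex_predictions = [9.9, 11.7]  # hypothetical output of the complex model

baseline_mae = mean_absolute_error(test_y, baseline_predictions)
complex_mae = mean_absolute_error(test_y, complex_predictions)
keep_complex_model = earns_its_keep(baseline_mae, complex_mae)
```

In this made-up case the straight line wins, so `keep_complex_model` comes out false. If your real numbers look like that, the expensive model is the one that has to justify itself.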