You've seen the clips. Those eerie, hyper-realistic faces melting into landscapes or flowers blooming with impossible textures on Twitter and Reddit. Naturally, you’re asking: does Midjourney do video yet? If you’re looking for a simple "Generate Video" button inside the Discord server, you’re going to be disappointed. It isn't quite that easy.

Honestly, the state of Midjourney video is a bit of a "yes, but actually no" situation.

For years, Midjourney has been the king of static imagery. David Holz, the founder, has been relatively quiet but intentional about moving into motion. We aren't in the era of full-scale filmmaking within a single prompt just yet, but the platform has slowly leaked out features that hint at where we are going. It’s a messy, exciting transition period.

The short answer to "Does Midjourney do video?"

Right now, Midjourney does not have a native text-to-video engine that competes directly with tools like Kling or OpenAI's Sora. If you type /imagine a cat flying through space and expect an MP4 file back, it won't happen.

What you can do is use the --video parameter.

This is an old trick, but it's still there. When you add this tag to the end of your prompt, the bot records a short timelapse of the image being generated. It shows the noisy clouds of pixels slowly resolving into your final artwork. To get the link, you have to react to the job with an envelope emoji. It's a bit clunky. It's also not "video" in the sense of characters moving or a plot unfolding. It’s just a recording of the math happening in the background.

But things changed recently with the "Pan" and "Zoom" features.

By using the "Zoom Out" or "Directional Pan" buttons on a generated image, users have found they can "flicker" through multiple frames to create a sense of motion. It's more like traditional animation where you're stitching together high-quality stills. It's tedious work. It requires a third-party editor like Premiere Pro or CapCut. But it's how those viral "infinite zoom" videos are made.

Why Midjourney hasn't gone full Sora yet

Building a video model is far harder than building a static image model. Think about it. An image is a single slice of time. A video requires temporal consistency: every frame has to agree with the one before it. If a character blinks in frame one, their eyes need to be in the same place in frame two.

Midjourney's obsession has always been aesthetic quality.

If you look at competitors like Runway Gen-2 or Pika Labs, they have motion, but they often lack that "Midjourney soul"—that specific, artistic lighting and texture that makes MJ stand out. David Holz mentioned in a Discord Office Hours session that they are working on a video model, but they won't release it until it meets their standards for "high-quality" pixels. They don't want grainy, shaky footage. They want cinematic excellence.

There is also the hardware problem.

Processing video requires massive amounts of VRAM. Midjourney already puts a strain on Discord's infrastructure. Moving to video means scaling up their GPU farms significantly. It’s a business hurdle as much as a technical one.

The workaround: The Midjourney-to-Runway pipeline

Since the answer to "does Midjourney do video" is "not natively," the pro community has developed a workaround. This is currently the industry standard for AI filmmaking.

Basically, you use Midjourney as the "Director of Photography." You generate a stunning, high-resolution still image that captures the lighting, the mood, and the character design. You then take that image and drop it into a dedicated video AI tool like Runway Gen-3, Luma Dream Machine, or Kling.

These tools have an "Image-to-Video" feature.

- Generate your masterpiece in Midjourney.

- Upscale it.

- Upload it to Luma or Runway.

- Add a motion prompt like "camera pans left" or "slow hair movement."

This workflow gives you the best of both worlds. You get Midjourney’s unmatched aesthetic with another tool’s motion engine. It’s how most of the "AI movie trailers" you see on YouTube are actually produced. It’s not a one-click process, but the results are stunning.

What about the Midjourney Web Alpha?

If you’ve been invited to the Midjourney web-based editor, you’ve probably noticed the interface is much cleaner than Discord. There have been rumors and small leaks regarding a "Motion" slider appearing in internal testing.

Midjourney's "Style Reference" (SREF) and "Character Reference" (CREF) features already work incredibly well for maintaining consistency. Consistency is the holy grail of video. If Midjourney can make a character look identical in 100 different images, they are most of the way to a video model.

The speculation in the community—and based on Holz's recent comments—is that we might see a dedicated "Motion" tool within the web interface before the end of the year. It likely won't be "text-to-video" at first. It will probably be "image-to-video," allowing you to animate the stills you’ve already created.

Why the --video parameter still matters

Even though it’s just a timelapse, the --video parameter is actually a great way to prove your work is "human-directed" AI. In an era where people are skeptical of AI-generated content, showing the "growth" of an image from noise to art is satisfying.

It’s also a great way to create social media "process" videos.

If you're a designer trying to show off your prompting skills, a 4x speed timelapse of a Midjourney generation is perfect for a TikTok background or an Instagram Reel. It captures the eye. It's a tiny bit of motion in a world of static squares.
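If you'd rather script that speed-up than do it by hand in an editor, here's a minimal sketch. It assumes you have ffmpeg installed and have already downloaded the timelapse; the file names are placeholders, and the function only builds the command rather than running it.

```python
import shlex


def speedup_command(src: str, dst: str, factor: float = 4.0) -> list[str]:
    """Build an ffmpeg argv that plays `src` `factor` times faster."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    # setpts rescales each frame's presentation timestamp; dividing by
    # the factor shortens the clip. -an drops any audio track.
    return [
        "ffmpeg", "-i", src,
        "-filter:v", f"setpts=PTS/{factor:g}",
        "-an", dst,
    ]


# Placeholder file names, for illustration only.
cmd = speedup_command("timelapse.mp4", "timelapse_4x.mp4")
print(shlex.join(cmd))
```

To actually run it, you could pass `cmd` to `subprocess.run(cmd, check=True)`.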

Real-world examples of "Pseudo-Video" in Midjourney

Check out the work of creators like Stas Kulesh or the AIFilm community on X (Twitter). They aren't waiting for a video button. They use a technique called "Inpainting" to change small parts of an image while keeping the rest static.

Imagine a portrait of a woman. You use the "Vary Region" tool, Midjourney's inpainting feature, to change only her eyes to be closed, then open, then looking left. You save each image. You string them together in an editor. Suddenly, you have a flickering, stop-motion style animation that looks incredibly intentional.
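If you don't want to open an editor for three frames, one possible route is ffmpeg's concat demuxer, which reads a plain text file listing each image and how long to hold it. The sketch below just writes that listing. The frame names and the half-second hold are made up for illustration, and it assumes your file names contain no single quotes.

```python
def concat_listing(frames: list[str], seconds_per_frame: float) -> str:
    """Return the text of an ffmpeg concat-demuxer file for a stop-motion clip."""
    lines = []
    for path in frames:
        lines.append(f"file '{path}'")
        lines.append(f"duration {seconds_per_frame:g}")
    # The concat demuxer tends to ignore the final duration, so the usual
    # workaround is to list the last frame once more without one.
    if frames:
        lines.append(f"file '{frames[-1]}'")
    return "\n".join(lines) + "\n"


# Hypothetical frames saved from three Vary Region passes.
frames = ["eyes_closed.png", "eyes_open.png", "eyes_left.png"]
print(concat_listing(frames, 0.5), end="")
```

Save the output as something like `frames.txt`, and a command along the lines of `ffmpeg -f concat -i frames.txt -pix_fmt yuv420p blink.mp4` should turn it into a clip.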

It’s a "hack." But in the creative world, hacks are everything.

Actionable steps for creators

If you want to start making video with Midjourney today, stop looking for a play button. Start thinking like a compositor.

First, master the "Character Reference" (CREF) tag. Use --cref [URL] to make sure your subject stays the same across multiple generations. This is the foundation of any video project. If your character’s face changes every time they move, the video will look like a fever dream (unless that's what you're going for).
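When you're generating a dozen shots of the same character, it's easy to forget the tag on one of them. Here's a small sketch of a helper that bolts the same parameters onto every prompt. The helper name, the shot descriptions, and the example.com URL are all placeholders; only the --cref, --ar, and --style raw parameters come from Midjourney itself.

```python
from typing import Optional


def build_prompt(
    description: str,
    cref_url: Optional[str] = None,
    aspect_ratio: Optional[str] = None,
    raw: bool = False,
) -> str:
    """Append Midjourney parameters to a prompt description."""
    parts = [description.strip()]
    if cref_url:
        parts += ["--cref", cref_url]
    if aspect_ratio:
        parts += ["--ar", aspect_ratio]
    if raw:
        parts += ["--style", "raw"]
    return " ".join(parts)


# Placeholder reference image; swap in the URL of your own character.
CHARACTER_URL = "https://example.com/character.png"

shots = [
    "woman walking through a rainy street, cinematic lighting",
    "woman looking out of a train window at dusk",
]
for shot in shots:
    print(build_prompt(shot, cref_url=CHARACTER_URL, aspect_ratio="16:9"))
```

Each printed line is what you'd paste after /imagine, so every shot points at the same reference image.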

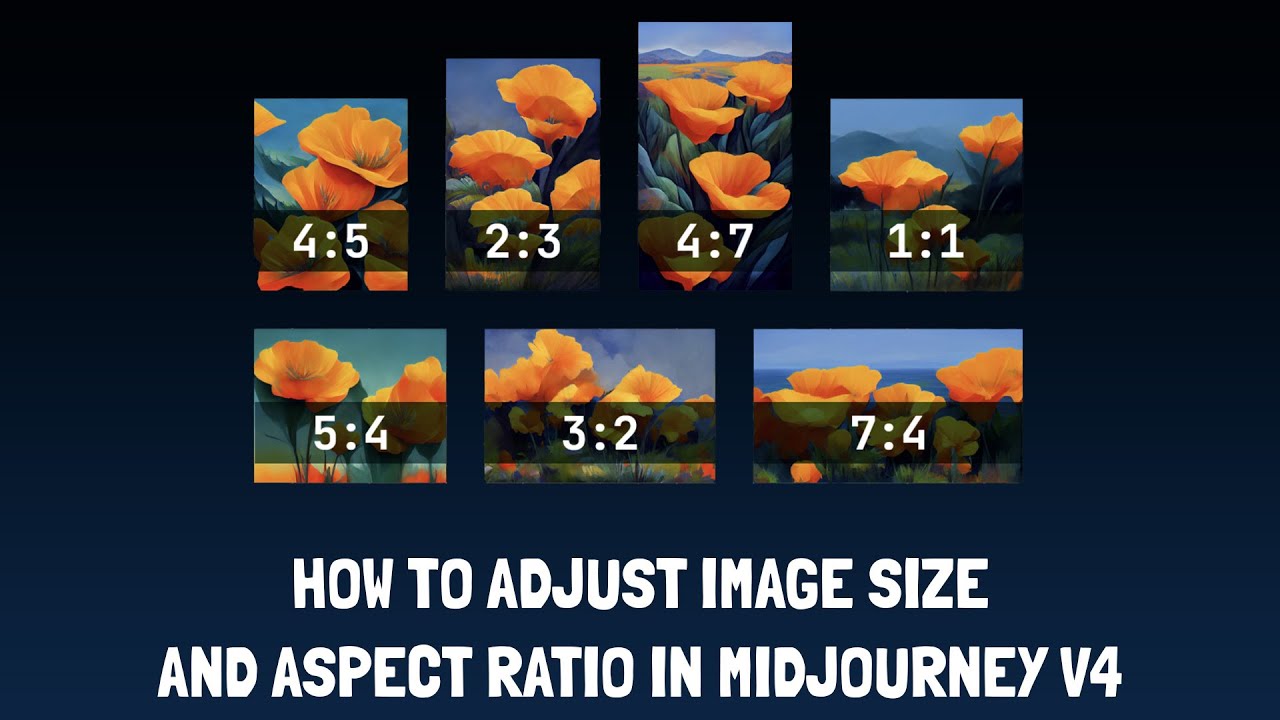

Second, export your images in 16:9 aspect ratio. Use the --ar 16:9 parameter at the end of your prompt. Most video AI tools prefer landscape images. If you feed a square image into a video generator, it will often crop it awkwardly or "hallucinate" the edges of the frame with messy results.
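To see what that awkward crop costs you, here's a quick back-of-the-envelope sketch. It computes the largest centered 16:9 box that fits inside an image and how much of the picture survives. The 1024x1024 size is just an illustrative square image, and a real video tool may crop differently.

```python
def center_crop_box(
    width: int, height: int, ratio_w: int = 16, ratio_h: int = 9
) -> tuple[int, int, int, int]:
    """Largest centered ratio_w:ratio_h box, as (left, top, right, bottom)."""
    if width * ratio_h > height * ratio_w:
        # Image is wider than the target: keep full height, trim the sides.
        new_w = height * ratio_w // ratio_h
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Image is taller than (or equal to) the target: trim top and bottom.
    new_h = width * ratio_h // ratio_w
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


w, h = 1024, 1024  # illustrative square image
left, top, right, bottom = center_crop_box(w, h)
kept = (right - left) * (bottom - top) / (w * h)
print((left, top, right, bottom))  # (0, 224, 1024, 800)
print(f"{kept:.0%} of the image survives")  # 56% of the image survives
```

In other words, a square image loses almost half its pixels on the way to landscape, which is a good reason to set the aspect ratio in the prompt instead.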

Third, try the "Zoom Out" method. Generate a close-up of an eye. Use the Zoom Out 2x button. Then do it again. And again. Take those five or six images into a video editor and play them in the order you generated them, from tightest to widest. You’ve just created a "cinematic pull-back" shot that looks like it was filmed on a $50,000 rig. Play them in reverse and you get a push-in on the eye instead.
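It helps to know how far the camera "travels" before you start clicking. The sketch below assumes each Zoom Out 2x click doubles the field of view, as the button label suggests, and that you saved your frames in generation order with the closest shot first. The function names and file names are hypothetical.

```python
def field_of_view_factors(zoom_outs: int, step: float = 2.0) -> list[float]:
    """Relative frame width for the starting image and each Zoom Out click."""
    return [step ** i for i in range(zoom_outs + 1)]


def order_frames(frames: list[str], start: str = "close") -> list[str]:
    """Order frames that were saved closest-first.

    start="close" begins tight and widens; start="wide" begins wide and closes in.
    """
    if start == "close":
        return list(frames)
    if start == "wide":
        return frames[::-1]
    raise ValueError("start must be 'close' or 'wide'")


# Five clicks gives six images; the last covers 32x the original width.
print(field_of_view_factors(5))  # [1.0, 2.0, 4.0, 8.0, 16.0, 32.0]

frames = [f"eye_{i}.png" for i in range(6)]  # hypothetical file names
print(order_frames(frames, start="wide"))
```

Five clicks is a big jump, so if the cut between frames feels abrupt, a slow scale animation on each still in your editor can smooth it out.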

Fourth, get a subscription to a "bridge" tool. Luma Dream Machine currently offers a few free credits, and it’s perhaps the best tool for taking a Midjourney image and making it move realistically. Upload your MJ image, don't even write a prompt, and just hit "Extend." The AI will guess how the scene should move. Usually, it’s surprisingly accurate.

The road ahead

Midjourney is a research lab. They don't move fast, and they don't care about being first; they care about being the best. While Sora grabbed the headlines, Midjourney is quietly perfecting the "V6" and "V7" models which will likely serve as the "brain" for their eventual video tool.

Don't wait for the update. The skills you learn now—how to prompt for lighting, how to maintain character consistency, and how to use external motion tools—will be the exact same skills you need when the "Video" button finally arrives.

The "video" in Midjourney right now is a DIY project. It's a puzzle. But for those willing to piece it together using the current toolset and external AI video generators, the results are already professional-grade.

Start by generating a high-contrast cinematic shot with --ar 16:9 and --style raw. Take that image and experiment with a motion tool. That is the current "pro" way to answer the question: does Midjourney do video? It does, as long as you're willing to be the editor.