Look at the number 5. Just sitting there. It looks clean, right? No trailing bits, no messy dots. If you ask a middle schooler if that number has a decimal, they’ll probably say "no" without blinking. But if you ask a software engineer or a pure mathematician, they might give you a look that says, "Well, it’s complicated."

So, do integers have decimals?

The short answer is no, not by definition. An integer is a whole number. It’s part of a specific set in mathematics—think of it like an exclusive club where nobody brings a fractional part to the party. But here is the kicker: just because you don't see a decimal doesn't mean it isn't effectively there in the background, lurking like a ghost in the machine.

Why We Say Integers Don't Have Decimals

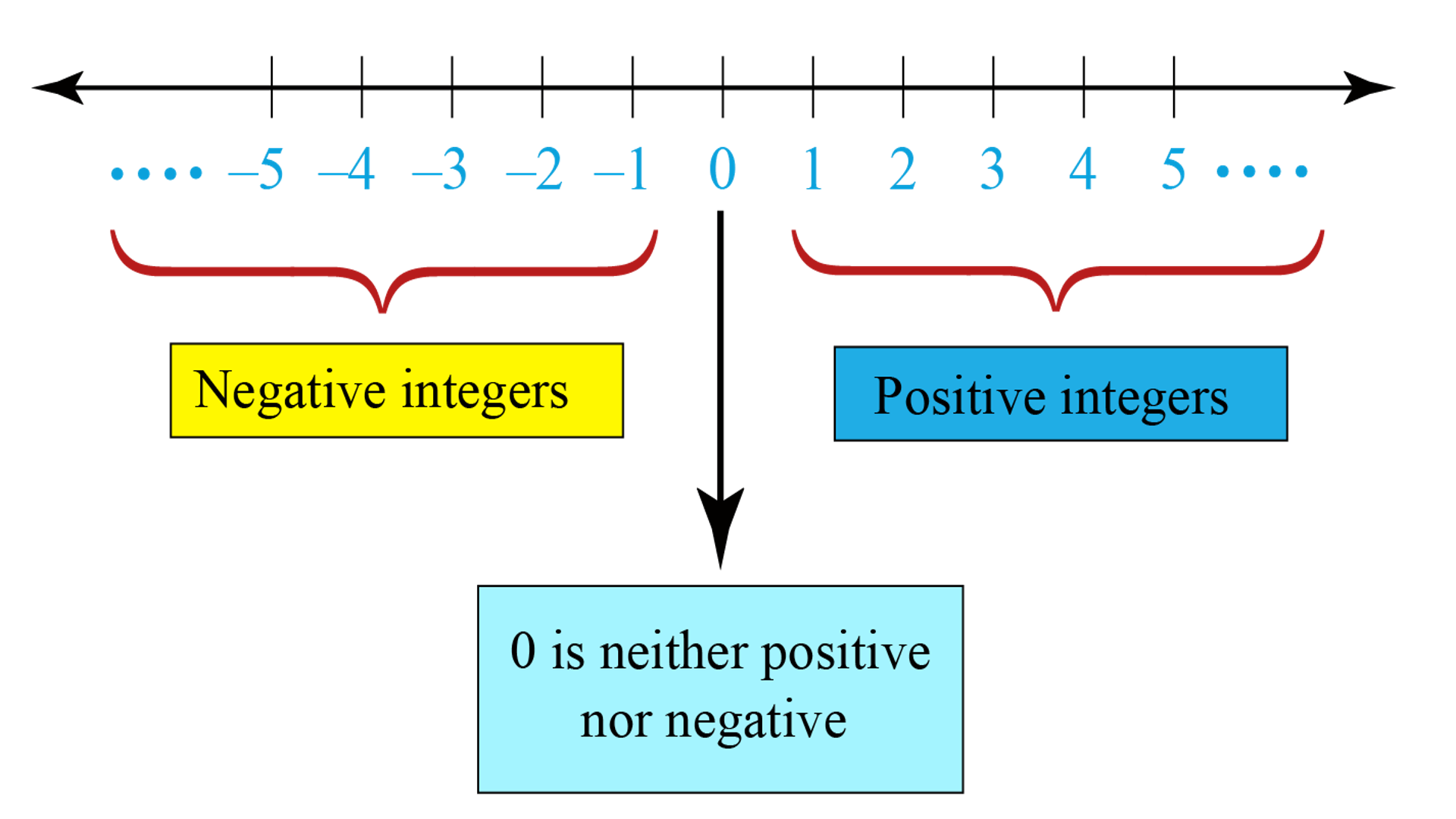

Let's get the textbook stuff out of the way first. In set theory, the integers are written $\mathbb{Z}$, from the German word Zahlen ("numbers"). The set contains $\ldots, -3, -2, -1, 0, 1, 2, 3, \ldots$ and keeps going without end in both directions.

By their very nature, these numbers are "whole": none of them carries a fractional part.

When you add a decimal point to a number, you are usually signaling that there is a fractional component involved. If you have 1.5, you have one whole and one half. If you have 1.0, you have one whole and... nothing else. This is where the confusion starts for most people.

Mathematically, $1$ and $1.0$ are equivalent in value. They represent the same point on a number line. However, they are not the same "type" of number once you get into programming and data work, where 1 is usually stored as an integer and 1.0 as a floating-point value.
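Here is a small Python sketch of that "same value, different type" idea. The variable names are made up for illustration; the behavior shown is standard Python.

```python
# Compare an integer and its "point zero" twin by value and by type.
whole = 1
with_point = 1.0

same_value = whole == with_point              # numeric comparison
same_type = type(whole) is type(with_point)   # int vs. float

print(same_value)   # True
print(same_type)    # False
print(type(whole).__name__, type(with_point).__name__)  # int float
```

Equal on the number line, but filed under different labels.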

The Decimal Point as a "Hidden" Feature

Think of an integer like a car without a trailer. It's just the car. A decimal number (a "float" in programming, or a real number written out with a fractional part) is a car with a trailer hitched to the back. Even if the trailer is empty, a "point zero," the hitch is still there.

You've probably seen this in a grocery store. A price tag might say \$5. Another might say \$5.00.

Are they the same? Yes, your wallet doesn't care. But the \$5.00 implies a level of precision that the flat \$5 doesn't necessarily shout about. In science, this is what we call significant figures. If a scientist records a weight as $10$ grams, it's a bit vague. If they record it as $10.00$ grams, they are telling you they used a scale accurate enough to measure hundredths of a gram, and those hundredths happened to be zero.
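If you want to see "same value, different precision" in code, Python's standard `decimal` module is one place where trailing zeros are remembered. This is just a sketch; the variable names and the gram readings are illustrative.

```python
from decimal import Decimal

coarse = Decimal("10")       # recorded to the nearest gram
precise = Decimal("10.00")   # recorded to the nearest hundredth of a gram

equal_in_value = coarse == precise

# The exponent in the tuple form tells us how many decimal places were written.
coarse_places = -coarse.as_tuple().exponent
precise_places = -precise.as_tuple().exponent

print(equal_in_value)                  # True
print(str(coarse), str(precise))       # 10 10.00
print(coarse_places, precise_places)   # 0 2
```

The two values compare equal, yet the second one still remembers that somebody bothered to write two zeros.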

Computers Care More Than You Do

This isn't just a "math nerd" debate. If you've ever dabbled in Python, Java, or C++, you know that whether a value is an integer or a decimal changes how your program stores it, divides it, and compares it.

Computers store integers and floating-point numbers (floats) in completely different ways in memory.

- Integers are stored as straightforward binary, typically in two's complement so that negative values fit too.

- Floating-point numbers use a more involved layout (most often the IEEE 754 standard) that splits the number into a sign, an exponent, and a significand.

If you hand a float to code that strictly expects an integer, it may refuse to compile or raise a type error. In Python, for instance, a float cannot be used as a list index. To a computer, 5 and 5.0 are equal in value but encoded as entirely different bit patterns. It's the difference between a whole brick and a bag of sand that just happens to weigh as much as a brick.
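To make the two storage schemes a bit more concrete, here is a rough sketch using Python's standard `struct` module. The 32-bit widths are an assumption chosen for readability (Python's own `int` is arbitrary-precision and its `float` is 64-bit), and the variable names are illustrative.

```python
import struct

# Pack 5 as a 32-bit signed integer and 5.0 as a 32-bit IEEE 754 float.
# Big-endian (">") so the bits read left to right.
int_bits = format(int.from_bytes(struct.pack(">i", 5), "big"), "032b")
float_bits = format(int.from_bytes(struct.pack(">f", 5.0), "big"), "032b")

# A 32-bit float is laid out as 1 sign bit, 8 exponent bits, 23 fraction bits.
sign = float_bits[0]
exponent = float_bits[1:9]
fraction = float_bits[9:]

print(int_bits)    # 00000000000000000000000000000101
print(float_bits)  # 01000000101000000000000000000000
print(sign, exponent, fraction)
```

The integer is just 101 in binary with leading zeros. The float stores 5.0 as 1.25 times 2 squared, with the exponent kept in biased form (129, or 10000001).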

Misconceptions That Mess With Your Head

People often get tripped up because of how we write things. We use the "decimal system" (base 10) for everything, and "decimal" there just means "based on ten." Because the same word also names the digits after the point, we assume decimals are everywhere.

They kind of are.

Every integer can be expressed as a decimal. You just slap a .0 on the end, which you can think of as padding it with a trailing zero. But expressing something as a decimal doesn't change its fundamental identity as an integer. It's like putting a costume on a dog. The dog is still a dog, even if it's dressed as a taco.
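A quick Python sketch of the costume trick: dress the integer up as a decimal, then check that the value underneath is still whole. The names are illustrative.

```python
n = 5

as_decimal_text = f"{n:.1f}"          # write the integer in decimal notation
as_float = float(n)                   # convert the value to a float
still_whole = as_float.is_integer()   # does the float hold a whole value?
round_trip = int(as_float) == n       # taking the costume off again

print(as_decimal_text)  # 5.0
print(still_whole)      # True
print(round_trip)       # True
```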

The Number Line Reality

If you visualize a number line, integers are the big, bold marks. Between 1 and 2, there is an infinite abyss of decimals ($1.1, 1.11, 1.111...$). The integer is the destination. The decimals are the steps in between.

What About Fractions?

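Fractions are the cousins of decimals. Every terminating or repeating decimal can be written as a fraction ($0.5$ is $1/2$), and every fraction can be written as a decimal, though some, like $1/3 = 0.333\ldots$, repeat forever. Integers can also be fractions: $4$ is just $4/1$.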

But we don't call $4/1$ a "fractional number" in common speech because it simplifies down to a whole. The same logic applies to decimals. We don't call $7.000$ a "decimal number" in a pure math context; we just call it an integer in a fancy suit.
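Python's standard `fractions` module happens to follow the same logic, so it makes a handy sketch of the idea. The variable names are illustrative.

```python
from fractions import Fraction

half = Fraction("0.5")   # a decimal rewritten as a fraction
four = Fraction(4, 1)    # an integer dressed up as a fraction

print(half)                          # 1/2
print(four)                          # 4
print(four == 4, four.denominator)   # True 1
```

Notice that `Fraction(4, 1)` prints as plain `4`. Even the library doesn't bother calling it a fraction once the denominator is 1.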

Why This Matters in the Real World

If you’re balancing a checkbook, you’re dealing with decimals because money is divisible. If you’re counting people in a room, you’re dealing with integers. You can't have $12.4$ people. If you do, someone has had a very bad day.

Precision matters. In engineering, carelessly converting between whole-number and fractional representations can introduce rounding errors. These errors have contributed to real-world disasters.

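Take the Patriot missile failure in 1991. The system counted time in tenths of a second as an integer, then multiplied that count by a 24-bit binary approximation of 1/10. Because 0.1 has no exact binary representation, a tiny error crept in with every tick, and after roughly 100 hours of continuous operation it had grown to about a third of a second, enough for the system to lose track of an incoming Scud missile. It wasn't a "math" error in the way we usually think of it, but a failure in how numbers are converted between types.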
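To get a feel for how a tiny conversion error can snowball, here is a back-of-the-envelope sketch in Python. The specifics are assumptions taken from the commonly cited analysis of the incident, not from this article: time counted in tenths of a second, 1/10 chopped to 23 binary places after the point, and about 100 hours of uptime. Treat it as an illustration, not a reconstruction of the actual software.

```python
from fractions import Fraction

FRACTION_BITS = 23   # assumption: binary places kept after the point
UPTIME_HOURS = 100   # assumption: hours of continuous operation

exact_tenth = Fraction(1, 10)

# Chop (truncate, don't round) 1/10 to FRACTION_BITS binary places.
stored_tenth = Fraction(2**FRACTION_BITS // 10, 2**FRACTION_BITS)

error_per_tick = exact_tenth - stored_tenth   # error added every 0.1 s
ticks = UPTIME_HOURS * 60 * 60 * 10           # number of tenths of a second
drift_seconds = error_per_tick * ticks        # accumulated clock drift

print(f"error per tick: {float(error_per_tick):.3e} s")
print(f"drift after {UPTIME_HOURS} h: {float(drift_seconds):.4f} s")  # 0.3433 s
```

Under these assumptions, an error of less than a ten-millionth of a second per tick adds up to about a third of a second. That sounds small until the thing you are tracking is moving at well over a kilometer per second.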

How to Handle the "Decimal" Question

When you're faced with this question, the best way to handle it is to look at the context.

- In a Math Class: Integers do not have decimals. They are whole. Period.

- In Programming: Keep them separate. A whole value written with a decimal point, like 5.0, is a float, and mixing the two types carelessly can lead to bugs.

- In General Science: Adding a decimal to an integer is a way to show how precise your measurement is.

Honestly, the whole debate is mostly about semantics. It's about how we define "having" something. Does a house "have" a basement if the basement is filled with concrete? It’s there, but you can’t use it. An integer "has" a decimal place of zero, but since it doesn't change the value, we usually just ignore it for the sake of our sanity.

Actionable Takeaways for Using Integers Correctly

Stop treating integers and decimals as the same thing just because they look similar on paper. If you're working in Excel, the cell format might hide the decimals on screen, but they are still there affecting your sums. Always check your "cell formatting."
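The hidden-decimal effect is easy to mimic in Python. This sketch is not how Excel works internally; it only imitates the general idea of showing rounded values while summing the real ones. The numbers are made up.

```python
values = [1.4, 1.4, 1.4]

# What a "zero decimal places" display format would show for each cell.
shown = [f"{v:.0f}" for v in values]

# The total is computed from the real values, then formatted the same way.
shown_total = f"{sum(values):.0f}"

# What you would expect if you added up the numbers you can see.
expected_from_screen = sum(int(s) for s in shown)

print(shown)                 # ['1', '1', '1']
print(shown_total)           # 4
print(expected_from_screen)  # 3
```

Three cells that all say 1, and a total that says 4. Nothing is broken; the decimals were just hiding.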

If you are teaching kids, emphasize that integers are the "counting numbers," plus zero and the negatives. Use physical objects. You can't have a "decimal" of a marble.

In coding, convert ("cast") between types explicitly whenever the distinction matters. If you need an integer to behave like a decimal, convert it (e.g., float(my_integer)). Don't leave it to the compiler or interpreter to guess what you mean.
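Here is a short Python sketch of what explicit conversion looks like in practice. `my_integer` comes from the example above; the other names are made up for illustration.

```python
my_integer = 7

as_float = float(my_integer)   # explicit int -> float conversion
true_div = my_integer / 2      # "/" always gives a float in Python 3
floor_div = my_integer // 2    # "//" keeps the result an integer here

truncated = int(3.9)           # int() drops the fractional part, no rounding
rounded = round(3.9)           # round() goes to the nearest integer

print(as_float)             # 7.0
print(true_div, floor_div)  # 3.5 3
print(truncated, rounded)   # 3 4
```

The `int(3.9)` line is the one that bites people: converting to an integer throws the decimal part away rather than rounding it, so say which one you want.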

Understand that "integer" refers to the value, while "decimal" often refers to the notation. You can write an integer in decimal notation, but that doesn't make it a "decimal number" in the eyes of a mathematician.

Keep your data types clean. It saves a lot of headaches later on.