You’re staring at $f(x) = |x|$ on your homework or a coding problem, and you need the slope. Easy, right? It’s just a "V" shape. But the second you try to find the derivative of absolute value, things get weird. Calculus usually loves smooth curves, but this function has that sharp, jagged point at the origin. It’s a "corner." In the world of derivatives, corners are basically roadblocks: the function is perfectly continuous at the origin, but its slope isn't defined there.

Most students think they can just ignore the sharp turn at zero. They can't. If you try to take the derivative at $x = 0$, the math breaks. Literally. One side says the slope is $-1$, and the other side insists it's $1$. Since they can’t agree, the derivative doesn't exist at that exact spot. This isn't just a math quirk; it’s a fundamental rule that governs how we build neural networks and physics simulations today.
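To see the disagreement numerically, compute the difference quotient $\frac{f(x_0 + h) - f(x_0)}{h}$ from each side of the corner. This is a minimal Python sketch; the helper name `one_sided_slopes` and the step size `h` are our own illustrative choices, not a standard API.

```python
def one_sided_slopes(f, x0, h=1e-6):
    """Approximate the left and right slopes of f at x0 with difference quotients."""
    # Step to the left: (f(x0 - h) - f(x0)) / (-h)
    left = (f(x0 - h) - f(x0)) / (-h)
    # Step to the right: (f(x0 + h) - f(x0)) / h
    right = (f(x0 + h) - f(x0)) / h
    return left, right


print(one_sided_slopes(abs, 0.0))               # (-1.0, 1.0): the two sides disagree
print(one_sided_slopes(lambda x: x * x, 0.0))   # both tiny, agreeing on a slope of 0
```

For the smooth parabola $x^2$, both quotients shrink toward $0$ as `h` shrinks, so a derivative exists. For $|x|$ they stay stuck at $-1$ and $1$ no matter how small `h` gets.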

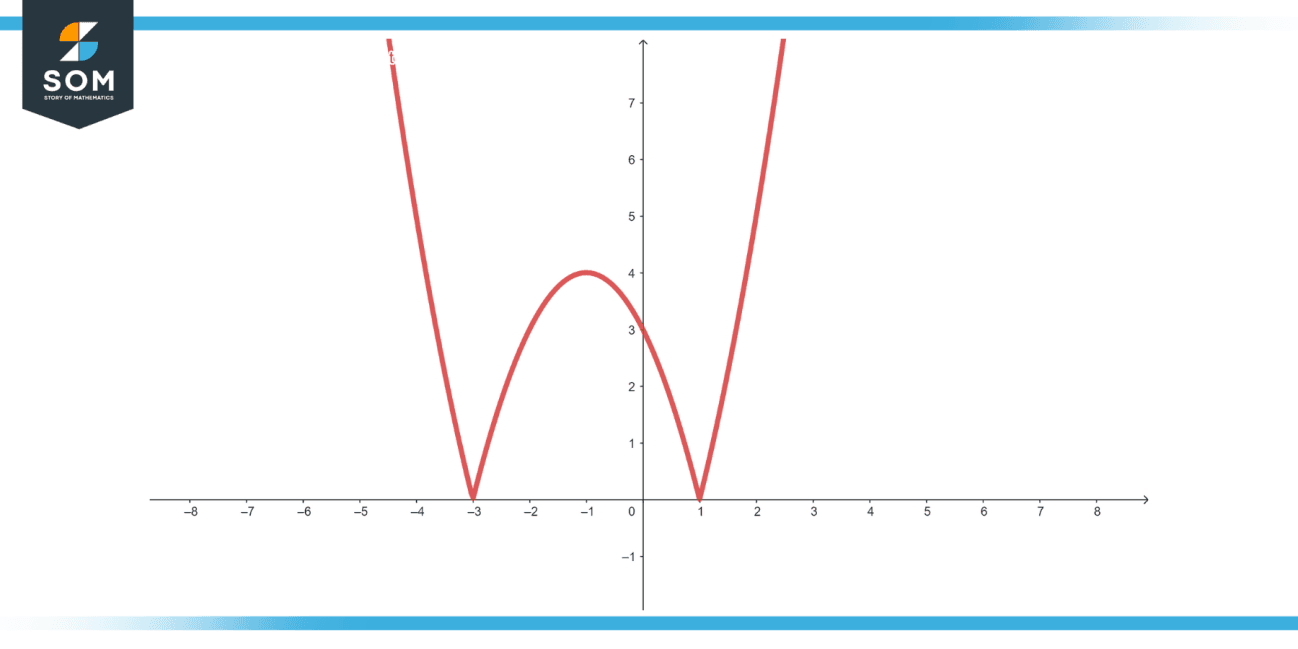

The Piecewise Reality of the Absolute Value

Let's be real: the absolute value function is just two linear functions wearing a trench coat. It’s a bit of a trickster. When $x$ is positive, the function is just $y = x$. When $x$ is negative, it’s $y = -x$. Because of this, the derivative of absolute value has to be handled in pieces. It’s not a single, elegant expression like the derivative of $x^2$ being $2x$.

Think about the slope. If you’re walking down the left side of that "V," you’re headed downhill. That’s a constant slope of $-1$. Once you pass the origin and start climbing the right side, you’re going uphill at a constant slope of $1$. So, the derivative is actually a step function. It jumps from $-1$ to $1$ instantly. Mathematicians write it with the signum function, $\operatorname{sgn}(x)$, which equals $-1$ for negative $x$ and $1$ for positive $x$.


It looks like this:

$$\frac{d}{dx}|x| = \frac{x}{|x|}$$

Or, if you prefer the other way:

$$\frac{d}{dx}|x| = \operatorname{sgn}(x), \quad x \neq 0$$

The $x/|x|$ version is actually pretty clever. If you plug in $5$, you get $5/5 = 1$. If you plug in $-5$, you get $-5/5 = -1$. It works perfectly everywhere except at zero, where you end up trying to divide by zero. And we all know the universe hates that.
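The $x/|x|$ rule translates directly into code. In this sketch (the function name `abs_derivative` is ours), we raise an error at zero rather than return a number, since no single slope is correct there. Be aware that sign functions in libraries usually return 0 at 0 (NumPy's `np.sign` does, for example); that is a convention for $\operatorname{sgn}(0)$, not a derivative.

```python
def abs_derivative(x: float) -> float:
    """Derivative of |x| computed as x / |x|; undefined at x = 0."""
    if x == 0:
        # Left slope is -1, right slope is +1: there is no single answer.
        raise ValueError("d/dx |x| does not exist at x = 0")
    return x / abs(x)


print(abs_derivative(5.0))   # 1.0
print(abs_derivative(-5.0))  # -1.0
```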

Why the Chain Rule Changes Everything

Rarely do you just see $|x|$ in the wild. Usually, it’s something messier, like $|x^2 - 4|$ or $|\sin(x)|$. This is where the derivative of absolute value starts to trip people up. You have to use the chain rule, which basically means you take the derivative of the "outside" (the absolute value part) and multiply it by the derivative of the "inside" (whatever is stuck between the bars).

Suppose you have $f(x) = |g(x)|$. The derivative becomes:

$$f'(x) = \frac{g(x)}{|g(x)|} \cdot g'(x)$$

Let’s try a real example. Imagine you’re tracking the error of a sensor, and you care about the magnitude of that error. You’re looking at $|3x^2 - 6|$. To find the rate of change, you take the inside part, $3x^2 - 6$, and its derivative, $6x$.


The final result is $\frac{3x^2 - 6}{|3x^2 - 6|} \cdot 6x$.

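It looks gnarly. It is gnarly. But it's logical. You're basically taking the direction (the sign of the inner function) and multiplying it by the rate of change of the inner function. Where the inner function is zero, the formula divides by zero. If the inner function crosses zero with a nonzero slope, as $3x^2 - 6$ does at $x = \pm\sqrt{2}$, the graph has a corner there, and you're out of luck. No derivative for you.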
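Here is the chain-rule formula as a small Python function, checked against a central finite difference on the sensor-error example $|3x^2 - 6|$. The names `abs_of_composite_derivative`, `g`, and `dg` are illustrative, and we supply $g'$ by hand rather than computing it symbolically.

```python
def abs_of_composite_derivative(g, dg, x):
    """d/dx |g(x)| = g(x) / |g(x)| * g'(x), valid only where g(x) != 0."""
    gx = g(x)
    if gx == 0:
        raise ValueError("formula undefined where g(x) = 0")
    return gx / abs(gx) * dg(x)


def g(x):
    return 3 * x**2 - 6  # inner function


def dg(x):
    return 6 * x  # its derivative


h = 1e-6
for x in (2.0, 1.0):
    exact = abs_of_composite_derivative(g, dg, x)
    # Central difference of |g| as an independent numerical check.
    approx = (abs(g(x + h)) - abs(g(x - h))) / (2 * h)
    print(x, exact, round(approx, 4))
# prints "2.0 12.0 12.0", then "1.0 -6.0 -6.0"
```

At $x = 1$ the inner function is negative ($-3$), so the sign factor flips the inner slope of $6$ to $-6$.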

Machine Learning and the "Corner" Problem

You might wonder why anyone cares about a broken derivative at zero. Well, if you’re into AI or data science, you’ve heard of ReLU (Rectified Linear Unit). It’s an activation function, $\max(0, x)$, that looks like the right half of an absolute value graph, with the left half flattened to zero.

When engineers train neural networks using backpropagation, they need derivatives to update the weights. If the math hits a point where the derivative doesn't exist, like ReLU's corner at zero, the software has to decide what to do. Usually, it just picks a value (like 0 or 1) and moves on. Any slope between the two one-sided slopes works, because a line through the corner with that slope never rises above the graph. A value chosen this way is called a "subgradient."
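Here is what "just pick a value" looks like in a sketch. The functions below are our own illustrations, not any framework's API: away from the kink they return the ordinary slope, and at the kink they return a caller-chosen value that must lie in the valid subgradient range, $[0, 1]$ for ReLU and $[-1, 1]$ for $|x|$. ReLU can be written as $\frac{x + |x|}{2}$, which is why its kink sits exactly where the absolute value's does.

```python
def relu(x: float) -> float:
    """ReLU(x) = max(0, x), written here as (x + |x|) / 2."""
    return (x + abs(x)) / 2


def relu_subgradient(x: float, at_zero: float = 0.0) -> float:
    """Slope of ReLU; at the kink, any value in [0, 1] is a valid subgradient."""
    if not 0.0 <= at_zero <= 1.0:
        raise ValueError("ReLU subgradients at 0 lie in [0, 1]")
    if x > 0:
        return 1.0
    if x < 0:
        return 0.0
    return at_zero  # a convention, not something the math forces


def abs_subgradient(x: float, at_zero: float = 0.0) -> float:
    """Slope of |x|; at the kink, any value in [-1, 1] is a valid subgradient."""
    if not -1.0 <= at_zero <= 1.0:
        raise ValueError("|x| subgradients at 0 lie in [-1, 1]")
    if x > 0:
        return 1.0
    if x < 0:
        return -1.0
    return at_zero


print(relu_subgradient(0.0), relu_subgradient(0.0, at_zero=1.0))  # 0.0 1.0
print(abs_subgradient(-2.0), abs_subgradient(0.0))                # -1.0 0.0
```

Defaulting to 0 at the kink is a common choice, but it is a choice; the sketch makes that explicit by exposing `at_zero` as a parameter.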

Understanding the derivative of absolute value and its limitations is part of what makes non-smooth functions like these usable in practice. In sparse modeling, such as L1 regularization (the Lasso), the "failure" of the derivative at $x=0$ is actually a feature, not a bug. It can push unimportant weights to exactly zero, which yields smaller models that are cheaper to run.
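In sparse modeling, one standard way optimizers exploit the corner of $|w|$ is soft-thresholding, the proximal step for an L1 penalty $\lambda |w|$. The sketch below shows that step in isolation, applied to hypothetical weights with an arbitrarily chosen $\lambda = 0.25$; it is one technique among several (coordinate descent is another), not necessarily what any particular library does.

```python
def soft_threshold(w: float, lam: float) -> float:
    """Proximal step for the L1 penalty lam * |w|.

    Shrinks w toward zero by lam, and returns exactly 0.0 when |w| <= lam.
    The corner of |w| at zero is what lets small weights land on 0 exactly.
    """
    if abs(w) <= lam:
        return 0.0
    return w - lam if w > 0 else w + lam


weights = [0.75, -0.05, 0.02, -1.5]  # hypothetical weights
print([soft_threshold(w, 0.25) for w in weights])  # [0.5, 0.0, 0.0, -1.25]
```

By contrast, the same kind of step for a squared penalty $\lambda w^2$ rescales $w$ to $\frac{w}{1 + 2\lambda}$, which shrinks weights but never lands on exactly zero unless $w$ already was. In a full proximal-gradient (ISTA-style) solver, this step would follow an ordinary gradient step on the loss; that loop is omitted here.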

Common Traps and How to Avoid Them

- Forgetting the domain: You cannot say the derivative is $1$ or $-1$ without specifying where $x$ is. Always check if your $x$ value makes the inside of the bars zero.

- Power rule mistakes: Some people try to treat $|x|$ like $(x^2)^{1/2}$ and get lost in the algebra. While $|x| = \sqrt{x^2}$ is a valid identity, differentiating it is often more work than just using the $x/|x|$ shortcut.

- The "Zero" Myth: Many students think the derivative at zero is $0$ because the graph "turns" there. Nope. It’s undefined. A derivative only exists if the slope is the same coming from both directions. Here, it’s not.
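For reference, here is the power-rule route from the second trap, worked out. It takes a few more steps than the $x/|x|$ shortcut, but it lands on the same formula, and the factor $(x^2)^{-1/2}$ shows exactly why $x = 0$ has to be excluded. The same steps work for a general inner function $f(x)$:

$$\frac{d}{dx}|x| = \frac{d}{dx}\left(x^2\right)^{1/2} = \frac{1}{2}\left(x^2\right)^{-1/2} \cdot 2x = \frac{x}{\sqrt{x^2}} = \frac{x}{|x|}, \quad x \neq 0$$

$$\frac{d}{dx}|f(x)| = \frac{d}{dx}\left(f(x)^2\right)^{1/2} = \frac{1}{2}\left(f(x)^2\right)^{-1/2} \cdot 2f(x)f'(x) = \frac{f(x)}{|f(x)|} \cdot f'(x), \quad f(x) \neq 0$$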

Real-World Applications: Beyond the Classroom

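Engineers use the derivative of absolute value when working with "Total Variation" in image processing. Total variation adds up the absolute differences between neighboring pixels, and minimizing it helps denoise and deblur photos. The reason is how absolute values score change: a single clean edge costs no more than a gradual ramp of the same height, while random grain adds many small wiggles that all count. The derivative of each term is just a sign, $\pm 1$, so the optimization nudges noisy pixels toward their neighbors without the tendency to smear edges that squared differences have. That is what lets a computer distinguish actual edges from random grain.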
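A one-dimensional sketch makes the edge-versus-grain point concrete. The functions and test signals below are our own illustration (real TV denoising works on 2D images and solves a full optimization problem): a sharp step and a smeared ramp of the same height have the same total variation, while a noise spike raises it. Squared differences, by contrast, score the blurry ramp lower than the sharp step, which is why they tend to smear edges.

```python
def total_variation(signal):
    """1D total variation: the sum of |x[i+1] - x[i]| over neighboring samples."""
    return sum(abs(b - a) for a, b in zip(signal, signal[1:]))


def squared_variation(signal):
    """The squared-difference alternative, for comparison."""
    return sum((b - a) ** 2 for a, b in zip(signal, signal[1:]))


def tv_subgradient(signal):
    """A subgradient of total_variation with respect to each sample.

    Each term |x[i+1] - x[i]| contributes sgn(d) to x[i+1] and -sgn(d) to x[i],
    where d = x[i+1] - x[i]; we use 0 when d is exactly 0.
    """
    grad = [0.0] * len(signal)
    for i in range(len(signal) - 1):
        d = signal[i + 1] - signal[i]
        s = (d > 0) - (d < 0)  # sign of d as -1, 0, or 1
        grad[i] -= s
        grad[i + 1] += s
    return grad


step = [0.0, 0.0, 1.0, 1.0, 1.0]    # sharp edge
ramp = [0.0, 0.25, 0.5, 0.75, 1.0]  # the same edge, smeared out
noisy = [0.0, 0.25, 0.0, 1.0, 1.0]  # sharp edge plus a noise spike

print(total_variation(step), total_variation(ramp), total_variation(noisy))  # 1.0 1.0 1.5
print(squared_variation(step), squared_variation(ramp))                      # 1.0 0.25
print(tv_subgradient(noisy))  # [-1.0, 2.0, -2.0, 1.0, 0.0]
```

In the subgradient, the spike at index 1 gets a positive entry, so a gradient-descent step (subtracting the subgradient) pulls it back down, while its neighbor at index 2 is pushed up.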

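In finance, traders use "Mean Absolute Deviation," the average absolute difference between each price and the mean price, to measure how much a stock price is swinging. It’s less sensitive to extreme outliers than squaring the differences (like you do in standard deviation), making it a "robust" statistic. The derivative shows why: each absolute term changes with slope $\pm 1$ no matter how far the point sits from the center, while a squared term's slope grows in proportion to its distance, so one extreme price can dominate.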
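A short Python sketch shows both halves of that claim. The prices are made up for illustration, and `pull_abs` / `pull_squared` are hypothetical helpers that compute the derivative, with respect to a center $c$, of the summed absolute and squared deviations. `statistics.pstdev` is the standard library's population standard deviation.

```python
import statistics


def mean_absolute_deviation(xs):
    """Average of |x - mean| over the data."""
    m = statistics.fmean(xs)
    return statistics.fmean(abs(x - m) for x in xs)


def pull_abs(xs, c):
    """d/dc of sum |x - c|: each point contributes -sgn(x - c), never more than 1."""
    return -sum((x > c) - (x < c) for x in xs)


def pull_squared(xs, c):
    """d/dc of sum (x - c)**2: each point contributes -2(x - c), growing with distance."""
    return -sum(2 * (x - c) for x in xs)


calm = [9, 11, 9, 11]       # hypothetical prices
spiky = [9, 11, 9, 11, 30]  # the same prices plus one outlier

print(mean_absolute_deviation(calm), statistics.pstdev(calm))    # both 1.0
print(mean_absolute_deviation(spiky), statistics.pstdev(spiky))  # 6.4, and about 8.05
print(pull_abs(spiky, 10), pull_squared(spiky, 10))              # -1 -40
```

At $c = 10$ the four ordinary prices cancel out in both sums, so the outlier at 30 supplies the entire pull: just 1 unit through the absolute deviations, but 40 units through the squared ones.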


Actionable Next Steps for Mastery

- Sketch it out: Draw the function $f(x) = |x-3|$. Notice the corner is now at $x=3$. This means the derivative is undefined at $3$, not $0$. Always find the "root" of the inside expression first.

- Practice the Signum: Replace $|x|'$ with $\operatorname{sgn}(x)$ in your head. It simplifies the mental load. If $x$ is positive, the slope is $1$. If $x$ is negative, the slope is $-1$.

- Use the Square Root Identity: If you get stuck on a complex chain rule problem, rewrite $|f(x)|$ as $\sqrt{(f(x))^2}$. Then use the standard power rule. It’s a reliable backup method that yields the same $\frac{f(x)}{|f(x)|} \cdot f'(x)$ result, still valid only where $f(x) \neq 0$.

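- Check for Differentiability: Before you start calculating, ask yourself: "Does the expression inside the absolute value bars equal zero at the point I'm investigating?" If yes, the $\frac{f(x)}{|f(x)|} \cdot f'(x)$ formula can't be used there, so check the inner derivative instead. If it's nonzero, the graph has a corner and the derivative does not exist. If it's zero too, as with $|x^3|$ at $x = 0$, the derivative exists and equals $0$.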
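The differentiability check can be turned into a small rule-based sketch. It assumes the inner function $g$ is differentiable at the point and that you supply $g'$ yourself; the function name `abs_derivative_at` is ours. Where $g(x_0) = 0$, the right- and left-hand slopes of $|g|$ work out to $|g'(x_0)|$ and $-|g'(x_0)|$, so they agree only when $g'(x_0) = 0$.

```python
def abs_derivative_at(g, dg, x0):
    """Derivative of |g(x)| at x0, or None where it does not exist.

    Assumes g is differentiable at x0 and dg is its derivative.
    """
    gx, dgx = g(x0), dg(x0)
    if gx != 0:
        return gx / abs(gx) * dgx  # usual formula: g / |g| * g'
    if dgx != 0:
        return None  # g crosses zero with nonzero slope: a corner
    return 0.0  # g touches zero with zero slope: the one-sided slopes agree


print(abs_derivative_at(lambda x: x - 3, lambda x: 1, 3))        # None (corner of |x - 3|)
print(abs_derivative_at(lambda x: x**3, lambda x: 3 * x**2, 0))  # 0.0 (|x^3| is smooth at 0)
print(abs_derivative_at(lambda x: x - 3, lambda x: 1, 5))        # 1.0
```

Because it tests for an exact zero, the sketch works with exact inputs like the ones above. A root such as $x = \sqrt{2}$ for $3x^2 - 6$ will not evaluate to exactly zero in floating point, so in practice you would find the roots of the inner expression first, as the "Sketch it out" step suggests.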

Mastering this isn't about memorizing a formula. It's about recognizing that math has limits—literally. When functions get sharp, calculus gets picky. Understanding that "pickiness" is what separates a student from an expert.