Everyone is talking about DeepSeek. Honestly, it’s about time. For the longest time, the conversation around AI felt like a closed loop between San Francisco and Seattle. You had OpenAI, Google, and Anthropic basically setting the rules of the game. Then, seemingly out of nowhere, this lab from Hangzhou starts dropping weights for models that aren't just "good for being free," but are actually beating GPT-4 in specific coding and math benchmarks.

DeepSeek’s open source code isn’t just a repository on GitHub; it signals a massive shift in how we think about the "moat" in artificial intelligence.

Let’s be real. Most "open source" AI is actually "open weights." There’s a difference. You get the final product, but you don’t get the recipe, the data cleaning scripts, or the specific hyperparameter tuning that made the model smart. DeepSeek is doing something slightly more transparent. They’ve been releasing architectural details that make researchers at Meta and Microsoft squint at their monitors.

They’re doing more with less. Much less.

What is DeepSeek open source code actually doing differently?

If you’ve spent any time looking at DeepSeek-V3 or the R1 series, you’ve noticed the term Multi-head Latent Attention (MLA). It sounds like academic jargon. It mostly is. But the practical reality is that it lets the model handle long contexts with far less memory than the standard multi-head attention most transformers use.

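Standard models are heavy. They require massive amounts of VRAM to run because the "Key-Value cache" grows linearly with the length of the conversation. DeepSeek figured out a way to compress that cache: instead of storing full keys and values for every attention head, MLA stores one small latent vector per token and reconstructs what it needs from that. This means you can serve a much more powerful model on cheaper hardware.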
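To put rough numbers on that, here is a back-of-the-envelope sketch. Every dimension in it (layer count, head count, latent size) is an illustrative assumption, not a figure from DeepSeek, and the baseline is plain multi-head attention with no other cache-saving tricks.

```python
def kv_cache_bytes(tokens, layers, values_per_token_per_layer, bytes_per_value=2):
    """Cache size for one sequence: tokens x layers x cached values x bytes each."""
    return tokens * layers * values_per_token_per_layer * bytes_per_value


# Illustrative dimensions only (assumptions, not published figures).
LAYERS = 60
HEADS = 128
HEAD_DIM = 128
LATENT_DIM = 512   # hypothetical size of the compressed per-token vector
ROPE_DIM = 64      # hypothetical small positional component cached with it

# Standard multi-head attention caches a full key and a full value per head.
mha_values = 2 * HEADS * HEAD_DIM
# A latent-attention scheme caches one compressed vector per token instead.
latent_values = LATENT_DIM + ROPE_DIM


def gib(num_bytes):
    return num_bytes / 2**30


for tokens in (4_096, 32_768, 131_072):
    mha = kv_cache_bytes(tokens, LAYERS, mha_values)
    latent = kv_cache_bytes(tokens, LAYERS, latent_values)
    print(f"{tokens:>7} tokens: standard {gib(mha):7.1f} GiB, "
          f"latent {gib(latent):6.2f} GiB, ratio {mha / latent:.0f}x")
```

Both caches still grow linearly with context length; the compression only shrinks the constant. Many current models already trim the cache with grouped-query attention, so treat the ratio here as an upper bound against the plainest baseline.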

They also introduced something called DeepSeekMoE. Mixture-of-Experts (MoE) is the approach widely reported, though never confirmed by OpenAI, to be behind GPT-4: instead of one giant brain, you have a bunch of specialized "sub-brains," and only a few of them fire for any given token. DeepSeek’s version is more granular. It splits the work between "shared experts," which see every token, and many small "routed experts," which are picked per token. Think of a kitchen where a couple of generalist cooks touch every dish while a long bench of specialists only steps in for the dishes that need them. It’s faster. It’s cheaper. And it’s a big part of why DeepSeek’s repositories get so much attention.
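Here is a toy sketch of the shared-plus-routed idea in plain Python. It is a simplification under stated assumptions: the "experts" are just scaling functions (real ones are feed-forward networks), the router is a single dot product followed by a softmax, and all the names (`moe_layer`, `make_expert`) are made up for illustration rather than taken from DeepSeek's code.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Toy expert that scales every feature. A real expert is a small neural network."""
    return lambda x: [scale * v for v in x]


def moe_layer(x, shared_experts, routed_experts, router_weights, top_k=2):
    # Router: one score per routed expert (dot product of x with that expert's row).
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    probs = softmax(scores)
    # Keep only the top_k routed experts for this token.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]

    out = [0.0] * len(x)
    # Shared experts run for every token, with no routing decision.
    for expert in shared_experts:
        out = [o + y for o, y in zip(out, expert(x))]
    # Chosen routed experts contribute in proportion to their gate probability.
    for i in chosen:
        out = [o + probs[i] * y for o, y in zip(out, routed_experts[i](x))]
    return out, chosen


shared = [make_expert(1.0)]
routed = [make_expert(s) for s in (0.5, 1.5, 2.0, 3.0)]
router = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]

output, chosen = moe_layer([2.0, 1.0], shared, routed, router, top_k=2)
print("routed experts used:", chosen)
print("output:", output)
```

The point of the design is in the two loops: only `top_k` of the routed experts do any work for a given token, so compute per token stays small even as the total number of experts (and parameters) grows.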

It’s about the money, too.

Training a frontier model can cost tens or even hundreds of millions of dollars. DeepSeek-V3 was reportedly trained for a small fraction of that. We’re talking about something like an order of magnitude difference in efficiency. When you look at their GitHub, you aren’t just seeing code; you’re seeing a blueprint for how to build world-class AI without needing a sovereign wealth fund behind you.
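For reference, the headline number comes from simple arithmetic in the DeepSeek-V3 technical report: about 2.788 million H800 GPU-hours for the full training run, priced at an assumed rental rate of $2 per GPU-hour. The snippet below just reproduces that multiplication; the function name is mine.

```python
GPU_HOURS = 2_788_000        # H800 GPU-hours reported for the full training run
PRICE_PER_GPU_HOUR = 2.00    # rental price assumed in the report, in USD


def training_cost(gpu_hours, price_per_hour):
    """Compute cost as GPU-hours times an hourly rental price."""
    return gpu_hours * price_per_hour


cost = training_cost(GPU_HOURS, PRICE_PER_GPU_HOUR)
print(f"${cost:,.0f}")
```

The report is explicit that this covers only the official training run. It leaves out earlier research, ablation experiments, data work, and salaries, so it is not the all-in cost of building the model.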

The R1 revolution and the "Reasoning" gap

We have to talk about DeepSeek-R1. This is their answer to OpenAI’s o1 "reasoning" models. For a while, people thought OpenAI had found a secret sauce with "Chain of Thought" processing that couldn't be replicated. Then R1 arrived.

The most fascinating part? They used Reinforcement Learning (RL) to teach the model how to think. But they didn’t just throw human feedback at it. They let the model "discover" reasoning paths on its own. The training code itself isn’t public, but the R1 technical report describes the setup: simple rule-based rewards for getting the answer right and for putting the reasoning in the expected format. Under that pressure, the model learned to spend more time "thinking" before answering. The result is these long, rambling internal monologues where the model corrects itself mid-stream.

"Wait, that's not right," the model might say to itself. "Let me try that derivative again."

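To make the reward idea concrete, here is a minimal sketch based on what the R1 report describes: a format check, an accuracy check, and scoring each sampled answer relative to the others drawn for the same prompt. The exact-match comparison, the function names, and the sample strings are all simplifying assumptions; this is not DeepSeek's training code, and it leaves out the policy update itself.

```python
import re
import statistics

# Reasoning inside <think> tags, followed by a final answer.
THINK_PATTERN = re.compile(r"^<think>(.+?)</think>\s*(.+)$", re.DOTALL)


def format_reward(completion):
    """1.0 if the reasoning is wrapped in <think> tags and an answer follows."""
    return 1.0 if THINK_PATTERN.match(completion.strip()) else 0.0


def accuracy_reward(completion, reference):
    """1.0 if the text after </think> matches the reference answer exactly."""
    match = THINK_PATTERN.match(completion.strip())
    if not match:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def group_advantages(rewards):
    """Score each sample relative to the other samples for the same prompt."""
    mean = statistics.mean(rewards)
    spread = statistics.pstdev(rewards)
    if spread == 0:
        return [0.0 for _ in rewards]
    return [(r - mean) / spread for r in rewards]


samples = [
    "<think>d/dx x^2 = x. Wait, that's not right. It is 2x.</think> 2x",
    "<think>d/dx x^2 = x</think> x",
    "2x",
]
rewards = [format_reward(s) + accuracy_reward(s, "2x") for s in samples]
print(rewards)
print(group_advantages(rewards))
```

Nothing here tells the model to think longer. The sample that catches its own mistake simply ends up with the highest relative score, and samples like it get reinforced.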
The weights are open. You can download the 671B parameter version, or you can get the "distilled" versions that are small enough to run on a decent consumer GPU. That’s a big deal. Usually, small models are kind of dumb. But DeepSeek fine-tuned smaller open models (from the Llama and Qwen families) on reasoning traces generated by the giant R1 model, so these tiny models are punching way above their weight class.
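Whether a given model fits on your hardware is mostly arithmetic: parameter count times bytes per weight. Here is a rough sketch that counts only the weights; it ignores the KV cache, activations, and runtime overhead, so real usage will be higher. The model labels are just examples of sizes.

```python
def weight_memory_gib(params_billions, bits_per_weight):
    """Memory for the weights alone, ignoring KV cache and runtime overhead."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


models = [("distilled 14B", 14), ("distilled 32B", 32), ("distilled 70B", 70), ("full 671B", 671)]

for name, size in models:
    row = ", ".join(
        f"{bits}-bit {weight_memory_gib(size, bits):7.1f} GiB" for bits in (16, 8, 4)
    )
    print(f"{name:>14}: {row}")
```

This is why quantization matters so much for local use: dropping from 16-bit to 4-bit weights cuts the footprint by four, which is the difference between needing a server and fitting on one or two consumer cards.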

Why Silicon Valley is paying attention

It’s not just about the code. It’s about the benchmark scores. In late 2024 and early 2025, DeepSeek models started landing at or near the top of coding and math benchmarks like LiveCodeBench and MATH-500.

People used to dismiss Chinese models as being purely for Chinese language tasks. That’s over. DeepSeek is a coding monster. It handles Python, C++, and Rust with a level of precision that makes it a legitimate alternative to GitHub Copilot or Claude 3.5 Sonnet.

There’s a tension here, obviously.

Geopolitics is the elephant in the room. There are export controls on high-end chips like the NVIDIA H100s and B200s. You’d think that would slow a Chinese lab down. Instead, it forced them to become incredibly efficient at the software level. Because they couldn't just throw 100,000 more GPUs at the problem, they had to write better code. That’s exactly what ended up in the DeepSeek open source code repositories.

The "Censorship" question and technical limitations

Look, we have to be honest. These models are developed in China. That means they have guardrails that feel different from Western models. If you ask about specific political events or sensitive topics, the model might give you a canned response or refuse to answer.

For a developer building a weather app or a math tutor, that doesn't matter. For someone trying to use AI as a primary source for political history, it’s a massive limitation.

There is also the issue of support. OpenAI has a massive enterprise wing. DeepSeek is a research lab. If your production server goes down at 3:00 AM, you aren't calling Hangzhou for tech support. You’re on your own, digging through the DeepSeek open source code to figure out why the inference engine is bottlenecking.

How to actually use DeepSeek right now

You have three main paths if you want to get your hands dirty with this.

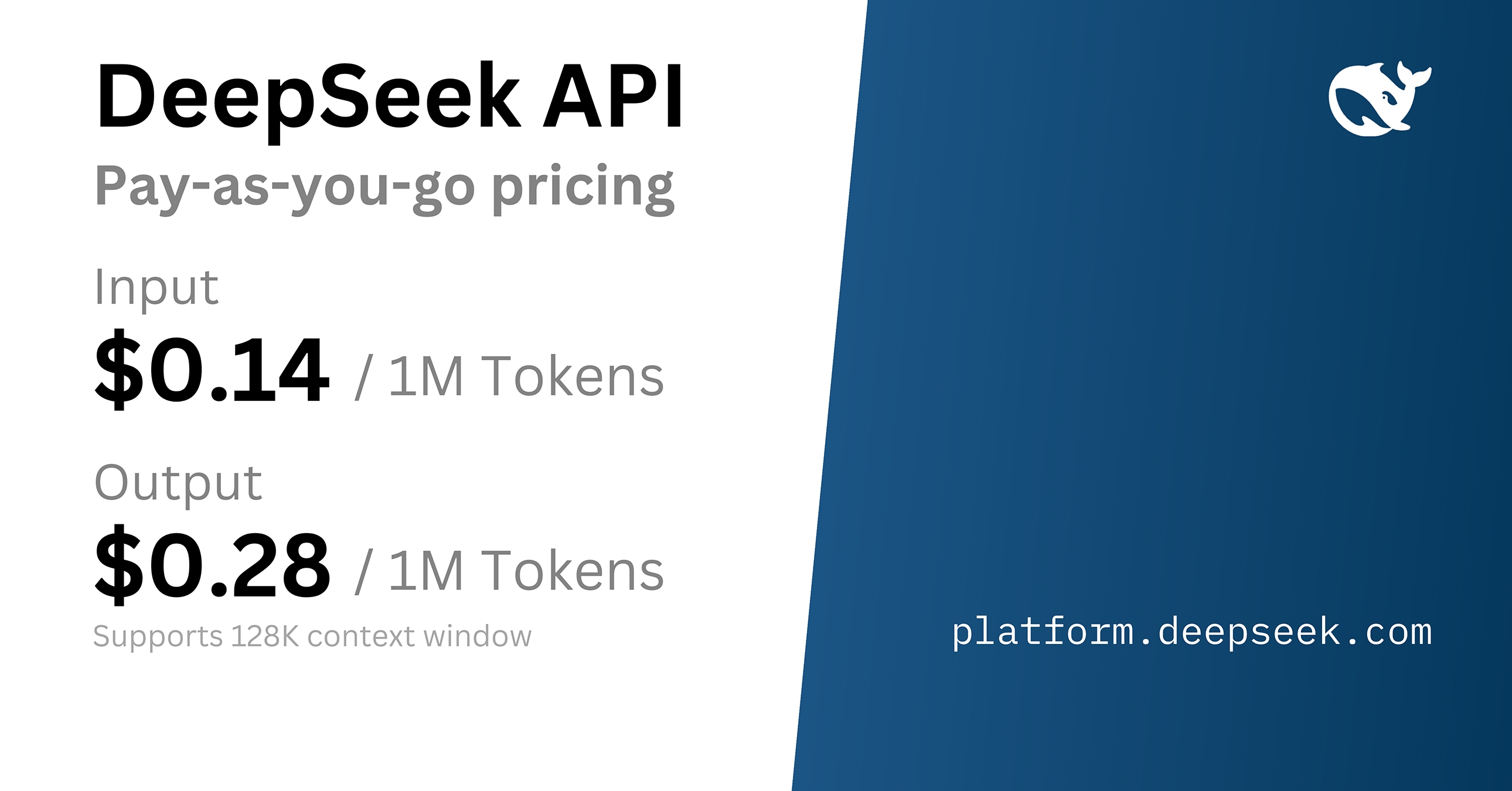

- The API: This is the easiest way. It’s dirt cheap. Like, "rounding error" cheap. They’ve priced it so low that it’s almost disruptive to the entire industry.

- Local Hosting: If you have a Mac with a lot of Unified Memory (M2/M3 Max/Ultra) or a rig with several 3090/4090s, you can run the distilled versions via Ollama or LM Studio. It takes about two minutes to set up.

- The GitHub: Go to the official deepseek-ai organization on GitHub. You’ll find the DeepSeek-V3 repository with its reference inference code, the DeepSeek-R1 repository with the technical report and model links, and the FlashMLA kernel code.
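For the API path, a request looks roughly like the sketch below. It uses only the Python standard library and assumes the endpoint follows the common OpenAI-style chat format. The URL, the model name, and the environment variable name are assumptions on my part, so check them against DeepSeek's current API documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumptions: verify the endpoint and model name in DeepSeek's API docs.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-reasoner"


def build_request(prompt, api_key, model=MODEL, url=API_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt, api_key):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        print(ask("What is the derivative of x**2?", key))
```

Because the request shape is the generic chat format, the same `build_request` can usually be pointed at a locally hosted model by changing `url` and `model`, provided your local server exposes a compatible endpoint.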

If you’re a developer, the FlashMLA repo is where the real magic is. It’s an optimized CUDA kernel for MLA decoding, written for NVIDIA Hopper GPUs. It’s highly technical, but kernels like this are a big reason their inference is so snappy.

What most people get wrong about "Chinese AI"

There’s this lazy narrative that everything coming out of China is just a copy of Western tech. DeepSeek proves that’s dead wrong.

Their Multi-head Latent Attention (MLA) is an original architectural contribution. They didn’t copy that from a Google paper. In fact, Western researchers now routinely cite DeepSeek’s papers, and the lab has a strong claim to leading in efficiency research.

Also, don’t assume "open source" means "charity." DeepSeek is backed by High-Flyer, a large quantitative hedge fund that had already built up serious GPU infrastructure for its own trading and data analysis. Releasing the models to the public looks like a strategic move to build an ecosystem around their architecture. It’s about influence.

The future of the DeepSeek ecosystem

We are moving toward a world where the gap between "Proprietary" and "Open" is shrinking.

A year ago, if you wanted the best reasoning model, you paid $20 a month to OpenAI. Today, you can download DeepSeek-R1-Distill-Llama-70B and get a large share of that performance on many reasoning tasks for free, running on your own hardware.

That changes the economics of software. It means startups don't have to burn all their VC money on API credits. They can fine-tune a DeepSeek model for a specific niche—say, legal document review or medical coding—and own the weights forever.

The DeepSeek open source code represents a democratization of "high-end" intelligence. It’s not just a tool; it’s a signal that the era of AI secrecy is under fire.

Actionable steps for developers and tech leads

Stop treating DeepSeek as a curiosity. It’s a production-ready asset.

First, go to their GitHub and look at the inference directory in the DeepSeek-V3 repository. If you’re running large-scale LLM deployments, the optimizations they’ve made for MoE architectures are worth benchmarking against what you’re currently using.

Second, test the R1 distilled models. Don't just jump to the biggest 671B model. The 14B and 32B versions are shockingly good at logic. They are small enough to be fast but smart enough to handle complex JSON extraction or multi-step reasoning.
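One practical wrinkle when you test these models on JSON extraction: the open R1-style models emit their reasoning inside `<think>` tags before the final answer, so you need to strip that part before parsing. Here is a small sketch; the helper names and the sample reply are made up for illustration.

```python
import json
import re


def split_reasoning(text):
    """Return (reasoning, answer). Reasoning is '' when no <think> block is present."""
    match = re.search(r"<think>(.*?)</think>", text, re.DOTALL)
    if not match:
        return "", text.strip()
    return match.group(1).strip(), text[match.end():].strip()


def extract_json(text):
    """Parse the JSON object spanning the first '{' to the last '}' of the answer."""
    _, answer = split_reasoning(text)
    start, end = answer.find("{"), answer.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object in model reply")
    return json.loads(answer[start:end + 1])


reply = '<think>The invoice total is 40 + 2 = 42.</think>\n{"total": 42, "currency": "EUR"}'
reasoning, answer = split_reasoning(reply)
print("reasoning:", reasoning)
print("parsed:", extract_json(reply))
```

Keeping the reasoning around (instead of discarding it) is handy during evaluation: when a 14B model gets an extraction wrong, the monologue usually tells you where it went off the rails.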

Third, keep an eye on their "DeepSeek-V3" repo and the technical report that goes with it. Between the README, the reference inference code, and the paper, it’s basically a free masterclass in modern LLM engineering.

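Finally, keep an eye on the licenses. DeepSeek is generally very permissive: R1 is released under the MIT license, while some other releases use a custom model license. The distilled models are built on Llama and Qwen bases that carry their own terms, so you always want to check the fine print for commercial use if you’re at a massive corporation.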

The world of AI just got a lot bigger, and a lot more interesting. The monopoly is over. Whether you’re a hobbyist or a CTO, ignoring what’s happening in Hangzhou is a mistake you can't afford to make.

Key takeaways to implement immediately:

- Route your generic "reasoning" tasks to DeepSeek-R1 and measure the cost-to-performance ratio on your own workload; the API savings can be substantial.

- Study FlashMLA if you are building custom inference kernels and need to reduce VRAM pressure; note that it targets NVIDIA Hopper GPUs.

- Use the "distilled" versions for edge computing or local-first applications where privacy is a hard requirement.
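To run the cost comparison from the first takeaway on your own numbers, a few lines of arithmetic are enough. The prices and token volumes below are placeholders I made up, not anyone's actual rates; plug in the current price sheets and your real traffic.

```python
def monthly_cost(input_tokens, output_tokens, price_in_per_million, price_out_per_million):
    """Monthly API bill in USD, given token volumes and per-million-token prices."""
    return (input_tokens * price_in_per_million
            + output_tokens * price_out_per_million) / 1_000_000


def savings_fraction(current, candidate):
    """Fraction of the current bill saved by switching to the candidate."""
    return 1 - candidate / current


# Placeholder prices (USD per million tokens) and volumes; replace with real figures.
current = monthly_cost(200_000_000, 50_000_000,
                       price_in_per_million=10.0, price_out_per_million=30.0)
# Reasoning models tend to emit more output tokens, so budget for a higher output volume.
candidate = monthly_cost(200_000_000, 80_000_000,
                         price_in_per_million=1.0, price_out_per_million=4.0)

print(f"current ${current:,.0f}, candidate ${candidate:,.0f}, "
      f"saving {savings_fraction(current, candidate):.0%}")
```

Cost is only half of the ratio. Pair this with a small evaluation set from your own workload so you know what quality you are getting for the lower bill.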

The tech is here. The code is open. It's just a matter of who uses it first.