If you think the GDPR is a finished piece of paper sitting in a dusty binder in Brussels, you’re missing the boat. Honestly, the rules are shifting right under our feet. Most of us spent 2018 panicking about cookie banners, but today’s GDPR and data protection news looks nothing like those early days of frantic compliance. We are seeing a massive pivot where the European Commission is trying to "simplify" things while simultaneously slapping billion-euro fines on companies that can’t keep their AI in check. It’s a mess. A very expensive, legally dense mess.

The biggest shocker? As of January 1, 2026, we’ve entered a world where the proposed "Digital Omnibus" is starting to rewrite the script. The EU is basically admitting that the original GDPR was a bit of a blunt instrument for the AI age. The proposal would let companies use personal data to train AI models under "legitimate interest" instead of begging for consent every five seconds. It sounds like a win for tech companies, but it’s really just a different kind of trap.

The Massive Shift in Today’s GDPR and Data Protection News

Let’s talk about what’s actually happening on the ground. Right now, the big talk in the privacy world is about "Sovereign Clouds." Just this week, Amazon (AWS) went live with its European Sovereign Cloud in Germany. Why? Because the GDPR and the constant bickering over US-EU data transfers have made businesses terrified of their data being snooped on by foreign governments. Amazon is dropping over €7.8 billion to build a "separate" infrastructure. That’s not just a technical update; it’s a political statement.

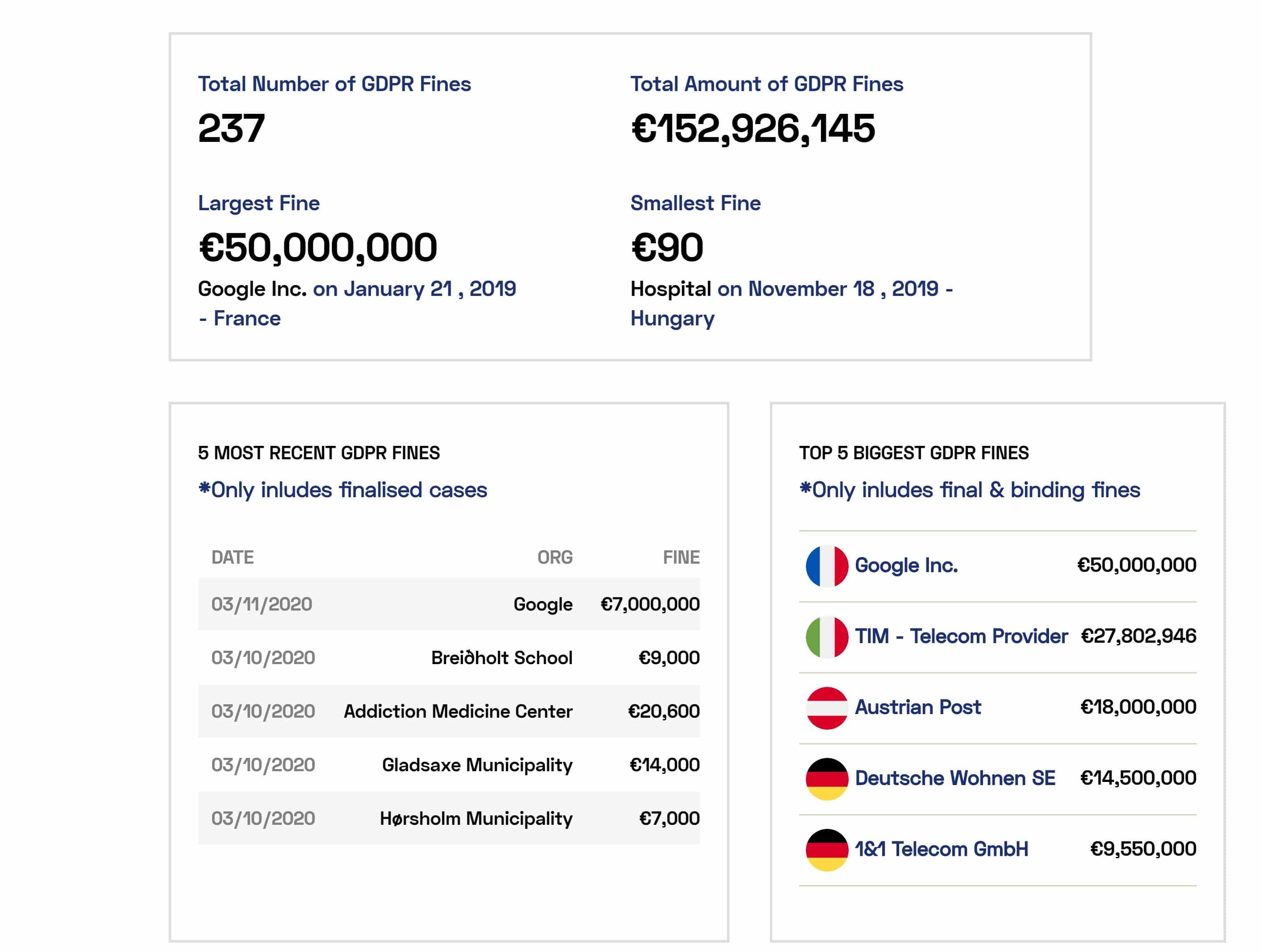

Then you've got the fines. If you haven't been keeping track, the numbers are getting stupid.

- TikTok just got hit with a €530 million fine.

- Meta is still paying off billions.

- Even Google got tagged for €200 million recently.

But the real news isn't the total amount. It's who they are coming for. Regulators are moving past the "Big Tech" obsession and starting to look at mid-sized companies and startups. The Spanish and Norwegian authorities are currently breathing down TikTok’s neck specifically about data going to China, and that’s setting a precedent for every other app with an international footprint.

Is Pseudonymization Still a Thing?

This is where it gets kinda weird. There was a huge case involving the Single Resolution Board (SRB) and the European Data Protection Supervisor that was supposed to settle once and for all when "masked" data counts as "personal data." Then, on January 16, 2026, the case was withdrawn from the EU court without a final ruling.

So, we are back in a gray area. If you pseudonymize data but someone could theoretically re-identify it, is it GDPR protected? Usually, yes. But the new 2026 proposals want to relax this. They want to say that if you can't re-identify it, it's not personal data for you, even if someone else could do it. This would be a massive relief for researchers, but privacy advocates are already calling it a "gaping hole" in the law.
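To make the gray area concrete, here is a minimal sketch of keyed pseudonymization using Python's standard `hmac` module. The key, the sample records, and the helper names are all hypothetical. It only illustrates the idea in dispute: whoever holds the key can link a token back to a person, while a recipient without the key sees only an opaque string.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key, assumed to be held only by the data controller.
controller_key = b"keep-this-secret-and-separate"

records = [
    {"email": "ana@example.com", "score": 71},
    {"email": "ben@example.com", "score": 64},
]

# What a researcher would receive: a token instead of the raw email.
shared = [
    {"token": pseudonymize(r["email"], controller_key), "score": r["score"]}
    for r in records
]


def controller_can_link(email: str, token: str) -> bool:
    """The key holder can re-identify by recomputing the token."""
    return hmac.compare_digest(pseudonymize(email, controller_key), token)
```

Whether the `shared` list counts as personal data in the researcher's hands is exactly the question the proposals are trying to answer. The code can't settle that; it just shows why the answer may differ depending on who holds the key.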

Why the AI Act and GDPR are Crashing Into Each Other

If you’re running a business, you probably heard that the EU AI Act is the new big bad. But you can’t separate it from the GDPR. By August 2026, the rules for high-risk AI systems will be fully applicable. That means if your AI uses personal data to decide who gets a loan or who gets a job interview, you are on the hook under both laws at once.

Regulators like the CNIL in France are already saying they won't wait for 2027 to start asking questions. They are using the GDPR’s existing "Automated Decision Making" rules (Article 22) to audit AI models right now. They don’t need the AI Act to fine you; they’ve already got the teeth to do it.

The Death of the "Cure Period"

Over in the US, things are getting just as spicy, and it affects anyone doing business globally. States like Indiana, Kentucky, and Rhode Island just had their privacy laws go live on January 1, 2026. Most importantly, the "cure period"—that grace period where a regulator tells you to fix a mistake before they fine you—is dying.

In Oregon and several other states, that safety net is gone as of this year. If you mess up, you pay. No warnings. No "oops, let me fix that." Just a bill.

What You Should Actually Do Right Now

Look, you don't need a 500-page manual. You need to fix the basics because that’s where the fines are happening. It’s rarely the complex stuff; it’s the lazy stuff.

1. Audit your "Shadow AI"

Your employees are likely using ChatGPT or Claude to process customer data. If they are, you are probably violating the GDPR. Right now. Today. You need a policy that explicitly bans pasting sensitive info into LLMs unless you have a corporate agreement that keeps that data out of the training set.
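A policy works better with a technical backstop. As a rough sketch, assuming you route prompts through your own code before they reach any LLM, a redaction step might look like this. The patterns and the `redact` helper are illustrative assumptions, and regexes like these only catch obvious emails and phone numbers, not names, addresses, or free-text details.

```python
import re

# Deliberately simple patterns; they will miss many kinds of personal data.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask email addresses and phone-like numbers before text leaves your systems."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


prompt = "Summarise the complaint from jane.doe@example.com, phone +49 30 1234567."
safe_prompt = redact(prompt)
print(safe_prompt)
```

Treat this as a safety net, not a substitute for the policy or for a proper agreement with your LLM vendor.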

2. Check your "Reject All" button

Regulators are obsessed with "dark patterns." If your "Accept All" button is big and green and your "Reject All" button is hidden in a sub-menu or is a faint gray link, you are a target. Make them identical. It’s annoying for your marketing team, but it’s cheaper than a €10 million fine.

3. Update your Privacy Notice (Seriously)

The "Digital Omnibus" proposals mean your old 2018 privacy policy is probably out of date. You need to explicitly state if you are using data for AI training and what your "legitimate interest" justification is. If you rely on legitimate interest and the notice doesn’t spell that interest out, you are already falling short of the GDPR’s transparency rules (Article 13).

4. Data Minimization is the New Encryption

The best way to protect data is to not have it. Honestly, stop collecting "just in case" data. If a breach happens in 2026, the first thing a regulator will ask is: "Why did you even have this user’s birth certificate from five years ago?" If you don’t have a good answer, expect it to count against you when the fine is calculated.
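One way to put data minimization into practice is a scheduled retention check. The sketch below assumes you already tag each record with a category and a collection date. The categories, the limits in `RETENTION_DAYS`, and the sample records are made up for illustration, since real retention periods depend on your own legal analysis.

```python
from datetime import date, timedelta

# Hypothetical retention limits per data category, in days.
RETENTION_DAYS = {"identity_document": 365, "support_ticket": 730}


def overdue(records, today):
    """Return records held past their limit, or with no documented limit at all."""
    flagged = []
    for rec in records:
        limit = RETENTION_DAYS.get(rec["category"])
        if limit is None:
            # No retention period on file: that is a finding in itself.
            flagged.append(rec)
        elif today - rec["collected_on"] > timedelta(days=limit):
            flagged.append(rec)
    return flagged


records = [
    {"id": 1, "category": "identity_document", "collected_on": date(2021, 3, 1)},
    {"id": 2, "category": "support_ticket", "collected_on": date(2025, 11, 15)},
    {"id": 3, "category": "marketing_survey", "collected_on": date(2025, 6, 1)},
]

to_review = overdue(records, today=date(2026, 1, 20))
print([r["id"] for r in to_review])
```

Here the old identity document and the uncategorized survey are flagged for review, while the recent support ticket passes.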

GDPR compliance today isn’t about checking boxes anymore. It’s about risk management in a world where data is moving faster than the laws can keep up. The "simplification" the EU is promising might actually make things more complex because now you have to navigate the overlap between the GDPR, the AI Act, and the Data Act.

Get your records of processing activities (RoPA) in order. It’s the first thing a DPA asks for during an audit. If you can’t produce a clean, updated RoPA within 48 hours, they know you’re winging it. And regulators hate it when people wing it.
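If your RoPA lives in a spreadsheet nobody opens, a small structured check can tell you how far from "clean and updated" you are. The sketch below models one entry with fields loosely based on what Article 30(1) of the GDPR asks controllers to record. The class name, field names, and the one-year staleness threshold are assumptions for illustration, not a regulatory template.

```python
from dataclasses import dataclass, fields
from datetime import date


@dataclass
class RopaEntry:
    activity: str
    purposes: list
    data_subject_categories: list
    personal_data_categories: list
    recipient_categories: list
    third_country_transfers: list  # may legitimately be empty
    retention_period: str
    security_measures: str
    last_reviewed: date


# Fields allowed to be empty without counting as a gap.
OPTIONAL_EMPTY = {"third_country_transfers"}


def problems(entry, today, max_age_days=365):
    """List empty required fields and flag entries not reviewed recently."""
    issues = []
    for f in fields(entry):
        if f.name not in OPTIONAL_EMPTY and not getattr(entry, f.name):
            issues.append(f"missing: {f.name}")
    if (today - entry.last_reviewed).days > max_age_days:
        issues.append("stale: last_reviewed")
    return issues


entry = RopaEntry(
    activity="Newsletter",
    purposes=["marketing"],
    data_subject_categories=["subscribers"],
    personal_data_categories=["email address"],
    recipient_categories=["email service provider"],
    third_country_transfers=[],
    retention_period="",
    security_measures="encryption at rest",
    last_reviewed=date(2024, 5, 1),
)

issues = problems(entry, today=date(2026, 1, 20))
print(issues)
```

Running a check like this across every entry gives you a to-do list before a regulator hands you one.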

Actionable Next Steps:

- Conduct a "Dark Pattern" Review: Have someone outside your team try to opt out of tracking on your site. If it takes more than two clicks, fix it.

- Map your AI Data Flows: Identify every point where personal data enters an automated system or LLM.

- Verify Sovereign Cloud Options: If you handle sensitive personal data about people in the EU, look into the new AWS or Microsoft sovereign regions to mitigate "Schrems II" style transfer risks.