You've probably seen the headlines. They're everywhere. People are panicking because ChatGPT apparently drinks a bottle of water for every few prompts and Google's carbon footprint is exploding like a supernova. It feels like every time we generate an AI cat playing the piano, a power plant somewhere in Virginia groans under the pressure. The narrative is simple: data center energy use is an out-of-control monster that’s going to break our power grids and roast the planet.

But honestly? It’s more complicated than that.

The reality isn't a straight line to catastrophe. It's a weird, messy intersection of high-stakes engineering, massive corporate ego, and some genuinely clever math. We aren't just building bigger sheds for servers. We're rethinking how electricity moves across the earth.

The AI tax on our power lines

Let's get the scary stuff out of the way first. Training a large language model like GPT-4 isn't like running a laptop. It's a massive, industrial-scale event. When NVIDIA’s Jensen Huang talks about "AI factories," he isn't being poetic. These places are literally factories where the raw material is electricity and the finished product is intelligence.

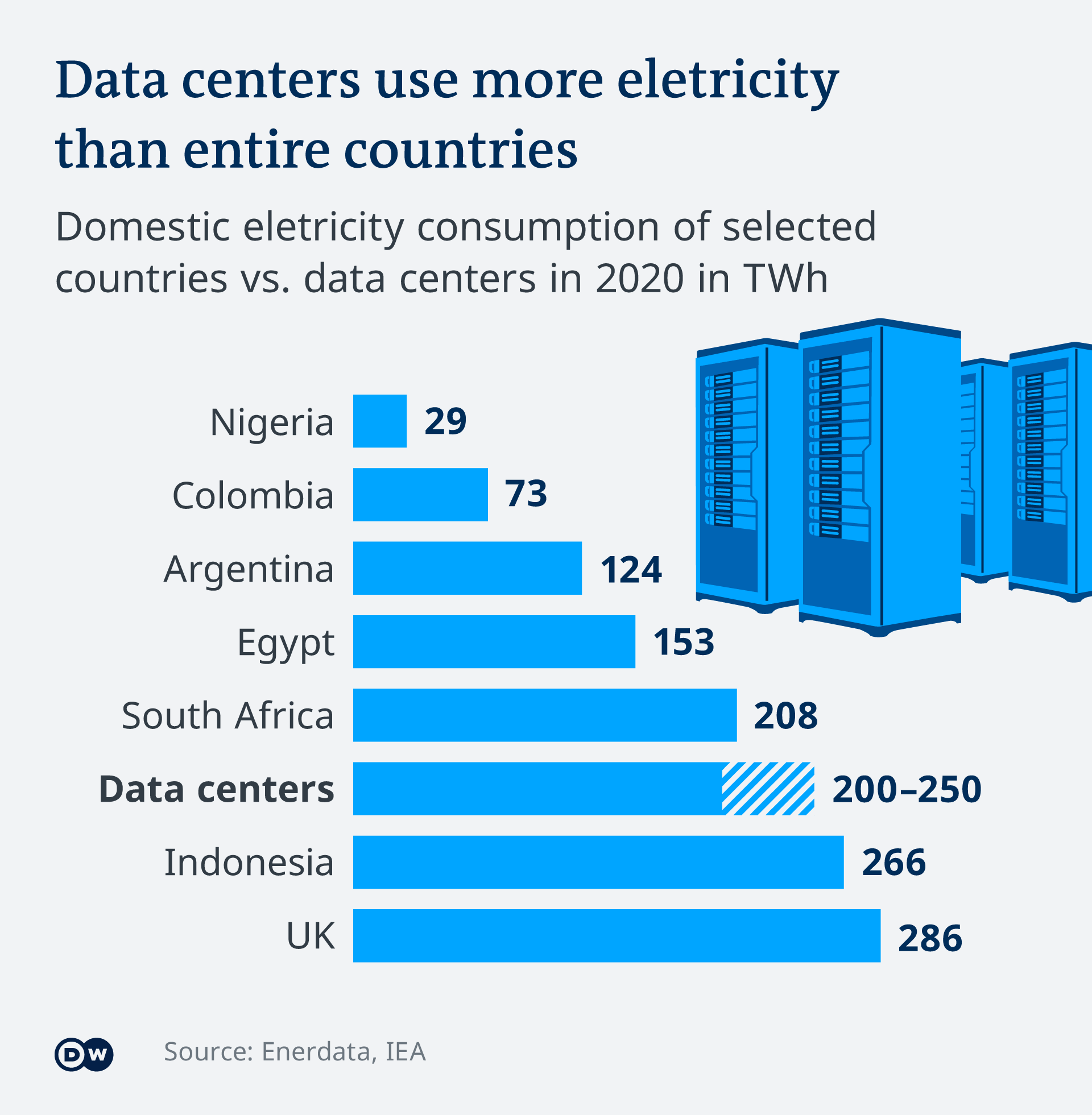

According to the International Energy Agency (IEA), data centers accounted for about 1% to 1.5% of global electricity consumption in 2022. That doesn't sound like much until you realize that with the AI boom, that number is projected to double, or more, by 2026. Ireland is the canary in the coal mine here. Most of its data centers cluster around Dublin, and together they already consume about 20% of the country's total electricity. Think about that for a second. One out of every five sparks of power in the entire nation is going to a server rack.

Why? Because of the H100s.

These NVIDIA chips are the gold standard for AI. A single H100 can have a peak power demand of 700 watts. That is more than some high-end refrigerators. Now, imagine a cluster of 30,000 of these things running at full tilt, 24/7, for months. That's about 21 megawatts for the GPUs alone, before you count CPUs, networking, or cooling. It creates a massive heat problem. You can't just stick a fan in the window. You need complex liquid cooling systems that pump coolant through cold plates mounted directly on the chips.
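To make that cluster math concrete, here is a rough back-of-the-envelope sketch. The GPU count and wattage come from the paragraph above; the 90-day run length is purely an assumption for illustration, and the result covers the GPUs only, not the rest of the facility.

```python
# Back-of-the-envelope power and energy for a GPU cluster.
# GPU count and per-GPU wattage come from the text; the 90-day run
# length is an assumption for illustration only.

GPU_PEAK_WATTS = 700   # peak draw of one H100, per the text
GPU_COUNT = 30_000     # cluster size used in the text
RUN_DAYS = 90          # assumed training run length (hypothetical)


def cluster_peak_megawatts(gpu_count: int, watts_per_gpu: float) -> float:
    """Peak electrical draw of the GPUs alone, in megawatts."""
    return gpu_count * watts_per_gpu / 1_000_000


def run_energy_mwh(peak_mw: float, days: float) -> float:
    """Energy in megawatt-hours if the cluster held peak draw for `days`."""
    return peak_mw * 24 * days


peak_mw = cluster_peak_megawatts(GPU_COUNT, GPU_PEAK_WATTS)
energy_mwh = run_energy_mwh(peak_mw, RUN_DAYS)

print(f"GPU peak draw: {peak_mw:.1f} MW")
print(f"Energy over {RUN_DAYS} days at peak: {energy_mwh:,.0f} MWh")
```

Real clusters rarely sit at peak draw the whole time, so treat this as an upper bound for the GPUs rather than a measurement.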

This is where the term PUE comes in. Power Usage Effectiveness. It’s the ratio of all the energy a facility pulls in to the energy that actually reaches the computing equipment, so it tells you how much overhead goes to "cooling and lighting" on top of the actual "thinking." If your PUE is 2.0, every watt of computing costs you another watt of overhead. Google and Microsoft have gotten this down to around 1.1 or 1.2, which is actually incredible engineering. But even with a perfect PUE of 1.0, the sheer volume of data center energy use is still skyrocketing because we simply want more AI, more often.
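If the ratio feels abstract, a minimal sketch helps. PUE is total facility energy divided by IT equipment energy; the function names and sample numbers below are made up for illustration and do not come from any operator's report.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that never reaches the IT equipment."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - 1.0 / pue_value


# Hypothetical facility: 2,000 kWh drawn in total, 1,000 kWh reaching servers.
wasteful = pue(2_000, 1_000)
# Hypothetical efficient facility: 1,100 kWh in total, 1,000 kWh to servers.
efficient = pue(1_100, 1_000)

print(f"PUE {wasteful:.2f}: {overhead_share(wasteful):.0%} of energy is overhead")
print(f"PUE {efficient:.2f}: {overhead_share(efficient):.0%} of energy is overhead")
```

Notice that PUE says nothing about how much computing you choose to do. A facility can have a superb ratio and still draw an enormous amount of power.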

Why the grid is actually the bottleneck

The problem isn't usually that we don't have enough power plants. The problem is the "last mile."

Our electrical grids were built for a world where houses and factories were spread out. They weren't designed for a single building in Northern Virginia to suddenly demand 500 megawatts of power. To put that in perspective, 500 MW can power roughly 400,000 homes. Utilities are freaking out. In some parts of the US, data center developers are being told they might have to wait five to ten years just to get a connection to the grid.
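Where does a figure like 400,000 homes come from? Here is a rough sketch of the conversion. The household figure of 10,500 kWh per year is an assumed round number in the neighborhood of published US averages, not something from the text, so the result is a ballpark.

```python
HOURS_PER_YEAR = 8_760
AVG_HOME_KWH_PER_YEAR = 10_500  # assumed round figure for a US household


def homes_equivalent(load_mw: float,
                     home_kwh_per_year: float = AVG_HOME_KWH_PER_YEAR) -> float:
    """Number of average homes whose annual use equals a constant load."""
    annual_kwh = load_mw * 1_000 * HOURS_PER_YEAR  # MW -> kW, then kWh per year
    return annual_kwh / home_kwh_per_year


homes = homes_equivalent(500)
print(f"500 MW running all year is roughly {homes:,.0f} average homes")
```

Change the household assumption and the answer moves with it, which is why these comparisons are best read as orders of magnitude.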

Small Modular Reactors: The nuclear hope?

Microsoft recently made waves by signing a deal to resurrect Unit 1 at Three Mile Island (yes, that Three Mile Island, though not the reactor that partially melted down in 1979). They want dedicated nuclear power. They aren't alone. Sam Altman has invested heavily in Helion Energy, a fusion startup.

The goal is clear: decoupling from the public grid.

If tech giants can build their own "behind-the-meter" power plants—especially Small Modular Reactors (SMRs)—they don't have to wait for the local utility to catch up. But SMRs are still mostly theoretical or in early pilot stages. We’re basically betting the future of the internet on technology that isn't even commercially available yet. It’s a massive gamble.

The "Efficiency Paradox" that nobody mentions

There’s this thing called Jevons Paradox. It basically says that when you make a resource more efficient, people don't use less of it. They use more.

We saw this with cars. Engines got more efficient, so we just built bigger SUVs and drove further. We’re seeing it now with data center energy use. Cloud providers have made their servers unbelievably efficient. Virtualization allows one physical server to do the work of fifty. But because it’s now so cheap and easy to store data and run code, we’ve increased our total data output by a factor of a thousand.
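The arithmetic behind the paradox is simple enough to sketch. This borrows the loose "fifty" and "thousand" figures from the paragraph above purely as illustrative inputs; they are not measured values, and the function name is made up for this example.

```python
def net_energy_multiplier(efficiency_gain: float, demand_growth: float) -> float:
    """How total energy use changes when work per unit of energy improves by
    `efficiency_gain` while the amount of work demanded grows by `demand_growth`."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return demand_growth / efficiency_gain


# Illustrative only: 50x more work per server, 1,000x more work requested.
multiplier = net_energy_multiplier(efficiency_gain=50, demand_growth=1_000)
print(f"Total energy use changes by a factor of {multiplier:.0f}")
```

Any time demand grows faster than efficiency, the multiplier lands above 1 and total consumption rises despite the savings per task.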

We keep finding new ways to burn the "saved" energy. 4K streaming. Crypto. Now, LLMs.

- 10 years ago: We worried about the power used by idle servers.

- 5 years ago: We worried about the cooling for Bitcoin mining rigs.

- Today: We worry about the "inference" costs of every single search query.

Google’s own 2024 Environmental Report admitted their greenhouse gas emissions have climbed 48% since 2019. They’re trying to be "net zero," but the AI arms race is moving faster than their solar farm construction. It’s a treadmill they can’t get off.

The geographic shift: Following the cold

Where do you put these things? Traditionally, it was about being near people. Low latency.

But as data center energy use becomes the primary cost, the map is changing. We’re seeing massive builds in places like Norway, Iceland, and even the middle of the desert where solar is dirt cheap. If you can use "free" cold air from the Arctic to cool your servers, you save millions.

But there’s a catch. Latency matters for gaming and stock trading. It doesn't matter as much for training an AI model. So, we're seeing a split. "Edge" data centers stay near cities for fast response times. "Wholesale" or "Training" hubs go where the power is cheap and the air is cold.

What most people get wrong about "Green" data

A lot of companies claim they run on 100% renewable energy. It’s mostly a lie. Well, sort of.

They use Renewable Energy Certificates (RECs). They pay for a wind farm in Iowa to exist, and then they claim the "greenness" of that wind for their data center in Virginia. But the physical electrons hitting the servers in Virginia are often coming from coal or natural gas.

Lately, there’s a push for "24/7 Carbon-Free Energy." This is the real deal. It means every hour of the data center's consumption is matched with carbon-free power generated on that specific grid at that specific time, instead of squaring the books once a year. If the wind stops blowing, they scale back operations or switch to massive battery arrays. This is much harder to do, and honestly, most of the industry is nowhere near achieving it.
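The gap between the two accounting styles shows up clearly in a toy example. This is a simplified sketch with made-up hourly numbers, not how any particular company computes its score: annual-style matching compares totals, while hourly matching only counts clean energy in the hour it was produced.

```python
from typing import Sequence


def annual_match(consumption: Sequence[float], clean_supply: Sequence[float]) -> float:
    """REC-style accounting: compare totals over the whole period, capped at 100%."""
    return min(1.0, sum(clean_supply) / sum(consumption))


def hourly_cfe_score(consumption: Sequence[float], clean_supply: Sequence[float]) -> float:
    """24/7-style accounting: clean energy counts only in the hour it is generated."""
    if len(consumption) != len(clean_supply):
        raise ValueError("series must cover the same hours")
    matched = sum(min(used, clean) for used, clean in zip(consumption, clean_supply))
    return matched / sum(consumption)


# Hypothetical four-hour window: flat 10 MWh load, wind that dies after hour two.
load_mwh = [10, 10, 10, 10]
wind_mwh = [25, 15, 0, 0]

print(f"Annual-style match: {annual_match(load_mwh, wind_mwh):.0%}")
print(f"Hourly CFE score:   {hourly_cfe_score(load_mwh, wind_mwh):.0%}")
```

Same load, same wind farm, and the "100% renewable" claim drops to half once surplus from windy hours can no longer cover the calm ones.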

The water problem (The silent twin of energy)

You can't talk about energy without talking about water. Most data centers use evaporative cooling. Basically, they sweat.

A medium-sized data center can use hundreds of thousands of gallons of water a day. In drought-stricken areas like Arizona, this is a PR nightmare. Microsoft and Google are now experimenting with "water positive" goals, meaning they intend to return more water to the environment than they consume by 2030. They do this by investing in local watershed restoration. It’s a nice sentiment, but if you’re a local farmer and the data center next door is sucking the aquifer dry, a "restoration project" three counties over doesn't help you much.

Real-world impact: A quick reality check

It’s easy to get lost in the "billions of watts" talk. Let’s look at a concrete example.

Meta (Facebook) has a massive data center in Altoona, Iowa. It’s over 5 million square feet. That's nearly 90 football fields. When they first arrived, they basically transformed the local economy. But they also fundamentally changed how the local utility had to plan for the next 20 years.

This isn't just about "the internet." It’s about physical infrastructure. It’s about who gets priority when the grid is stressed during a heatwave. Does the hospital stay on, or does the AI training cluster stay on? We haven't had to make those choices yet, but we're getting uncomfortably close in some regions.

Actionable insights for the future

So, what do we actually do with this information? Whether you're a business leader or just someone curious about why your cloud storage costs might go up, here’s the ground truth.

Audit your "digital waste"

We store petabytes of "dark data"—backups of backups that no one ever looks at. Every gigabyte stored is a tiny, persistent draw on a server somewhere. Cleaning out old cloud storage isn't just about organization; it’s a tiny dent in the energy curve.

Demand transparency in PUE and WUE

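If you're a business choosing a cloud provider (AWS, Azure, GCP), look past the "carbon neutral" marketing. Ask for their localized PUE and their WUE (Water Usage Effectiveness, the water consumed per kilowatt-hour of computing) for the specific region where your data sits. Regions anchored in Northern Virginia, like "East US," sit on a notoriously strained grid; moving workloads to "Central US" or "Canada" can actually be a "greener" choice purely because of the grid mix and climate, though it's worth checking the provider's published numbers before you migrate.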

Support "Grid-Interactive" technology

The next big thing is data centers that act as "giant batteries" for the grid. When there’s too much solar power during the day, the data center ramps up. When the grid is stressed in the evening, the data center throttles back or runs on its own stored energy. This "demand response" is the only way the grid survives the next decade.
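Here is a minimal sketch of what that throttling logic could look like. Everything in it is an assumption for illustration: the split between a fixed base load and a deferrable load, the 0-to-1 stress signal, and the linear response are stand-ins, not a real utility protocol.

```python
def target_load_mw(base_mw: float, flexible_mw: float, grid_stress: float) -> float:
    """Facility draw for a given grid stress signal.

    base_mw:      load that must always run (hypothetical, e.g. serving traffic)
    flexible_mw:  deferrable load (hypothetical, e.g. batch jobs or training)
    grid_stress:  0.0 means surplus power, 1.0 means the grid is fully stressed
    """
    stress = min(max(grid_stress, 0.0), 1.0)  # clamp out-of-range signals
    return base_mw + flexible_mw * (1.0 - stress)


# Hypothetical facility: 60 MW that cannot wait, 40 MW that can.
for label, stress in [("midday solar surplus", 0.0),
                      ("normal afternoon", 0.5),
                      ("evening peak", 1.0)]:
    print(f"{label}: {target_load_mw(60, 40, stress):.0f} MW")
```

The interesting design question is how much of a facility's work is genuinely deferrable. Training runs and batch jobs can often wait a few hours; a live search query cannot.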

Focus on "Small AI"

Not every task needs a trillion-parameter model. The trend toward SLMs (Small Language Models) that can run on a local device or a tiny server is a massive win for energy efficiency. If you're building a tool, don't use a sledgehammer to crack a nut.

The bottom line is that data center energy use is the new frontier of the climate fight. It’s not about stopping the tech; it’s about making sure the tech doesn't outpace the infrastructure that keeps the lights on for everyone else. We’re moving from an era of "move fast and break things" to "move fast and build your own power plant." It’s going to be a wild ride.