Honestly, using AI to make art used to feel like trying to explain a dream to someone who wasn't there. You’d type "a cat in a space suit," and you’d get something that looked more like a blurry potato in a silver bag. But things changed fast. The DALL-E 3 image generator basically killed the need for "prompt engineering" as we knew it.

It's built differently. Instead of you having to figure out the "secret code" of commas and lighting keywords, it just... listens. It's integrated directly into ChatGPT, which means you’re actually talking to a middleman that understands human nuances better than a raw algorithm ever could.

The Big Shift in How DALL-E 3 Thinks

Most people don't realize that DALL-E 3 isn't just a bigger version of DALL-E 2. It's a complete rethink of how an AI interprets text. Earlier models were notorious for "word salad" issues. If you asked for "a man holding a blue apple while standing next to a red car," the AI might give you a blue car and a red apple. Or a blue man.

DALL-E 3 uses a massive leap in caption fidelity.

OpenAI actually trained a separate "image captioner" to describe millions of images in extreme, painstaking detail. Then, they trained DALL-E 3 on those high-quality descriptions. Because the training data was so much more descriptive, the model finally "learned" that if a word is next to another word, they probably belong together.

Why the ChatGPT Connection Matters

You’ve probably noticed that when you ask ChatGPT for an image, it writes a whole paragraph before the picture appears. That’s not just for show.

ChatGPT acts as a prompt expander. If you give it a lazy prompt like "a cool mountain," ChatGPT turns that into: "A cinematic wide shot of a jagged, snow-capped mountain peak at sunset, with purple and orange hues reflecting off a crystal-clear alpine lake in the foreground, digital art style." It fills in the blanks. This is why DALL-E 3 feels so much smarter than Midjourney or Stable Diffusion for the average person. You don't need a degree in art history to get a decent result.

What Most People Get Wrong About the Limits

There is a huge misconception that DALL-E 3 is "uncensored" if you pay for it. Not true. OpenAI is incredibly strict about safety, sometimes to a frustrating degree.

💡 You might also like: Milwaukee Tool Power Source: Why Your Jobsite Setup Probably Needs One

- The Public Figure Ban: Try asking for a photo of a specific politician or celebrity. It won't happen. The system is hardcoded to swap those names for generic descriptions or just flat-out refuse.

- Artist Styles: You can't ask for "art in the style of [Living Artist Name]" anymore. OpenAI built this wall to respect intellectual property, though you can still ask for "Van Gogh style" because he’s been gone long enough for the lawyers not to care.

- The "C2PA" Metadata: Since early 2024, every image DALL-E 3 spits out has invisible "digital fingerprints." These are called C2PA metadata. If you upload a DALL-E image to a site that checks for it, the site will know it’s AI.

DALL-E 3 vs. The Competition: A Reality Check

Is it the "best"? Kinda depends on what you're doing.

If you want photorealism that looks like a National Geographic cover, Midjourney (specifically v6 or the newer v7) usually wins. Midjourney has a "vibe" that DALL-E 3 sometimes lacks. DALL-E 3 images can occasionally look a bit "too clean" or "plastic-y"—a look often called "AI gloss."

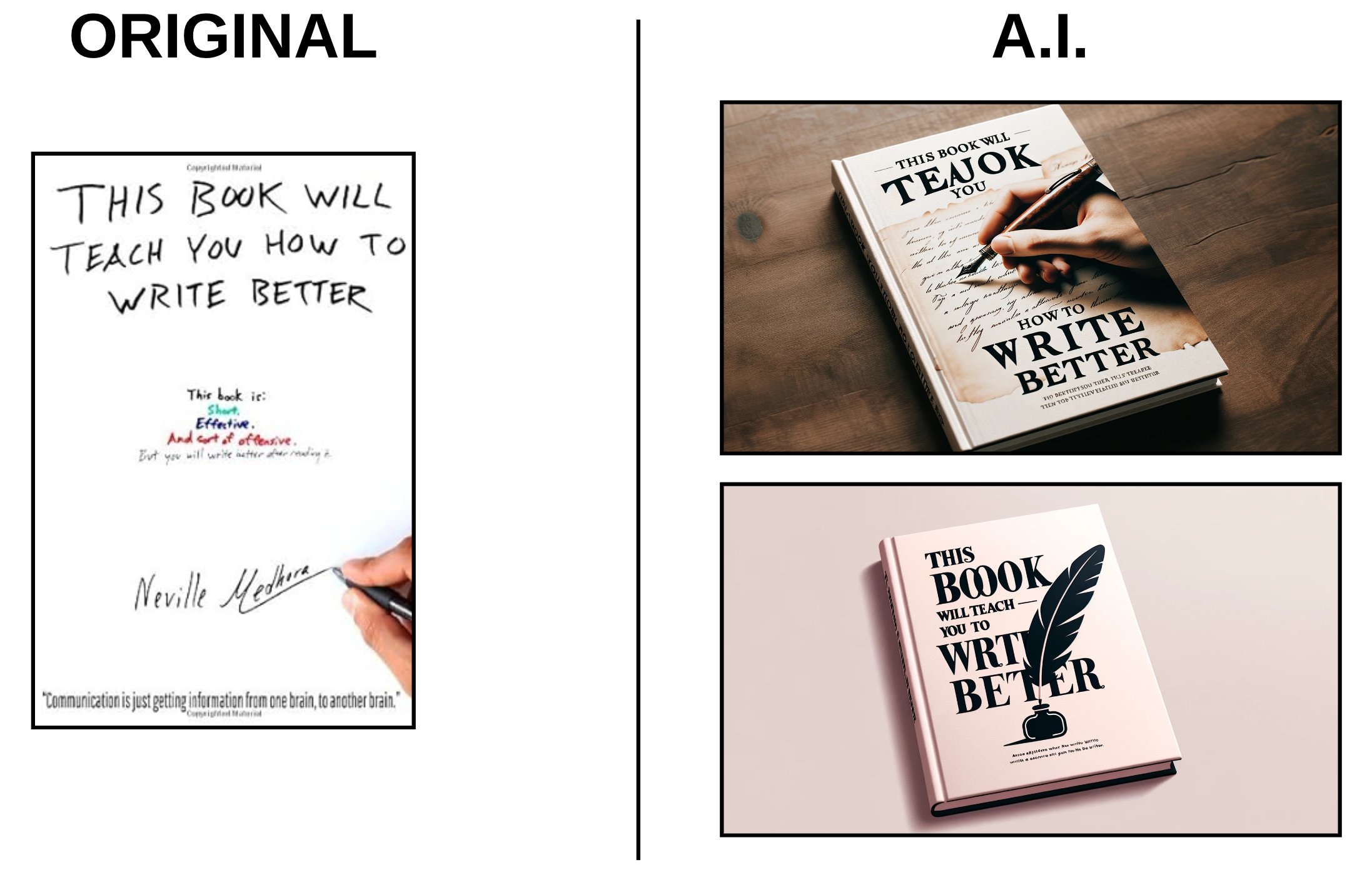

However, if you need text inside the image, DALL-E 3 is the undisputed king.

Before DALL-E 3, AI was famously bad at spelling. It would try to write "Welcome Home" and give you "WELECCM HOOMM." DALL-E 3 can actually handle sentences, signs, and labels with a high success rate. This makes it the go-to for making mockups, logos, or memes where the text actually matters.

Technical Quirks and Resolution

The model generally works in three aspect ratios:

- Square ($1024 \times 1024$)

- Wide ($1792 \times 1024$)

- Tall ($1024 \times 1792$)

It doesn't do custom sizes as easily as Stable Diffusion, and it doesn't "upscale" as well as some third-party tools. If you’re a power user, you’ve probably noticed that DALL-E 3 is a "one-and-done" generator. You get what it gives you. While you can use the "select and edit" tool inside ChatGPT to change specific parts of an image, it’s not as powerful as "Inpainting" in specialized software.

Real-World Use Cases for 2026

Small businesses are probably the biggest winners here. Think about it.

You need a hero image for a blog post about "Future of Gardening." Instead of spending 45 minutes on a stock photo site looking for a picture that isn't cringey, you just describe exactly what you want. It’s a massive time-saver.

Education is another one. Teachers are using it to create visual aids for history or science that literally didn't exist before. Want to show what a Roman market actually looked like based on a specific historical description? DALL-E 3 can do it in ten seconds.

Dealing With the "Uncanny Valley"

Even with all the progress, DALL-E 3 still trips up.

Hands are better, but they still occasionally have six fingers. Anatomy can get weird if two people are hugging—the AI sometimes fuses them into a strange human pretzel. And "physics" isn't a concept the AI understands; it just knows what things look like, not how they work. So, you might see a bicycle with a chain that goes to nowhere.

How to Actually Get What You Want

If you want to master the DALL-E 3 image generator, stop trying to use "hacks."

✨ Don't miss: Fast CO2 Car Designs: What People Get Wrong About Winning at the Drag Strip

- Be Specific about Lighting: Use words like "volumetric fog," "golden hour," or "harsh neon."

- Describe the Camera: Mention "low angle shot" or "macro lens" if you want a specific depth of field.

- Talk to ChatGPT: If the first image is bad, don't just start over. Say, "Keep the background, but make the person look older and change the shirt to green."

Actionable Next Steps

To get the most out of DALL-E 3 today, start by testing its ability to follow complex logic.

First, try a prompt with three distinct objects in three distinct colors—this tests the "spatial awareness" that separates it from older models. Second, use the "Natural" vs. "Vivid" setting if you are using the API or a specialized interface; "Natural" often removes that shiny AI look that makes images feel fake.

Finally, always check the metadata if you are using these for professional work. Tools like Content Credentials (verify.contentauthenticity.org) can help you see if your images are properly tagged, ensuring you stay transparent about using AI in your creative workflow.