Time is weirdly slippery once you start talking to machines. You might think five minutes is just the time it takes to brew a quick cup of coffee or wait for a slow elevator, but in the world of computing and digital logic, it's a massive, sprawling eternity. If you're looking for the quick answer, 5 min in ms is exactly 300,000 milliseconds.

That's the number. 300,000.

But why do we care? Honestly, unless you're a developer or a sysadmin trying to set a timeout for a server request, you probably don't. Yet, the moment you start digging into how our devices actually "think," you realize that milliseconds are the heartbeat of everything we do online. When a webpage hangs for a second, that’s 1,000 milliseconds of your life gone. When you’re looking at a five-minute window, you’re looking at a unit of time that can either be a tiny blip or a critical failure point depending on the context.

The Basic Math Behind 5 min in ms

Let’s break it down because the math is actually super simple once you see it. We start with the fact that one second contains 1,000 milliseconds. This is standard SI (International System of Units) stuff. Since there are 60 seconds in a single minute, one minute equals 60,000 milliseconds.

Now, just multiply that by five.

$60{,}000 \times 5 = 300{,}000$

It’s a clean number. Beautifully round. If you were writing code in JavaScript, you’d often see this expressed as a literal value in functions like setTimeout() or setInterval(). For instance, if you want a pop-up to appear after five minutes, you aren't typing "5 minutes." You’re typing 300000.
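If you'd rather let the machine do the multiplication, the arithmetic above can be sketched in a few lines of Python. The names `MS_PER_SECOND`, `SECONDS_PER_MINUTE`, and `minutes_to_ms` are made up for this example and don't come from any library.

```python
# Illustrative names for this sketch; not part of any standard library.
MS_PER_SECOND = 1_000
SECONDS_PER_MINUTE = 60


def minutes_to_ms(minutes: float) -> int:
    """Convert minutes to whole milliseconds."""
    return round(minutes * SECONDS_PER_MINUTE * MS_PER_SECOND)


print(minutes_to_ms(5))  # 300000
```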

Why the "ms" Unit Dominates Tech

Computers don't really do "minutes." They don't even really do "seconds" in the way we perceive them. Most system clocks are ticking away at much higher frequencies. Using milliseconds (ms) provides a level of precision that "0.0833 hours" just can't touch. In gaming, we talk about "ping" in milliseconds. A ping of 50ms is great; a ping of 500ms makes the game unplayable.

Imagine trying to measure a 50ms lag spike in minutes. It would be 0.000833 minutes. That’s just annoying to read.

Real-World Applications for 300,000 Milliseconds

Where do you actually see this in the wild? It’s not just for school math problems.

One of the most common places you’ll find 5 min in ms is in Session Timeouts. You’ve probably experienced this: you’re logged into your bank account, you get distracted by a text, and when you look back, you’ve been logged out for security. Developers often set these "inactivity tokens" to expire at the five-minute mark. To the server, that’s a countdown starting at 300,000 and ticking down to zero.
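In practice, a server usually doesn't run a literal countdown. One common approach is to store the time of the last activity and compare it against the current time. Here is a minimal Python sketch of that idea; `SESSION_TIMEOUT_MS`, `now_ms`, and `is_session_expired` are hypothetical names, and a real multi-server setup would likely store wall-clock timestamps rather than a per-process monotonic clock.

```python
import time

SESSION_TIMEOUT_MS = 300_000  # five minutes; assumed value for this sketch


def now_ms() -> int:
    """Current monotonic time in whole milliseconds (per-process clock)."""
    return time.monotonic_ns() // 1_000_000


def is_session_expired(last_activity_ms: int, current_ms: int,
                       timeout_ms: int = SESSION_TIMEOUT_MS) -> bool:
    """Return True once the idle time reaches the timeout."""
    return current_ms - last_activity_ms >= timeout_ms


last_seen = now_ms()
print(is_session_expired(last_seen, now_ms()))  # False right after activity
```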

Then there’s the world of Internet of Things (IoT).

Think about a smart thermostat. It doesn't need to report the temperature of your living room every single millisecond—that would murder the battery and clog your Wi-Fi. Instead, it might "sleep" and wake up every five minutes to send a packet of data. That "sleep" command is almost certainly written as a millisecond value in the firmware.
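Real firmware is more likely to be written in C than Python, but the shape of that wake-report-sleep loop is roughly the same. The sketch below is illustrative only: `run_report_cycles`, `read_temperature`, and `send_reading` are hypothetical names, and the sleep function is passed in so the loop can be tried out without actually waiting five minutes.

```python
import time
from typing import Callable

REPORT_INTERVAL_MS = 300_000  # five minutes; assumed value for this sketch


def run_report_cycles(read_temperature: Callable[[], float],
                      send_reading: Callable[[float], None],
                      cycles: int,
                      interval_ms: int = REPORT_INTERVAL_MS,
                      sleep: Callable[[float], None] = time.sleep) -> None:
    """Send one reading per cycle, then sleep until the next cycle."""
    for _ in range(cycles):
        send_reading(read_temperature())
        # time.sleep() takes seconds, so convert from milliseconds.
        sleep(interval_ms / 1_000)
```

Keeping the interval in milliseconds and converting only at the `sleep` call means there is a single place where a unit mix-up could creep in.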

Video Editing and Latency

If you’re a video editor, five minutes is a standard length for a YouTube video or a long-form commercial. In high-end editing suites like Adobe Premiere or DaVinci Resolve, the timeline is often measured in frames, but the underlying metadata can be tracked in milliseconds for synchronization.

While 300,000ms is an eternity when measured in CPU cycles, it's the blink of an eye in a production schedule.

The Human Perception of 300,000ms

Humans are terrible at judging time accurately without a clock. Research on time perception, including work by neuroscientist David Eagleman, suggests that our sense of duration stretches or compresses with factors like adrenaline and novelty.

If you are bored, five minutes feels like an hour.

If you are in a flow state, five minutes feels like ten seconds.

But for a computer? 300,000ms is always exactly 300,000ms. It is the ultimate objective observer. This discrepancy is why we rely on millisecond precision for everything from air traffic control to high-frequency trading on Wall Street. In the time it takes you to blink once (about 300ms), a high-frequency trading algorithm could have executed hundreds of trades. By the time 300,000ms has passed, millions of dollars have shifted across the globe.

Common Mistakes When Converting Units

People trip up on the zeros. It happens to the best of us.

- The 30,000 mistake: People sometimes treat a second as 100ms (it's 1,000), so they end up with 30,000ms for five minutes. Wrong. You’re off by a factor of 10.

- The "Micro" Confusion: Milliseconds (ms) and Microseconds (μs) are different. There are 1,000 microseconds in one millisecond. If you accidentally program a delay for 300,000 microseconds, your "five-minute" wait will actually last only 0.3 seconds. That’s a massive bug in the making.
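The microsecond mix-up is easy to see once both values are converted back to seconds. This is a small Python sketch with made-up helper names (`us_to_seconds`, `ms_to_seconds`), just to show the scale of the error.

```python
US_PER_MS = 1_000      # microseconds per millisecond
MS_PER_SECOND = 1_000  # milliseconds per second


def us_to_seconds(microseconds: int) -> float:
    """Convert microseconds to seconds."""
    return microseconds / (US_PER_MS * MS_PER_SECOND)


def ms_to_seconds(milliseconds: int) -> float:
    """Convert milliseconds to seconds."""
    return milliseconds / MS_PER_SECOND


print(us_to_seconds(300_000))  # 0.3   (the buggy "five minutes")
print(ms_to_seconds(300_000))  # 300.0 (the real five minutes)
```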

The Impact on Web Performance

If you’re running a website, five minutes is a lifetime. Google’s Core Web Vitals set the "good" threshold for loading the main content (Largest Contentful Paint) at 2,500ms (2.5 seconds); anything slower is flagged as needing improvement. If your server takes 300,000ms to respond, your site is effectively dead.

In fact, most web servers (like Nginx or Apache) have a default timeout much lower than five minutes. If a request takes that long, the server assumes something went horribly wrong and kills the connection.

Converting Other Time Units to ms

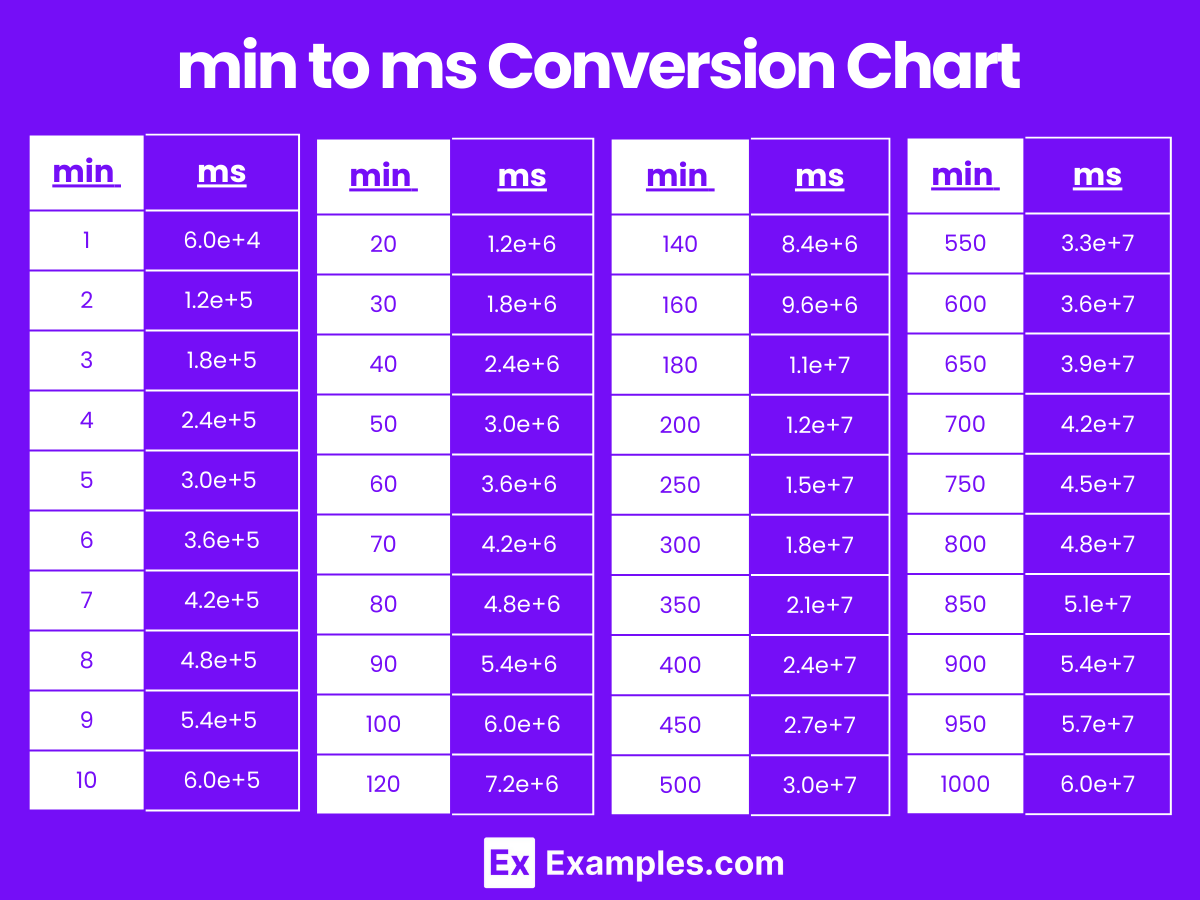

To get a better feel for the scale of 5 min in ms, it helps to look at the neighbors.

- 1 Minute: 60,000 ms

- 2 Minutes: 120,000 ms

- 10 Minutes: 600,000 ms

- 1 Hour: 3,600,000 ms

As you can see, the numbers get big, fast. This is why many programming languages have moved toward using "Duration" objects instead of raw integers. It’s way too easy to lose a zero when you’re typing out 3,600,000.

Practical Steps for Developers and Tech Enthusiasts

If you're working on a project where you need to implement a five-minute delay or timeout, don't just hardcode "300000" and call it a day. That's a recipe for "Magic Number" syndrome, where a year from now, you'll look at the code and wonder what that number means.

1. Use Constants

Define a variable like FIVE_MINUTES_IN_MS = 300000. It makes the code readable for humans while keeping it functional for the machine.
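Here's one way that might look in Python, building the constant up from its factors so the zeros never have to be typed by hand. The callback `show_reminder` is hypothetical. Note that `threading.Timer` takes its delay in seconds, so the conversion happens right at the call site.

```python
import threading

MS_PER_SECOND = 1_000
MS_PER_MINUTE = 60 * MS_PER_SECOND
FIVE_MINUTES_IN_MS = 5 * MS_PER_MINUTE  # 300_000


def show_reminder() -> None:
    # Hypothetical callback for this sketch.
    print("Five minutes are up.")


# threading.Timer expects seconds, so convert from milliseconds here.
reminder = threading.Timer(FIVE_MINUTES_IN_MS / MS_PER_SECOND, show_reminder)
# reminder.start()  # uncomment to actually wait five minutes
```

Python also allows underscores in numeric literals (`300_000`), which helps when a value does have to be written out.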

2. Check Your Library

Most modern languages have built-in time helpers. In Python, you might use timedelta(minutes=5). In JavaScript, you might use a library like ms that lets you write ms('5m') and converts it automatically.
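As a quick illustration of the Python route, a `timedelta` can be turned into a millisecond count by dividing it by a one-millisecond `timedelta`. This is a sketch of one approach, not the only way to do it.

```python
from datetime import timedelta

five_minutes = timedelta(minutes=5)

# Floor-dividing one timedelta by another yields an integer count.
five_minutes_ms = five_minutes // timedelta(milliseconds=1)

print(five_minutes_ms)               # 300000
print(five_minutes.total_seconds())  # 300.0
```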

3. Account for System Drift

On some hardware, especially low-cost microcontrollers, the internal clock isn't perfectly accurate. Over a 300,000ms stretch, you might find the clock drifts by a few milliseconds. If precision is life-or-death, you need an external Real-Time Clock (RTC).
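To put a rough number on that, clock accuracy is often quoted in parts per million (ppm). The sketch below estimates the worst-case error over an interval; the 20 ppm figure is an assumption for illustration only, so check the datasheet for your actual part.

```python
def worst_case_drift_ms(interval_ms: float, tolerance_ppm: float) -> float:
    """Upper bound on clock error over an interval, given a ppm tolerance."""
    return interval_ms * tolerance_ppm / 1_000_000


# 20 ppm is an assumed figure for illustration; real parts vary.
print(worst_case_drift_ms(300_000, 20))  # 6.0
```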

4. Consider the User Experience

If you are forcing a user to wait for 300,000ms, give them a progress bar. Nothing is more frustrating than a screen that looks frozen because a background process is running on a five-minute loop.

Converting 5 min in ms is a fundamental skill in the digital age. It’s the bridge between how we live our lives and how our technology executes our commands. Whether you're timing a soft-boiled egg or setting a database backup interval, knowing that 300,000ms is your target keeps your projects precise and your logic sound.