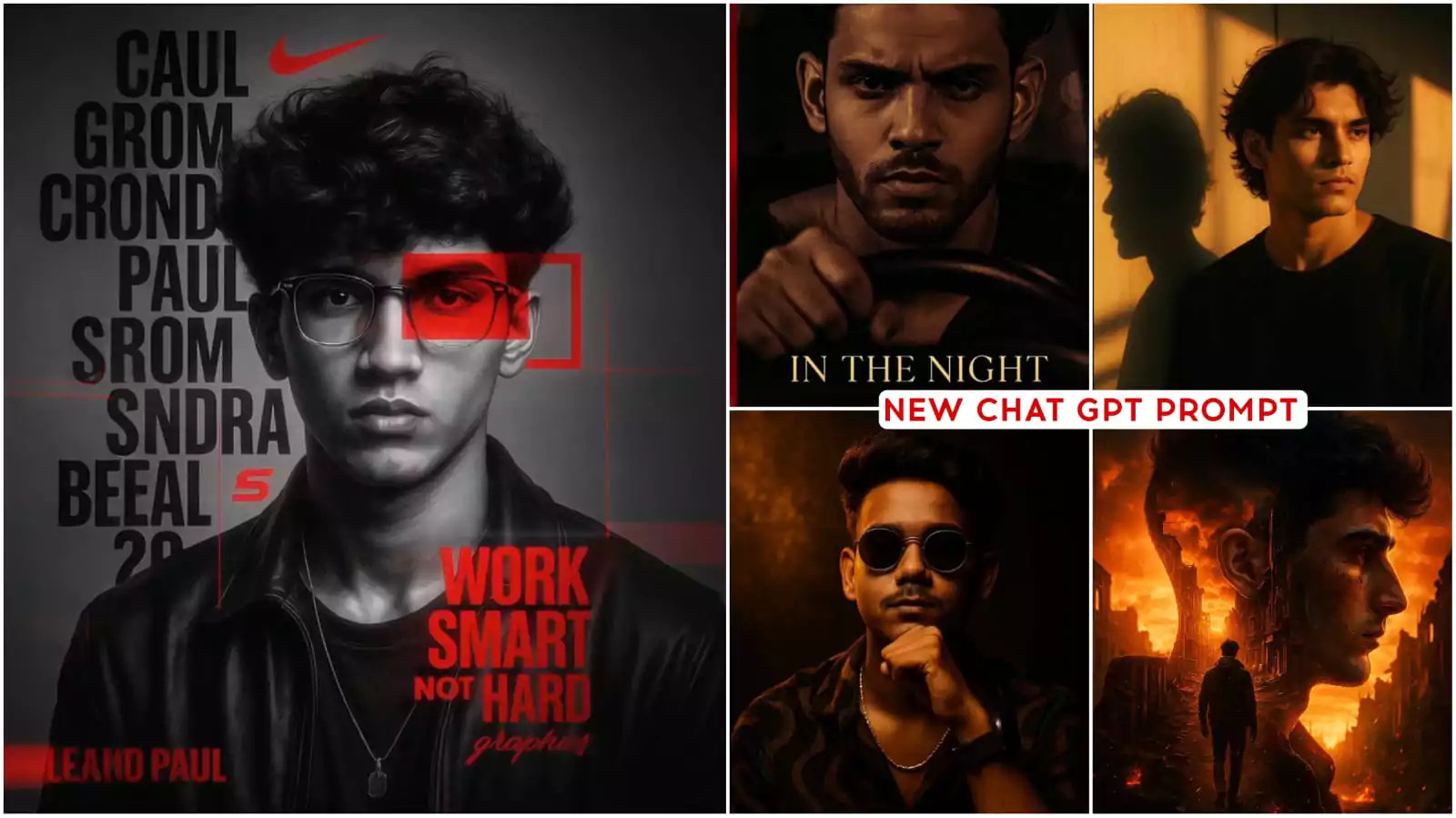

You’ve probably seen them. Those overly glossy, strangely symmetrical, "too perfect" images that scream AI. If you've spent any time messing around with DALL-E 3 inside the chat interface, you know the frustration of typing something cool and getting back a generic superhero or a weirdly lit landscape that looks like a stock photo from 2012. It's annoying.

The truth? Most ChatGPT image prompts fail because people talk to the AI like it’s a search engine. It isn’t. It’s a literalist with a vivid imagination and zero common sense. If you ask for a "dog in a park," it gives you the most average, boring dog in the most average, boring park. You're getting the "average" of its entire training set.

To get something that actually looks like art—or a real photo—you have to break the machine's habit of being basic.

The Weird Logic of DALL-E 3 Inside ChatGPT

DALL-E 3 is the engine under the hood. When you type a prompt into ChatGPT, the AI actually rewrites your request into a much longer, more descriptive paragraph before sending it to the image generator. This is a double-edged sword. Sometimes ChatGPT makes your prompt better; often, it adds "fluff" that ruins the specific vibe you wanted.
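
If you work through the API instead of the chat window, you can read the rewritten prompt yourself. Here's a minimal sketch, assuming the official `openai` Python package (v1 or later) and assuming the API's rewrite resembles what happens inside ChatGPT (it may not be identical). The helper name `compare_prompts` is made up for illustration.

```python
def compare_prompts(client, prompt, size="1024x1024"):
    """Generate one DALL-E 3 image and return (original, rewritten) prompts.

    `client` is assumed to be an `openai.OpenAI()` instance.
    """
    result = client.images.generate(
        model="dall-e-3",
        prompt=prompt,
        size=size,
        n=1,  # DALL-E 3 produces one image per request
    )
    image = result.data[0]
    # `revised_prompt` is the expanded text the image model actually received
    return prompt, image.revised_prompt
```

Pass in a client created with `OpenAI()` and print both strings side by side. Seeing where the rewrite added fluff tells you which details you need to pin down yourself.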

Ever notice how every person in an AI image looks like a model? That's the safety and "aesthetic" padding OpenAI built in. If you want grit, you have to fight for it.

OpenAI researcher Aditya Ramesh and his co-authors describe DALL-E 3 as designed to follow complex instructions better than its predecessor, DALL-E 2. But that "following" comes at a cost of creative spontaneity. If you don't specify the lens, the lighting, or the texture, the AI fills in the blanks with its favorite default: high-contrast digital gloss.

Stop Using "Photorealistic" and Start Using Specs

Seriously. Stop. The word "photorealistic" is a dead giveaway for bad ChatGPT image prompts. It tells the AI to simulate a photo, which usually results in that weird, plastic sheen. Real photographers don't use that word. They talk about gear and light.

Instead of asking for a "realistic photo of a man," try describing the camera. Say "shot on 35mm film, grainy texture, Kodak Portra 400 aesthetic." Or mention a specific lens like an "85mm f/1.8" for that blurry background (bokeh) that makes portraits look professional.

Lighting is the other big one. "Cinematic lighting" is another tired AI trope. Try "harsh midday sun with deep shadows" or "the cool, blue hour glow just after sunset." Small tweaks change everything.
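
If you keep slipping back into the buzzwords, a tiny checker can catch them before you hit send. This is only a sketch: the `SWAPS` table and the `flag_buzzwords` name are hypothetical, and the suggestions are just the examples from this section, not a definitive list.

```python
import re

# Hypothetical table: tired terms mapped to the concrete specs suggested above.
SWAPS = {
    "photorealistic": "shot on 35mm film, grainy texture, Kodak Portra 400 aesthetic",
    "cinematic lighting": "harsh midday sun with deep shadows",
}

def flag_buzzwords(prompt):
    """Return (buzzword, suggestion) pairs for every tired term found in the prompt."""
    found = []
    for word, suggestion in SWAPS.items():
        # Whole-phrase, case-insensitive match
        if re.search(rf"\b{re.escape(word)}\b", prompt, flags=re.IGNORECASE):
            found.append((word, suggestion))
    return found

for word, suggestion in flag_buzzwords("A Photorealistic portrait, cinematic lighting"):
    print(f'Swap "{word}" for something like: {suggestion}')
```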

Why Texture Is Your Best Friend

Texture is what makes an image feel human. Digital art is smooth. Reality is messy.

When you’re crafting your next batch of ChatGPT image prompts, mention the imperfections. Ask for "scratched surfaces," "lint on a sweater," or "uneven skin texture with visible pores." It sounds gross, but it’s what prevents the "uncanny valley" effect where things look almost real but slightly creepy.

I once spent three hours trying to get a picture of an old library. Every result looked like a 3D render for a video game. It wasn't until I added "dust motes dancing in sunbeams" and "worn leather with cracked spines" that the AI finally gave me something that felt like I could smell the old paper.

The Secret of Negative Weighting (The Manual Way)

In Midjourney, you can use the `--no` parameter to tell the AI what not to include. ChatGPT doesn’t have a "negative prompt" button, but you can hack it. You have to be explicit.

"Create an image of a futuristic city. Do not use neon lights. Avoid purple and pink color palettes. Make it look dusty and industrial rather than clean and high-tech."

By defining the boundaries, you're narrowing the AI's search space. It forces the model out of its "default" futuristic city (which is always purple neon) and into something unique.
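
If you reuse the same exclusions a lot, you can bolt them onto any prompt with a few lines of code. A rough sketch, assuming plain-English "Do not include" sentences are enough to steer the model (they usually help, but nothing guarantees the model obeys). The function name `with_exclusions` is made up.

```python
def with_exclusions(prompt, exclude=(), prefer=None):
    """Append explicit 'do not' sentences, plus an optional preferred look, to a prompt."""
    # Normalize the base prompt so it ends with exactly one period
    parts = [prompt.strip().rstrip(".") + "."]
    for item in exclude:
        parts.append(f"Do not include {item}.")
    if prefer:
        parts.append(f"Make it look {prefer}.")
    return " ".join(parts)

print(with_exclusions(
    "Create an image of a futuristic city",
    exclude=["neon lights", "purple or pink color palettes"],
    prefer="dusty and industrial rather than clean and high-tech",
))
```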

Composition Tricks Most People Ignore

We focus so much on the "what" that we forget the "where."

- Dutch Angle: Tilts the camera for a sense of unease or action.

- Low Angle: Makes the subject look powerful and looming.

- Wide Shot: Focuses on the environment, making the subject feel small.

- Extreme Close-Up: Focuses on emotion and detail, like the iris of an eye.

If you don't specify a composition, ChatGPT usually defaults to a "centered, medium shot." It’s the most boring way to look at the world. Tell the AI to put the subject in the bottom left corner using the "rule of thirds." It immediately looks more like a deliberate piece of art and less like a random generation.
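
The shot types above are easy to keep as a lookup table so you never forget to pick one. A small sketch: the `SHOTS` wording and the `compose` helper are my own illustrative phrasing, not official DALL-E keywords.

```python
# Hypothetical phrasing for the four shot types listed above.
SHOTS = {
    "dutch": "Dutch angle with the camera tilted",
    "low": "low-angle shot looking up at the subject",
    "wide": "wide shot with the subject small in the environment",
    "closeup": "extreme close-up",
}

def compose(subject, shot, placement=None):
    """Attach a shot type and an optional rule-of-thirds placement to a subject."""
    if shot not in SHOTS:
        raise ValueError(f"unknown shot type: {shot!r}")
    text = f"{subject}, {SHOTS[shot]}"
    if placement:
        text += f", subject placed in the {placement} of the frame following the rule of thirds"
    return text

print(compose("a lighthouse keeper", "low", placement="bottom left corner"))
```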

Reference Styles Without Stealing

There is a lot of legal heat around AI and artist names. While you can mention famous painters, it's often more effective (and arguably more ethical) to describe the movement.

Instead of naming a specific living illustrator, try "Ukiyo-e woodblock print style" or "1970s dark fantasy book cover art." Use terms like "chiaroscuro" for dramatic light and shadow, or "minimalism" for clean lines. This gives the AI a stylistic framework to work within without just mimicking one person's hard work.

Practical Examples to Try Right Now

Don't just take my word for it. Try these frameworks. You'll see the difference immediately.

The "Old Photo" Look:

"A candid black and white photo from the 1940s of a busy street corner in Chicago. Heavy film grain, slightly out of focus, motion blur on the cars. The lighting is overcast and flat. Authentic vintage aesthetic."

The "Macrophotography" Look:

"A macro shot of a single drop of morning dew on a blade of grass. Inside the droplet, a tiny reflection of a nearby flower is visible. Shot with a 100mm macro lens, extremely shallow depth of field, soft morning light."

The "Stylized Illustration" Look:

"A flat vector illustration of a mountain range. Use a limited color palette of earthy ochre, deep forest green, and slate gray. Clean lines, no gradients, isometric perspective."

Dealing with the "DALL-E 3 Glitch"

Sometimes, ChatGPT just ignores you. You ask for a red car, and it gives you a blue one. Or it adds text that looks like gibberish.

When this happens, don't just repeat the same prompt. The same words tend to produce the same mistake. You need to pivot. Change one major variable. If "red car" isn't working, ask for a "crimson vintage vehicle." Different vocabulary steers the model toward different associations, which is often enough to break the cycle of errors.

Also, remember that DALL-E 3 is currently limited to three shapes: square, wide (roughly 16:9), or tall (roughly 9:16). If you don't specify, you get a square. For landscapes or cinematic scenes, always ask for "wide aspect ratio." It changes how the AI composes the entire scene, not just the borders.
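
For anyone calling DALL-E 3 through the API, those three shapes correspond to fixed pixel sizes: 1024x1024, 1792x1024, and 1024x1792. Here's a minimal sketch of a lookup; the `pick_size` and `ratio` helpers are hypothetical names, and the chat interface may handle sizing differently.

```python
# Pixel sizes the DALL-E 3 image API accepts, keyed by orientation.
DALLE3_SIZES = {
    "square": (1024, 1024),
    "wide": (1792, 1024),
    "tall": (1024, 1792),
}

def pick_size(orientation="square"):
    """Return the API `size` string for an orientation, e.g. '1792x1024'."""
    if orientation not in DALLE3_SIZES:
        raise ValueError(f"orientation must be one of {sorted(DALLE3_SIZES)}")
    width, height = DALLE3_SIZES[orientation]
    return f"{width}x{height}"

def ratio(orientation):
    """Width divided by height, rounded to two decimals."""
    width, height = DALLE3_SIZES[orientation]
    return round(width / height, 2)

print(pick_size("wide"), ratio("wide"))  # prints: 1792x1024 1.75
```

Note that the wide size works out to 1.75, a touch narrower than true 16:9 (about 1.78), so expect a slight crop if you drop the result into a 16:9 video frame.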

Actionable Steps for Better Results

Stop being polite. ChatGPT is a tool, not a person. You don't need to say "please." You need to be a director.

- Define the Medium: Is it an oil painting, a polaroid, a 3D render, or a charcoal sketch?

- Set the Lighting: Golden hour, fluorescent office lights, candlelight, or strobe lighting.

- Pick a Lens/Angle: Wide-angle for scale, telephoto for compression, bird's-eye view for a map-like feel.

- Add "Grunge": Ask for imperfections, dirt, scratches, or asymmetrical features to kill the "AI look."

- Iterate, Don't Restart: If the image is 80% there, tell ChatGPT: "Keep the character exactly the same, but change the background to a snowy forest and make the lighting darker."
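
The checklist above can be turned into a reusable template that also tells you what you forgot. This is one possible sketch, not a standard tool: the `Brief` class and its field names are invented for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Brief:
    """A director's brief for one image; empty fields are left to the model's defaults."""
    subject: str
    medium: str = ""
    lighting: str = ""
    lens: str = ""
    imperfections: list = field(default_factory=list)

    def missing(self):
        """List the checklist items that have not been specified yet."""
        gaps = [name for name in ("medium", "lighting", "lens") if not getattr(self, name)]
        if not self.imperfections:
            gaps.append("imperfections")
        return gaps

    def render(self):
        """Join the filled-in fields into a single prompt sentence."""
        head = f"{self.medium} of {self.subject}" if self.medium else self.subject
        parts = [head, self.lighting, self.lens, *self.imperfections]
        return ", ".join(p for p in parts if p) + "."

brief = Brief(
    "a fisherman mending nets",
    medium="Polaroid photo",
    lighting="golden hour",
    lens="wide-angle lens",
    imperfections=["salt-stained jacket", "scratched film"],
)
print(brief.render())
print(Brief("cool robot").missing())
```

The second call shows the point of the whole article: "cool robot" leaves all four decisions to the model, which is exactly when you get the generic result.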

Mastering ChatGPT image prompts is really just about learning to see the world like an artist or a photographer. Once you can describe why a real photo looks good (the grain, the light, the weird angle), you can make the AI replicate it. It takes a bit more effort than "cool robot," but the results are actually worth sharing.

Next time you open the app, try to describe a memory instead of an idea. Describe the smell of the air, the grit under your fingernails, and the way the light hit the floor. The AI will surprise you.